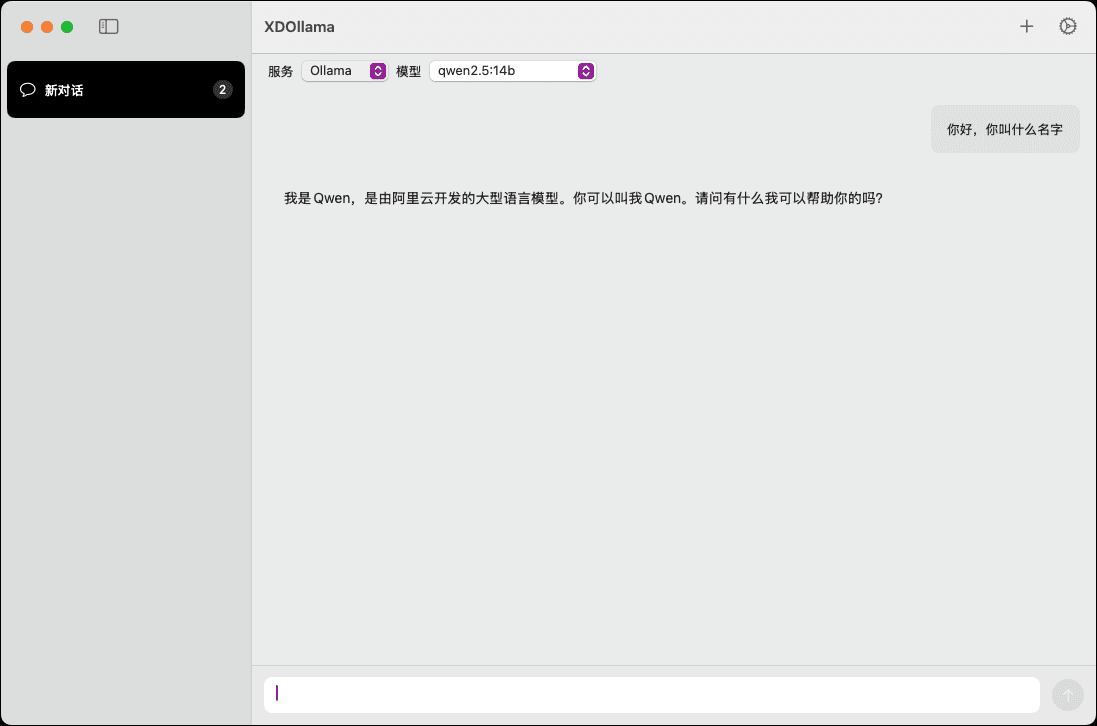

XDOllama: an AI model interface for quickly calling Ollama, Dify and Xinference on macOS.

General Introduction

XDOllama is a desktop application for macOS users that quickly invokes Ollama, Dify and Xinference. Through a simplified interface and operation flow, it lets users easily call local or online AI models, improving work efficiency and experience.

Function List

- Calling the local Ollama model

- Calling the online Ollama model

- Calling the local Xinference model

- Calling the online Xinference model

- Calling the local Dify application

- Calling the online Dify application

- Support for multiple AI frameworks

- Easy-to-use interface design

- Fast, efficient model calls

How to Use

Installation process

- Download the DMG file.

- Double-click to open the downloaded DMG file.

- Drag XDOllama.app into the Applications folder.

- Once the installation is complete, open the application and it is ready to use.

Guidelines for use

- Open the XDOllama application.

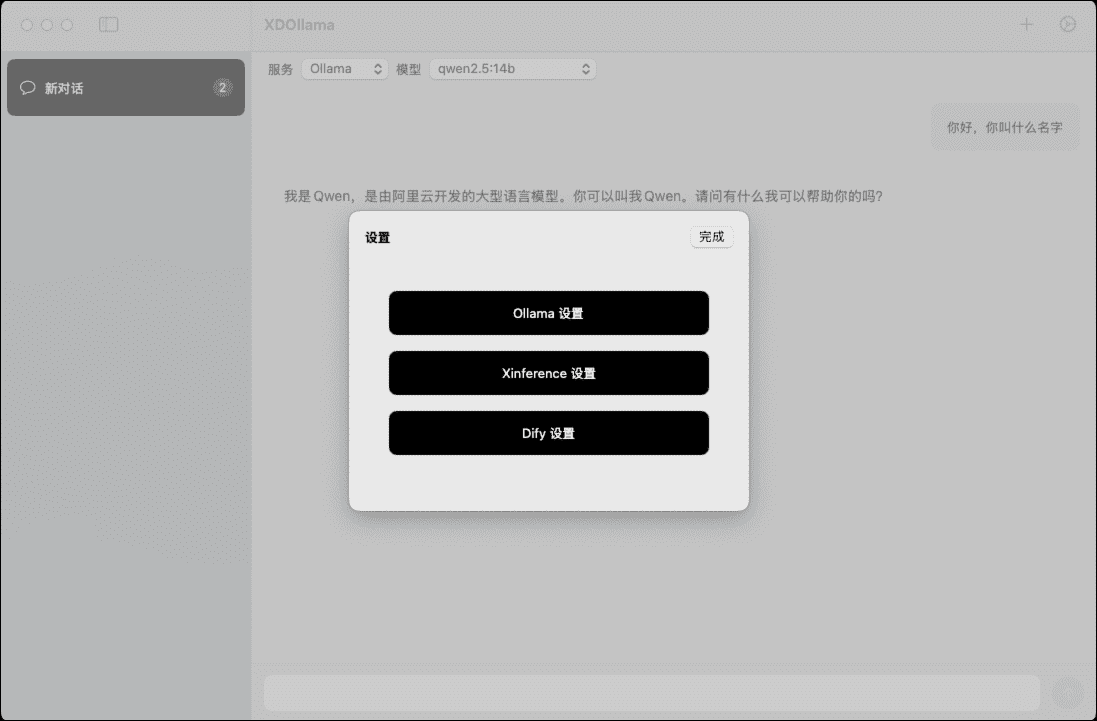

- Select the AI platform to call (Ollama, Dify or Xinference).

- Select the calling method (local or online).

- Enter the relevant parameters and settings as prompted.

- Click the "Call" button and wait for the model to load and run.

- View and use model outputs.
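Choosing between local and online calling mostly comes down to which server address a request is sent to. The sketch below is a hypothetical illustration, not XDOllama's code: `resolve_base_url` and `LOCAL_DEFAULTS` are invented names, and the local addresses are assumed to be each service's usual out-of-the-box default (Ollama on port 11434, Xinference on port 9997, and a Docker self-hosted Dify whose API is served under `/v1`).

```python
from typing import Optional

# Hypothetical table of local defaults; assumed to match each service's
# out-of-the-box address, not values read from XDOllama.
LOCAL_DEFAULTS = {
    "ollama": "http://localhost:11434",
    "xinference": "http://localhost:9997",
    "dify": "http://localhost/v1",  # assumes a default Docker self-hosted Dify
}


def resolve_base_url(framework: str, mode: str, online_url: Optional[str] = None) -> str:
    """Return the base URL for 'ollama', 'xinference' or 'dify' in 'local' or 'online' mode."""
    key = framework.lower()
    if key not in LOCAL_DEFAULTS:
        raise ValueError(f"unsupported framework: {framework}")
    if mode == "local":
        return LOCAL_DEFAULTS[key]
    if mode == "online":
        if not online_url:
            raise ValueError("online mode requires the server URL")
        return online_url.rstrip("/")  # drop a trailing slash so paths join cleanly
    raise ValueError(f"unknown mode: {mode}")


print(resolve_base_url("Ollama", "local"))  # http://localhost:11434
```

Keeping the online URL user-supplied mirrors the "Online" option above, where the address is entered rather than assumed.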

Detailed operation flow for each function

Calling the local Ollama model

- Select "Ollama" from the main screen.

- Select the "Local" call method.

- Enter the model path and parameters.

- Click the "Call" button and wait for the model to load.

- View the model output.
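Under the hood, a local Ollama call is an HTTP request to the Ollama server's REST API. The sketch below shows an equivalent direct call using only Python's standard library; it is not XDOllama's implementation. It assumes Ollama is running on its default address `http://localhost:11434` and that the model has already been pulled; `llama3` is only a placeholder model name, and `build_generate_request` and `generate` are hypothetical helper names. Setting `"stream": false` makes `/api/generate` return a single JSON object whose `response` field holds the generated text.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL,
                           options: Optional[dict] = None) -> urllib.request.Request:
    """Build a POST to /api/generate; nothing is sent yet."""
    payload = {"model": model, "prompt": prompt, "stream": False}  # one JSON reply, no streaming
    if options:
        payload["options"] = options  # e.g. {"temperature": 0.7}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL,
             options: Optional[dict] = None, timeout: float = 120) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(model, prompt, base_url, options)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# Usage (requires a running server; placeholder model name):
# print(generate("llama3", "Why is the sky blue?"))
```

Separating request construction from sending makes it easy to inspect exactly what would be sent before any network call is made.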

Calling the online Ollama model

- Select "Ollama" from the main screen.

- Select the "Online" call method.

- Enter the URL and parameters of the online model.

- Click the "Call" button and wait for the model to load.

- View the model output.
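An online Ollama server speaks the same REST API as a local one; only the base URL changes. A remote Ollama instance normally has to be started with `OLLAMA_HOST=0.0.0.0` (or a specific interface address) before it accepts connections from other machines. Before calling, it can help to confirm that the server actually has the model, since `GET /api/tags` lists the models available on it. The helpers below are hypothetical, and `http://192.0.2.10:11434` is a placeholder address.

```python
import json
import urllib.request


def build_tags_request(base_url: str) -> urllib.request.Request:
    """GET /api/tags lists the models available on an Ollama server."""
    return urllib.request.Request(f"{base_url.rstrip('/')}/api/tags", method="GET")


def model_names(tags_response: dict) -> list:
    """Pull the model names out of an /api/tags JSON body."""
    return [m["name"] for m in tags_response.get("models", [])]


def has_model(names: list, model: str) -> bool:
    """Match 'llama3' against 'llama3:latest', since untagged pulls get the :latest tag."""
    return model in names or f"{model}:latest" in names


def remote_has_model(base_url: str, model: str, timeout: float = 10) -> bool:
    """Query the (remote) server; requires network access to it."""
    with urllib.request.urlopen(build_tags_request(base_url), timeout=timeout) as resp:
        return has_model(model_names(json.loads(resp.read())), model)


# Usage (placeholder address): remote_has_model("http://192.0.2.10:11434", "llama3")
```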

Calling the local Xinference model

- Select "Xinference" on the main screen.

- Select the "Local" call method.

- Enter the model path and parameters.

- Click the "Call" button and wait for the model to load.

- View the model output.
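Xinference exposes an OpenAI-compatible REST API, so a local call can be expressed as a request to `/v1/chat/completions`. This sketch is not XDOllama's code. It assumes Xinference was started with `xinference-local` on its default port 9997 and that a chat model has already been launched; that model's UID goes in the `model` field (`my-llm` is a placeholder), and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

XINFERENCE_URL = "http://localhost:9997"  # default port of `xinference-local`


def build_chat_request(model_uid: str, prompt: str, base_url: str = XINFERENCE_URL,
                       temperature: Optional[float] = None) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request; nothing is sent yet."""
    payload = {"model": model_uid, "messages": [{"role": "user", "content": prompt}]}
    if temperature is not None:
        payload["temperature"] = temperature
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Return the assistant text from an OpenAI-style completion body."""
    return response["choices"][0]["message"]["content"]


def chat(model_uid: str, prompt: str, base_url: str = XINFERENCE_URL, timeout: float = 120) -> str:
    """Send the request; requires a running Xinference server with the model launched."""
    with urllib.request.urlopen(build_chat_request(model_uid, prompt, base_url), timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read()))


# Usage (placeholder model UID): chat("my-llm", "Summarize this paragraph: ...")
```

Because the API follows the OpenAI shape, an existing OpenAI-compatible client pointed at the same base URL is an alternative to hand-built requests.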

Calling the online Xinference model

- Select "Xinference" on the main screen.

- Select the "Online" call method.

- Enter the URL and parameters of the online model.

- Click the "Call" button and wait for the model to load.

- View the model output.
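An online Xinference server exposes the same OpenAI-compatible REST API as a local one, just at a remote base URL. A quick way to see which model UIDs it is currently running is `GET /v1/models`, which returns an OpenAI-style list of the form `{"data": [{"id": ...}]}`. If the server has authentication enabled, a bearer token is assumed to go in the `Authorization` header. The address `https://xinference.example.com` and the helper names are placeholders.

```python
import json
import urllib.request
from typing import Optional


def build_models_request(base_url: str, api_key: Optional[str] = None) -> urllib.request.Request:
    """GET /v1/models on a remote Xinference server; nothing is sent yet."""
    headers = {}
    if api_key:  # assumption: only needed when the server has authentication enabled
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(f"{base_url.rstrip('/')}/v1/models", headers=headers, method="GET")


def model_ids(models_response: dict) -> list:
    """Collect model UIDs from an OpenAI-style model list."""
    return [m["id"] for m in models_response.get("data", [])]


def list_remote_models(base_url: str, api_key: Optional[str] = None, timeout: float = 10) -> list:
    """Query the server; requires network access to it."""
    with urllib.request.urlopen(build_models_request(base_url, api_key), timeout=timeout) as resp:
        return model_ids(json.loads(resp.read()))


# Usage (placeholder address): list_remote_models("https://xinference.example.com")
```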

Calling the local Dify application

- Select "Dify" from the main screen.

- Select the "Local" call method.

- Enter the application path and parameters.

- Click the "Call" button and wait for the application to load.

- View the application output.
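Dify applications are called through Dify's service API, authenticated with the per-app API key shown on the app's API access page. The sketch below sends one message to a chat app via `POST /chat-messages` in `blocking` mode, which returns the full reply with the text in an `answer` field; it is not XDOllama's code. It assumes a default Docker self-hosted Dify whose API base is `http://localhost/v1`; `app-xxxx`, `user-1` and `build_chat_message_request` are placeholders.

```python
import json
import urllib.request
from typing import Optional

DIFY_LOCAL_API = "http://localhost/v1"  # assumed API base of a default Docker self-hosted Dify


def build_chat_message_request(api_key: str, query: str, user: str,
                               base_url: str = DIFY_LOCAL_API,
                               inputs: Optional[dict] = None) -> urllib.request.Request:
    """Build a blocking-mode request to a Dify chat app; nothing is sent yet."""
    payload = {
        "inputs": inputs or {},       # values for the app's input variables, if any
        "query": query,               # the user's message
        "response_mode": "blocking",  # wait for the full answer instead of streaming
        "user": user,                 # caller-chosen identifier for the end user
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Usage (requires a running Dify instance; placeholder key):
# with urllib.request.urlopen(build_chat_message_request("app-xxxx", "Hello", "user-1")) as resp:
#     print(json.loads(resp.read())["answer"])
```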

Calling the online Dify application

- Select "Dify" from the main screen.

- Select the "Online" call method.

- Enter the URL and parameters of the online application.

- Click the "Call" button and wait for the application to load.

- View the application output.
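An online Dify application uses the same service API as a self-hosted one at a different base URL; for Dify Cloud this is `https://api.dify.ai/v1`. To keep a multi-turn chat going, the `conversation_id` returned in each blocking response is sent back with the next message, and an empty string starts a new conversation. `DifyChat` is a hypothetical helper, not part of Dify or XDOllama, and the key and user values are placeholders.

```python
import json
import urllib.request

DIFY_CLOUD_API = "https://api.dify.ai/v1"  # Dify Cloud; a self-hosted server would use its own URL


class DifyChat:
    """Hypothetical helper that carries conversation_id between turns of a Dify chat app."""

    def __init__(self, api_key: str, user: str, base_url: str = DIFY_CLOUD_API):
        self.api_key = api_key
        self.user = user
        self.base_url = base_url.rstrip("/")
        self.conversation_id = ""  # empty string starts a new conversation

    def build_request(self, query: str) -> urllib.request.Request:
        """Build a blocking-mode /chat-messages request for the current conversation."""
        payload = {
            "inputs": {},
            "query": query,
            "response_mode": "blocking",
            "user": self.user,
            "conversation_id": self.conversation_id,
        }
        return urllib.request.Request(
            f"{self.base_url}/chat-messages",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Authorization": f"Bearer {self.api_key}", "Content-Type": "application/json"},
            method="POST",
        )

    def handle_response(self, response: dict) -> str:
        """Remember the conversation for the next turn and return the answer text."""
        self.conversation_id = response.get("conversation_id", self.conversation_id)
        return response["answer"]

    def ask(self, query: str, timeout: float = 60) -> str:
        """Send one message; requires network access to the Dify server."""
        with urllib.request.urlopen(self.build_request(query), timeout=timeout) as resp:
            return self.handle_response(json.loads(resp.read()))


# Usage (placeholder key): DifyChat("app-xxxx", "user-1").ask("Hello")
```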

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
