Deploying a DeepSeek-R1 WeChat Chatbot Locally on Windows

Happy New Year, everyone! Lately my WeChat Moments feed has been full of DeepSeek-R1. I'm sure you've all heard of DeepSeek, our homegrown open-source model, and there are already plenty of tutorials online on deploying DeepSeek-R1 locally. Today let's do something different: using a practical example, I'll walk you step by step through putting DeepSeek-R1 to work so we can see how powerful it really is!

This issue shows how to connect a locally deployed DeepSeek-R1 to WeChat to build an intelligent WeChat chatbot. The setup has three main parts: deploying the model locally with Ollama, connecting to WeChat, and adjusting the configuration.

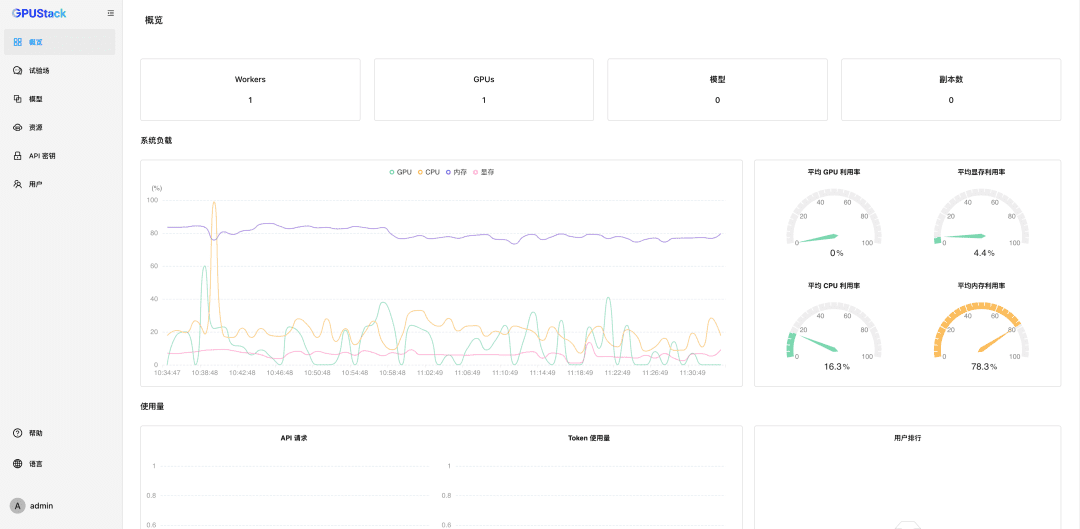

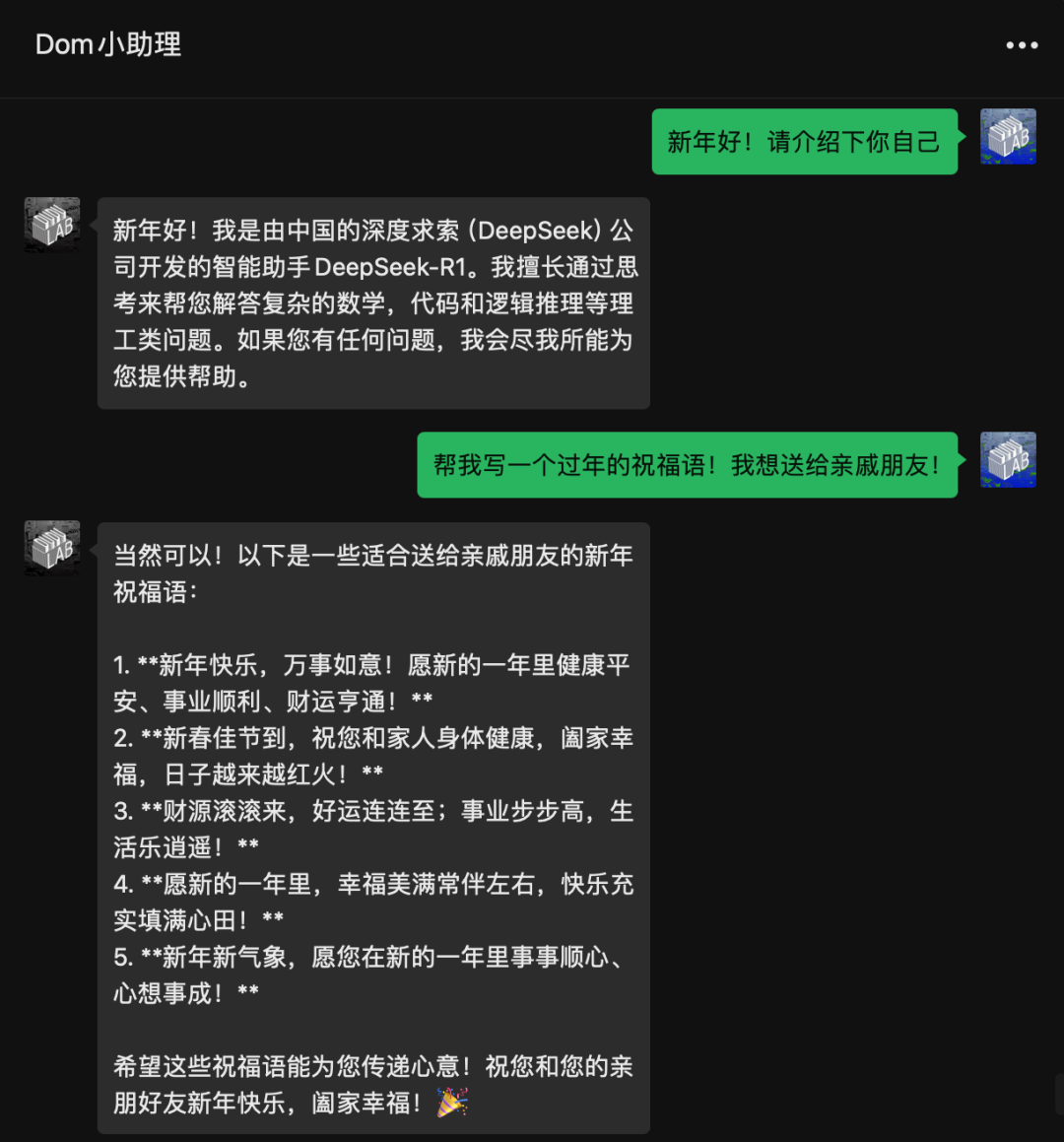

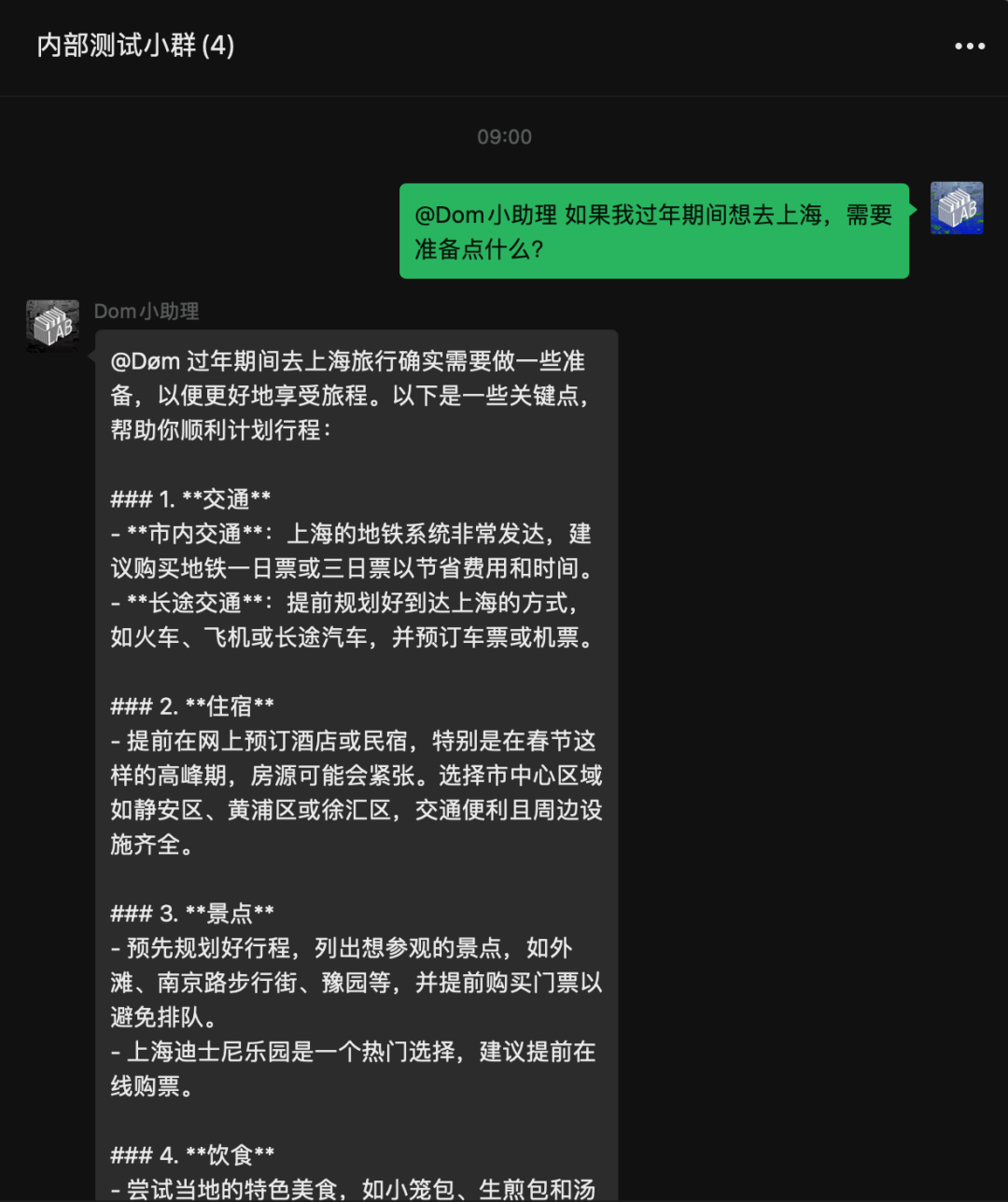

Here's what it looks like once connected. Everything runs locally, with no calls to DeepSeek's API!

The whole process isn't difficult and takes about 10 minutes. I've packaged up all the fiddly parts, so all you need to do is download, install, and run!

Local deployment of DeepSeek-R1

First, deploy DeepSeek-R1 locally. If you can't run DeepSeek-R1 on your own machine, see: Deploying DeepSeek-R1 Open Source Models Online with Free GPU Computing Power

Note that the 1.5B, 7B, 8B, and similar models released so far are "distilled" Qwen/Llama models enhanced with R1's reasoning. They are not the real R1; you can think of them as not pure-blooded R1. Only the full 671B version is the real R1. Of course, ordinary consumer graphics cards can't handle the full 671B version, so we'll start with a distilled version.

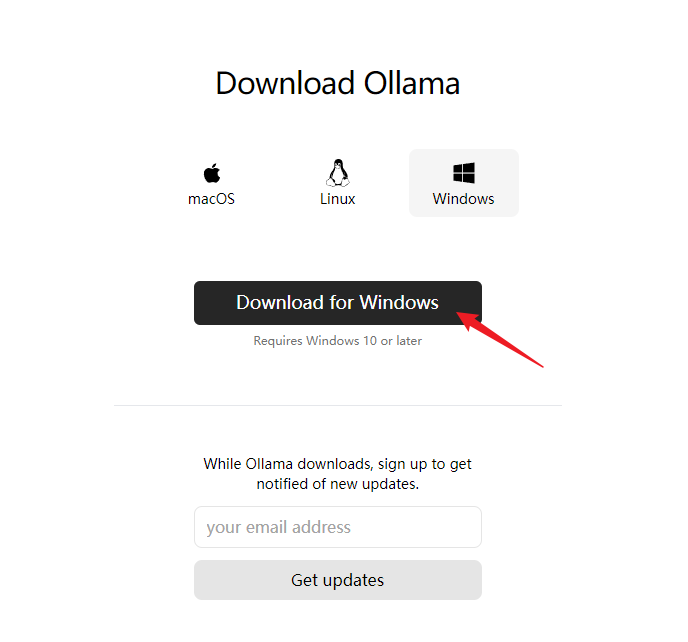

Installation of Ollama

Here we use Ollama. I've put the installer on my cloud drive, or you can download it from the official website.

Official website:

https://ollama.com/

Download the Windows version. (Note: because of WeChat's limitations, the WeChat integration currently only supports the Windows platform.)

Setting the installation and model paths

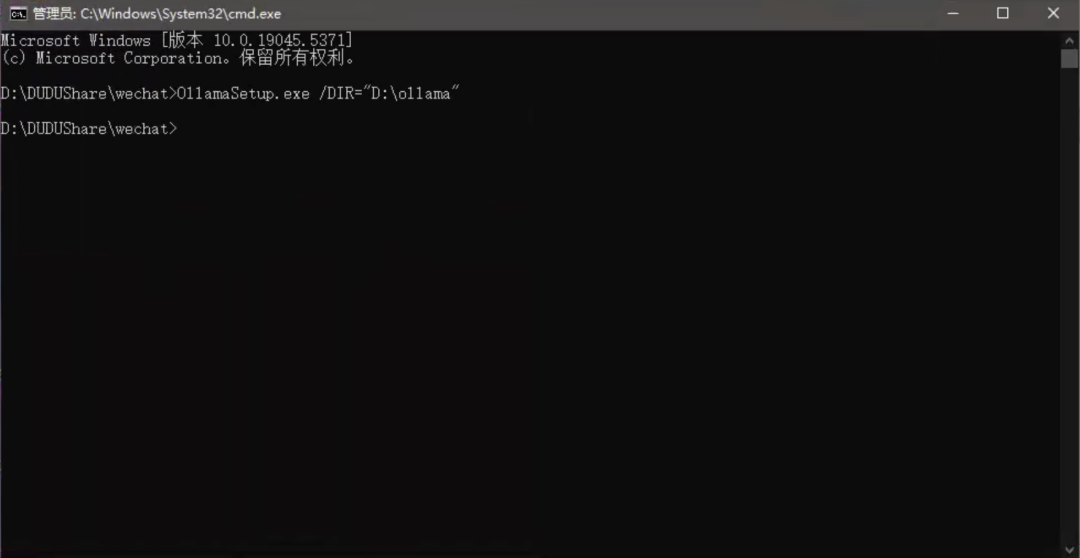

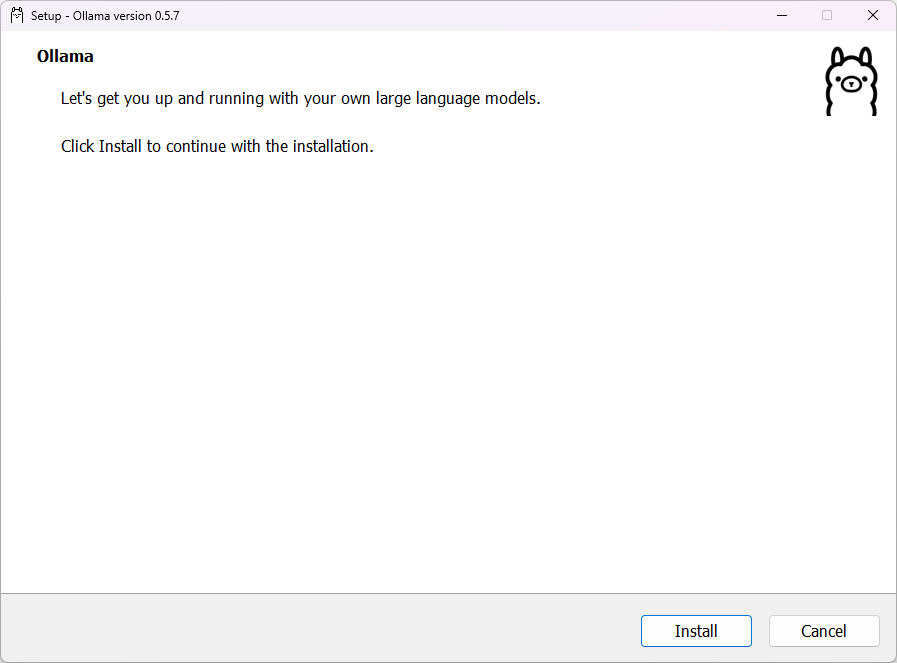

Run the installer to install Ollama. By default it installs to the C drive, but you can specify the installation path by launching the installer from the command line with the /DIR= argument:

OllamaSetup.exe /DIR="D:\ollama"

If you've already installed Ollama on the C drive but want to change the installation directory:

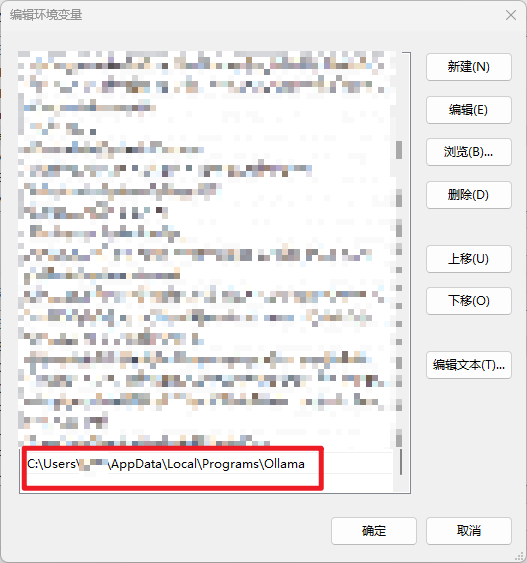

Move the Ollama folder at this path to the directory where you want it installed:

C:\Users\YourUsername\AppData\Local\Programs\Ollama (replace YourUsername with your Windows user name)

For example:

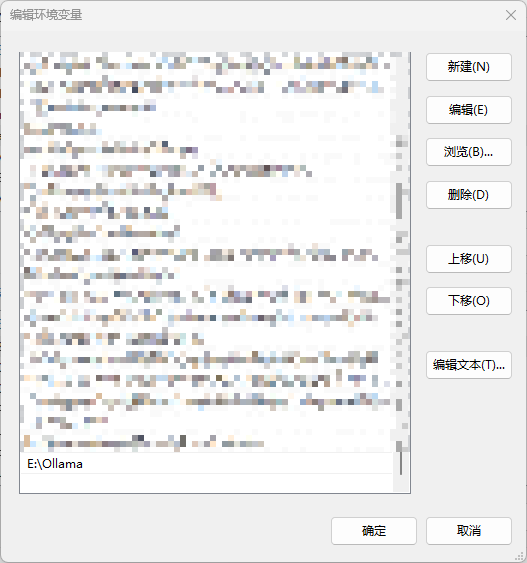

E:\Ollama

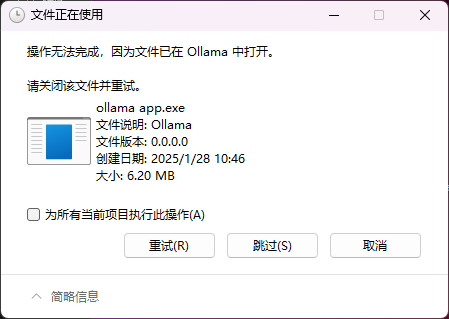

You may run into the following error while moving the folder. This happens because Ollama starts automatically after installation.

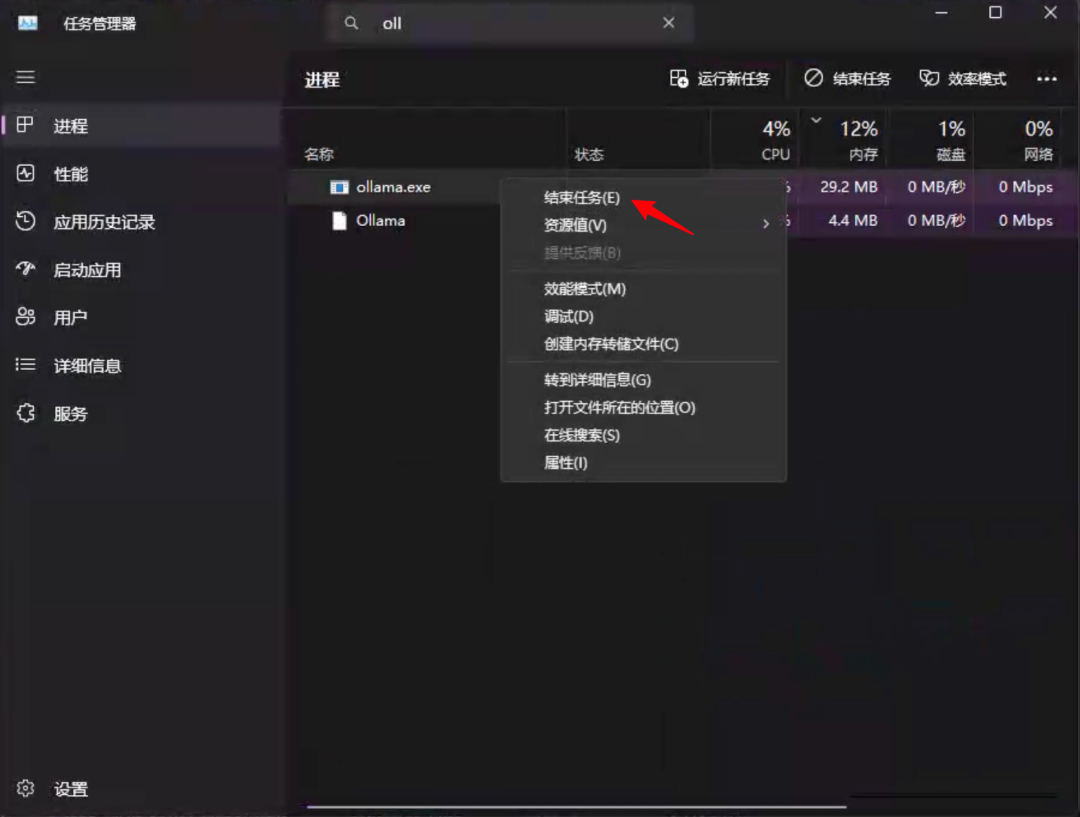

Find both the Ollama and ollama.exe processes in Task Manager, right-click each one, and choose End task. End Ollama first, then ollama.exe.

Next, update the environment variables.

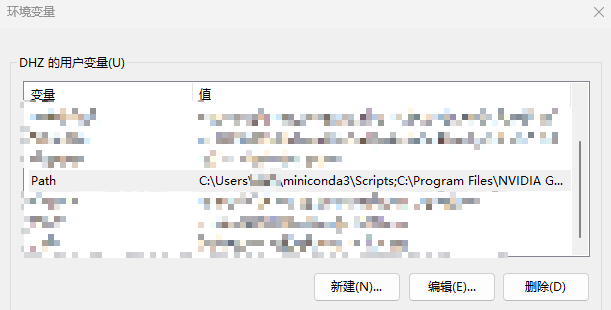

Open Settings > Advanced system settings > Environment Variables, find Path, and double-click it to edit.

Find the Ollama entry that points to the C drive and change it to the directory you specified.
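For reference, the Path edit above just swaps one entry for another. Here is a minimal Python sketch of that swap, assuming the Windows `;` separator and the example folders used in this article (the username `me` is hypothetical). It only computes the new value and does not modify your system:

```python
import ntpath


def relocate_path_entries(path_value: str, old_dir: str, new_dir: str) -> str:
    """Return a Path string in which entries equal to old_dir are replaced by new_dir.

    Comparison is case-insensitive and ignores trailing backslashes,
    since Windows paths are case-insensitive.
    """
    def norm(p: str) -> str:
        # ntpath.normcase lowercases and converts "/" to "\" on any OS
        return ntpath.normcase(p.rstrip("\\"))

    target = norm(old_dir)
    entries = path_value.split(";")
    updated = [new_dir if norm(e) == target else e for e in entries]
    return ";".join(updated)


if __name__ == "__main__":
    old = r"C:\Users\me\AppData\Local\Programs\Ollama"  # hypothetical username "me"
    current = r"C:\Windows\system32;" + old
    print(relocate_path_entries(current, old, r"E:\Ollama"))
```

You would still enter the result in the Path editor yourself. Computing it first simply makes it easy to confirm that only the Ollama entry changed.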

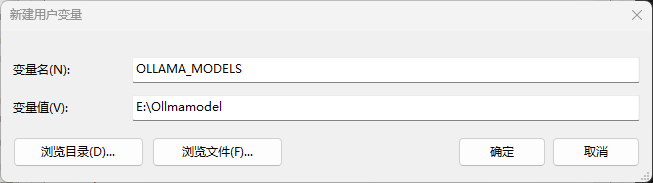

Add a new user variable, OLLAMA_MODELS. It sets where downloaded models are stored; if you don't set it, models go to your user folder on the C drive by default.
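The lookup can be sketched in a few lines of Python: use OLLAMA_MODELS if it is set, otherwise fall back to the default folder under the user profile (`%USERPROFILE%\.ollama\models` on Windows). This is an illustrative helper (`resolve_models_dir` is a made-up name), not part of Ollama:

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_models_dir(env: Mapping[str, str], home: Optional[Path] = None) -> Path:
    """Return the model folder: OLLAMA_MODELS if set and non-blank, else ~/.ollama/models."""
    custom = env.get("OLLAMA_MODELS", "").strip()
    if custom:
        return Path(custom)
    base = home if home is not None else Path.home()
    return base / ".ollama" / "models"


if __name__ == "__main__":
    print(resolve_models_dir(os.environ))
```

Variables changed in the Environment Variables dialog only apply to programs started afterward, so open a new terminal (and restart Ollama) before checking.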

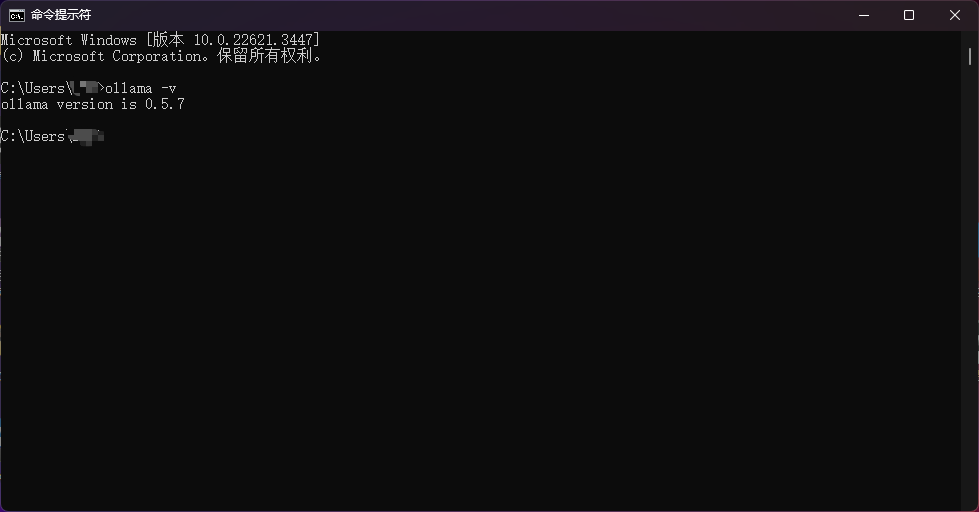

Once all of the above is set up, run ollama -v at the command line to verify that it worked.
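The same check can be scripted. A small Python sketch, assuming only that the `ollama` executable should be reachable through Path. It returns None when the executable is not found, which often means the Path entry still points to the old folder or the terminal was opened before the change:

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Run `ollama -v` and return its output, or None if ollama is not on Path."""
    exe = shutil.which("ollama")
    if exe is None:
        return None
    result = subprocess.run([exe, "-v"], capture_output=True, text=True, timeout=30)
    # Fall back to stderr in case the version or a warning is printed there
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version else "ollama not found on Path; open a new terminal and recheck Path")
```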

Download model

https://ollama.com/library/deepseek-r1:1.5b

Go to this page to see all the currently available R1 models.

The approximate video memory (VRAM) needed to run each model:

- deepseek-r1:1.5b - 1-2 GB of VRAM

- deepseek-r1:7b - 6-8 GB of VRAM

- deepseek-r1:8b - 8 GB of VRAM

- deepseek-r1:14b - 10-12 GB of VRAM

- deepseek-r1:32b - 24-48 GB of VRAM

- deepseek-r1:70b - 96-128 GB of VRAM

- deepseek-r1:671b - more than 496 GB of VRAM
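To turn the list above into a quick choice, here is a rough Python helper that returns the largest tag whose lower VRAM bound fits your card. The thresholds are just the lower ends of the ranges listed above, treated as assumptions rather than official requirements, and `pick_model` is an illustrative name:

```python
from typing import Optional

# Minimum VRAM (GB) per model: the lower end of each range in the list above.
# Rough figures only, ordered from smallest to largest model.
MIN_VRAM_GB = [
    ("deepseek-r1:1.5b", 1),
    ("deepseek-r1:7b", 6),
    ("deepseek-r1:8b", 8),
    ("deepseek-r1:14b", 10),
    ("deepseek-r1:32b", 24),
    ("deepseek-r1:70b", 96),
    ("deepseek-r1:671b", 496),
]


def pick_model(vram_gb: float) -> Optional[str]:
    """Return the largest model whose minimum VRAM fits, or None if none fit."""
    best = None
    for tag, need in MIN_VRAM_GB:
        if vram_gb >= need:
            best = tag
    return best


if __name__ == "__main__":
    print(pick_model(12))  # → deepseek-r1:14b
```

The returned tag is what you would pass to `ollama run`. Actual memory use also depends on context length and quantization, so treat a borderline result with caution.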

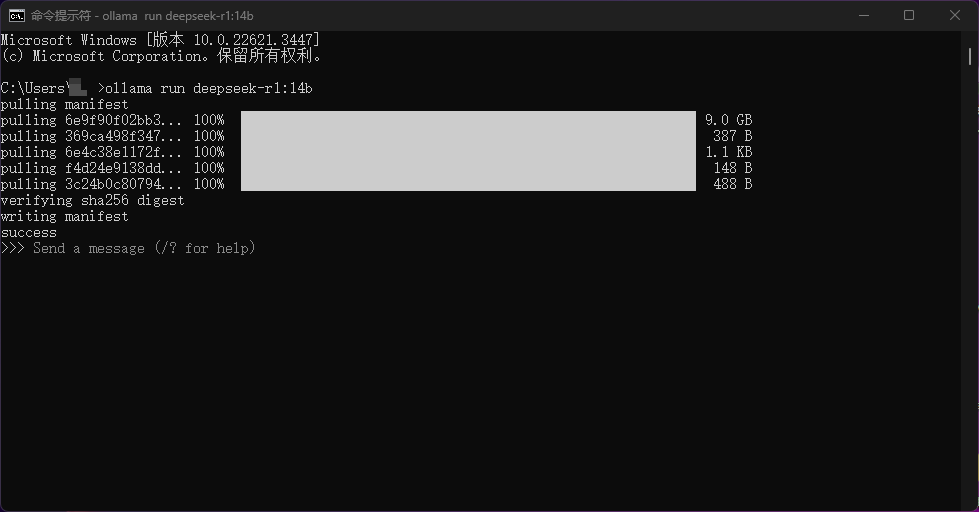

Choose a model that matches your computer's hardware, paste the command into the command line, and run it; the model will download automatically. For example, I ran ollama run deepseek-r1:14b.
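Besides the interactive `ollama run` prompt, Ollama serves a local HTTP API (by default at `http://localhost:11434`), which is what a bot talks to. A minimal Python sketch of a non-streaming `/api/chat` call using only the standard library, assuming Ollama is running with default settings and deepseek-r1:14b has been downloaded. The helper names are illustrative:

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(model: str, messages: list) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, messages: list, timeout: float = 300) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, messages), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]


if __name__ == "__main__":
    print(chat("deepseek-r1:14b", [{"role": "user", "content": "Hello, who are you?"}]))
```

Setting `"stream": False` returns the whole reply as one JSON object, which is simpler for a chatbot that sends one WeChat message per answer.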

I've also put the 1.5B and 14B models in the cloud drive folder. If your internet connection is slow, just unzip them into your model path and they'll run, with no need to download them again through ollama.

Deploying the bot and connecting it to WeChat

Once you've completed this step, you're close to done! The remaining steps are very simple.

We'll use the NGCBot project to connect the local DeepSeek to WeChat.

Project Address:

https://github.com/ngc660sec/NGCBot

The original project supports the APIs of platforms such as iFlytek Spark, Kimi, GPT, and DeepSeek, but not a locally deployed DeepSeek. I made the following changes to the original project and open-sourced them. Everyone is welcome to help maintain it!

- Support for local DeepSeek

- Hide the model's think (reasoning) output

- Support for contextual (multi-turn) dialogue
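DeepSeek-R1 models served through Ollama typically put their reasoning inside `<think>...</think>` tags before the final answer; hiding think means stripping that part before replying. Context support means resending earlier turns with each request. The following is a hypothetical Python sketch of both ideas, not the code from the modified NGCBot. The `ChatHistory` class, its 10-turn default limit, and the contact ID `wxid_example` are illustrative assumptions:

```python
import re
from collections import defaultdict, deque

THINK_RE = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_think(text: str) -> str:
    """Remove <think>...</think> blocks (non-greedy, across newlines) and trim whitespace."""
    return THINK_RE.sub("", text).strip()


class ChatHistory:
    """Hypothetical per-contact history that keeps only the most recent turns."""

    def __init__(self, max_turns: int = 10):
        # Each turn is one user message plus one assistant reply.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns * 2))

    def messages_for(self, contact_id: str, new_text: str) -> list:
        """Return stored context plus the new user message, in /api/chat format."""
        return list(self._turns[contact_id]) + [{"role": "user", "content": new_text}]

    def record(self, contact_id: str, user_text: str, reply: str) -> None:
        """Store a completed turn; the reply is saved without its think block."""
        turns = self._turns[contact_id]
        turns.append({"role": "user", "content": user_text})
        turns.append({"role": "assistant", "content": strip_think(reply)})


if __name__ == "__main__":
    history = ChatHistory(max_turns=2)
    raw = "<think>\nThe user greets me.\n</think>\n\nHello! How can I help?"
    print(strip_think(raw))  # → Hello! How can I help?
    history.record("wxid_example", "Hi", raw)
    print(history.messages_for("wxid_example", "What did I just say?"))
```

Capping the history with `deque(maxlen=...)` keeps each request short, which matters for small distilled models with limited context windows.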

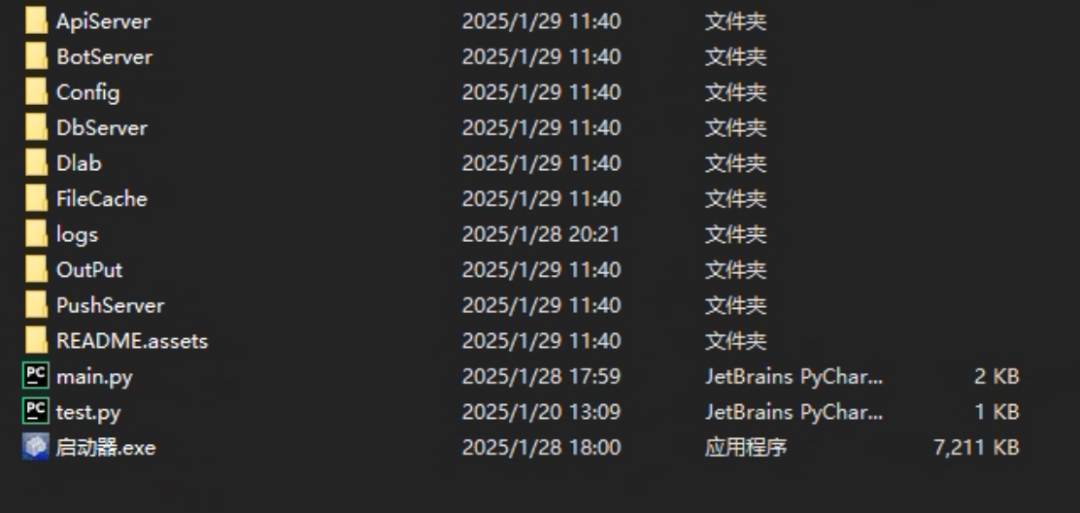

Unzip NGCBot.zip from the cloud drive.

After unzipping, double-click the launcher to start the project.

The program will open WeChat automatically, which may then show 只支持64位微信 ("only 64-bit WeChat is supported") or 当前微信版本不支持 ("the current WeChat version is not supported").

This is because the version of WeChat installed on the computer is too new; NGCBot requires a specific version.

Install the WeChat version I've provided, reopen the NGCBot project, scan the QR code to log in to WeChat, and you're done!

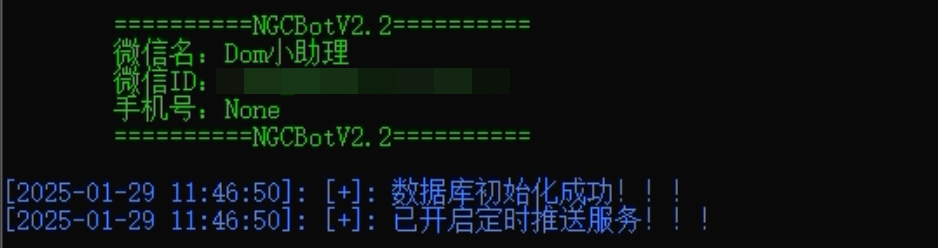

When the launcher shows the following, the service has initialized successfully.

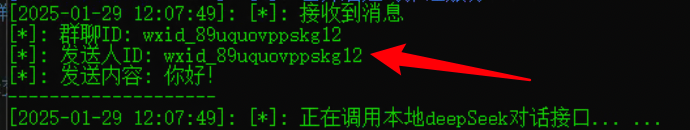

Now send a message to the logged-in WeChat account to test it.

Done!

Modify the BOT configuration

Hold on! The bot works, but don't celebrate just yet. You still need to know about NGCBot's configuration settings.

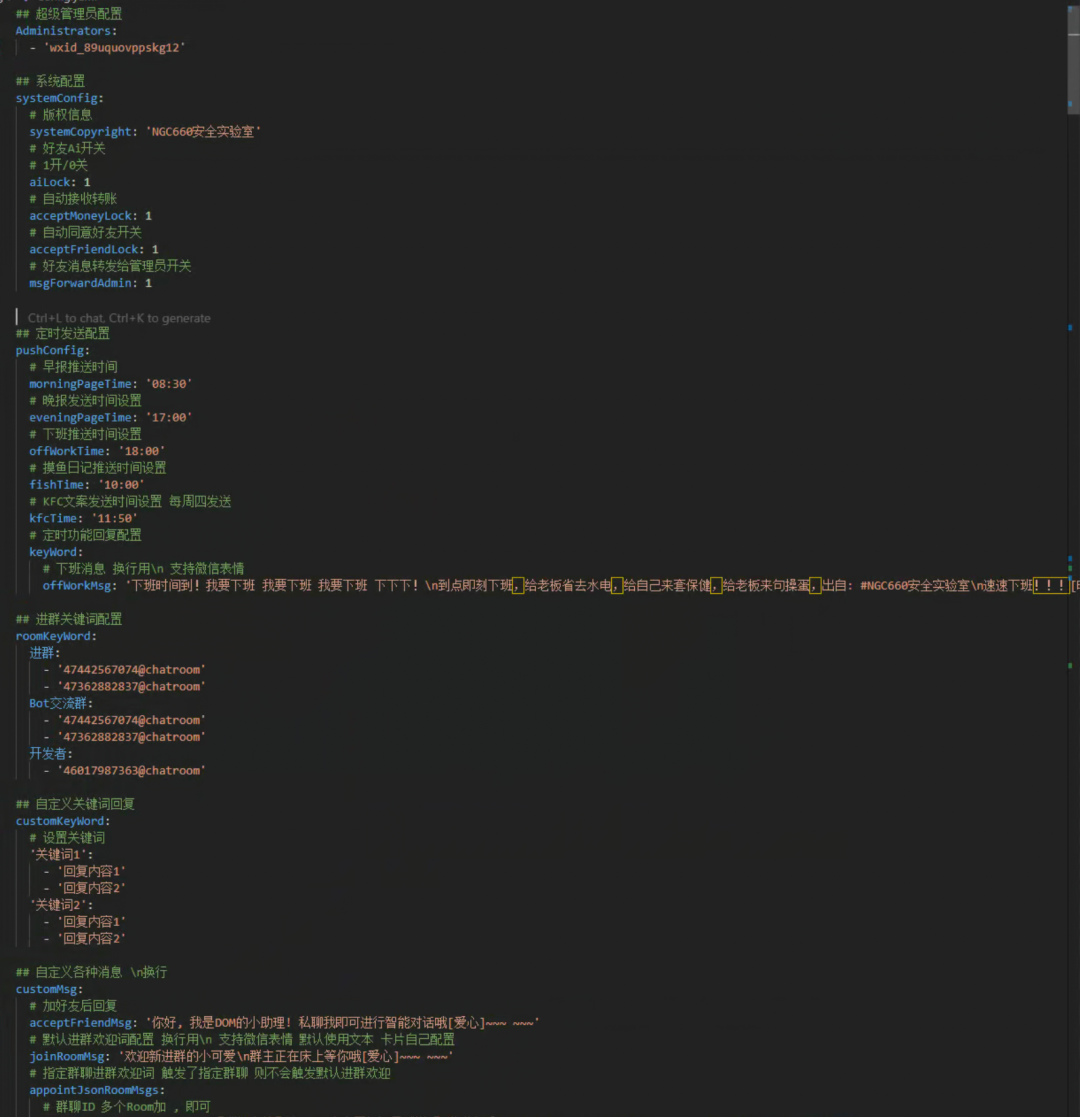

Open the Config/Config.yaml file in the NGCBot project root directory.

Only the two required settings are described here; for the rest, see the official documentation.

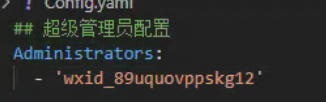

The first is the 超级管理员配置 (super administrator configuration): fill in the ID of your other WeChat account, which will act as the admin account.

To get the ID, send any message from that other WeChat account to the bot's account and copy the ID from it.

The second is the model name in deepSeekmodel under localDeepSeek: fill in whichever model you installed with ollama.
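A common snag is a deepSeekmodel value that doesn't exactly match an installed model. Here is a hedged Python sketch that compares the configured name against Ollama's `/api/tags` endpoint, which lists locally downloaded models (the same information `ollama list` prints). It does not parse Config.yaml, to avoid a YAML dependency, so you paste the value in by hand. The helper names are illustrative:

```python
import json
import urllib.request


def normalize_tag(name: str) -> str:
    """Ollama treats a model name without a tag as ':latest'."""
    return name if ":" in name else name + ":latest"


def is_model_installed(configured: str, tags_response: dict) -> bool:
    """Check whether the configured model name appears in an /api/tags response."""
    installed = {normalize_tag(m.get("name", "")) for m in tags_response.get("models", [])}
    return normalize_tag(configured.strip()) in installed


def fetch_tags(url: str = "http://localhost:11434/api/tags") -> dict:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    model = "deepseek-r1:14b"  # the value you entered in deepSeekmodel
    print(is_model_installed(model, fetch_tags()))
```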

Once these steps are done, congratulations: you now have a local DeepSeek-R1 WeChat chatbot.

FAQ

- Q: Can this be used on a Mac?

- A: No. The NGCBot project only supports Windows, although Ollama itself can be deployed on a Mac.

- Q: Does WeChat need to stay logged in all the time? Can I shut the computer down?

- A: Yes, it needs to stay running. It is equivalent to logging in to WeChat for Windows: you can still chat normally on your phone, but the bot stops working once the computer is shut down. To run it continuously, deploying it on a cloud server is recommended.

- Q: How is this different from the earlier chatgpt-on-wechat?

- A: They use different protocols. Some time ago WeChat blocked the interface that chatgpt-on-wechat used, whereas this hook-based NGCBot program is currently still very stable.

Getting the integration package

Tootsie Lab Version:

Quark: https://pan.quark.cn/s/bc26b60912da

Baidu: https://pan.baidu.com/s/1QKFWV1tMti9s4m9K_HAPFg?pwd=428a

Final words

Before the New Year, I got a private message saying that the chatgpt-on-wechat project's interface had been officially blocked by WeChat. I had planned to share NGCBot combined with COZE, but, hahaha, DeepSeek-R1 just happened to take over everywhere! So let's put COZE on the back burner for now. Happy New Year, everyone; I'm off to eat dumplings...

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
