What Is the Attention Mechanism? One Article to Understand It

Definition of Attention Mechanisms

The attention mechanism is a computational technique that loosely mimics human cognitive processes. It was first applied to machine translation and later became an important part of deep learning. The core idea is to let the model dynamically focus on the relevant parts of its input while processing information, much as a person automatically focuses on key words when reading. The importance of each input element is expressed as a weight: the higher the weight, the more attention that element receives. Technically, an attention function maps a query and a set of key-value pairs to an output, computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function between the query and the corresponding key.
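
As a rough illustration of this definition, the sketch below computes attention for a single query in plain Python. It assumes vectors are lists of floats; the names `attend`, `dot`, and `softmax` are hypothetical, and the dot product is only one possible choice of compatibility function (it can be swapped out through the `compat` parameter).

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product, used here as the compatibility (similarity) function."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> Vector:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    query: Sequence[float],
    keys: Sequence[Sequence[float]],
    values: Sequence[Sequence[float]],
    compat: Callable[[Sequence[float], Sequence[float]], float] = dot,
) -> Vector:
    """Map one query and a set of key-value pairs to a weighted sum of the values."""
    # One weight per key-value pair, derived from query/key compatibility.
    weights = softmax([compat(query, k) for k in keys])
    dim = len(values[0])
    # Weighted sum of the value vectors, feature by feature.
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


# The query matches the first key far better than the second,
# so the output is pulled strongly toward the first value.
out = attend([10.0, 0.0], keys=[[1.0, 0.0], [0.0, 1.0]], values=[[1.0, 0.0], [0.0, 1.0]])
print([round(x, 4) for x in out])
```

When the query is equally compatible with every key, the weights are uniform and the output is simply the average of the values.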

The most common implementation is dot-product attention, in which the attention distribution is obtained by taking the dot product of the query with each key and normalizing the results. Much of the mechanism's success comes from its ability to connect distant positions in a sequence directly, which addresses the long-range dependency problem of traditional recurrent neural networks. Over time, attention was extended from its original encoder-decoder setting to forms such as self-attention, which became the core component of the Transformer architecture. Beyond improving machine translation, the technique is now widely used in image processing, speech recognition, and many other fields, where it substantially improves a model's ability to capture important information.
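
For reference, the Transformer paper uses a scaled variant of dot-product attention. Stacking the queries, keys, and values as the rows of matrices Q, K, and V, with d_k the dimension of the keys, it can be written as:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

The softmax is applied row by row, so each query gets its own distribution over the keys. Dividing by \sqrt{d_k} keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward nearly one-hot weights.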

Historical origins of the attention mechanism

- Foundations in cognitive psychology: The concept of attention is rooted in the study of human cognition. In 1890, psychologist William James described attention as "the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought." This selective character of human attention provides a theoretical basis for computational models.

- Early computational models: In the 1990s, researchers began to bring the idea of attention into neural networks. An alignment model proposed by Yoshua Bengio's team in 1997 to improve sequence-to-sequence learning can be seen as a prototype of the attention mechanism.

- Breakthrough in machine translation: In 2014, Bahdanau et al. were the first to successfully apply an attention mechanism to neural machine translation, using soft alignment to markedly improve translation quality on long sentences. This work opened the way for the wide use of attention in natural language processing.

- The Transformer revolution: In 2017, Vaswani et al. published the paper "Attention Is All You Need", which built the Transformer architecture entirely on attention, dropped recurrent structures altogether, and set new performance benchmarks.

- Cross-domain expansion: As research deepened, attention spread from natural language processing to computer vision, speech recognition, recommender systems, and many other fields, becoming an important building block of deep learning models.

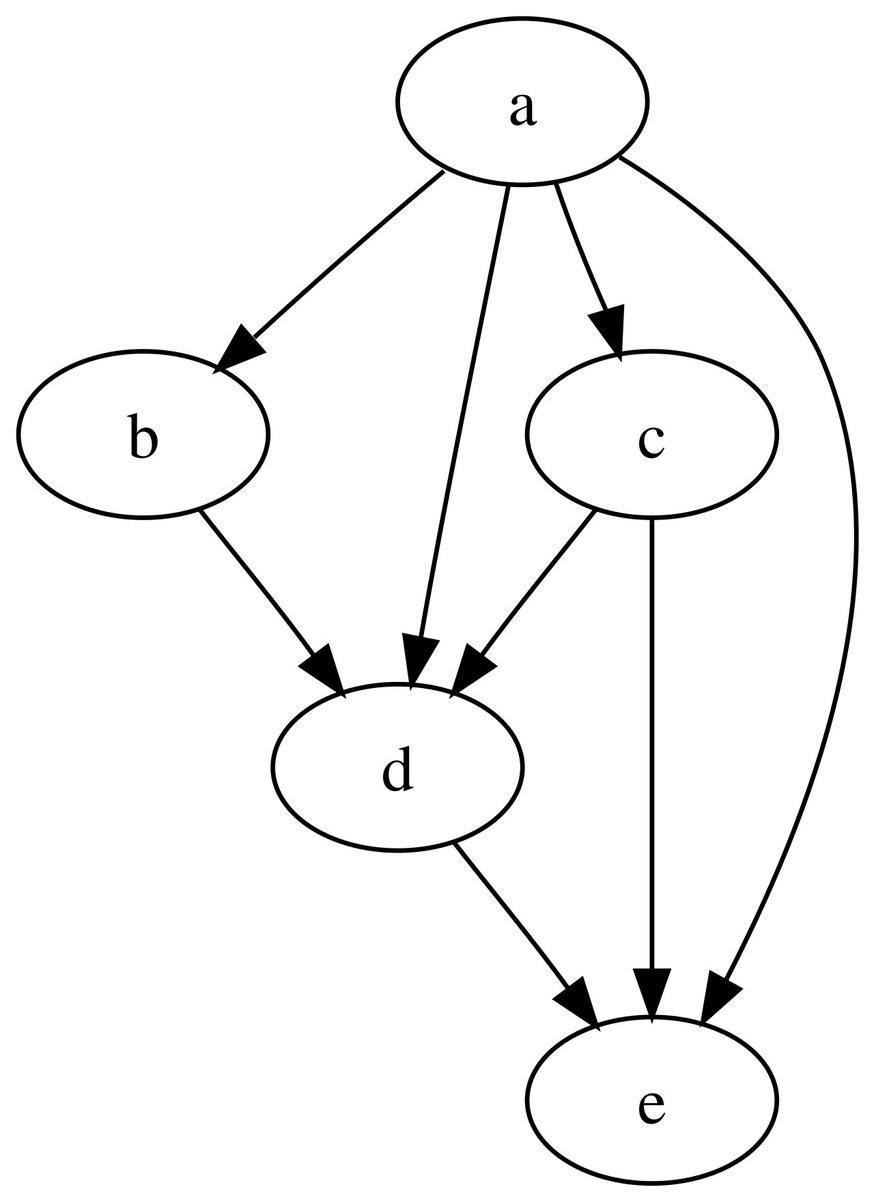

How the Attention Mechanism Works

- Input stage: The input sequence is first converted into vector representations, with each word or element mapped to a high-dimensional vector. In models such as the Transformer, these vectors encode both the element itself and its position in the sequence.

- Query-key-value framework: Attention works with three components: queries, keys, and values. A query represents what the current position is looking for, a key is what each element is compared against, and a value is the information actually extracted from that element.

- Similarity calculation: An attention score is computed from the similarity between the query and each key. Common scoring methods include dot-product and additive attention; the dot product is widely used because it can be computed efficiently as a matrix multiplication.

- Weight normalization: A softmax function converts the attention scores into a probability distribution whose weights sum to 1, yielding the attention weight matrix.

- Weighted sum output: Finally, each value vector is multiplied by its attention weight and the results are summed, producing an output representation that concentrates the most relevant information in the input sequence.
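
The five steps above can be sketched end to end in plain Python as scaled dot-product self-attention. This is a toy under stated assumptions: step 1 is taken as already done (three tokens given as 2-d vectors), identity matrices stand in for the learned projection matrices (the names `w_q`, `w_k`, `w_v` are hypothetical), and the 1/sqrt(d_k) scaling follows the Transformer formulation.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product: (n x d) @ (d x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax_rows(scores: Matrix) -> Matrix:
    """Normalize each row of scores into a probability distribution (step 4)."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix):
    """Return (output, weights) for scaled dot-product self-attention."""
    # Step 2: project each input vector into a query, a key, and a value.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # Step 3: similarity of every query with every key, scaled by sqrt(d_k).
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # Step 4: softmax turns each row of scores into attention weights.
    weights = softmax_rows(scores)
    # Step 5: each output row is a weighted sum of the value vectors.
    return matmul(weights, v), weights


# Step 1 (assumed done already): three tokens embedded as 2-d vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row is one token's attention distribution over the three tokens; in a trained model the projection matrices would be learned rather than fixed to the identity.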

Main types of attention mechanisms

- Soft versus hard attention: Soft attention assigns continuous weights over the entire input sequence, so it is differentiable and easy to train; hard attention selects only a specific position, which is computationally cheaper but not differentiable, so it must be trained with methods such as reinforcement learning.

- Global versus local attention: Global attention considers all input positions, which is computationally expensive but accurate; local attention considers only positions within a window, balancing accuracy and efficiency.

- Self-attention: Self-attention, also called intra-attention, lets each position in a sequence compute attention weights over all positions in the same sequence, which makes it good at capturing dependencies within the sequence.

- Multi-head attention: Several attention heads run in parallel, letting the model attend simultaneously to information in different representation subspaces; this increases its expressive power and its ability to capture multiple kinds of relationships.

- Cross-attention: Used between two different sequences, with one sequence supplying the queries and the other supplying the keys and values. It is commonly used to connect the decoder to the encoder in encoder-decoder architectures and for cross-modal interaction.
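
The variants listed above differ mainly in what the queries, keys, and values are and which positions may be attended to. The sketch below shows them side by side in plain Python. All function names are hypothetical, and the multi-head function is deliberately simplified: it only splits the feature dimension into slices, whereas real multi-head attention also applies learned projections per head and an output projection, which are omitted here.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # assumes at least one unmasked (finite) score per row
    exps = [math.exp(s - m) for s in row]  # exp(-inf) == 0.0 for masked slots
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix,
              mask: Optional[List[List[bool]]] = None) -> Matrix:
    """Soft scaled dot-product attention; mask[i][j] == False blocks query i from key j."""
    scale = math.sqrt(len(k[0]))
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
        if mask is not None:
            scores = [s if mask[i][j] else -math.inf for j, s in enumerate(scores)]
        w = softmax(scores)
        out.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return out


def hard_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Hard attention: each query copies the single best-matching value (argmax, not differentiable)."""
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) for kj in k]
        best = max(range(len(k)), key=lambda j: scores[j])
        out.append(list(v[best]))
    return out


def local_mask(n: int, window: int) -> List[List[bool]]:
    """Local attention: position i may only see positions at most `window` steps away."""
    return [[abs(i - j) <= window for j in range(n)] for i in range(n)]


def multi_head(q: Matrix, k: Matrix, v: Matrix, heads: int) -> Matrix:
    """Simplified multi-head: split features into `heads` slices, attend per slice, concatenate."""
    d = len(q[0])
    assert d % heads == 0, "feature size must divide evenly across heads"
    size = d // heads

    def cols(m: Matrix, h: int) -> Matrix:
        return [row[h * size:(h + 1) * size] for row in m]

    per_head = [attention(cols(q, h), cols(k, h), cols(v, h)) for h in range(heads)]
    return [sum((per_head[h][i] for h in range(heads)), []) for i in range(len(q))]


x = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]  # e.g. encoder states
y = [[1.0, 0.0, 0.0, 1.0]]                                              # e.g. one decoder state
self_out = attention(x, x, x)                          # self-attention
local_out = attention(x, x, x, mask=local_mask(3, 1))  # local attention, window of 1
cross_out = attention(y, x, x)                         # cross-attention: y queries x
mh_out = multi_head(x, x, x, heads=2)                  # simplified 2-head self-attention
hard_out = hard_attention(y, x, x)                     # hard attention picks one row of x
```

Self-attention and cross-attention share the same function; only the source of the queries changes. A local window is just a mask on the score matrix.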

Application scenarios of the attention mechanism

- Machine translation: In neural machine translation, attention helps the model automatically focus on the relevant parts of the source sentence as it generates each target-language word, which significantly improves translation quality for long sentences.

- Image recognition and processing: In computer vision, attention lets models focus on key regions of an image and has produced good results in image classification, object detection, and image generation.

- Speech recognition: Attention aligns audio frames with the output text, handles input and output sequences of different lengths, and improves recognition accuracy.

- Recommender systems: By analyzing sequences of user behavior, attention can track changes in user interests and focus on the past behaviors most relevant to a recommendation, improving accuracy.

- Medical diagnostic aids: In medical image analysis, attention helps the model focus on lesion areas to support doctors' decisions, while also making the model more interpretable.

Advantages of the attention mechanism

- Parallel computation: Unlike recurrent neural networks, which process a sequence step by step, attention computes the weights for all positions in parallel, greatly improving training and inference efficiency.

- Long-range dependency modeling: Attention directly connects any two positions in a sequence, which makes dependencies in long sequences easier to capture and mitigates the vanishing-gradient problem of traditional RNNs.

- Improved interpretability: Attention weight distributions offer a visual window into the model's decision-making process, helping researchers see what the model focuses on and making it more transparent.

- Flexible architecture design: Attention can be integrated into many neural network architectures without changing their main structure, opening up more possibilities for model design.

- Multimodal fusion: Attention is well suited to modeling interactions between different modalities and can effectively combine text, images, audio, and other kinds of information.

Challenges in implementing the attention mechanism

- Computational complexity: The cost of standard attention grows quadratically with sequence length, so long sequences run into compute and memory limits.

- Large memory footprint: The attention weight matrices must be stored, and their size grows rapidly with sequence length, which places heavy demands on hardware.

- Over-smoothing: Attention weights are sometimes spread too evenly, so the model fails to focus on key information and performance suffers.

- Training instability: In multi-head attention in particular, different heads may learn at inconsistent rates, which calls for careful hyperparameter tuning.

- Positional information: Self-attention is not aware of position on its own, so additional positional encodings are needed to inject information about sequence order.
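
Two of these challenges can be made concrete with a small sketch. The hypothetical helper `score_matrix_bytes` counts the memory for one layer's attention-weight matrices, assuming 32-bit floats (4 bytes), no batching, and the given number of heads. The second part uses an unprojected self-attention (queries, keys, and values all equal to the input, a simplifying assumption) to show that reordering the input only reorders the output, which is why positional encodings are needed.

```python
import math
from typing import List

Matrix = List[List[float]]


def score_matrix_bytes(seq_len: int, heads: int = 1, bytes_per_value: int = 4) -> int:
    """Memory for one layer's attention-weight matrices: heads * n * n values."""
    return heads * seq_len * seq_len * bytes_per_value


def self_attention(x: Matrix) -> Matrix:
    """Unprojected scaled dot-product self-attention (Q = K = V = x), no positional input."""
    scale = math.sqrt(len(x[0]))
    out = []
    for qi in x:
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in x]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e / total * row[c] for e, row in zip(exps, x)) for c in range(len(x[0]))])
    return out


# Quadratic growth: doubling the sequence length quadruples the matrix.
print(score_matrix_bytes(1024), score_matrix_bytes(2048))

# Order blindness: reversing the input merely reverses the output rows,
# so without positional encodings the two orders are indistinguishable.
x = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]
forward = self_attention(x)
backward = self_attention(x[::-1])
print(all(abs(a - b) < 1e-12 for r1, r2 in zip(forward[::-1], backward) for a, b in zip(r1, r2)))
```

Under these assumptions a 1,024-token sequence needs 4,194,304 bytes (4 MiB) per head for its weights, and 2,048 tokens need four times that.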

Directions for improving the attention mechanism

- Efficient attention design: Researchers have proposed variants such as sparse attention and local attention that reduce computational complexity and let models handle longer sequences.

- Memory optimization: Techniques such as chunked computation and gradient checkpointing reduce the memory footprint, allowing attention to run in resource-constrained environments.

- Structural innovations: New methods such as relative position encoding and rotary position embedding (RoPE) handle positional relationships within sequences more effectively.

- Multi-scale attention: Combining attention at different granularities lets a model attend to both local detail and global context, improving performance.

- Theoretical research: Deeper theoretical analysis of attention aims to explain how it works and where its limits lie, guiding better model design.
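
Two of these ideas can be shown in miniature. `attention_chunked` is a hypothetical helper that processes queries in chunks, one simple form of chunked computation; production kernels such as FlashAttention also tile over the keys with an online softmax, which this sketch does not do. `rope` follows the usual rotary position embedding formulation, assuming the common base of 10000 and an even feature count; the check at the end illustrates that, after rotation, a query-key score depends only on the distance between the two positions.

```python
import math
from typing import List

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_chunked(q: Matrix, k: Matrix, v: Matrix, chunk: int) -> Matrix:
    """Scaled dot-product attention that handles `chunk` queries at a time.

    Only a (chunk x n) block of scores exists at once instead of the full (n x n)
    matrix. Each query's softmax depends only on its own row of scores, so the
    result matches the unchunked computation (chunk == len(q)).
    """
    scale = math.sqrt(len(k[0]))
    out: Matrix = []
    for start in range(0, len(q), chunk):
        block = [[dot(qi, kj) / scale for kj in k] for qi in q[start:start + chunk]]
        for scores in block:
            w = softmax(scores)
            out.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return out


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotary position embedding: rotate feature pair (i, i+1) by pos * base**(-i/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even number of features"
    out = list(x)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


x = [[0.1 * (i + j) for j in range(4)] for i in range(5)]
print(attention_chunked(x, x, x, chunk=2) == attention_chunked(x, x, x, chunk=len(x)))

# With RoPE, a query-key score depends only on the distance between positions.
q, k = [0.3, -1.2, 0.8, 0.5], [1.0, 0.4, -0.7, 0.2]
print(abs(dot(rope(q, 3), rope(k, 7)) - dot(rope(q, 103), rope(k, 107))) < 1e-9)
```

Chunking trades peak memory for a loop over query blocks without changing the result; because RoPE is a pure rotation, it also leaves vector lengths unchanged.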

Future Development of Attention Mechanisms

- Unified cross-modal architectures: Attention is expected to become a foundational framework for processing multimodal data in a unified way, advancing multimodal AI.

- Neuroscience inspiration: Drawing further on the neural mechanisms of the human attention system to develop more biologically plausible models of attention.

- Adaptive attention: Developing systems that automatically adjust the scope and precision of attention to the difficulty of the task, improving computational efficiency.

- Interpretability: Combining visualization techniques with interpretation methods to make the explanations provided by attention more accurate and reliable.

- Edge deployment: Using model compression and optimization so that attention-based models run efficiently on mobile and IoT devices.

© Copyright notes

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
