What Is Artificial Intelligence (AI) Governance? A One-Article Explainer

Definition and core meaning of AI governance

AI governance is a comprehensive framework spanning technology, ethics, law and society. It guides, manages and oversees the entire lifecycle of AI systems, from design and development through deployment and end use. Its core goal is not to impede technological innovation. Instead, it aims to ensure that AI is always developed and applied in a direction that is safe, reliable, responsible and consistent with the shared values of humanity.

AI governance can be understood as the "traffic rules" and "constitution" of the AI world. It should preserve the vitality and innovation of this new world (letting vehicles drive at high speed). It should also keep that world orderly, fair and free of catastrophic accidents (installing traffic lights and setting speed limits).

AI governance has several dimensions:
- Technically, it requires systems to be transparent, interpretable and robust.
- Ethically, it emphasizes fairness, freedom from bias, privacy protection and human oversight.
- Legally, it requires clear mechanisms for assigning responsibility and clear compliance standards.
- Socially, it calls for broad public participation and global collaboration.

Ultimately, AI governance answers a fundamental question: how do we harness a force that may become smarter and more powerful than we are, so that it serves human well-being rather than becoming an out-of-control threat? This is not a question only for large corporations and governments. How it is answered shapes the future of every one of us.

Core components of AI governance

AI governance is not an abstract concept but a concrete system. It is made up of several mutually reinforcing core elements that together keep AI activities orderly and properly regulated.

- Ethical guidelines first: Ethics is the cornerstone of governance. Widely recognized ethical principles for AI typically include:
  - fairness (avoiding algorithmic discrimination);
  - transparency and interpretability (the decision-making process can be understood);
  - privacy protection and data governance (user data is handled properly);
  - non-maleficence (systems are safe and secure and cause no harm);
  - accountability (someone is answerable when problems occur);
  - human oversight and control (final decisions rest with humans).

  These principles give value guidance for concrete technology development and rule-making.

- Legal and regulatory framework: Soft ethical guidelines need hard laws and regulations to be enforced, and AI regulatory systems are being built at an accelerating pace around the world. The EU's Artificial Intelligence Act is one example. It classifies AI applications by risk level and prohibits applications with unacceptable risk (e.g., social scoring). It also imposes strict pre-market requirements and ongoing oversight on high-risk applications (e.g., medical diagnostic AI). A legal framework clarifies the legal responsibilities of developers and deployers and draws red lines that must not be crossed.

- Transparency and interpretability: These are the key to building trust. A "black box" AI model can hardly be trusted, even when its decisions are correct. Governance requires AI to be as transparent as possible so that humans can scrutinize and understand its decision logic. When an AI rejects a loan application or recommends a medical treatment, it must give clear, understandable reasons rather than an unchallengeable "the algorithm decided".

- Whole-lifecycle risk management: Governance requires managing the risks of AI systems across their entire lifecycle:
  - In the design phase, impact assessments anticipate possible social, ethical and legal risks.
  - In the development phase, secure-by-design principles are adopted.
  - Before and after deployment, rigorous testing and validation are carried out.
  - During operation, continuous monitoring detects and corrects performance degradation or newly emerging biases in a timely manner.

- Clear accountability mechanisms: When an AI system causes harm, there must be a clear chain of accountability. Who exactly is responsible? It could be the engineers who designed the algorithm, the company that collected the data, the executives who decided to deploy it, or the end users who used it. Governance frameworks must define these responsibilities in advance so that victims can seek redress and wrongdoers are held accountable. This in turn pushes everyone involved to act more prudently.

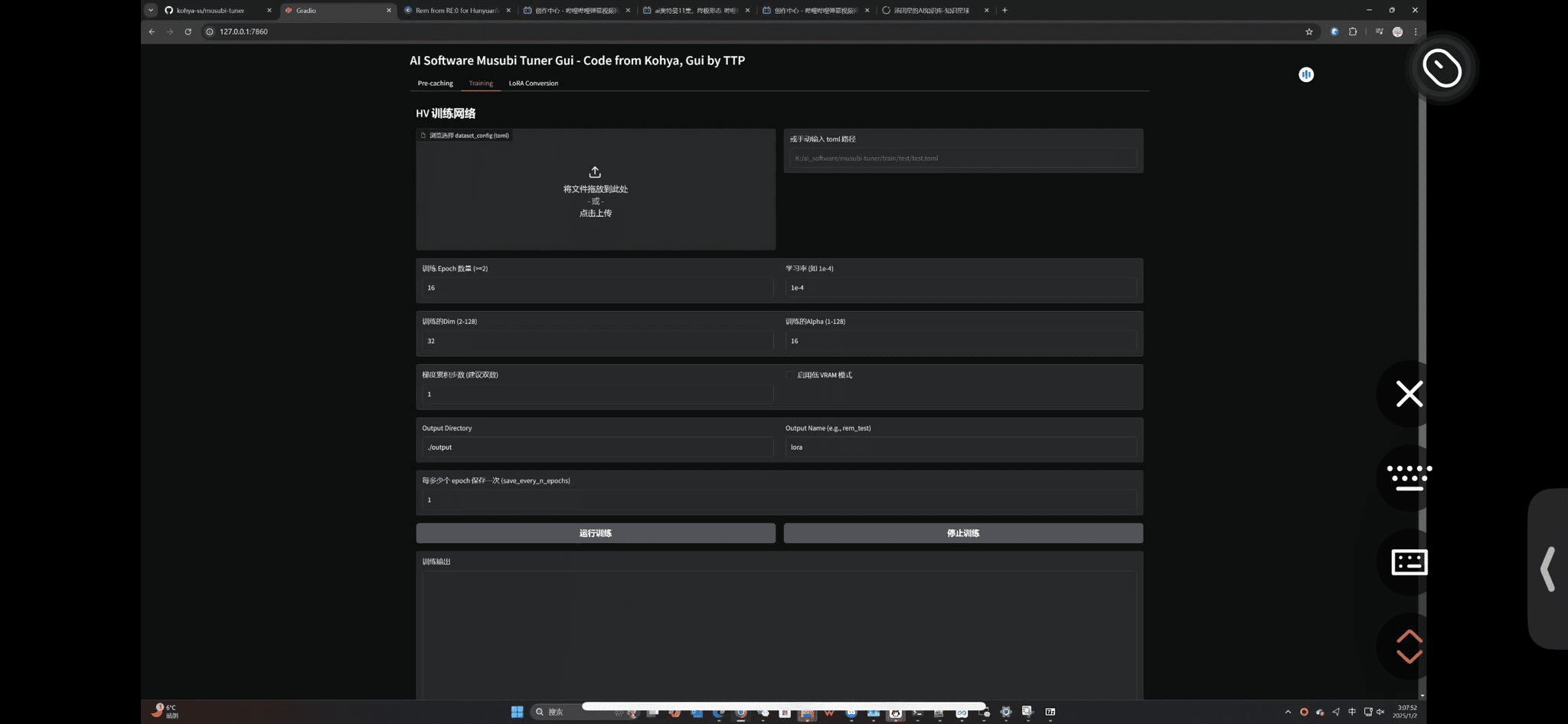

- Technical tools and standards: Governance needs concrete technical tools to be realized in practice. Examples include:
  - "explainable AI" (XAI) tools that interpret complex models;
  - techniques such as "federated learning" that protect data privacy while training AI;
  - fairness assessment toolkits that detect algorithmic bias quantitatively.

  At the same time, unified technical standards should be established to ensure interoperability between systems and consistency in security assessments.
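
The risk-based grading described under the legal framework can be pictured as a lookup from use case to tier to obligations. The sketch below is a deliberately simplified, hypothetical model. The tier names follow the EU AI Act's four-level structure. The use-case labels, the `USE_CASE_TIERS` table and the obligation strings are illustrative assumptions, not the Act's legal text, and real classification requires legal analysis.

```python
from enum import Enum


class RiskTier(Enum):
    """Four tiers modeled loosely on the EU AI Act's risk-based structure."""
    UNACCEPTABLE = "unacceptable"  # prohibited outright
    HIGH = "high"                  # permitted, subject to strict obligations
    LIMITED = "limited"            # mainly transparency duties
    MINIMAL = "minimal"            # no additional obligations


# Hypothetical, simplified mapping from use-case labels to tiers.
# Real classification depends on the Act's text and annexes plus legal review.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "medical_diagnosis": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Illustrative obligation labels per tier (not the legal wording).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy"],
    RiskTier.HIGH: [
        "conformity assessment",
        "risk management system",
        "human oversight",
        "event logging",
        "post-market monitoring",
    ],
    RiskTier.LIMITED: ["disclose AI interaction to users"],
    RiskTier.MINIMAL: [],
}


def classify(use_case: str) -> RiskTier:
    # Unknown use cases default to HIGH so they are reviewed, not waved through.
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


def obligations_for(use_case: str) -> list:
    return OBLIGATIONS[classify(use_case)]
```

Defaulting unknown use cases to the high-risk tier is a conservative choice made for this sketch. An unclassified system gets reviewed rather than silently treated as harmless.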

Multi-level implementation framework for AI governance

Turning governance from concept into practice requires a concerted effort across a multi-level framework, spanning the inside and the outside of organizations and running from the micro to the macro level.

- Macro-governance at the national and global levels: National governments act as the "referee". They legislate, establish regulatory agencies (e.g., national AI offices), formulate national AI strategies, and take part in global rule-making. International organizations (e.g., the United Nations, the OECD, the G20) work to establish global AI governance guidelines and cooperation mechanisms. Their aims are to avoid "rule fragmentation" and a race to the bottom, and to respond jointly to the global challenges posed by AI.

- Meso-governance at the industry and sector level: Each industry develops guidelines and best practices for AI applications tailored to its own characteristics. For example:
  - The financial industry focuses on the fairness of AI in credit decisions and on anti-fraud compliance.
  - The healthcare industry focuses on the reliability of AI diagnostic tools, privacy protection and the allocation of clinical responsibility.
  - The automotive industry jointly develops safety testing standards for autonomous driving.

  Industry self-regulation and proactive standard-setting are an important part of effective governance.

- Micro-governance at the organizational and enterprise level: Enterprises are the most important unit for putting governance into practice. Responsible technology companies establish internal AI ethics committees, formulate corporate AI development principles, provide ethics training for employees, and create roles such as chief AI ethics officer. Enterprises need to integrate governance requirements into every stage of product management, from data collection and model training to product launch and iterative updates.

- Embedded governance at the technical level: Governance requirements need to be "designed" directly into AI systems, an approach known as Governance by Design. Fairness constraints, privacy-preserving computation and logging are built into the code as it is written. This makes compliance and ethics intrinsic to the technology rather than an after-the-fact patch.

- Continuous monitoring and audit assessment: Deploying an AI system is not the end of the road. The governance framework calls for independent third-party audit mechanisms that give in-service AI systems regular "health check-ups". These check whether the systems still meet fairness, security and compliance requirements. Effective feedback and reporting channels should also let users and internal staff report the problems they find, closing the oversight loop.

- Emergency response and exit mechanisms: Even the best governance must be able to cope with the unforeseen. Contingency plans must be drawn up in advance for serious failures or malicious misuse. They should allow operators to intervene quickly, contain the damage, or even shut the AI system down via a so-called "kill switch". Procedures for disposing of data after a system failure or decommissioning should also be defined in advance.
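
Governance by Design, as described above, can be as simple as making decision logging impossible to skip. The following Python sketch assumes three hypothetical pieces, none taken from a specific product:
- an `audited` decorator;
- a toy `approve_loan` rule;
- an in-memory `AUDIT_LOG` list standing in for an append-only store.

```python
import functools
import json
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for an append-only audit store (hypothetical).
AUDIT_LOG: List[Dict[str, Any]] = []


def audited(model_version: str) -> Callable:
    """Wrap a decision function so every call is logged with its inputs and model version."""
    def decorator(predict: Callable[[Dict[str, Any]], Any]) -> Callable[[Dict[str, Any]], Any]:
        @functools.wraps(predict)
        def wrapper(features: Dict[str, Any]) -> Any:
            decision = predict(features)
            AUDIT_LOG.append({
                "timestamp": time.time(),
                "model_version": model_version,
                "function": predict.__name__,
                # Deterministic serialization so entries can be compared or hashed later.
                "inputs": json.dumps(features, sort_keys=True),
                "decision": decision,
            })
            return decision
        return wrapper
    return decorator


@audited(model_version="credit-v1.3")  # hypothetical version tag
def approve_loan(features: Dict[str, Any]) -> bool:
    # Toy rule standing in for a real model: income must cover 3x monthly debt.
    return features["income"] >= 3 * features["monthly_debt"]
```

Because the logging lives in the decorator, developers cannot call the model through this interface without leaving a record.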
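
A "kill switch" is, at its core, a flag checked before every automated decision, paired with a predefined fallback path. This minimal sketch assumes a hypothetical `KillSwitch` class and a fallback that routes cases to human review. A real deployment would also need authentication, persistence and alerting.

```python
import threading
from typing import Callable, TypeVar

X = TypeVar("X")
Y = TypeVar("Y")


class KillSwitch:
    """Thread-safe flag that operators can trip to halt automated decisions (hypothetical)."""

    def __init__(self) -> None:
        self._tripped = threading.Event()
        self.reason = ""

    def trip(self, reason: str) -> None:
        self.reason = reason
        self._tripped.set()

    def reset(self) -> None:
        self.reason = ""
        self._tripped.clear()

    @property
    def active(self) -> bool:
        return self._tripped.is_set()


def guarded_decision(switch: KillSwitch, model: Callable[[X], Y],
                     fallback: Callable[[X], Y], x: X) -> Y:
    # Checked on every call, so tripping the switch takes effect immediately.
    if switch.active:
        return fallback(x)
    return model(x)
```

For example, once an operator calls `switch.trip("bias spike detected")`, every subsequent call goes to the fallback (such as a human-review queue) until the switch is reset.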

Real-world challenges to AI governance

In practice, idealized governance frameworks run into many challenges at the technical, social and international-cooperation levels.

- Technology evolves much faster than governance: AI technology iterates in cycles of months or even weeks, while legislation and standard-setting often take years. This "speed imbalance" means governance frequently lags behind the latest applications, leaving regulatory gaps.

- Global consensus is elusive: Countries differ significantly in cultural values, legal systems and development paths. They weigh concepts such as privacy, freedom and security differently, which makes globally harmonized AI governance rules extremely difficult to achieve. Geopolitical rivalry further deepens these differences. A "fragmented" landscape in which multiple rule sets coexist is likely to emerge.

- Complexity of determining accountability: An AI system's decision-making involves many parties along a long and complex chain. Consider a self-driving car powered by deep learning algorithms that is involved in an accident. Responsibility is hard to pin down. It could lie with the sensor supplier, the algorithm engineer, the car manufacturer, the owner, or whoever pushed the latest software update. The existing legal system struggles to handle this complexity.

- Technical difficulty of auditing and monitoring: The most advanced large-scale generative AI models can have parameter counts reaching into the trillions. Their internal mechanisms are so complex that even their developers cannot fully understand all of their behaviors. Effectively auditing and monitoring such a "black box" nested within a "black box" is a huge technical challenge.

- Huge gaps in talent and knowledge: Interdisciplinary talent with a deep understanding of AI technology, ethics, law and public policy is extremely scarce. Policymakers may not understand the technology, while technologists may lack ethical and legal perspectives. Barriers to effective dialogue between the two sides reduce the quality and enforceability of governance policies.

The profound value of AI governance for society

Despite these challenges, building a sound AI governance system has irreplaceable and far-reaching value for society as a whole.

- Building trust for technology adoption: Trust is the social foundation for applying any technology at scale. Governance can show the public that AI is safe, reliable and responsible, easing people's fears and doubts. This accelerates AI adoption in key areas such as healthcare, education and transportation and truly unleashes its potential to improve lives.

- Preventing systemic risks and safeguarding public safety: The misuse of AI could bring unprecedented risks, such as autonomous weapons slipping out of control, large-scale cyberattacks, and social deception using deepfakes. A governance system works like the Basel Accords in the financial sector. It puts safety guardrails in place that keep individual incidents from triggering a chain reaction that could lead to global catastrophe.

- Shaping a level playing field and guiding healthy market development: Clear rules give all market participants a fair starting line. Governance curbs unfair competitive advantages gained through data misuse, privacy invasion or biased algorithms. It encourages businesses to compete through genuine technological innovation and quality service, and it promotes healthy, sustainable market development.

- Protecting fundamental human rights and dignity: One of the core missions of AI governance is to ensure that technological progress does not erode fundamental human rights. It guards human dignity in the digital age in three ways:
  - curbing excessive digital surveillance through strict data protection rules;
  - safeguarding the rights and interests of disadvantaged groups through fairness requirements;
  - keeping ultimate control in human hands through human oversight.

- Pooling global wisdom to address common challenges: AI governance has brought governments, businesses, academia and civil society to the same table. This dialogue can become a new nexus for global cooperation. It can steer powerful AI technologies toward humanity's most pressing problems, such as climate change, public health and poverty.

- Responsibility to future generations and sustainable development: Today's governance decisions will profoundly shape the society of the future. Responsible governance means weighing the long-term social, environmental and ethical impacts of AI, not only its short-term economic benefits. This ensures that technological development serves the interests of future generations and achieves truly sustainable development.

Global landscape and comparison of AI governance

Major countries and regions are exploring different paths to AI governance based on their own philosophies and national circumstances, producing a diverse global landscape.

- The European Union's "rights-based" regulatory model: The EU has taken the lead in establishing the world's strictest and most comprehensive regulatory framework for AI. Its core idea is to classify AI applications by risk and apply "pyramid-style" regulation. The EU model places great weight on protecting fundamental rights and emphasizes the precautionary principle. Through strong regulations such as the Artificial Intelligence Act and the Digital Services Act, it sets very high compliance standards for technology companies. In doing so, it seeks to become the de facto global rule maker for the digital domain.

- The United States' "innovation-first" model of flexible governance: The U.S. prefers sector-by-sector regulation by existing authorities (e.g., the FTC and FDA), emphasizing industry self-regulation and technical solutions. Its strategy is more flexible and aims to avoid heavy-handed regulation that could stifle Silicon Valley's innovative vitality. The U.S. government offers soft guidance through executive orders and investment guidelines and encourages identifying and solving problems in the course of development. It concentrates national resources on AI R&D and frontier exploration, including military applications.

- China's "safe and controllable" model of comprehensive development: China's AI governance emphasizes being "safe and controllable" and "people-centred". It encourages technological development while placing great weight on national security and social stability. China has issued a series of regulations, including the Interim Measures for the Administration of Generative Artificial Intelligence Services. These require AI-generated content to adhere to core socialist values and stress that providers bear primary responsibility for security, privacy and bias. China's governance model carries stronger overtones of state leadership and holistic planning.

- Exploration and adaptation in other regions:
  - The United Kingdom has put forward a "context-based" approach. It forgoes a dedicated AI regulator and relies on existing regulators to adapt their strategies flexibly to specific situations.
  - Singapore, the United Arab Emirates and other small, advanced economies are building "AI sandbox" environments. They attract global AI companies and talent with light-touch regulation and excellent infrastructure, acting as "testing grounds".

- International organizations as coordinators: The Organisation for Economic Co-operation and Development (OECD) has proposed AI principles endorsed by a large number of countries. The United Nations has been exploring a global AI governance body, often likened to the International Atomic Energy Agency (IAEA). These organizations offer a rare platform for dialogue among countries in different camps. They seek a minimum global consensus that avoids the worst-case scenarios.

- Transnational influence and self-regulation of tech giants: Large technology companies such as Google, Microsoft, Meta and OpenAI command AI resources and influence that exceed those of many countries. The ethical codes and governance practices developed within these companies form a separate, "private" system of global governance. This system both cooperates and is in tension with the "public" governance systems of sovereign states.

The indispensable role of public participation in AI governance

AI governance is not a closed-door meeting of government officials, business executives and technologists. Without the active participation of the general public, it can be neither effective nor legitimate.

- Public education is the cornerstone of participation: AI literacy should be promoted through the media, schools and public lectures, teaching the public the fundamentals, capabilities, limitations and potential risks of AI. A public with a basic understanding of AI can engage in meaningful discussion and oversight instead of being stuck between science-fiction fears and blind optimism.

- Building diversified channels for soliciting opinions: When formulating AI-related regulations, legislative and regulatory bodies should take the initiative to hold hearings and issue drafts for public comment, and broadly incorporate the voices of different social groups, such as those from consumer organizations, labor groups, minority communities, and disability rights protection agencies, to ensure that governance policies reflect diverse interests and values.

- Encouraging citizen deliberation and consensus conferences: Organize deliberative panels of randomly selected ordinary citizens to hold in-depth discussions on specific AI ethical dilemmas (e.g., boundaries of public use of facial recognition) and produce a report of recommendations based on neutral information provided by experts. This format allows decision makers to be informed by well-considered public opinion.

- The watchdog role of the media and investigative journalism: The media is an important window through which the public sees AI issues. Investigative journalists can cover algorithmic bias incidents in depth (e.g., ProPublica's exposé of racial bias in the COMPAS recidivism risk assessment system). Such coverage turns technical issues into public issues, attracting widespread attention and discussion and creating strong pressure through public scrutiny.

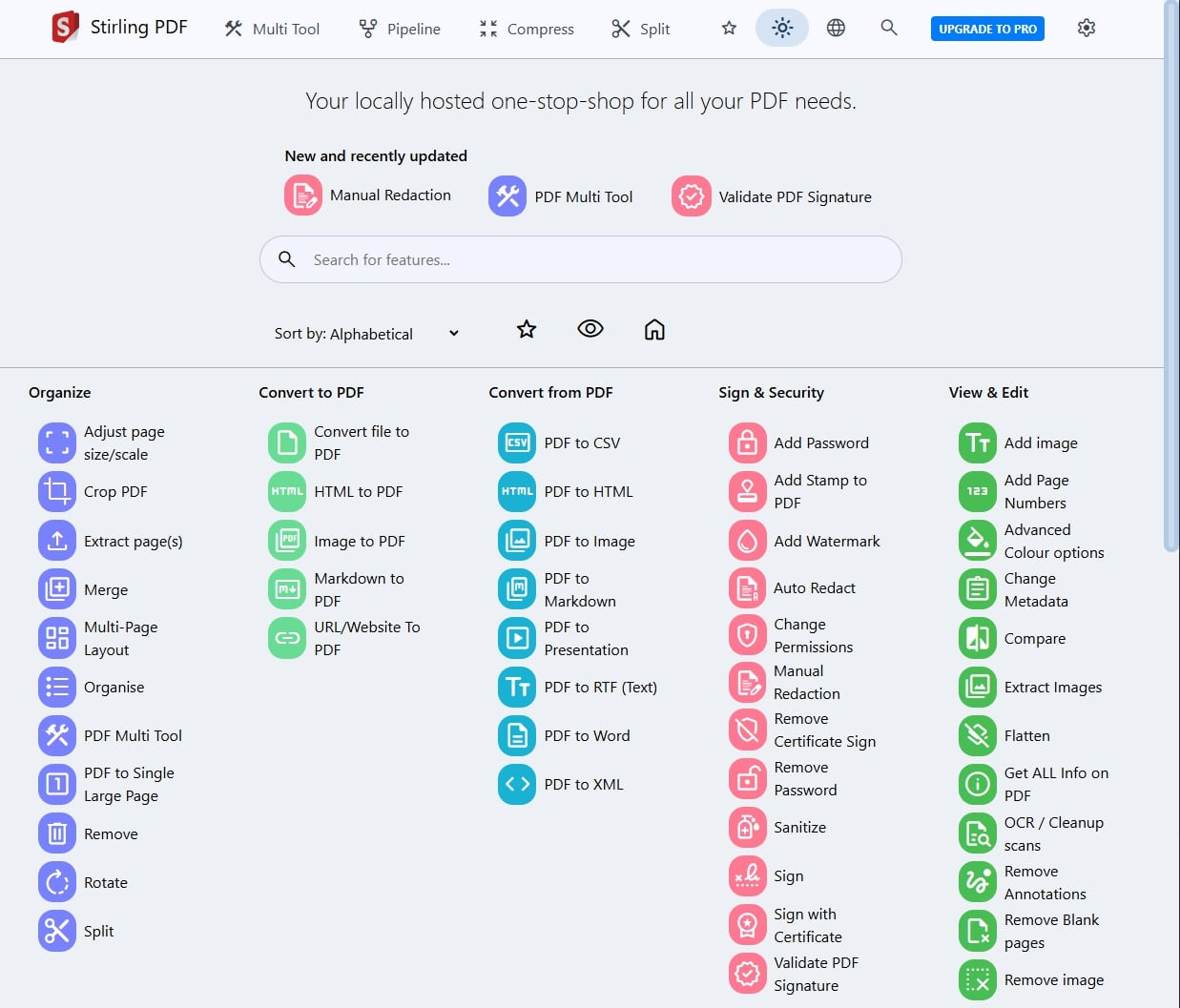

- Supporting independent research and audit institutions: The public can support and trust third-party research institutions, auditing organizations and ethics laboratories that are independent of governments and corporations. These organizations can publish objective research reports, conduct independent assessments of commercial AI systems, provide the public with authoritative and credible information, and break the information monopoly of technology companies.

- Empowering users with individual rights and choices: The governance framework should guarantee the rights of individual users, such as:
  - the right to be informed (to know that they are interacting with an AI);
  - the right to choose (to opt for a human service instead);
  - the right to an explanation (to receive an explanation of an AI's decisions);
  - the right to refuse (to reject significant decisions made solely by an AI).

  These rights turn the public from passive recipients into active participants who can exercise their rights.

Key technical tools that AI governance depends on

Effective governance is not just talk. It needs to be underpinned by a range of powerful technical tools that translate governance principles into code and system functionality.

- Explainable AI (XAI) toolsets: XAI is the key to opening the "black box" of AI. It includes techniques such as LIME and SHAP that explain the predictions of complex models in human-understandable terms (e.g., by highlighting the key input features that influenced a decision). Without interpretability, transparency, accountability and fairness audits are impossible.

- Fairness assessment and mitigation toolkits: Open-source toolkits such as IBM's AIF360 and Microsoft's Fairlearn provide dozens of established fairness metrics (e.g., group fairness, equal opportunity) and mitigation algorithms. These help developers detect, assess and mitigate algorithmic bias before and after model training, turning ethical principles into engineering practice.

- Privacy-enhancing technologies (PETs): These techniques protect data privacy at the technical level by performing computation and analysis without exposing the raw data. They mainly include:
  - federated learning (each party's raw data stays local and only model parameters are exchanged);
  - differential privacy (precisely calibrated noise is added to query results);
  - homomorphic encryption (computation is performed directly on encrypted data).

  They are central to making data "usable but not visible".

- Model monitoring and operations (MLOps) platforms: MLOps platforms continuously monitor the predictive performance, input data distributions and fairness metrics of AI models. When an abnormal deviation is detected, they raise an alert immediately. This triggers human intervention or model retraining to keep the system compliant.

- Adversarial attack detection and robustness testing tools: These tools simulate malicious attackers by feeding carefully crafted "adversarial examples" to an AI model, such as an image with perturbations imperceptible to the human eye. This tests how robust and secure the model is against such perturbations. Identifying vulnerabilities in advance allows the model to be hardened so that it cannot easily be spoofed or exploited.

- Blockchain for audit traceability: The tamper-resistant nature of blockchain technology can be used to record an AI model's key decision logs, training data hashes and version change histories. This creates a trustworthy audit trail. When a problematic AI decision must be traced back and investigated, the trail provides an iron-clad record, greatly simplifying the accountability process.
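
The group fairness metrics mentioned above are simple to state. This sketch hand-rolls two of them in plain Python so the arithmetic is visible:
- the demographic parity difference (the gap in selection rates between groups);
- the equal opportunity difference (the gap in true positive rates between groups).

Toolkits such as Fairlearn and AIF360 provide tested implementations. The function names here are our own, not those libraries' APIs.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def selection_rates(y_pred: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive predictions (1s) within each group."""
    counts: Dict[Hashable, List[int]] = defaultdict(lambda: [0, 0])  # [positives, total]
    for pred, g in zip(y_pred, groups):
        counts[g][0] += pred
        counts[g][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def true_positive_rates(y_true: Sequence[int], y_pred: Sequence[int],
                        groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Recall within each group; groups with no actual positives are omitted."""
    counts: Dict[Hashable, List[int]] = defaultdict(lambda: [0, 0])  # [true positives, actual positives]
    for t, pred, g in zip(y_true, y_pred, groups):
        if t == 1:
            counts[g][0] += pred
            counts[g][1] += 1
    return {g: tp / pos for g, (tp, pos) in counts.items()}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    rates = selection_rates(y_pred, groups).values()
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true: Sequence[int], y_pred: Sequence[int],
                                 groups: Sequence[Hashable]) -> float:
    rates = true_positive_rates(y_true, y_pred, groups).values()
    return max(rates) - min(rates)
```

A value of 0 means the groups are treated identically on that metric. Which metric matters, and what gap is tolerable, is a policy decision the code cannot make.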
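
Differential privacy's "precisely calibrated noise" can be illustrated with the classic Laplace mechanism for a counting query. Adding or removing one person changes a count by at most 1, so Laplace noise with scale 1/ε gives ε-differential privacy. The sketch below is for illustration only, and its helper names are hypothetical. Production systems use vetted libraries, because naive floating-point noise sampling has known weaknesses.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable[T], predicate: Callable[[T], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Epsilon-differentially private count via the Laplace mechanism (illustrative)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one person joining or leaving changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

Smaller ε means larger noise and stronger privacy. Each additional query answered this way consumes more of the overall privacy budget.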
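
Federated learning's "only parameters leave the device" pattern is illustrated below with a simplified version of federated averaging (FedAvg). Each client runs a few steps of gradient descent on its private data for a one-feature linear model. The server then averages the returned parameters, weighted by client sample counts. The model, learning rate, round count and client data are all illustrative assumptions. Real deployments add secure aggregation and often differential privacy, since shared parameters can still leak information.

```python
from typing import List, Sequence, Tuple

Params = Tuple[float, float]  # (weight, bias) of a one-feature linear model


def local_train(params: Params, xs: Sequence[float], ys: Sequence[float],
                lr: float = 0.1, epochs: int = 5) -> Params:
    """Full-batch gradient descent on mean squared error, run on one client's own data."""
    w, b = params
    n = len(xs)
    for _ in range(epochs):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2 * sum(residuals) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def fed_avg(initial: Params, clients: List[Tuple[Sequence[float], Sequence[float]]],
            rounds: int = 100) -> Params:
    """Server loop: broadcast parameters, collect local updates, average by sample count."""
    total = sum(len(xs) for xs, _ in clients)
    params = initial
    for _ in range(rounds):
        # Each client trains locally; only (parameters, sample count) is sent back.
        updates = [(local_train(params, xs, ys), len(xs)) for xs, ys in clients]
        params = (
            sum(p[0] * n for p, n in updates) / total,
            sum(p[1] * n for p, n in updates) / total,
        )
    return params
```

For example, suppose two clients each hold points on the line y = 2x + 1, and neither ever shares its raw points. The averaged model still converges to a weight near 2 and a bias near 1.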
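
Drift monitoring of the kind MLOps platforms perform often relies on a distribution-shift score. This sketch uses the Population Stability Index (PSI), a common choice in credit risk. It compares the binned proportions of a feature at training time with those seen in production. The bin edges and the 0.1 / 0.25 alert thresholds are industry rules of thumb assumed here, not a formal standard.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    floor: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    n = len(values)
    # Floor empty bins so the logarithm below stays finite.
    return [max(c / n, floor) for c in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               edges: Sequence[float]) -> float:
    expected = bin_proportions(reference, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(psi: float) -> str:
    # Common rule-of-thumb thresholds (an assumption, not a formal standard).
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: alert"
```

In a monitoring job, the reference sample would come from training data and the current sample from a recent window of production inputs. A "significant shift" result would trigger the alert described above.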
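
Adversarial examples can be generated with the fast gradient sign method (FGSM). It nudges every input feature by plus or minus ε in the direction that increases the model's loss. For a logistic regression model the input gradient has a closed form, (p - y) * w, so the sketch below needs no ML framework. The weights and inputs used with it are made-up toy values.

```python
import math
from typing import List, Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Logistic regression: probability of class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: Sequence[float], b: float, x: Sequence[float], threshold: float = 0.5) -> int:
    return int(predict_proba(w, b, x) >= threshold)


def _sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, epsilon: float) -> List[float]:
    """Fast gradient sign method for logistic regression with binary cross-entropy loss."""
    p = predict_proba(w, b, x)
    # Gradient of the loss with respect to the input: (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * _sign(gi) for xi, gi in zip(x, grad)]
```

Take weights `[1.0, -2.0, 0.5]`, bias 0 and input `[0.4, 0.1, 0.2]` labeled 1. The model predicts 1 (logit 0.3). `fgsm(w, 0.0, x, 1, 0.1)` returns roughly `[0.3, 0.2, 0.1]`, whose logit is about -0.05, so a change of at most 0.1 per feature flips the prediction to 0. A robustness test would run this over a validation set and report the fraction of predictions that survive.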
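
The tamper evidence that blockchains provide comes from hash chaining. Each log entry stores the hash of the previous one, so editing any past record breaks every later link. The sketch below shows only that core mechanism, using SHA-256 from Python's standard library. It is not a distributed ledger (there is no replication or consensus), and the record fields are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS_HASH
    stored = dict(record)  # shallow copy so later caller edits do not silently alter the log
    chain.append({"record": stored, "prev": prev, "hash": _digest(prev, stored)})


def verify_chain(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited record or reordered entry breaks verification."""
    prev = GENESIS_HASH
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

Whoever controls a single log could still rewrite the whole chain. Such logs are therefore typically anchored by publishing the latest hash somewhere independent, which is the role a shared blockchain plays.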

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce without permission.
