Microsoft's original WizardLM team: code big model WarriorCoder, performance new SOTA

- Paper Title: WarriorCoder: Learning from Expert Battles to Augment Code Large Language Models

- Link to paper: https://arxiv.org/pdf/2412.17395

01 Background

In recent years, Large Language Models (LLMs) have shown impressive performance on code-related tasks, with a variety of code macromodels emerging. These success stories show that pre-training on large-scale code data can significantly improve the core programming capabilities of the models. In addition to pre-training, some methods of post-training LLMs on instruction data have also led to significant improvements in the models' understanding of instructions and quality of answers. However, the effectiveness of post-training relies heavily on the availability of high-quality data, but the collection and annotation of data presents considerable challenges.

In order to address the above challenges, some methods have designed various data flywheels to generate instruction data, such as Self-Instruct, Evol-Instruct, etc. These methods can effectively enhance the code generation capability of the model by training on these data. These methods construct instruction data through various data enhancement means, and training on these data can effectively improve the code generation capability of the model. However, as shown in Fig. 1, these methods still rely on extending existing datasets and require calling private LLMs (e.g., GPT-3.5, GPT-4, etc.), making data collection costly. In addition, the limited number of data sources and LLMs used for annotation also limits data diversity and inherits the systematic bias inherent in limited private LLMs.  Figure 1

Figure 1

This paper presents the WarriorCoder, a new data flywheel training paradigm for code macromodels, where the model integrates the strengths of individual code expert macromodels by learning from expert adversarial approaches. As shown in Fig. 1, individual large models of coding experts are pitted against each other, with attackers challenging opponents in their own domains of expertise, and the target model learning from the winners of these pairs of battles. Unlike previous approaches, which mostly rely on existing open source datasets as seed data to be synthesized and augmented, warriorCoder generates data from 0 to 1 without the need of seed data, and the approach can fuse the strengths of multiple coding expert macromodels instead of just distilling the strengths of individual models. In addition, the method proposed in this paper eliminates the reliance on human involvement and private LLMs in the data collection process, and allows for the collection of high-quality and diverse training data at a very low cost. Experimental results show that warriorCoder not only achieves the current SOTA in code generation tasks, but also achieves excellent results in benchmarks such as code reasoning and libraries using, which can be called code hexagon warrior.

02 Methodology

This paper constructs an arena for code grand models. Here, state-of-the-art code-expert macromodels are pitted against each other, with each model challenging the others using the knowledge it already possesses, while the remaining models act as referees to assess the outcome of the confrontations. The target model then learns from the winners of these confrontations, gradually integrating the strengths of all competitors. In this paper, the competitors (code expert grand models) are considered as a group, and the model is optimized by the relative superiority of answers within the group, which is in line with the GRPO It has a different flavor.  Figure 2

Figure 2

2.1 Competitors Setting

The competence of the participants determines the final performance of WarriorCoder. Theoretically, the greater diversity and quality of training data obtained from a larger and stronger pool of contestants, the better the performance of the final trained model. In each round of the arena, only one pair of coders is selected as competitors, while the others act as judges. In this paper, we have selected five advanced big models within 75B from the BigCodeBench ranking - Athene-V2-Chat, DeepSeek-Coder-V2-Lite-Instruct, Llama-3.3-70B-Instruct Qwen2.5-72B-Instruct, and QwQ-32B-Preview. It is worth noting that these five big models are all open source big models, and WarriorCoder achieves excellent performance based on the confrontation of these open source big models alone. Of course, WarriorCoder is also able to learn from powerful private macromodels.

2.2 Instruction Mining from Scratch

For a pair of opponents -- A and B (where A is the attacker and B is the defender) -- the first step in the confrontation is to challenge B in an area where A excels, which requires knowledge of what A has learned during training. However, almost all current open-source grand models do not publish their core training data, which makes knowledge of what attackers are good at extremely difficult. Inspired by Magpie, this paper devises a dialog-completion based approach to mine the capabilities that the big models have mastered. Taking Qwen2.5 as an example, if we want it to generate a fast sorting algorithm, the complete prompt format is shown in Fig. 3.Prompt should include system content, user content, and special tokens related to the format, such as " ", "" and so on.  Figure 3

Figure 3

In contrast, if only the prefix part (which does not have any specific meaning by itself, as shown in Fig. 4) is input into the model, user content can be obtained by utilizing the model's complementation capability.  Figure 4

Figure 4

In this way, with different configurations of generative parameters (e.g., different temperature values and top-p values) it is possible to collect instruction data that has been learned by the model. Unlike traditional data synthesis, the instruction data collected in this paper is not synthesized by the model, but is obtained by sampling directly from the model's distribution, which avoids problems such as pattern overfitting and output distribution bias. However, the instructions may be repetitive, ambiguous, unclear, or oversimplified. To address these issues, we de-duplicate the data and use a referee model to assess their difficulty. In this paper, we categorize the difficulty into four grades: Excellent, Good, Average, and Poor. ultimately, we only use the instructions of Excellent and Good grades, and use the KcenterGreedy algorithm to further compress the instruction data.

2.3 Win-Loss Decision

Both the challenger and the defender have to generate answers based on the instruction data, and a referee (the remaining model) votes on the winner: However, relying only on textit {local scores} to select winners may introduce the problem of chance. Since voting can be affected by factors such as randomness or reviewer bias, a weaker model may receive more votes than a stronger model under certain instructions, even if its answers are not really better than the stronger model.

However, relying only on textit {local scores} to select winners may introduce the problem of chance. Since voting can be affected by factors such as randomness or reviewer bias, a weaker model may receive more votes than a stronger model under certain instructions, even if its answers are not really better than the stronger model.

To solve this problem, this paper considers both local chance and global consistency in the decision-making process. This paper introduces the concept of global score - Elo rating. It can more comprehensively reflect changes in the relative performance of the model, covering performance over time and across multiple evaluations. By introducing the Elo rating, the local performance of a model in a single game and its global performance over multiple rounds can be considered simultaneously in the evaluation process, thus providing a more robust and accurate measure of a model's overall ability, which can help to reduce the risk of a weaker model winning due to chance and unrepresentative voting.

The final response score is weighted by the Elo rating and the judges' votes:

The final response score is weighted by the Elo rating and the judges' votes: Each response is compared to the responses of all opponents, so the score represents the relative strength of the current response within the group.

Each response is compared to the responses of all opponents, so the score represents the relative strength of the current response within the group.

2.4 Final Training

In this paper, the data format is instruction, the response from each participant, and the score corresponding to each response. This data format can support various post-training methods, such as SFT, DPO, KTO and so on. In this paper, SFT is adopted, and the response with the highest score in the group is used as the gold output, so that WarriorCoder can integrate the strengths of each participant in the training, and combine the strengths of all the participants.

03 Experimental

3.1 Main results

Table 1 shows the performance of WarriorCoder on the code generation benchmark. Compared to similar work, WarriorCoder achieves SOTA on HumanEval, HumanEval+, MBPP, and MBPP+. It is worth noting that WarriorCoder achieves stunning results without the need for a private large model (e.g., GPT-4, etc.) at all.  Table 1

Table 1

WarriorCoder also achieves excellent results in code reasoning benchmark and libraries using benchmark. As shown in Tables 2 and 3, WarriorCoder performs optimally in most of the metrics and even outperforms larger models such as 15B and 34B. This also proves that the method proposed in this paper has good generalization, which allows the model to obtain many different capabilities from multiple large models of code experts.  Table 2

Table 2 Table 3

Table 3

3.2 Data analysis

This paper also analyzes the constructed training data and investigates it from three perspectives: Dependence, Diversity, and Difficulty.

Dependence

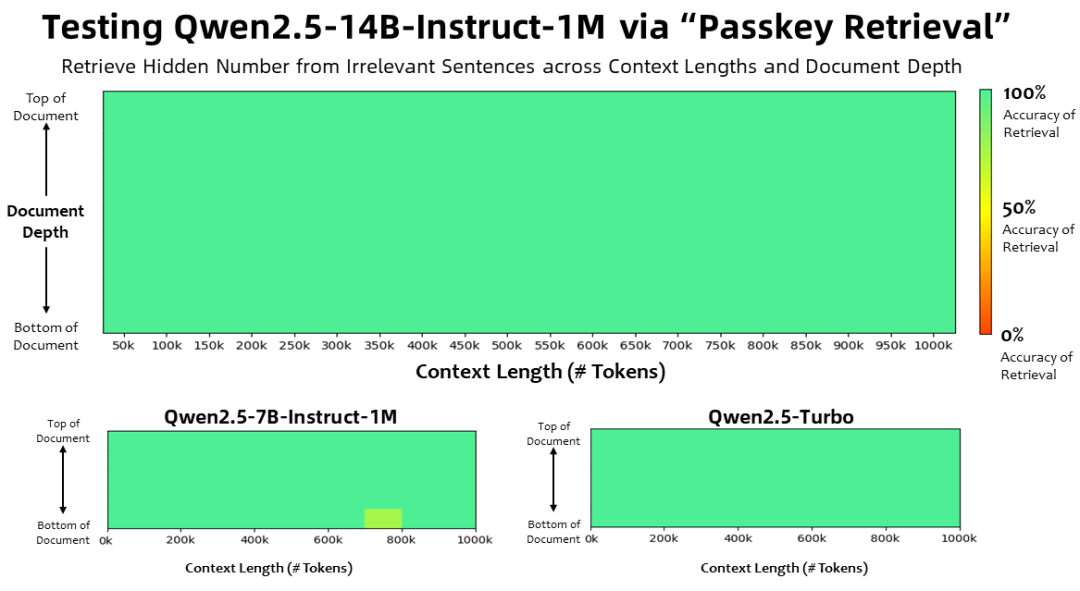

While previous work tends to extend, data augmentation based on some existing code datasets (e.g.), this paper constructs entirely new data from scratch. As shown in Fig. 5, the authors calculate the degree of overlap (ROUGE metrics) between the training data and the two commonly used code datasets, and the vast majority of the instructions have ROUGE scores of less than 0.3 with codealpaca and codeultrafeedback, suggesting that they differ significantly in content from those in the existing datasets. Notably, none of the mined instructions have ROUGE metrics above 0.6, which further proves that these instructions originate from the internal distribution of the expert macromodel rather than a simple copy or extension of the existing training data. As a result, these instructions are more novel and have a higher degree of independence, which is particularly valuable for training.  Figure 5

Figure 5

Table 4 shows the composition of the training data, covering seven different code tasks, which is why WarriorCoder is able to perform well on several benchmarks. It is worth noting that code reasoning only accounts for 2.9% which makes WarriorCoder perform amazingly well on the relevant benchmarks, which demonstrates that the method proposed in this paper has a great potential, and if the data is mined to target the weaknesses of the model, it can make the model even more capable. In addition, the heat map in Fig. 6 also shows the results of the contestants' confrontation, even the strongest model has bad performance at times, and WarriorCoder only learns from the winner response with the highest score under the current instruction.  Table 4

Table 4 Figure 6

Figure 6

Figure 7 shows the difficulty ratio of the instructions generated by the different models. The majority of instructions are rated as good, with scores between 6 and 8. Instructions rated as excellent (scores of 9-10) make up only a small portion of the dataset, indicating that highly complex or advanced tasks are relatively rare. The authors excluded instructions with scores below 6 from the training set because they tended to be either too simple or too vague, which would have been detrimental to the training phase and could have even weakened the model's performance and generalization ability.  Figure 7

Figure 7

04 Related Resources

Although the authors are not currently open-sourcing the model, we found that someone has already reproduced the authors' work at the following address:

Link to project: https://huggingface.co/HuggingMicah/warriorcoder_reproduce

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...