The Future is Here: An In-Depth Look at the "Model as Product" Era

Over the past few years, there has been endless debate about the next phase of AI development. Agents? Stronger reasoning? True multimodal fusion? All kinds of predictions have emerged, each signaling a new shift in the AI field.

Now, I think it's time to make a definitive call: the era of "model as product" has arrived. This is not just a trend; it is a deep insight into the current AI landscape.

Whether you look at the cutting edge of academic research or the actual direction of the commercial market, all signs point clearly in this direction.

- Scaling general-purpose models is hitting a bottleneck: The "bigger is better" approach to general-purpose large models is increasingly showing its limits. This is not only a technical challenge but also a question of cost-effectiveness. As the industry discussion after GPT-4 revealed, the relationship between gains in model capability and growth in compute cost is not linear; it is a widening scissors gap. Model capability may grow only linearly, while the cost of training and running these behemoths climbs exponentially. Even OpenAI, with all its technical talent and resources, is struggling to find a business model that covers its enormous investment, let alone other vendors. This suggests that the era of improving AI capabilities indefinitely by scaling up parameter counts alone may be over. We need more efficient, cost-effective, and sustainable paths for AI development.

- Opinionated training is surging, far beyond expectations: In stark contrast to the stalling progress of general-purpose models, the "opinionated training" approach shows remarkable potential. This paradigm emphasizes fine-tuning and training models for specific tasks and application scenarios. The convergence of reinforcement learning and reasoning techniques has reinvigorated targeted training. Instead of simply "learning the data," models are now beginning to "learn the task." This is a qualitative leap that marks a shift in how we think about AI development. The striking performance of small models in math, the evolution of code models from code generators into autonomous managers of entire codebases, and Claude playing complex games with almost no information input all demonstrate the power of opinionated training. This "small but refined," "specialized but strong" route may become the mainstream for future AI applications.

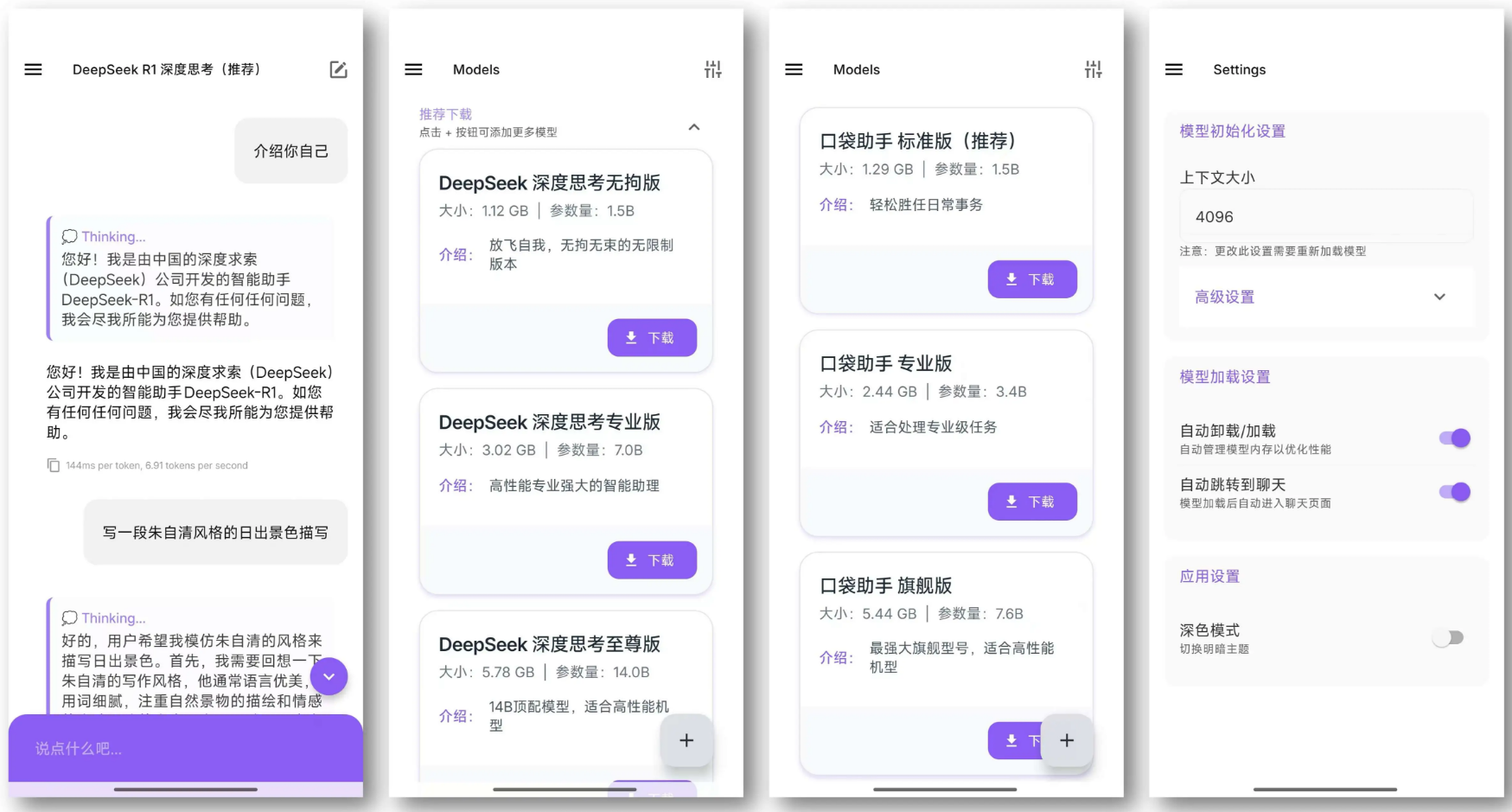

- Model inference costs are falling off a cliff: The cost of inference, once seen as a major roadblock to AI adoption, has also dropped dramatically. Thanks to breakthroughs in model optimization and inference acceleration by companies such as DeepSeek, the barrier to deploying and applying AI models has fallen sharply. DeepSeek's latest research suggests that existing GPU capacity could, in theory, let billions of people around the world each process 10,000 tokens per day with frontier models. This means compute is no longer the key constraint on widespread AI adoption, and the era of large-scale AI deployment is accelerating. For model providers, the pay-per-use business model of simply selling tokens can no longer capture the full value of their models. They must move up the value chain, for example by offering more vertical, specialized model services and solutions.
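The DeepSeek figure can be turned into an aggregate throughput with a line of arithmetic. The sketch below assumes a world population of 8 billion, a round number that is not part of the original claim; it computes only total demand and says nothing about how many GPUs would be required, since the article gives no per-GPU throughput.

```python
# Back-of-envelope sketch: aggregate token demand if every person processed
# 10,000 tokens per day. ASSUMPTION: a world population of 8 billion.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def required_throughput(people: int, tokens_per_person_per_day: int) -> tuple[int, float]:
    """Return (total tokens per day, average tokens per second) for the population."""
    tokens_per_day = people * tokens_per_person_per_day
    tokens_per_second = tokens_per_day / SECONDS_PER_DAY
    return tokens_per_day, tokens_per_second


daily, per_second = required_throughput(8_000_000_000, 10_000)
print(f"{daily:.2e} tokens/day, {per_second:.2e} tokens/s")
# prints: 8.00e+13 tokens/day, 9.26e+08 tokens/s
```

Under that assumption, the claim amounts to sustaining roughly 926 million tokens per second on average, spread across the world's existing inference fleet.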

All these signs indicate that the center of gravity of the AI industry is shifting from "general-purpose models" to a new paradigm of "model as product." This is not just an adjustment of the technical route but a profound change in business models and industry structure. The traditional "apps are king" philosophy may need to be revisited. For a long time, investors and entrepreneurs have focused on the AI application layer, believing that application innovation is the key to commercializing AI. In the "model as product" era, however, the application layer may be the first to be automated and disrupted. Competition will increasingly center on the models themselves: whoever has more advanced, more efficient, and more specialized models will hold the high ground.

The shape of future models: specialized, vertical, and delivered as services

Over the past few weeks, we've seen two iconic products of the "model as product" paradigm: OpenAI's DeepResearch and Anthropic's Claude 3.7 Sonnet. Both reflect the trends of the new generation of models and foreshadow what future models may look like.

OpenAI's DeepResearch has drawn a lot of attention since its release, along with a good deal of misinterpretation. Many people see it simply as a "wrapper" around GPT-4, or as a GPT-4-based search-enhancement tool. That is far from the truth. OpenAI actually trained an entirely new model specialized for research and information-retrieval tasks. DeepResearch is not a "workflow" that relies on external search engines or tool calls, but a true "research language model." It can search, browse, integrate information, and generate reports end to end, autonomously, without any external intervention. Anyone who has used DeepResearch can feel the huge difference between it and traditional LLMs or chatbots. The reports it generates are clearly structured, rigorously argued, and reliably sourced, reflecting a professionalism and depth that far exceed traditional search tools and LLMs.

By contrast, other products on the market that call themselves "Deep Search," such as those from Perplexity and Google, pale in comparison. As Hanchung Lee points out, these products are still essentially generic models with some light fine-tuning and extra features layered on top, lacking deep optimization and systematic design for the search task. DeepResearch marks the first real implementation of the "model as product" concept and shows the direction for specialized, vertical models.

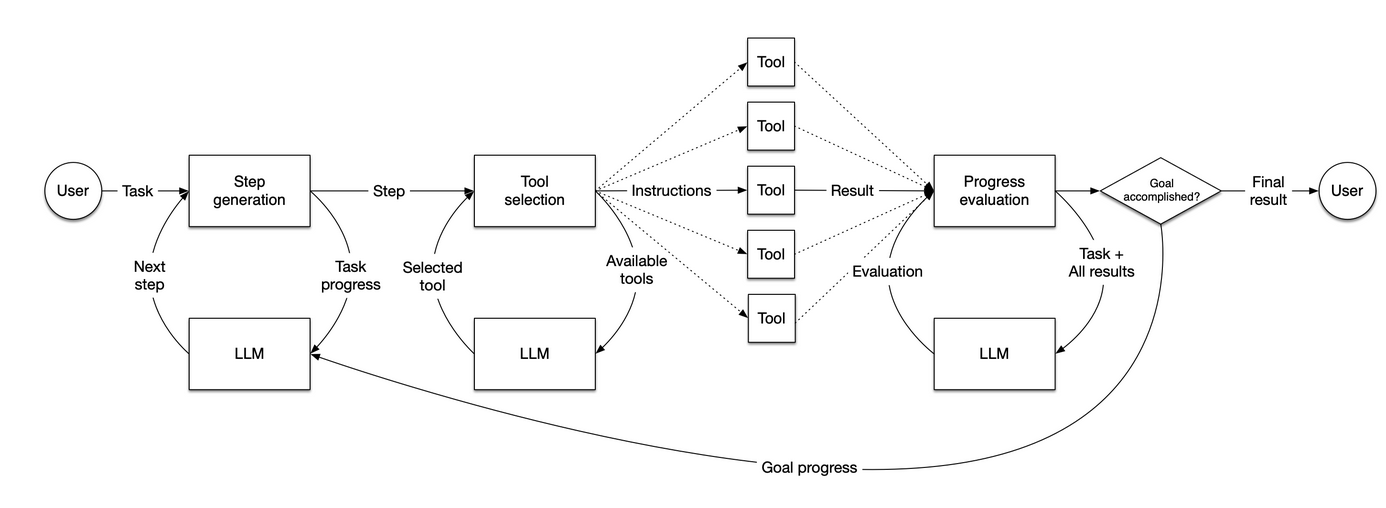

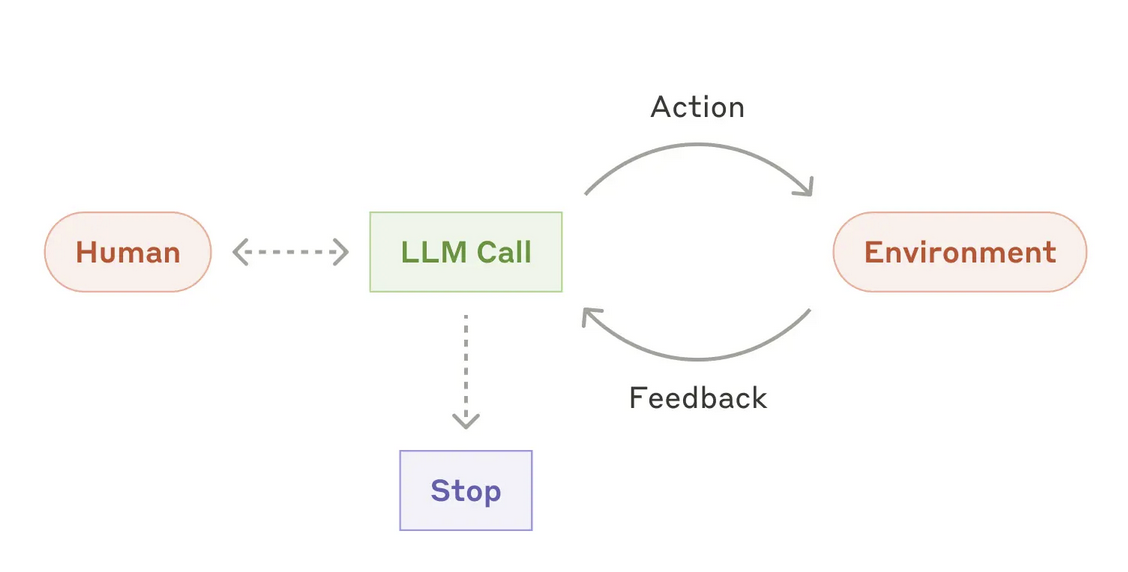

Anthropic is also actively pursuing a "model as product" strategy and has offered its own view of agent models. In a research report published last December, Anthropic redefined what an agent model is. Anthropic argues that a true agent should have autonomy: it should be able to accomplish the target task on its own, rather than serving as just one link in a workflow. Like DeepResearch, Anthropic emphasizes that an agent model should carry out the entire task-execution process "internally," including dynamically planning the task flow and autonomously choosing and invoking tools, rather than relying on predefined code paths and external orchestration.

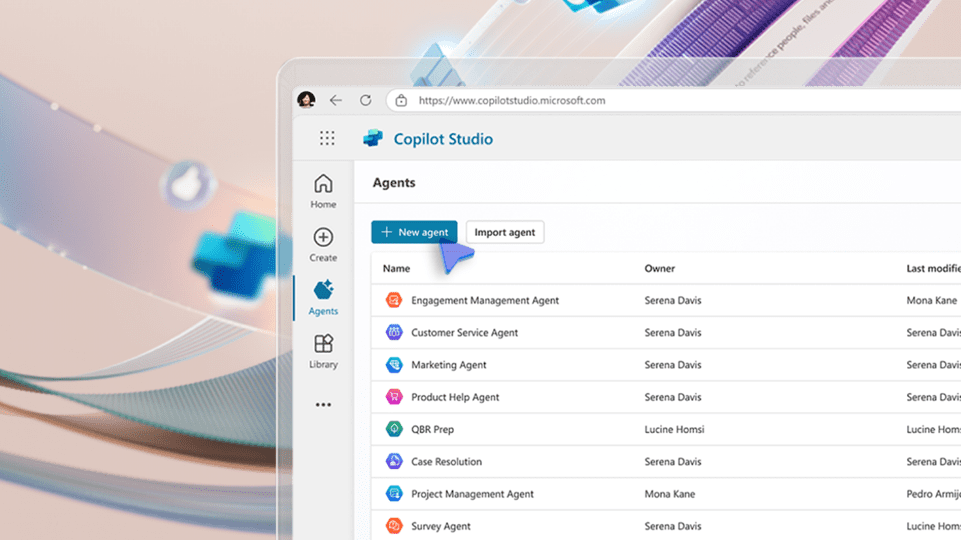

A large number of agent startups are emerging today, but most of the "agents" they are building are still stuck at the "workflow" level. These "agents" are essentially predefined code flows that chain LLMs and various tools together to perform tasks in a fixed sequence of steps. This "pseudo-agent" approach may have some value in certain verticals, but it remains far from the "real agents" defined by Anthropic and OpenAI. Truly autonomous systems require rethinking and innovation at the model level to make that qualitative leap.
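The contrast between a workflow and an agent can be sketched in a few lines of Python. Everything here is hypothetical: the tools `search` and `summarize` are stubs, and `toy_policy` is a hard-coded placeholder for what, in a real agent, would be a trained model deciding the next step. It illustrates the distinction only; it is not Anthropic's code or any framework's API.

```python
from typing import Callable, Optional


# Hypothetical stub tools; a real system would call a search engine, a browser, etc.
def search(query: str) -> str:
    return f"results for {query!r}"


def summarize(text: str) -> str:
    return f"summary of {text!r}"


TOOLS: dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


def workflow_pipeline(task: str) -> list[str]:
    """Workflow style: the developer fixes which tools run and in what order."""
    results = search(task)        # step 1 is always a single search
    summary = summarize(results)  # step 2 is always a summary
    return [results, summary]


# A policy maps (task, history) to the next (tool_name, argument), or None to stop.
Policy = Callable[[str, list[str]], Optional[tuple[str, str]]]


def agent_loop(task: str, policy: Policy, max_steps: int = 5) -> list[str]:
    """Agent style: the policy (the model) picks each tool call and decides when to stop."""
    history: list[str] = []
    for _ in range(max_steps):  # safety cap on the number of tool calls
        action = policy(task, history)
        if action is None:  # the model judges the task complete
            break
        tool_name, argument = action
        history.append(TOOLS[tool_name](argument))
    return history


def toy_policy(task: str, history: list[str]) -> Optional[tuple[str, str]]:
    """Hard-coded placeholder for a trained model: two searches, one summary, then stop."""
    if len(history) < 2:
        return ("search", f"{task} (query {len(history) + 1})")
    if len(history) == 2:
        return ("summarize", " | ".join(history))
    return None


print(workflow_pipeline("RL for code models"))
print(agent_loop("RL for code models", toy_policy))
```

In `workflow_pipeline` the sequence of tools is fixed in code. In `agent_loop` the same tools are available, but the number and order of calls come from the policy, which is precisely the part the "model as product" view proposes to move into training.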

The release of Anthropic's Claude 3.7 further confirms the "model as product" trend. Claude 3.7 is deeply optimized for complex coding scenarios and performs excellently at code generation, code understanding, and code editing. Notably, even Devin, a highly complex "AI programmer" workflow, achieves significant improvements on SWE benchmarks when powered by Claude 3.7. This suggests that the capability of the model itself is the key to building great applications. Rather than spending great effort designing complex workflows and external tools, resources should go into developing and optimizing the model itself.

The Pleias team's work on Retrieval-Augmented Generation (RAG) also reflects the "model as product" idea. Traditional RAG systems usually consist of multiple separate yet interdependent workflows: data routing, text chunking, result reranking, query understanding, query expansion, context fusion, search optimization, and so on. The lack of organic integration between these stages makes the system fragile and expensive to maintain and optimize. The Pleias team is using the latest training techniques to fold these stages into models. Their design centers on two core models: one handles data preprocessing and knowledge-base construction, and the other handles information retrieval, content generation, and report output. The two models work together to carry out the full RAG process. This approach requires a new model architecture, a carefully designed synthetic-data pipeline, and custom reinforcement-learning reward functions, which makes it genuine technical innovation and research. By turning the RAG system into models, the architecture can be greatly simplified, performance and stability improved, and deployment and maintenance costs reduced, ultimately allowing RAG to be applied at scale.
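To make concrete how many separately tuned stages a traditional RAG pipeline has, here is a toy sketch of the kind of system Pleias aims to replace; it is not Pleias's approach. Every choice in it is hypothetical: the synonym table, chunk size and overlap, word-overlap retrieval, and a Jaccard "reranker" stand in for the embeddings and learned rerankers real systems use. Each of those knobs is a place where the pipeline can silently break.

```python
import re

SYNONYMS = {"rag": {"retrieval"}, "llm": {"model"}}  # hypothetical hand-maintained table


def tokens(text: str) -> set[str]:
    """Lowercased word set; real systems would use a tokenizer or embeddings."""
    return set(re.findall(r"\w+", text.lower()))


def expand_query(query: str) -> set[str]:
    """Stage 1, query expansion: add synonyms from the hand-written table."""
    terms = tokens(query)
    for term in list(terms):
        terms |= SYNONYMS.get(term, set())
    return terms


def chunk(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Stage 2, chunking: overlapping windows of `size` words."""
    step = size - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def overlap_score(terms: set[str], passage: str) -> int:
    """Stage 3 scoring: how many query terms appear in the passage."""
    return len(terms & tokens(passage))


def jaccard(terms: set[str], passage: str) -> float:
    """Stage 4 scoring: Jaccard similarity, used here as a crude reranker."""
    words = tokens(passage)
    union = terms | words
    return len(terms & words) / len(union) if union else 0.0


def rag_context(query: str, corpus: list[str], k: int = 3, final_k: int = 1) -> str:
    terms = expand_query(query)
    chunks = [c for doc in corpus for c in chunk(doc)]
    retrieved = sorted(chunks, key=lambda c: overlap_score(terms, c), reverse=True)[:k]  # stage 3
    reranked = sorted(retrieved, key=lambda c: jaccard(terms, c), reverse=True)          # stage 4
    return "\n".join(reranked[:final_k])  # stage 5: context handed to the generator LLM


corpus = [
    "retrieval augmented generation combines search with a language model",
    "cats sleep most of the day",
]
print(rag_context("rag", corpus))
# prints: retrieval augmented generation combines search with a language
```

Note that the query "rag" only finds the right passage because of the hand-written synonym table; remove that entry and retrieval fails. This kind of fragility across loosely coupled stages is what folding the pipeline into trained models is meant to remove.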

To summarize, the core idea of "model as product" is "displacing complexity." Complex problems that would otherwise have to be solved at the application layer are handled in advance at the model level, through training. The model learns to cope with diverse scenarios and edge cases during training, which makes deployment and application simpler and more efficient. In the future, the core value of AI products will lie more in the model itself than in fancy features and complex workflows at the application layer, and model trainers will become the dominant players in both creating and capturing value. Anthropic's goal with Claude is to disrupt current workflow-based "pseudo-agent" systems, such as the basic agent frameworks provided by LlamaIndex, and replace them with a more advanced, model-based architecture, enabling smarter, more autonomous, and easier-to-use AI applications through more powerful models.

Survival in the "model as product" era: Train your own model, or be swallowed by one?

To be clear, the strategic positioning of the large AI labs is not a covert operation; it is out in the open. Even if their strategies sometimes seem less than transparent, their core intentions are clear: they will start from the model layer, push upward into the application layer, build end-to-end AI products and services, and seek to dominate the value chain. The business implications are far-reaching. Naveen Rao, VP of Gen AI at Databricks, hits the nail on the head:

In the next 2-3 years, all closed-source AI model providers will gradually stop selling APIs directly. Only open-source models will continue to be served through APIs. The goal of closed-source model providers is to build differentiated, non-generic AI capabilities, and to deliver those capabilities they need to create an excellent user experience and application interface. The AI product of the future will no longer be just the model itself, but a complete application that integrates the model, the interface, and specific features.

This means the honeymoon period of "division of labor" between model providers and app developers is over. Application developers, especially "wrapper" companies that build on third-party model APIs, face unprecedented threats to their survival. The competitive landscape of the AI industry may evolve in the following directions:

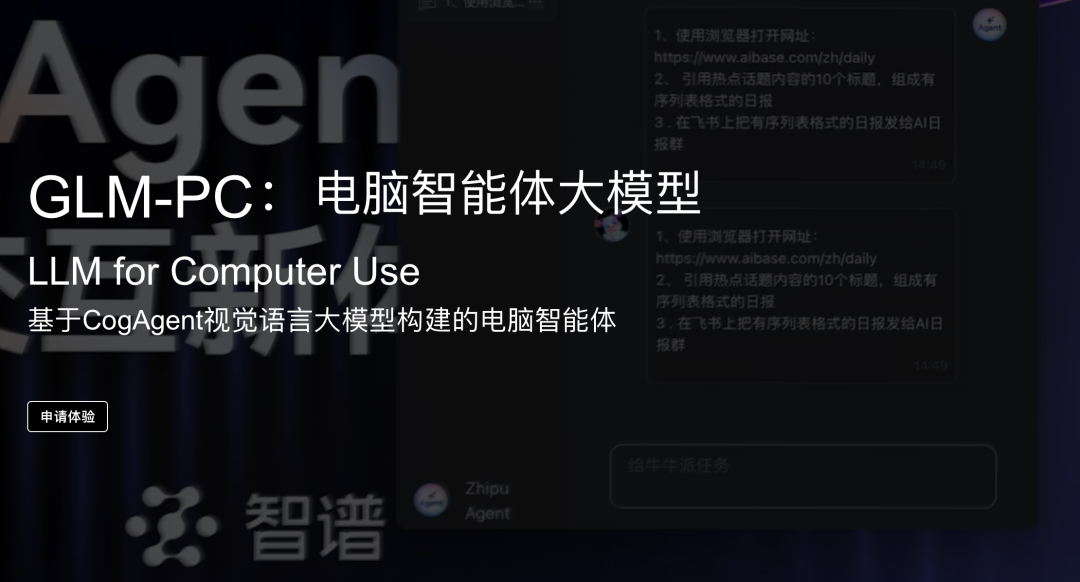

- Model providers build their own applications to capture market share: Claude Code and DeepResearch signal that model providers are aggressively expanding into applications. DeepResearch is not offered as an API; instead it is bundled into OpenAI's premium subscription as a core feature that raises the value of the service. Claude Code is a lightweight terminal tool that lets developers use the Claude 3.7 model directly from the command line. These moves show that model providers are accelerating the construction of their own application ecosystems to serve users directly and capture market share. Notably, some application wrappers, such as Cursor, have seen performance degradation and user churn after switching to Claude 3.7. This further supports the idea that the model is the product: a true AI agent focuses on maximizing the model's own capabilities rather than accommodating existing workflows.

- Application wrappers pivot toward in-house models: Facing this attack from above by model providers, some leading application wrappers have begun to transform themselves by building their own model-training capabilities. Despite a late start and relative weakness in model training, they are actively laying the groundwork. For example, Cursor emphasizes the value of its small code-autocompletion model; Windsurf has an in-house, low-cost code model, Codium; and Perplexity has long relied on a homegrown classifier for traffic routing and has begun training its own DeepSeek variant for search. These moves show that application wrappers realize that relying on API calls alone will not secure a foothold in future competition; they need some in-house model capability to stay competitive.

- Where do small wrappers go from here? UI innovation may be the breakout: For the many small and medium-sized application wrappers, the room to survive will shrink further. If the large model labs scale back their API offerings across the board, these small wrappers may be forced to turn to more neutral third-party inference providers. As model capabilities converge, innovation in UI (user interface) and user experience may become the key to breaking through. The value of UI has long been severely underestimated in AI. As general-purpose models become more powerful and deployment becomes increasingly streamlined, an excellent user interface will be a key factor in making a product competitive and attracting users. In scenarios such as RAG especially, model capability may no longer be the deciding factor; ease of use, interactivity, and user experience will matter more.

In short, most application wrappers face an either/or choice: either become a model trainer (training), or end up as training data for someone else's model (being trained on). Today, everything these wrappers do amounts, in a sense, to free market research and free data annotation for the large model labs, because their user interaction data and feedback ultimately flow back to the model providers, helping them improve their models, refine their products, and further entrench their market position.

The future of application wrappers also depends heavily on how investors see things. Worryingly, the current investment climate seems biased against model training. Many investors still cling to the traditional "application is king" view and do not recognize the value and potential of model training. This mismatch could hinder the healthy development of AI and even cause the Western AI industry to fall behind. As a result, some application wrappers may even "hide" their model-training work so as not to be misread by investors; Cursor's small models and Codium, for example, have so far received little publicity. This "apps over models" investment bias deserves serious reflection and vigilance across the industry.

Underrated Reinforcement Learning: The Key to the Future of AI Competition

In today's AI investment landscape, "reinforcement learning is not priced in" is a widespread phenomenon. Behind it lies the investment community's misreading of where AI technology is heading and its neglect of the strategic importance of reinforcement learning.

VCs typically base their investment decisions in AI on several assumptions:

- The application layer is where the value lies; the model layer is just infrastructure: Investors generally believe the real value of AI is realized at the application layer and that application innovation is the key to disrupting existing markets. In this view, the model layer is merely infrastructure that exposes APIs and has no real competitive moat.

- Model API prices will continue to fall and application wrappers will continue to benefit: Investors expect that model providers will continue to reduce the price of API calls in order to gain market share, thereby boosting the profitability of application wrappers.

- Closed-source model APIs are sufficient for all applications: Investors believe that applications built on closed-source model APIs can meet the needs of every scenario, even in sensitive industries with strict requirements for data security and autonomy.

- Model training is expensive, slow, and risky, so it is better to just buy API access: Investors generally see building in-house model-training capability as a thankless investment. Model training requires huge sums of money, long timelines, and deep technical expertise, and its risk is far higher than buying model APIs and shipping applications quickly.

However, these assumptions are increasingly untenable in the "model as product" era. I worry that if the investment community clings to these outdated notions, it will miss strategic opportunities in AI and misallocate market resources. The current AI investment boom could turn into a risky gamble: a market failure that does not correctly price in the latest technical developments, especially reinforcement learning.

Venture capital (VC) funds are supposed to seek out "uncorrelated" investments for their portfolios. The goal of a VC is not to beat the S&P 500, but to build a diversified portfolio that reduces overall risk and ensures that some investments still pay off in a down cycle. Model training fits this uncorrelated profile exactly. With major Western economies facing recession risk, AI model training, a field with great potential for innovation and growth, is only weakly correlated with the macroeconomic cycle. Yet model-training companies commonly struggle to raise money, which runs against the basic logic of venture capital. Prime Intellect is one of the few Western AI model-training startups with the potential to grow into a frontier AI lab. Despite technical breakthroughs, including training the first decentralized LLM, its funding is comparable to that of an ordinary wrapper app. This "bad money drives out good" dynamic is thought-provoking.
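The diversification argument rests on the standard two-asset portfolio formula, where portfolio variance is w1²σ1² + w2²σ2² + 2·w1·w2·ρ·σ1·σ2 and ρ is the correlation between the two assets. The sketch below uses purely hypothetical numbers (two assets with 30% volatility each, split 50/50); they are not data on AI startups or on any real fund.

```python
import math


def portfolio_volatility(w1: float, sigma1: float, sigma2: float, rho: float) -> float:
    """Volatility of a two-asset portfolio: weight w1 in asset 1, 1 - w1 in asset 2."""
    w2 = 1.0 - w1
    variance = (w1 * sigma1) ** 2 + (w2 * sigma2) ** 2 + 2 * w1 * w2 * rho * sigma1 * sigma2
    return math.sqrt(variance)


# Hypothetical example: equal weights, both assets at 30% volatility.
for rho in (1.0, 0.5, 0.0):
    print(f"rho={rho}: {portfolio_volatility(0.5, 0.30, 0.30, rho):.3f}")
# prints:
# rho=1.0: 0.300
# rho=0.5: 0.260
# rho=0.0: 0.212
```

With perfectly correlated holdings, diversification buys nothing; with uncorrelated ones, the same two assets produce roughly 29% less portfolio volatility. That is the sense in which a weakly correlated sector is worth holding even if its standalone returns are uncertain.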

Outside a few large labs, the ecosystem for training AI models remains very fragile and marginal. Worldwide, only a handful of innovative companies focus on model training. Prime Intellect, Moondream, Arcee, Nous, Pleias, Jina, the (very small) HuggingFace pre-training team, and a few others make up almost the entire open-source model-training space. Together with a handful of research institutions such as Allen AI and EleutherAI, they build and maintain the foundations of today's open-source AI training infrastructure. In Europe, as far as I know, 7-8 LLM projects plan to train models on the Common Corpus and the pre-training tools developed by the Pleias team. The thriving open-source community partly makes up for the lack of commercial investment, but it cannot fully address the underlying challenges facing the model-training ecosystem.

OpenAI also seems keenly aware of the importance of "vertical RL." Recently there have been rumors that OpenAI executives are dissatisfied with a Silicon Valley startup scene that is "light on RL and heavy on applications." The message likely comes from Sam Altman himself and may be reflected in the next Y Combinator batch. This points to a possible shift in OpenAI's partnership strategy: future partners may no longer be just API customers, but "collaborating contractors" involved in the early stages of model training. Model training will move from behind the scenes to center stage and become a core part of AI competition.
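To make "vertical RL" a little more concrete: in a narrow domain, the reward can often be computed by a program instead of a human rater, which is part of what makes RL on specific tasks practical. The sketch assumes a math task whose completions end with a line of the form "Answer: <number>". The format and the 0.1 partial credit are hypothetical choices, not OpenAI's method or any particular lab's reward.

```python
import re
from typing import Optional

# Assumed output convention: the completion ends with a line like "Answer: 42".
ANSWER_RE = re.compile(r"Answer:\s*(-?\d+(?:\.\d+)?)\s*$")


def extract_answer(completion: str) -> Optional[str]:
    """Return the final numeric answer, or None if the completion breaks the format."""
    match = ANSWER_RE.search(completion.strip())
    return match.group(1) if match else None


def reward(completion: str, reference: str) -> float:
    """Rule-based reward: 1.0 if correct, 0.1 if well-formatted but wrong, 0.0 if unparseable."""
    answer = extract_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if float(answer) == float(reference) else 0.1


samples = ["2 + 2 = 4.\nAnswer: 4", "Answer: 5", "I think it's four."]
print([reward(s, "4") for s in samples])  # prints: [1.0, 0.1, 0.0]
```

In an actual training run, scalar rewards like these would be fed to a policy-optimization algorithm that updates the model; designing such task-specific checks is where domain expertise enters vertical RL.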

The "model as product" era implies a paradigm shift in AI innovation. The era of going it alone is over; open cooperation and collaborative innovation will become the norm. Search and code were the first domains where "model as product" took hold, largely because their application scenarios are relatively mature, market demand is clear, and the technical path is well defined, which made the low-hanging fruit easy to pick. Innovative products such as Cursor could be iterated and shipped within just a few months. However, many higher-value future AI applications, such as bringing intelligence to rule-based systems, are still at an early exploratory stage, with major technical challenges and unclear market demand; they are hard to crack with quick, short-term efforts. These areas need small, highly focused teams with interdisciplinary backgrounds making long-term, intensive R&D investments. Such "small and beautiful" teams may become an important force in the AI industry and may be acquired by large tech companies once they have built up early technical assets, letting them cash out. A similar model of collaboration could emerge in the UI space: companies focused on UI innovation could build differentiated, competitive AI products by forming strategic partnerships with large model labs that give them exclusive API access to closed-source specialized models.

Amid the "model as product" wave, DeepSeek's strategy is arguably even more forward-looking and ambitious. DeepSeek's goal is not just "model as product" but "model as common infrastructure layer." Like OpenAI and Anthropic, DeepSeek founder Liang Wenfeng has publicly laid out his vision for DeepSeek:

We believe that the current phase is an explosion of technological innovation, not an explosion of applications. Only once a complete upstream and downstream AI industry ecosystem is in place will DeepSeek not need to build applications itself. Of course, DeepSeek can build applications if necessary, but that is not a priority. DeepSeek's core strategy has always been to stick to technological innovation and build a powerful common AI infrastructure platform.

In the "model as product" era, focusing only on the application layer is like "fighting the next war with the generals of the last one." Worryingly, the Western AI industry still seems stuck in the old "application is king" mindset, lacking sufficient understanding of and preparation for the new trends in AI. Many may not yet realize that the "war" in AI has quietly entered a new stage. Whoever embraces the new "model as product" paradigm first will have a head start in the AI competition to come.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
