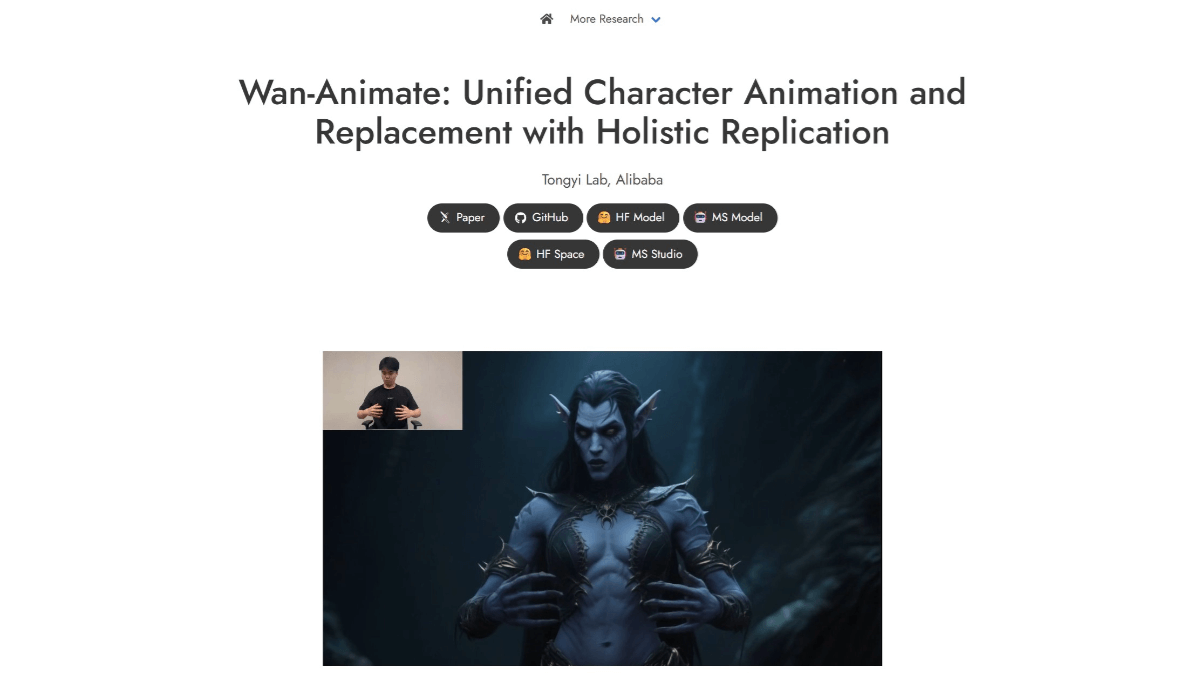

Wan2.2-Animate: Tongyi Wanxiang's Open-Source Model for Character Motion Generation

What is Wan2.2-Animate?

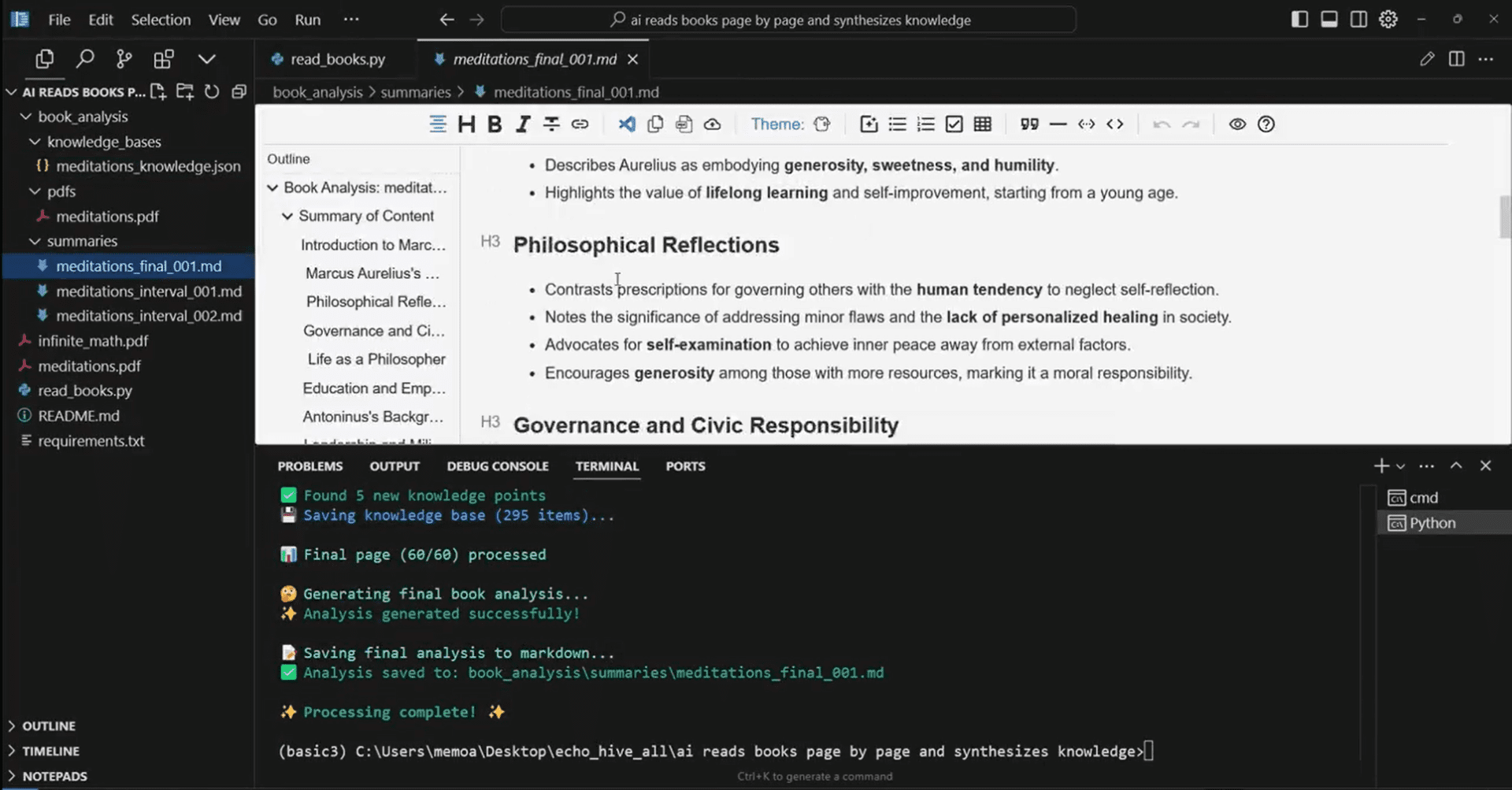

Wan2.2-Animate is an open-source character animation model from Tongyi Wanxiang. It supports two modes: action imitation and role-playing (character replacement). Users provide a character image and a reference video. In action imitation mode, the model transfers the movements and expressions of the person in the video onto the character in the image, bringing the static character to life. In role-playing mode, it replaces the character in the video with the character from the image while preserving the original video's movements, expressions, and environment. The model can animate human figures, anime characters, and animal photos, which makes it useful for short-video creation, dance template generation, animation production, and similar work.

To build the model, the team constructed a large-scale character video dataset covering speech, facial expressions, and body movements. They then post-trained the Tongyi Wanxiang image-to-video model on this dataset, so a single model supports both inference modes. Skeletal signals drive body movements and implicit features drive facial expressions. A motion retargeting module works alongside these signals so that movements and expressions are reproduced accurately.

Key Features of Wan2.2-Animate

- Motion and Expression Transfer: Given a character image and a reference video, the model transfers the movements and expressions of the person in the video to the character in the image, bringing the static character to life.

- Character Replacement: Replaces the character in the video with the character from the image while preserving the original video's movements, expressions, and environment.

- Support for Multiple Character Types: Animates human figures, anime characters, and animal photos, covering a wide range of applications.

- Large-Scale Dataset: The team built a large-scale character video dataset covering speech, facial expressions, and body movements to improve model performance.

- Unified Representation Format: Character information, environment information, and motion are standardized into a single representation, so one model supports both inference modes.

- Accurate Reproduction: Skeletal signals drive body movements and implicit features drive facial expressions. A motion retargeting module works with both to replicate movements and expressions accurately.

- Lighting Fusion: In replacement mode, a separate lighting fusion LoRA adjusts the inserted character's lighting to match the scene.

Core Benefits of Wan2.2-Animate

- Efficient Motion Transfer: The model accurately transfers the motion and expressions in the reference video to the target character image. The results look natural and smooth, so the static character appears vivid and expressive.

- Natural Integration with the Environment: When replacing a character, the model preserves the original video's movements, expressions, and environment. The new character blends naturally into the background without looking out of place.

- Adaptability to Multiple Character Types: Supports human figures, anime characters, and animals, so it serves creative needs across many fields.

- Large-Scale Training Data: The model is trained on a large, diverse character video dataset covering speech, facial expressions, and body movements, which strengthens its learning and generalization.

- One Model, Two Modes: Character information, environment information, and motion are standardized into a unified representation format. As a result, a single model supports both the action imitation and role-playing inference modes, which lowers the cost of using it.

- Precise Reproduction: Skeletal signals drive body movements and implicit features drive facial expressions. Combined with the motion retargeting module, they replicate movements and expressions accurately and improve the quality and realism of the generated videos.

- Optimized Lighting Fusion: In replacement mode, a dedicated lighting fusion LoRA keeps the character's lighting consistent with the scene, so the generated video looks more natural and visually coherent.

What are the official links for Wan2.2-Animate?

- Project website: https://humanaigc.github.io/wan-animate/

- GitHub repository: https://github.com/Wan-Video/Wan2.2

- Hugging Face model page: https://huggingface.co/Wan-AI/Wan2.2-Animate-14B

Who Can Use Wan2.2-Animate?

- Content Creators: Can quickly produce creative videos such as animations, advertisements, and short videos, improving creative efficiency and content variety.

- Animators: Can give static characters motion, which simplifies the animation workflow, reduces production costs, and improves animation quality.

- Dance Enthusiasts and Creators: Can generate personalized dance templates for creating distinctive dance videos.

- Anime Enthusiasts: Can animate their favorite anime characters for personalized and enjoyable creations.

- Educators: Can create teaching videos, for example to demonstrate complex movements, making lessons more effective and engaging.

- Advertising and Marketing Professionals: Can quickly generate engaging ad videos that make campaigns more creative and appealing.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
