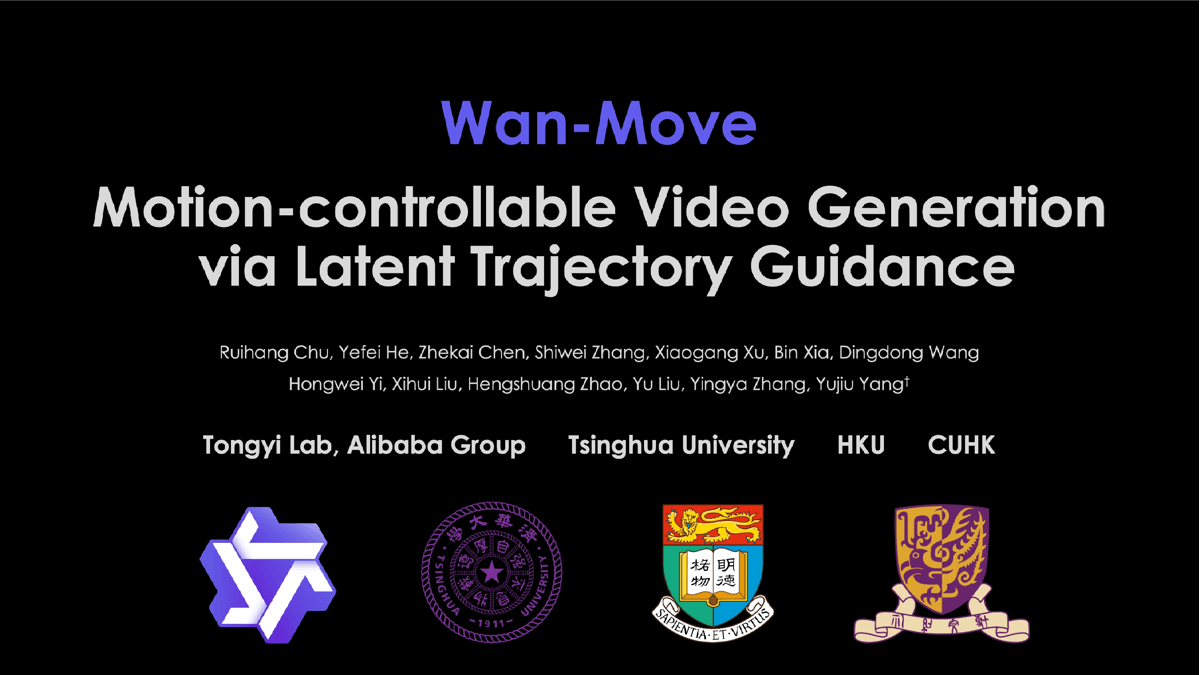

Wan-Move: Alibaba Tongyi's open-source AI video generation framework, developed with Tsinghua University and others

What is Wan-Move?

Wan-Move is an open-source AI video generation framework jointly developed by Alibaba's Tongyi Lab, Tsinghua University, and other organizations. It focuses on high-quality video synthesis through precise motion control. Its core technique, "latent trajectory guidance", adds point-level motion control on top of an existing image-to-video model and supports generating 5-second 480P videos. The project reports motion control accuracy 22.5% higher than mainstream open-source solutions. The framework does not modify the base model architecture. Instead, it injects motion by copying the features of the first frame to the corresponding positions in subsequent frames along the motion trajectories. It handles single-target, multi-target, and complex scenarios (e.g., multi-person interaction and object interaction), and achieved top results of FID 12.2 and EPE 2.6 on the MoveBench benchmark. Users can try it through a ComfyUI plug-in or a cloud platform, and the model has been open-sourced on GitHub.
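To make the idea of injecting motion by copying first-frame features into later frames more concrete, here is a minimal NumPy sketch. It assumes a latent condition tensor laid out as (frames, height, width, channels) and point trajectories that have already been converted to latent-grid coordinates. The function name, tensor layout, and collision handling are hypothetical illustrations, not taken from the Wan-Move code base. A real implementation also has to map pixel-space trajectories onto the VAE's downsampled grid, which this sketch skips.

```python
import numpy as np


def propagate_first_frame_features(latent_cond, tracks, visible):
    """Copy first-frame latent features along point trajectories (illustrative sketch).

    latent_cond: (T, H, W, C) latent condition; frame 0 holds the encoded first image.
    tracks:      (N, T, 2) integer (x, y) positions, assumed already in latent-grid coordinates.
    visible:     (N, T) boolean mask; False where a point is occluded or out of frame.
    Returns a new array and leaves latent_cond unchanged.
    """
    out = latent_cond.copy()
    num_frames, height, width, _ = out.shape

    def in_bounds(x, y):
        return 0 <= x < width and 0 <= y < height

    for n in range(tracks.shape[0]):
        x0, y0 = tracks[n, 0]
        # Skip points that have no usable source feature in the first frame.
        if not visible[n, 0] or not in_bounds(x0, y0):
            continue
        src = latent_cond[0, y0, x0]  # feature vector where the trajectory starts
        for t in range(1, num_frames):
            x, y = tracks[n, t]
            if visible[n, t] and in_bounds(x, y):
                # If two tracks land on the same cell, the later track wins here.
                out[t, y, x] = src
    return out


# Toy example: one point moving one latent cell to the right per frame.
T, H, W, C = 3, 4, 4, 2
cond = np.zeros((T, H, W, C))
cond[0, 1, 1] = [1.0, 2.0]
tracks = np.array([[[1, 1], [2, 1], [3, 1]]])  # shape (1, 3, 2), (x, y) per frame
visible = np.ones((1, T), dtype=bool)
out = propagate_first_frame_features(cond, tracks, visible)
# out[1, 1, 2] and out[2, 1, 3] now hold [1.0, 2.0]
```

Letting the later track overwrite earlier ones on a collision is a simplification chosen for brevity; averaging or random selection are equally plausible choices.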

Features of Wan-Move

- Single-target motion control: Precisely controls the motion of an individual object in the video.

- Multi-target motion control: Independently controls the motion of multiple objects in the same video.

- Comparison with academic and commercial approaches: Qualitative comparisons with existing academic methods and commercial solutions are available on the project website.

- Camera control: Controls camera movement in the video.

- Motion transfer: Transfers motion from one video to another.

- 3D rotation: Generates videos with 3D rotation effects.

Core Benefits of Wan-Move

- High-quality motion control: Generates high-quality 5-second 480p videos with motion control comparable to commercial systems, meeting the needs of professional video creation.

- No additional modules required: Requires no architectural changes to the existing image-to-video model and no additional motion encoder, which lowers both the barrier to use and the development cost.

- Fine-grained point-level control: Precisely controls the motion of individual elements in the scene, enabling region-level motion customization for complex scenes.

- Large-scale training: Training on large-scale data improves the visual quality and motion accuracy of the generated videos.

- Benchmark support: Provides MoveBench, a benchmark with a large number of samples and high-quality trajectory annotations, making it easy to evaluate and compare different methods.

- Open source and ease of use: The code, model weights, and benchmark are open source, so users can get started quickly and build on the framework, with good extensibility and community support.
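MoveBench results are reported with metrics such as EPE (end-point error). Assuming EPE here follows its common definition, the mean Euclidean distance between predicted and reference point positions (lower is better), it can be computed as in the sketch below. How the predicted tracks are extracted from a generated video (for example, with a point tracker) is an assumption outside this sketch, and the function name is hypothetical rather than part of the MoveBench tooling.

```python
import numpy as np


def end_point_error(pred_tracks, ref_tracks, visible=None):
    """Mean Euclidean distance between predicted and reference point positions.

    pred_tracks, ref_tracks: (N, T, 2) arrays of (x, y) positions in pixels.
    visible: optional (N, T) boolean mask; only entries marked True are averaged.
    Lower is better.
    """
    pred = np.asarray(pred_tracks, dtype=float)
    ref = np.asarray(ref_tracks, dtype=float)
    dist = np.linalg.norm(pred - ref, axis=-1)  # per-point, per-frame error, shape (N, T)
    if visible is not None:
        dist = dist[np.asarray(visible, dtype=bool)]
    return float(dist.mean())


ref = np.array([[[0.0, 0.0], [1.0, 0.0]]])
pred = np.array([[[0.0, 0.0], [4.0, 4.0]]])
epe_all = end_point_error(pred, ref)  # (0 + 5) / 2 = 2.5
epe_visible = end_point_error(pred, ref, visible=[[False, True]])  # 5.0
```

Masking out occluded points before averaging keeps invisible positions from inflating or deflating the score.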

What is Wan-Move's official website?

- Project website: https://wan-move.github.io/

- GitHub repository: https://github.com/ali-vilab/Wan-Move

- Hugging Face model repository: https://huggingface.co/Ruihang/Wan-Move-14B-480P

- arXiv technical paper: https://arxiv.org/pdf/2512.08765

Who Wan-Move is for

- Video creators: Professionals who create animation, visual-effects, or other creative videos can use Wan-Move to quickly generate content with specific motion effects.

- Advertising and marketing teams: Can create engaging ad videos for brand promotion and efficiently produce high-quality video footage.

- Video editors: In post-production, Wan-Move can quickly adjust and refine video content, for example through motion transfer and camera motion control, improving efficiency.

- Educators: Can create instructional videos whose dynamic presentations help students understand and learn the material.

- Game developers: Can generate in-game animation such as character movements and scene changes, improving the game's visual quality and player experience.

- Technical researchers: Researchers interested in video generation and motion control can build on the open-source code and benchmark.

© Copyright notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
