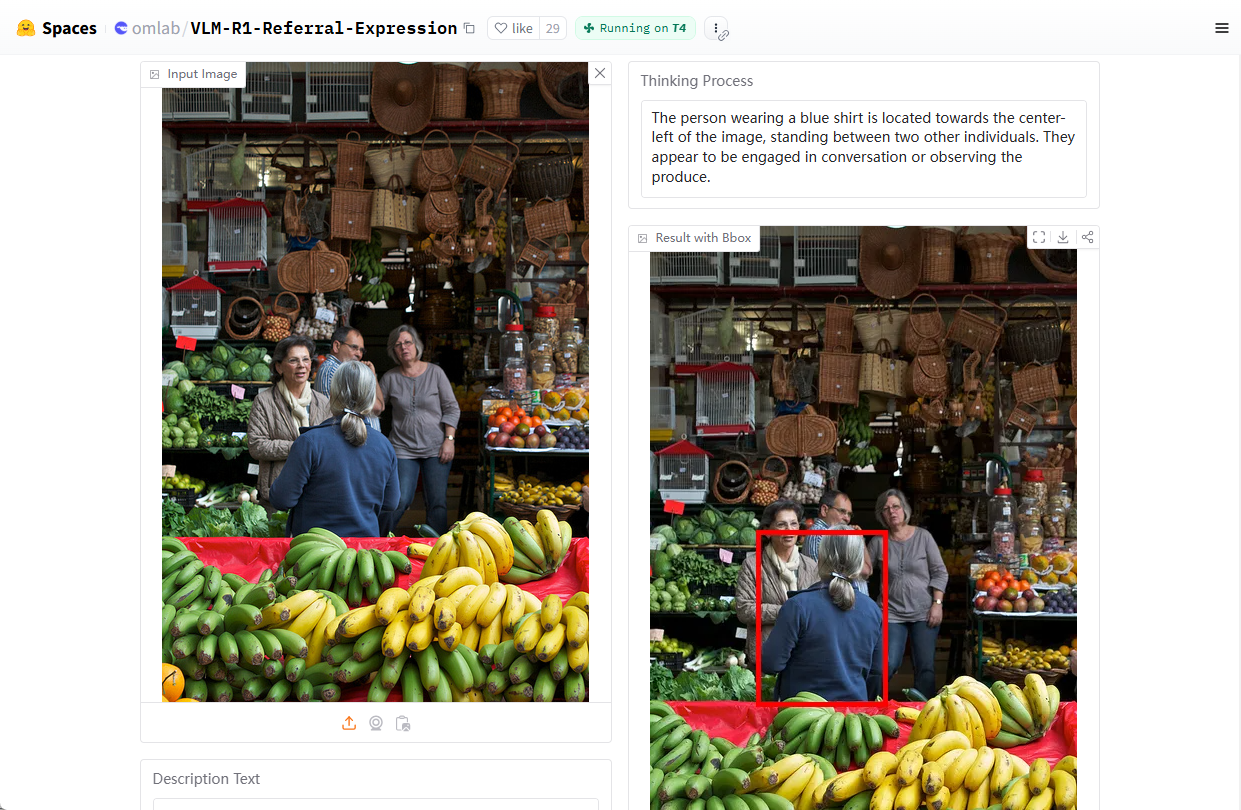

VLM-R1: A Visual Language Model for Localizing Image Targets through Natural Language

General Introduction

VLM-R1 is an open-source vision-language model project developed by Om AI Lab and hosted on GitHub. The project is based on DeepSeek's R1 method, combined with the Qwen2.5-VL model. VLM-R1 is particularly good at Referring Expression Comprehension (REC), e.g., answering questions such as "Where is the red cup in the picture?" and pinpointing the target in the image. The project provides detailed installation scripts, dataset support, and training code for developers and researchers to explore and develop vision-language tasks. As of February 2025, the project has garnered nearly 2,000 GitHub stars, reflecting broad interest in multimodal AI.

Demo address: https://huggingface.co/spaces/omlab/VLM-R1-Referral-Expression

Function List

- Referring Expression Comprehension (REC): Parses natural language instructions to locate specific targets in an image.

- Joint image and text processing: Supports simultaneous image and text input to generate accurate analysis results.

- Reinforcement learning optimization: Improves model performance on complex visual tasks by training with the R1 method.

- Open source training code: Full training scripts and configuration files are provided for easy customization of the model.

- Dataset Support: Built-in COCO and RefCOCO dataset download and processing capabilities simplify the development process.

- High-performance inference support: Compatible with Flash Attention and other technologies to improve computing efficiency.
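REC predictions are commonly scored by the intersection over union (IoU) between the predicted box and the ground-truth box. The following is a minimal sketch of that check, not the project's own evaluation code. It assumes boxes in [x1, y1, x2, y2] pixel format and a 0.5 hit threshold; both are common conventions and are assumptions here, and the function names are illustrative.

```python
from typing import Sequence


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """IoU of two boxes given as [x1, y1, x2, y2] (assumed format)."""
    # Corners of the overlap rectangle.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_hit(pred: Sequence[float], target: Sequence[float],
           threshold: float = 0.5) -> bool:
    """Count a prediction as correct when IoU reaches the (assumed) threshold."""
    return box_iou(pred, target) >= threshold
```

For example, `is_hit([0, 0, 10, 10], [0, 0, 10, 9])` compares two boxes that overlap almost entirely, so it counts as a hit under the assumed threshold.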

Usage Guide

Installation process

VLM-R1 is a Python-based project that requires some environment configuration before it can run. The steps below cover installation and basic usage.

1. Environment preparation

- Install Anaconda: Using Anaconda to manage your Python environment is recommended to ensure compatibility. Download it from the Anaconda official website. After the installation is complete, open a terminal.

- Create a virtual environment: Run the following command in the terminal to create a Python 3.10 environment named vlm-r1:

    conda create -n vlm-r1 python=3.10

- Activate the environment: Activate the environment you just created:

    conda activate vlm-r1
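After activation, it can be worth confirming that the interpreter on your path is the one from the new environment. The sketch below is one way to do that from Python; the helper names are illustrative. It relies on the `CONDA_DEFAULT_ENV` variable that conda sets when an environment is activated.

```python
import os
import sys


def is_expected_python(version=None, expected=(3, 10)) -> bool:
    """Return True if the major.minor version matches `expected`."""
    major, minor = (version or sys.version_info)[:2]
    return (major, minor) == tuple(expected)


def active_conda_env(env=None):
    """Name of the active conda environment, or None if none is active."""
    source = os.environ if env is None else env
    return source.get("CONDA_DEFAULT_ENV")
```

Running `is_expected_python()` and `active_conda_env()` inside the activated environment should report Python 3.10 and the name vlm-r1 if the steps above were followed.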

2. Install project dependencies

- Clone the project: Download the VLM-R1 code repository locally. Open a terminal and run:

    git clone https://github.com/om-ai-lab/VLM-R1.git
    cd VLM-R1

- Run the installation script: The project provides a setup.sh script that installs dependencies automatically. Run it in the terminal:

    bash setup.sh

  This script installs core libraries such as PyTorch and Transformers so that the environment is ready.
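To confirm that the installation script finished successfully, you can check that the core libraries are importable. This is a small sketch using only the standard library; the import names `torch` and `transformers` are the usual ones for PyTorch and Transformers, and the helper name is illustrative.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level module names that cannot be found."""
    # find_spec returns None for a top-level module that is not installed.
    return [name for name in names if find_spec(name) is None]


# Example: an empty list means both libraries were found.
# print(missing_packages(["torch", "transformers"]))
```

The check only locates the modules; it does not verify versions or GPU support.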

3. Data preparation

- Download the COCO dataset: VLM-R1 is trained on the COCO Train2014 images. Run the following commands to download and unzip them:

    wget http://images.cocodataset.org/train2014/train2014.zip
    unzip train2014.zip -d <your_image_root>

  Make a note of the unzip path <your_image_root>; it is needed in later configuration steps.

- Download the RefCOCO annotation files: The RefCOCO dataset is used for the referring expression task. The download link can be found in the project documentation; unzip the files and place them in the appropriate directory.
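Before training, a quick sanity check that the image root actually contains images can save a failed run. The sketch below counts image files under a directory; the helper name and the list of suffixes are assumptions for illustration.

```python
from pathlib import Path


def count_images(image_root, suffixes=(".jpg", ".jpeg", ".png")):
    """Count image files under image_root, searching subdirectories too."""
    root = Path(image_root)
    if not root.is_dir():
        raise FileNotFoundError(f"image root not found: {root}")
    return sum(
        1
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in suffixes
    )
```

A result of zero usually means the archive was unzipped somewhere other than the path you noted.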

4. Training models

- Configure training parameters: Enter the src/open-r1-multimodal directory:

    cd src/open-r1-multimodal

  Then either edit the parameters in grpo_rec.py or specify them when running the command. The following is an example command:

    torchrun --nproc_per_node=8 --nnodes=1 --node_rank=0 --master_addr="127.0.0.1" --master_port="12346" \
        src/open_r1/grpo_rec.py \
        --deepspeed local_scripts/zero3.json \
        --output_dir output/my_model \
        --model_name_or_path Qwen/Qwen2.5-VL-3B-Instruct \
        --dataset_name data_config/rec.yaml \
        --image_root <your_image_root> \
        --max_prompt_length 1024 \
        --num_generations 8 \
        --per_device_train_batch_size 1 \
        --gradient_accumulation_steps 2 \
        --logging_steps 1 \
        --bf16 \
        --torch_dtype bfloat16 \
        --num_train_epochs 2 \
        --save_steps 100

- Parameter description:

  - --nproc_per_node: Number of GPUs; adjust this for your hardware.
  - --image_root: Replace with your COCO dataset path.
  - --output_dir: The path where the model is saved.
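If you launch training from a script rather than typing the command by hand, the three parameters described above are the ones most likely to change between machines. The sketch below assembles the same example command as an argument list; the function name and its keyword arguments are illustrative, and every flag is copied from the example command rather than taken from the project's documentation.

```python
import shlex


def build_train_command(image_root, output_dir, num_gpus=8, num_generations=8):
    """Build the example torchrun command as a list of arguments."""
    return [
        "torchrun",
        f"--nproc_per_node={num_gpus}",
        "--nnodes=1", "--node_rank=0",
        "--master_addr=127.0.0.1", "--master_port=12346",
        "src/open_r1/grpo_rec.py",
        "--deepspeed", "local_scripts/zero3.json",
        "--output_dir", str(output_dir),
        "--model_name_or_path", "Qwen/Qwen2.5-VL-3B-Instruct",
        "--dataset_name", "data_config/rec.yaml",
        "--image_root", str(image_root),
        "--max_prompt_length", "1024",
        "--num_generations", str(num_generations),
        "--per_device_train_batch_size", "1",
        "--gradient_accumulation_steps", "2",
        "--logging_steps", "1",
        "--bf16",
        "--torch_dtype", "bfloat16",
        "--num_train_epochs", "2",
        "--save_steps", "100",
    ]


# Print a shell-safe version of the command for inspection.
# print(shlex.join(build_train_command("/data/coco", "output/my_model", num_gpus=2)))
```

The list can be passed to `subprocess.run(cmd, check=True)` from the src/open-r1-multimodal directory. Keeping it as a list avoids quoting problems when paths contain spaces.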

5. Functional operating procedures

Referring Expression Comprehension (REC)

- Run the test script: Once training is complete, use the provided test script to verify the model's performance. Enter the src/eval directory and run:

    cd src/eval
    python test_rec_r1.py --model_path <your_trained_model> --image_root <your_image_root> --annotation_path <refcoco_annotation>

- Input example: Upload a picture and enter a question such as "Where is the blue car in the picture?". The model returns the coordinates or a description of the target location.
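When the model returns coordinates as text, they need to be parsed before they can be drawn or scored. The sketch below is a hedged illustration: it assumes the answer contains a box written as four numbers in square brackets, optionally wrapped in `<answer>...</answer>` tags. That output format is an assumption, so adjust the pattern to what your model actually emits.

```python
import re

# One integer or decimal number, optionally negative.
_NUM = r"-?\d+(?:\.\d+)?"
# Four comma-separated numbers inside square brackets.
_BOX = re.compile(
    r"\[\s*" + r"\s*,\s*".join(f"({_NUM})" for _ in range(4)) + r"\s*\]"
)
_ANSWER = re.compile(r"<answer>(.*?)</answer>", flags=re.DOTALL)


def extract_box(text):
    """Return the first [a, b, c, d] box found as floats, or None."""
    # Prefer the content of an <answer> tag when one is present (assumed format).
    answer = _ANSWER.search(text)
    scope = answer.group(1) if answer else text
    match = _BOX.search(scope)
    if match is None:
        return None
    return [float(value) for value in match.groups()]
```

Returning `None` instead of raising lets a caller count unparseable answers as misses rather than aborting the evaluation loop.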

Image and Text Analysis

- Prepare the input: Place the image file and question text in the specified directory, or specify their paths directly in the script.

- Run inference: Using the test script above, the model outputs an analysis of the image content, such as object categories and positions.

Customized training

- Modify the dataset: To use your own dataset, edit data_config/rec.yaml and add the image path and annotation files.

- Adjust hyperparameters: Modify parameters such as learning rate and batch size in grpo_rec.py to suit your task.
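Box conventions are an easy source of errors when preparing your own annotations. COCO-style annotation files store boxes as [x, y, width, height]. The sketch below converts them to corner format [x1, y1, x2, y2] and clips them to the image. Whether your training files expect corner format is an assumption here, so check the annotation files referenced by data_config/rec.yaml before relying on it.

```python
def xywh_to_xyxy(box):
    """Convert a COCO-style [x, y, width, height] box to [x1, y1, x2, y2]."""
    x, y, w, h = box
    if w < 0 or h < 0:
        raise ValueError(f"negative width or height in box: {box}")
    return [x, y, x + w, y + h]


def clip_box(box, width, height):
    """Clamp an [x1, y1, x2, y2] box to the image bounds."""
    x1, y1, x2, y2 = box
    return [
        min(max(x1, 0), width),
        min(max(y1, 0), height),
        min(max(x2, 0), width),
        min(max(y2, 0), height),
    ]
```

For instance, `xywh_to_xyxy([10, 20, 30, 40])` yields a box whose right and bottom edges are the origin plus the width and height.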

6. Cautions

- Hardware requirements: A GPU with at least 8GB of memory is recommended. If resources are limited, lower the num_generations parameter to reduce the memory footprint.

- Debug mode: During training, you can set export DEBUG_MODE="true" to view detailed logs.

- Community support: If you run into problems, ask questions on the GitHub Issues page, where the Om AI Lab team and community can help.
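If training is launched from Python rather than from a shell, the debug variable can be set on the child process only, without changing your own shell session. The sketch below builds such an environment; the helper name is illustrative, and how the training script interprets DEBUG_MODE is not shown here.

```python
import os


def training_env(debug=False, base=None):
    """Copy the environment, optionally enabling DEBUG_MODE for the child process."""
    env = dict(os.environ if base is None else base)
    if debug:
        # Same value as the shell form: export DEBUG_MODE="true"
        env["DEBUG_MODE"] = "true"
    return env
```

The result can be passed as `env=training_env(debug=True)` to `subprocess.run` when starting the training command.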

With the steps above, users can install VLM-R1, train it, and run it, whether they are conducting research on vision tasks or developing real-world applications.

© Copyright notes

This article is copyrighted by AI Sharing Circle; please do not reproduce without permission.
