VimLM: A Local LLM-Driven Vim Programming Assistant for Safe, Offline Coding

General Introduction

VimLM is a Vim plugin that provides a code assistant driven by a local LLM (Large Language Model). It talks to the local model through Vim commands, gathers code context automatically, and helps users edit code without leaving Vim. Inspired by GitHub Copilot and Cursor, VimLM brings contextual code understanding, code summarization, and AI assistance directly into the Vim workflow. It supports a wide range of MLX-compatible models, offers intuitive key bindings with a split-window response view, and runs fully offline, with no API calls and no data leaving the machine.

Feature List

- Model independent: use any MLX-compatible model via a configuration file

- Native Vim user experience: intuitive key bindings and split-window responses

- Deep contextual understanding: gathers code context from the current file, visual selections, referenced files, and the project directory structure

- Conversational coding: refine results iteratively through follow-up queries

- Offline safety: fully offline use, with no API calls and no data leakage

- Code extraction and replacement: insert a code block from the response into the selected area

- External context: add external files or folders to the context with the !include command

- Project file generation: generate project files with the !deploy command

- Continued generation: resume an interrupted response with the !continue command

- Thread continuation: continue the current thread with the !followup command
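To make the context sources in the list above more concrete, here is a minimal sketch of how a prompt could be assembled from the current file, a selection or cursor line, and any included files. It is not VimLM's actual implementation: `EditorState`, `build_context`, and the `# File:` / `# Focus` layout are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class EditorState:
    """Hypothetical snapshot of what the editor hands to the assistant."""
    file_name: str
    file_text: str
    selection: str = ""      # visual selection; empty in normal mode
    current_line: str = ""   # line under the cursor
    included: dict[str, str] = field(default_factory=dict)  # path -> text


def build_context(state: EditorState, prompt: str) -> str:
    """Join the context sources into one prompt string (illustrative layout)."""
    parts = [f"# File: {state.file_name}\n{state.file_text}"]
    for path, text in state.included.items():
        parts.append(f"# Included: {path}\n{text}")
    # Prefer the visual selection; fall back to the cursor line.
    focus = state.selection or state.current_line
    if focus:
        parts.append(f"# Focus\n{focus}")
    parts.append(f"# Request\n{prompt}")
    return "\n\n".join(parts)
```

In this sketch the selection takes priority over the cursor line, mirroring the difference between visual mode and normal mode described in the usage section.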

Usage Guide

Installation

- Make sure Python 3.12.8 is installed on your system.

- Install VimLM using pip:

pip install vimlm
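A quick way to confirm the two installation steps is a small check script. The sketch below rests on two assumptions that the text does not confirm: that 3.12.8 is a minimum version rather than an exact requirement, and that the pip package exposes a module also named `vimlm`.

```python
import importlib.util
import sys

# Version taken from the installation note; treating it as a minimum is an assumption.
REQUIRED = (3, 12, 8)


def python_ok(version=None, required=REQUIRED):
    """Return True if the given (or running) interpreter meets the minimum."""
    version = tuple(sys.version_info[:3]) if version is None else tuple(version)
    return version >= required


def package_installed(name="vimlm"):
    """Return True if a top-level module with this name can be found.

    Assumption: the pip package installs a module called "vimlm".
    """
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("vimlm importable:", package_installed())
```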

Basic use

- From normal mode::

- check or refer to

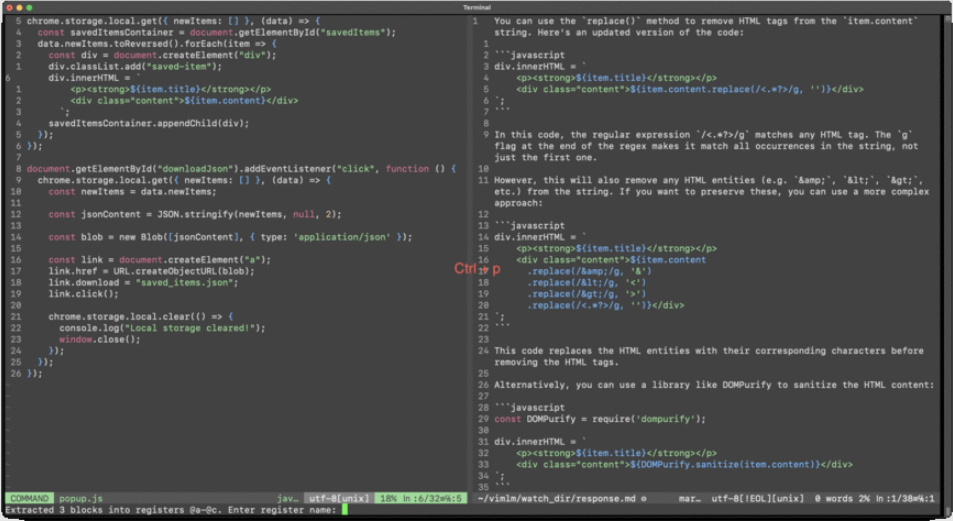

Ctrl-l: Adds the current line and file to the context. - Example hint: "Regular expression to remove HTML tag from item.content".

- check or refer to

- From visual mode:

- Select a code block and press Ctrl-l: adds the selected block and the current file to the context. Example prompt: "Convert this code to async/await syntax".

- Follow-up conversation:

- Press Ctrl-j: continues the current thread. Example follow-up: "Change to Manifest V3".

- Code extraction and replacement:

- Press Ctrl-p: inserts a code block from the response into the last selected area (normal mode) or the active selection (visual mode). Example workflow: select a piece of code in visual mode, press Ctrl-l and enter the prompt "Convert this code to async/await syntax", then press Ctrl-p to replace the selected code.

- Inline commands:

- !include: adds external context. Example: "AJAXify this application !include ~/scrap/hypermedia-applications.summ.md".

- !deploy: generates project files. Example: "Create REST API endpoint !deploy ./api".

- !continue: continues generating a response. Example: "summarize !include large-file.txt !continue 5000".

- !followup: continues the current thread. Example: "Create a Chrome extension".
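The inline commands above are embedded in the prompt text itself, so something has to separate them from the natural-language request. The following is a rough sketch of one way to do that, not VimLM's real parser: `parse_prompt` is a hypothetical helper, and the rule that !include, !deploy, and !continue take one optional argument while !followup takes none is an assumption drawn only from the examples.

```python
# Command names come from the list above; the argument rules are assumptions.
TAKES_ARG = {"include", "deploy", "continue"}
NO_ARG = {"followup"}


def parse_prompt(prompt: str):
    """Split a prompt into plain text and a list of (command, argument) pairs."""
    words = prompt.split()
    text, found = [], []
    i = 0
    while i < len(words):
        word = words[i]
        name = word[1:] if word.startswith("!") else None
        if name in TAKES_ARG or name in NO_ARG:
            arg = None
            nxt = words[i + 1] if i + 1 < len(words) else None
            # Consume the next word as the argument unless it is another command.
            if name in TAKES_ARG and nxt is not None and not nxt.startswith("!"):
                arg = nxt
                i += 1
            found.append((name, arg))
        else:
            text.append(word)  # ordinary word, including unknown "!" words
        i += 1
    return " ".join(text), found
```

For instance, `parse_prompt("summarize !include large-file.txt !continue 5000")` yields the text `"summarize"` plus the pairs `("include", "large-file.txt")` and `("continue", "5000")`. How the number after !continue is interpreted is left open here, since the text does not say.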

Detailed Operation Procedure

- Adding context:

- In normal mode, press Ctrl-l to add the current line and the current file to the context.

- In visual mode, select a code block and press Ctrl-l to add the selected block and the current file to the context.

- Generating code:

- In normal mode or visual mode, press Ctrl-l and enter a prompt to generate code.

- Press Ctrl-p to insert the generated code into the selected area.

- Follow-up conversation:

- Press Ctrl-j to continue the current thread and refine the result iteratively.

- Adding external context:

- Use the !include command to add an external file or folder to the context. Example: "AJAXify this application !include ~/scrap/hypermedia-applications.summ.md".

- Generating project files:

- Use the !deploy command to generate project files. Example: "Create REST API endpoint !deploy ./api".

- Continuing a response:

- Use the !continue command to resume an interrupted response. Example: "summarize !include large-file.txt !continue 5000".

- Thread continuation:

- Use the !followup command to continue the current thread. Example: "Create a Chrome extension".
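The Ctrl-p step in the procedure above takes a code block out of the model's response and puts it in place of the selected lines. A minimal sketch of that idea follows, assuming the response wraps code in Markdown-style fences. `extract_code` and `replace_lines` are hypothetical helpers for illustration and do not reflect how VimLM itself is written.

```python
import re

FENCE = "`" * 3  # three backticks, built programmatically to keep this example readable
_BLOCK = re.compile(FENCE + r"[^\n]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(response: str, index: int = 0):
    """Return the body of the index-th fenced block, or None if there is none."""
    blocks = _BLOCK.findall(response)
    return blocks[index].rstrip("\n") if index < len(blocks) else None


def replace_lines(buffer: list[str], start: int, end: int, code: str) -> list[str]:
    """Replace buffer[start..end] (0-based, inclusive) with the lines of code."""
    return buffer[:start] + code.split("\n") + buffer[end + 1:]
```

Returning a new list instead of mutating the buffer keeps the sketch easy to test; a real plugin would write the result back through Vim's buffer API.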

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
