VideoReTalking: Audio-Driven Lip Synchronization and Video Editing System

General Introduction

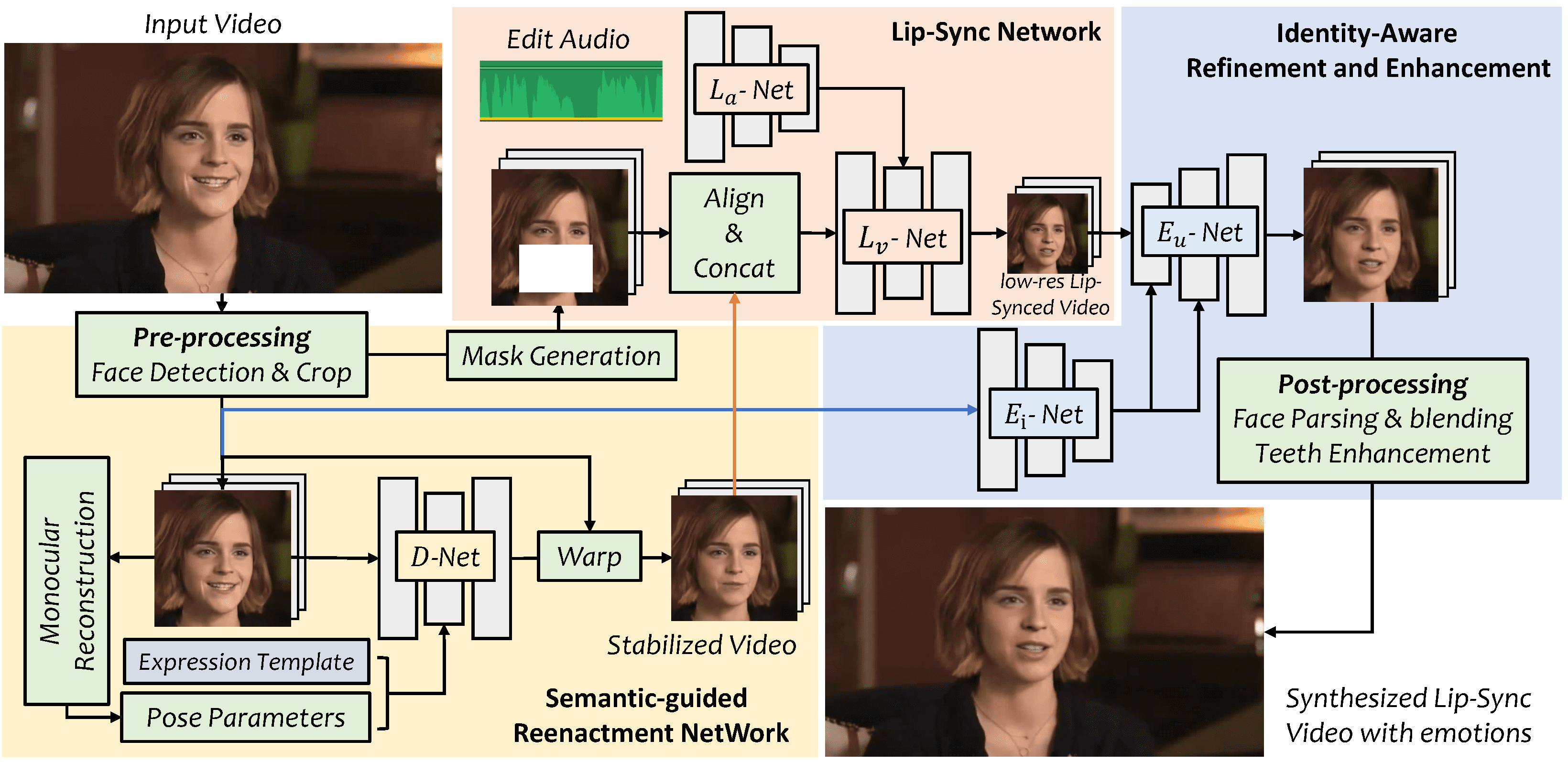

VideoReTalking is an innovative system that allows users to generate lip-synchronized facial videos based on input audio, producing high-quality and lip-synchronized output videos even with different emotions. The system breaks down this goal into three successive tasks: facial video generation with typical expressions, audio-driven lip synchronization, and facial enhancement to improve photo-realism. It handles all three steps using a learning-based approach that can be performed sequentially without user intervention. Explore VideoReTalking and its application to audio-driven lip synchronization talking head video editing through the provided link.

(not clear, need to enhance the video quality twice, slightly poor synchronization of Chinese lips)

Function List

Facial Video Generation: Generate facial videos with typical expressions based on the input audio.

Audio-driven lip-synchronization: generates lip-synchronized video based on the given audio.

Facial Enhancement: Improving photorealism of synthesized faces through identity-aware facial enhancement network and post-processing.

Using Help

Download the pre-trained model and put it in `. /checkpoints`.

Run `python3 inference.py` for quick inference of the video.

Expressions can be controlled by adding the parameters `--exp_img` or `--up_face`.

Online experience address

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...