VideoRAG: A RAG framework for understanding ultra-long videos with support for multimodal retrieval and knowledge graph construction

General Introduction

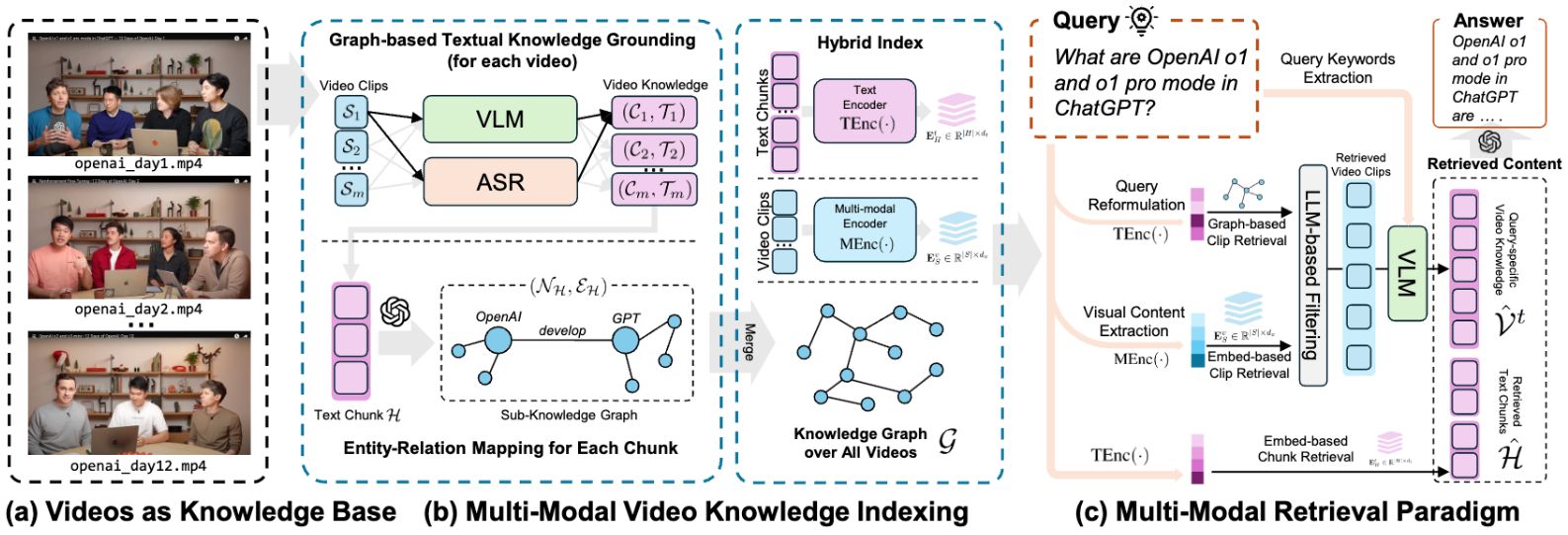

VideoRAG is a retrieval-augmented generation (RAG) framework for processing and understanding extremely long-context videos. It combines a graph-driven textual knowledge base with hierarchical multimodal context encoding, which lets it process hundreds of hours of video content on a single NVIDIA RTX 3090 GPU. VideoRAG keeps semantics consistent across videos and improves retrieval efficiency by dynamically constructing a knowledge graph. Developed by the Department of Data Science at the University of Hong Kong, the project aims to give users a powerful tool for working with complex video data.

Feature List

- Efficient handling of extremely long-context videos: Processes hundreds of hours of video content on a single NVIDIA RTX 3090 GPU.

- Structured video knowledge index: Distills hundreds of hours of video content into a concise knowledge graph.

- Multimodal retrieval: Combines textual semantics and visual content to identify the most relevant videos and produce a comprehensive response.

- New LongerVideos benchmark: Contains over 160 videos totaling 134 hours, covering lectures, documentaries and entertainment.

- Dual-channel architecture: Combines a graph-driven textual knowledge base with hierarchical multimodal context encoding to maintain semantic consistency across videos.
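To make the dual-channel idea concrete, here is a minimal, self-contained sketch of how a textual channel (entities linked to clips, standing in for a knowledge graph) and a visual channel (embedding similarity) might be fused into one ranking. This is not VideoRAG's actual implementation: the `Clip` class, the `retrieve` function, the weighted-sum fusion and the toy vectors are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Clip:
    """Hypothetical video segment: transcript entities plus a visual embedding."""
    clip_id: str
    entities: set
    visual_vec: list


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def build_entity_index(clips):
    """Map each entity to the clips that mention it (a stand-in for a knowledge graph)."""
    index = {}
    for clip in clips:
        for entity in clip.entities:
            index.setdefault(entity, set()).add(clip.clip_id)
    return index


def retrieve(query_entities, query_vec, clips, top_k=2, text_weight=0.5):
    """Rank clips by a weighted sum of the textual and visual channel scores."""
    index = build_entity_index(clips)
    scored = []
    for clip in clips:
        # Textual channel: fraction of query entities linked to this clip.
        hits = sum(1 for e in query_entities if clip.clip_id in index.get(e, ()))
        text_score = hits / len(query_entities) if query_entities else 0.0
        # Visual channel: similarity between query and clip embeddings.
        visual_score = cosine(query_vec, clip.visual_vec)
        fused = text_weight * text_score + (1 - text_weight) * visual_score
        scored.append((fused, clip.clip_id))
    # Highest score first; ties broken by clip id for a stable order.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [clip_id for _, clip_id in scored[:top_k]]


# Toy data (invented for this example).
example_clips = [
    Clip("lecture_01#3", {"transformer", "attention"}, [1.0, 0.0]),
    Clip("doc_02#7", {"volcano"}, [0.0, 1.0]),
    Clip("lecture_04#1", {"attention"}, [0.8, 0.6]),
]

print(retrieve({"attention", "transformer"}, [1.0, 0.0], example_clips))
# ['lecture_01#3', 'lecture_04#1']
```

A weighted sum is only one possible fusion rule; it is used here because it keeps the contribution of each channel easy to inspect.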

Usage Guide

Installation process

- Create and activate the conda environment:

conda create --name videorag python=3.11

conda activate videorag

- Install the necessary Python packages:

pip install numpy==1.26.4 torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2

pip install accelerate==0.30.1 bitsandbytes==0.43.1 moviepy==1.0.3

pip install git+https://github.com/facebookresearch/pytorchvideo.git@28fe037d212663c6a24f373b94cc5d478c8c1a1d

pip install timm==0.6.7 ftfy regex einops fvcore eva-decord==0.6.1 iopath matplotlib types-regex cartopy

pip install ctranslate2==4.4.0 faster_whisper neo4j hnswlib xxhash nano-vectordb

pip install transformers==4.37.1 tiktoken openai tenacity

- Install ImageBind:

cd ImageBind

pip install .

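- Download the necessary checkpoint files (the Hugging Face repositories store model weights with Git LFS, so make sure git-lfs is installed before cloning):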

git clone https://huggingface.co/openbmb/MiniCPM-V-2_6-int4

git clone https://huggingface.co/Systran/faster-distil-whisper-large-v3

mkdir .checkpoints

cd .checkpoints

wget https://dl.fbaipublicfiles.com/imagebind/imagebind_huge.pth

cd ..
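After the downloads finish, a quick check can confirm that everything landed where the commands above put it. The following is a small sketch, assuming all commands were run from the same directory; the helper name `missing_checkpoints` is hypothetical and not part of VideoRAG.

```python
from pathlib import Path

# Paths produced by the download commands above, relative to the
# directory in which they were run.
EXPECTED_PATHS = [
    "MiniCPM-V-2_6-int4",
    "faster-distil-whisper-large-v3",
    ".checkpoints/imagebind_huge.pth",
]


def missing_checkpoints(root="."):
    """Return the expected checkpoint paths that do not exist under root."""
    root = Path(root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoint files or directories:")
        for path in missing:
            print(f"  {path}")
    else:
        print("All expected checkpoints are present.")
```

The check only tests for existence; it does not verify file sizes or hashes, so a partially downloaded file would still pass.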

Usage Process

- Video Knowledge Extraction: Multiple videos are fed into VideoRAG, which automatically extracts knowledge from them and builds a knowledge graph.

- Query Response: Users enter a query, and VideoRAG returns a comprehensive response based on the constructed knowledge graph and its multimodal retrieval mechanism.

- Multi-language support: VideoRAG has so far been tested only on English-language videos. To process videos in other languages, it is recommended to modify the WhisperModel used in asr.py.
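For the multi-language case, the sketch below shows one way to select a speech recognition model by language using the `faster_whisper` package installed earlier. The article does not show how asr.py constructs its `WhisperModel`, so this is a standalone illustration, not a patch for that file. The helper names `choose_whisper_model` and `transcribe` are hypothetical, and the fallback to the multilingual `large-v3` model is an assumption based on Distil-Whisper checkpoints being English-only.

```python
def choose_whisper_model(language):
    """Pick a model path or name for an ISO 639-1 language code (assumed policy)."""
    if language == "en":
        # Local clone created during installation.
        return "./faster-distil-whisper-large-v3"
    # Multilingual Whisper model; faster_whisper fetches it on first use.
    return "large-v3"


def transcribe(audio_path, language="en", device="cuda", compute_type="float16"):
    """Transcribe an audio file and return (start, end, text) tuples."""
    # Imported lazily so choose_whisper_model works without the package.
    from faster_whisper import WhisperModel

    model = WhisperModel(
        choose_whisper_model(language),
        device=device,
        compute_type=compute_type,
    )
    # transcribe() yields segments lazily; the list comprehension consumes them.
    segments, _info = model.transcribe(audio_path, language=language)
    return [(seg.start, seg.end, seg.text.strip()) for seg in segments]
```

Calling `transcribe("talk.wav", language="zh")` (with a hypothetical file name) would then use the multilingual model, while English audio keeps using the distilled checkpoint downloaded during installation.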

Main Functions

- Video Upload: Upload video files to the system, which will automatically process and extract knowledge.

- Query Input: Enter a question in the query box and the system will provide a detailed answer based on the knowledge graph and multimodal search mechanism.

- Results Display: The system shows the relevant video clips together with the text response, and users can click on them to view details.

