VideoGrain: an open source project for local video editing with text prompts

General Introduction

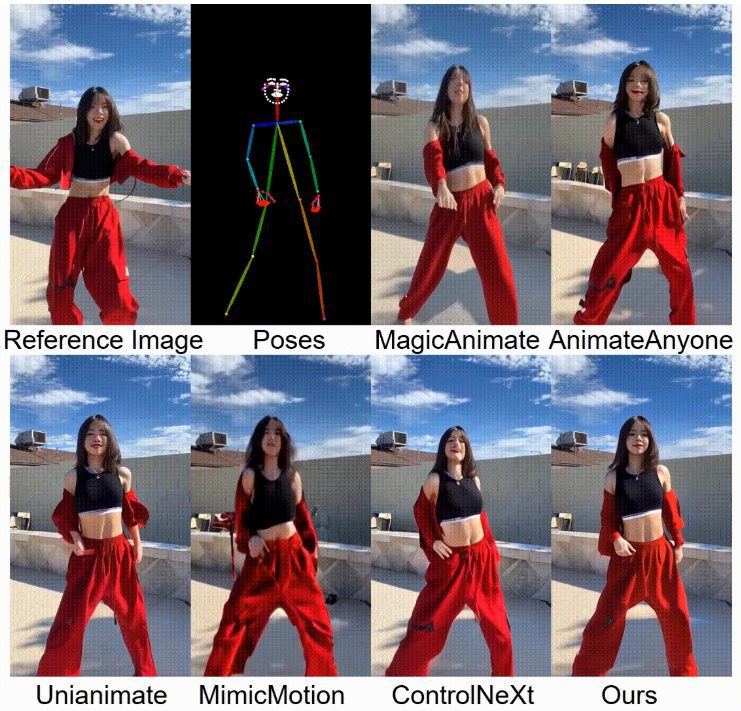

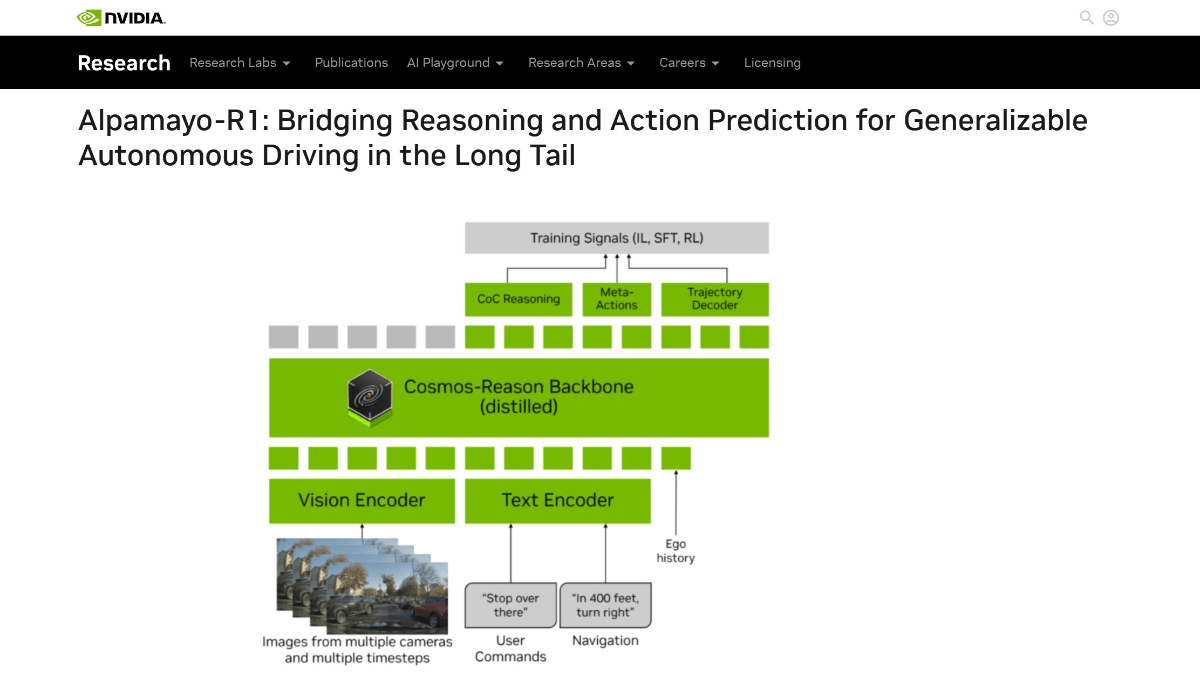

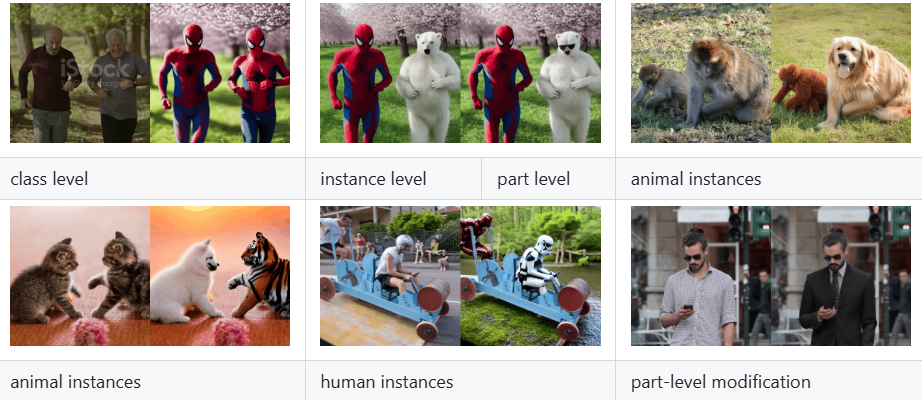

VideoGrain is an open source project for multi-grained video editing, released by the paper's authors and hosted on GitHub. It implements the paper "VideoGrain: Modulating Space-Time Attention for Multi-Grained Video Editing", which was accepted to ICLR 2025. By modulating the space-time attention mechanisms of a diffusion model, it enables fine-grained editing of video content at the category, instance, and local levels. Compared with earlier methods, VideoGrain addresses two problems, semantic misalignment between text prompts and region control and feature coupling between regions, and it produces high-quality edits without additional training. The project provides complete code, an installation guide, and pre-trained model downloads for developers, researchers, and video editing enthusiasts, and it has attracted attention in the academic and open source communities.

Function List

- Multi-granularity video editing: Support for precise modification of categories (e.g., replacing "human" with "robot"), instances (e.g., specific objects), and localized details (e.g., hand motions) in a video.

- Zero-shot editing: No retraining is needed for a specific video; entering a text prompt is enough to complete the edit.

- Spatio-temporal attention modulation: Improves editing accuracy and video quality by modulating cross-attention (text-to-region control) and self-attention (feature separation between regions).

- Open Source Code and Models: Full implementation code and pre-trained models are provided for easy reproduction and extension by users.

- Compatible with diffusion models: Built on frameworks such as diffusers and FateZero, so it integrates easily into existing video generation pipelines.

- Multiple file support: It can process video, image and other contents uploaded by users and output the edited video result.

Usage Guide

VideoGrain requires some programming knowledge and hardware support, but its installation and operation procedures are described in detail in the GitHub repository. Below is a detailed installation and usage guide to help users get started quickly.

Installation process

- Creating a Conda Environment

Create a standalone Python 3.10 environment and activate it by typing the following command in the terminal:

conda create -n videograin python=3.10

conda activate videograin

Make sure Conda is installed. If it is not, download it from the Anaconda website.

- Install PyTorch and related dependencies

VideoGrain relies on PyTorch with CUDA support, and GPU acceleration is recommended. Run the following commands to install them:

conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=12.1 -c pytorch -c nvidia

pip install --pre -U xformers==0.0.27

xformers reduces memory use and improves speed; skip it if you do not need it.

- Installing additional dependencies

Run the following command from the repository root to install all dependent libraries:

pip install -r requirements.txt

Make sure you have a working internet connection; a proxy may be required to download some of the packages.

- Download pre-trained model

VideoGrain requires model weights such as Stable Diffusion 1.5 and ControlNet. Run the scripts provided in the repository:

bash download_all.sh

This will automatically download the base model to the specified directory. For manual downloads, visit the Hugging Face or Google Drive links (see README for details).

- Prepare ControlNet preprocessor weights

Download the annotator weights (e.g. DW-Pose, depth_midas), about 4 GB, with the following commands. The `--fuzzy` option lets gdown extract the file ID from a Google Drive sharing link:

gdown --fuzzy "https://drive.google.com/file/d/1dzdvLnXWeMFR3CE2Ew0Bs06vyFSvnGXA/view?usp=drive_link"

tar -zxvf videograin_data.tar.gz

Extract the archive and place its contents in the ./annotator/ckpts directory.
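
Before moving on, it can help to confirm that the installation steps left the environment in the expected state. The sketch below is a minimal check and is not part of the repository: it assumes the package names from the commands above (torch, torchvision, xformers) and the ./annotator/ckpts directory from this step, and the helper names are hypothetical.

```python
import importlib.util
from pathlib import Path

# Packages installed in the steps above; xformers is optional.
REQUIRED = ["torch", "torchvision"]
OPTIONAL = ["xformers"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def has_annotator_weights(ckpt_dir="./annotator/ckpts"):
    """True if the checkpoint directory exists and contains at least one entry."""
    path = Path(ckpt_dir)
    return path.is_dir() and any(path.iterdir())


if __name__ == "__main__":
    print("missing required:", missing_packages(REQUIRED))
    print("missing optional:", missing_packages(OPTIONAL))
    print("annotator weights present:", has_annotator_weights())
```

The check only looks for the presence of packages and files; it does not verify versions or the integrity of the downloaded weights.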

Usage

After the installation is completed, users can run VideoGrain for video editing via command line. The following is the operation flow of the main functions:

1. Multi-granularity video editing

- Preparing the input file

Place the video file to be edited in the project directory (e.g. ./input_video.mp4). Common formats such as MP4 and AVI are supported.

- Preparing the text prompt

Specify editing instructions in the script, for example:

prompt = "Replace the car in the video with a Porsche"

Supported edits include category replacement (e.g. "car" to "Porsche"), instance modification (e.g. a specific character to "Iron Man"), and local adjustments (e.g. a hand motion to "waving").

- Run the edit command

Enter the following in the terminal:

python edit_video.py --input ./input_video.mp4 --prompt "Replace the car in the video with a Porsche" --output ./output_video.mp4

After editing, the result is saved to ./output_video.mp4.
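
When several videos need the same kind of edit, the command can also be launched from Python. The following is a minimal sketch that assumes the edit_video.py script and the --input, --prompt, and --output flags exactly as shown in this article; the function names are hypothetical and nothing here comes from the repository itself.

```python
import subprocess
import sys


def build_edit_command(input_path, prompt, output_path, script="edit_video.py"):
    """Assemble the argument list for the edit command described above."""
    return [
        sys.executable, script,
        "--input", input_path,
        "--prompt", prompt,
        "--output", output_path,
    ]


def run_edit(input_path, prompt, output_path):
    """Run the edit and raise CalledProcessError if the script fails."""
    cmd = build_edit_command(input_path, prompt, output_path)
    subprocess.run(cmd, check=True)
    return output_path
```

Passing the arguments as a list instead of a single shell string avoids quoting problems when a prompt contains spaces or quotation marks.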

2. Zero-shot editing

- Direct editing without training

VideoGrain's core strength is its zero-shot capability. Users only need to provide a video and a prompt; no additional training is required. Example:

python edit_video.py --input ./sample_video.mp4 --prompt "Replace the dog with a cat" --output ./edited_video.mp4

- Adjusting parameters

The --strength parameter controls the editing strength (0.0-1.0, default 0.8), for example:

python edit_video.py --input ./sample_video.mp4 --prompt "Replace dog with cat" --strength 0.6
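
The article does not say how --strength is applied internally. In many diffusion editing pipelines, for example the image-to-image pipelines in diffusers, a strength value decides what fraction of the denoising schedule is actually run, so a lower value stays closer to the source. Whether VideoGrain follows the same convention is an assumption; the sketch below only illustrates that common convention, and the function name is hypothetical.

```python
def denoising_steps_for_strength(num_inference_steps, strength):
    """Steps actually run under the img2img-style convention (an assumption here)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    # A strength of 1.0 runs the full schedule; 0.0 leaves the input untouched.
    return min(int(num_inference_steps * strength), num_inference_steps)


if __name__ == "__main__":
    for s in (0.4, 0.6, 0.8):
        print(s, denoising_steps_for_strength(50, s))
```

Under this reading, comparing a few strength values on a short clip is a cheap way to find the point where the edit is visible but the original motion and layout are still preserved.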

3. Spatio-temporal attention modulation

- Enhanced text-to-region control

VideoGrain automatically modulates cross-attention so that each prompt affects only its target region. For example, if the prompt is "Change the character's clothing to red", unrelated background areas are not affected.

- Improved feature separation

The self-attention modulation strengthens feature consistency within each region and reduces interference between regions. No manual adjustment is needed; it takes effect automatically at runtime.
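
To make the cross-attention idea more concrete, here is a heavily simplified illustration in plain Python. It is not the VideoGrain implementation: the additive bias, the `boost` constant, and the region bookkeeping are all assumptions chosen to show the general principle of raising attention between a pixel and the text tokens that describe its region while lowering it for tokens that describe other regions.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def modulate_cross_attention(logits, pixel_region, token_region, boost=2.0):
    """Bias pixel-to-token scores by region membership, then normalize.

    logits[i][j]    raw score of pixel i for text token j
    pixel_region[i] region id that pixel i belongs to
    token_region[j] region id that token j describes, or None if shared
    boost           hypothetical bias strength (not a value from the paper)
    """
    weights = []
    for i, row in enumerate(logits):
        biased = []
        for j, score in enumerate(row):
            if token_region[j] is None:
                biased.append(score)          # shared token: leave unchanged
            elif token_region[j] == pixel_region[i]:
                biased.append(score + boost)  # matching region: amplify
            else:
                biased.append(score - boost)  # other region: suppress
        weights.append(softmax(biased))
    return weights


if __name__ == "__main__":
    # Two pixels (one per region) and two tokens (one per region).
    attn = modulate_cross_attention(
        logits=[[0.0, 0.0], [0.0, 0.0]],
        pixel_region=[0, 1],
        token_region=[0, 1],
    )
    for row in attn:
        print([round(w, 3) for w in row])
```

The same masking idea can be applied to self-attention by treating key pixels instead of text tokens as the columns, so that pixels attend mostly to other pixels in their own region.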

Precautions

- Hardware requirements

An NVIDIA GPU (e.g. A100 or RTX 3090) with at least 12 GB of video memory is recommended. Running on the CPU may be considerably slower.

- Prompt suggestions

Prompts should be specific and clear: "Replace the character on the left with a robot" works better than the vague "Edit the character".

- Debugging and logging

If something goes wrong, check the ./logs directory, or append --verbose to the command to view detailed output.
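
The hardware recommendation above can be checked before starting a long edit. The sketch below assumes PyTorch was installed as described earlier and uses the 12 GB figure from this article as the threshold; the helper names are hypothetical.

```python
def has_enough_vram(total_bytes, required_gb=12):
    """Compare a device's total memory against the recommended minimum."""
    return total_bytes >= required_gb * 1024 ** 3


def describe_gpu():
    """Return a one-line summary of GPU 0, or a note if none is usable."""
    try:
        import torch
    except (ImportError, OSError):
        return "PyTorch could not be imported"
    if not torch.cuda.is_available():
        return "No CUDA device available"
    props = torch.cuda.get_device_properties(0)
    verdict = "OK" if has_enough_vram(props.total_memory) else "below the recommended 12 GB"
    return f"{props.name}: {props.total_memory / 1024 ** 3:.1f} GB ({verdict})"


if __name__ == "__main__":
    print(describe_gpu())
```

Total memory is only an upper bound; other processes on the same GPU reduce what is actually available to the edit.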

Featured Functions

- Multi-granularity editing in action

Suppose the user wants to replace "bike" with "motorcycle" in a video. After the video path and prompt are entered, VideoGrain recognizes all the bikes and replaces them with motorcycles, keeping the motion and background consistent.

- Open source extensions

Users can modify the model parameters in edit_video.py, or build new features on the diffusers framework, such as support for higher resolutions or more edit types.

- Community support

The GitHub repository provides an issue board where users can report problems or look up solutions that others have found.

With the above steps, users can quickly get started with VideoGrain and complete video editing tasks ranging from simple replacements to complex local adjustments.
