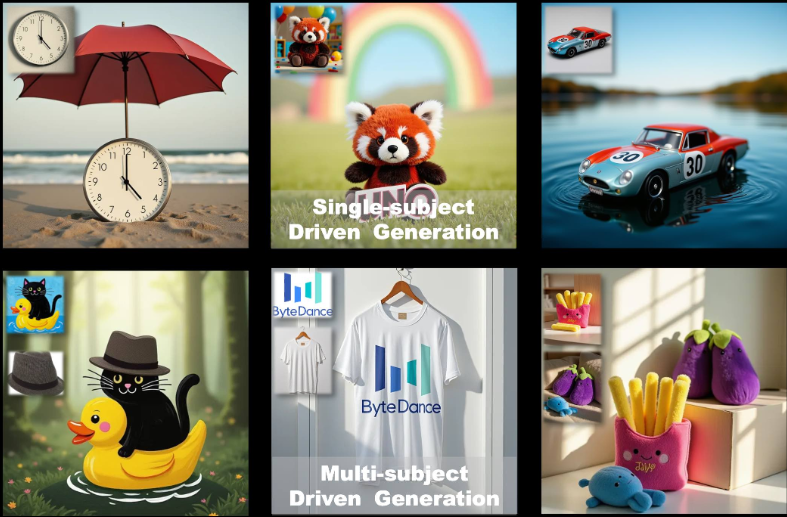

UNO: A Customized Image Generation Tool for Single-Subject and Multi-Subject Images (Suitable for E-commerce Graphics)

General Introduction

UNO is an open-source image generation framework developed by ByteDance's Intelligent Creation Team. Built on the FLUX.1 model, it focuses on customized single-subject and multi-subject image generation through "less-to-more" generalization. UNO addresses the difficulty of scaling up data and keeping subjects consistent in multi-subject scenarios by combining the in-context generation capability of the Diffusion Transformer (DiT) with a highly consistent data synthesis pipeline. Users can generate high-quality images from text descriptions and reference images, which makes UNO applicable to personalized image creation, virtual character design, and similar scenarios. The project code is licensed under Apache 2.0 and the model weights under CC BY-NC 4.0, so it is suited to academic research and non-commercial use.

Function List

- Single-subject image generation: generates an image that matches the text description from a single reference image while preserving the subject's characteristics.

- Multi-subject image generation: generates multiple specific subjects in the same scene while keeping each subject's features distinct.

- In-context generation: uses the in-context learning capability of the Diffusion Transformer to generate highly consistent images.

- Low-memory optimization: supports an fp8 mode with a peak GPU memory usage of about 16 GB, making it usable on consumer GPUs.

- Model fine-tuning: provides pre-training and multi-stage training strategies that support iterative training starting from a text-to-image model.

- Open source: training code, inference code, and model weights are all released, making it easy for researchers to extend the work.

How to Use

Installation process

To use UNO, you need to install and configure the dependencies in your local environment. Here are the detailed installation steps for Python 3.10 through 3.12.

- Creating a Virtual Environment

First, create a separate Python virtual environment to avoid dependency conflicts. With the built-in `venv` module, run `python -m venv uno_env`, then activate it with `source uno_env/bin/activate` (Linux/macOS) or `uno_env\Scripts\activate` (Windows). Alternatively, use Conda: `conda create -n uno_env python=3.10 -y`, then `conda activate uno_env`.

- Installing PyTorch

If you are using an AMD GPU, an NVIDIA RTX 50 series card, or macOS MPS, you will need to install the appropriate version of PyTorch manually. Refer to the PyTorch website (https://pytorch.org/) to select the correct version for your hardware, for example: `pip install torch torchvision torchaudio`.

- Installing UNO Dependencies

Clone the UNO repository and install the dependencies: run `git clone https://github.com/bytedance/UNO.git` and `cd UNO`, then either `pip install -e .` (inference only) or `pip install -e ".[train]"` (inference and training).

Note: Ensure that the dependencies in `requirements.txt` are installed correctly. If you run into problems, check for missing system libraries (such as `ffmpeg`) and install them with `conda install -c conda-forge ffmpeg`.

- Download Model Checkpoints

UNO relies on the FLUX.1-dev model and associated checkpoints, which can be obtained in two ways:

- Automatic download: when you run the inference scripts, the checkpoints are downloaded automatically via `hf_hub_download` to the default path (`~/.cache/huggingface`).
- Manual download: use the Hugging Face CLI to download the models:
  - `huggingface-cli download black-forest-labs/FLUX.1-dev`
  - `huggingface-cli download xlabs-ai/xflux_text_encoders`
  - `huggingface-cli download openai/clip-vit-large-patch14`
  - `huggingface-cli download bytedance-research/UNO`

  After downloading, place the models in the specified directories (e.g. `models/unet` and `models/loras`).

- Verify Installation

After the installation is complete, run `python -c "import torch; print(torch.cuda.is_available())"` to check that the environment is configured correctly. If it prints `True`, the GPU environment has been configured successfully.
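The one-liner above only checks CUDA. On macOS, `torch.cuda.is_available()` returns `False` even when the MPS backend works, and the dependency step also mentions `ffmpeg`, so a slightly broader check can help. The Python sketch below reports both, and also shows how the four checkpoint repositories from the download step could be fetched from Python with `huggingface_hub.snapshot_download` instead of the CLI. The function names are our own, not UNO's. Downloads are off by default (`dry_run=True`) because `snapshot_download` fetches every file in a repository (tens of gigabytes for FLUX.1-dev), and because FLUX.1-dev is a gated repository that may require accepting its license and running `huggingface-cli login` first.

```python
"""Post-install sanity check for a UNO environment (illustrative sketch)."""
import shutil
from typing import Dict, List

# Repositories named in the huggingface-cli commands of the checkpoint step.
UNO_REPOS: List[str] = [
    "black-forest-labs/FLUX.1-dev",
    "xlabs-ai/xflux_text_encoders",
    "openai/clip-vit-large-patch14",
    "bytedance-research/UNO",
]


def check_environment() -> Dict[str, object]:
    """Report the PyTorch version, available accelerators and whether ffmpeg is on PATH."""
    report: Dict[str, object] = {"torch": None, "cuda": False, "mps": False}
    try:
        import torch  # imported lazily so the check still runs without PyTorch
    except ImportError:
        pass
    else:
        report["torch"] = torch.__version__
        report["cuda"] = torch.cuda.is_available()  # NVIDIA, or AMD with a ROCm build
        mps = getattr(torch.backends, "mps", None)  # Apple-silicon backend, PyTorch >= 1.12
        report["mps"] = bool(mps is not None and mps.is_available())
    report["ffmpeg"] = shutil.which("ffmpeg") is not None
    return report


def download_checkpoints(repos: List[str], dry_run: bool = True) -> Dict[str, str]:
    """Map each repo id to its local snapshot path, or to a placeholder in dry-run mode."""
    if dry_run:
        # Only report what would be fetched; nothing touches the network.
        return {repo_id: f"<would download {repo_id}>" for repo_id in repos}
    from huggingface_hub import snapshot_download  # needed only for real downloads

    # Each call fetches the full repository into the default Hugging Face cache.
    return {repo_id: snapshot_download(repo_id=repo_id) for repo_id in repos}


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key:>6}: {value}")
    for repo_id, path in download_checkpoints(UNO_REPOS).items():
        print(f"{repo_id}: {path}")
```

Pass `dry_run=False` only once you are logged in and have enough disk space.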

Usage

UNO provides a Gradio interface (`app.py`) for interactive image generation, as well as an inference script (`inference.py`) that can be run from the command line. The main workflows are described below.

Image generation through the Gradio interface

- Launching the Gradio Application

Ensure that Gradio is installed (it is included in `requirements.txt`), then start the interface with `python app.py`. Once it has started, open the local page in your browser (usually `http://127.0.0.1:7860`).

- Input Parameters

In the Gradio interface:

- Enter a text prompt (`prompt`) describing the scene you want to generate, e.g. "a cat and a dog playing in the park".
- Upload 1 to 4 reference images (`image_ref1` to `image_ref4`); these define the appearance of the subjects.
- Set the seed (`seed`) to control the randomness of the results; the default is 3407.
- Select the model type (`flux-dev`, `flux-dev-fp8`, or `flux-schnell`); `flux-dev-fp8` is recommended to reduce GPU memory requirements.

- Generating images

Click the Generate button, and UNO will generate results from the prompt and the reference images. Generation time depends on the hardware; on consumer GPUs such as the RTX 3090 it typically ranges from several seconds to several minutes.
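The Gradio inputs above come with a few constraints: 1 to 4 reference images, a default seed of 3407, and three model types. The Python sketch below captures them in a small dataclass, which can be handy when preparing batches of requests in a script. `GenerationRequest` and its field names are hypothetical and not part of UNO's code; the `flux-dev-fp8` default follows the recommendation above rather than the interface's actual default.

```python
"""Hypothetical container mirroring the Gradio inputs described above."""
from dataclasses import dataclass, field
from typing import List

# Model types offered in the Gradio interface.
MODEL_TYPES = ("flux-dev", "flux-dev-fp8", "flux-schnell")
MAX_REFERENCE_IMAGES = 4


@dataclass
class GenerationRequest:
    prompt: str
    image_refs: List[str] = field(default_factory=list)  # paths for image_ref1..image_ref4
    seed: int = 3407  # default seed shown in the interface
    model_type: str = "flux-dev-fp8"  # recommended to reduce GPU memory use

    def __post_init__(self) -> None:
        # Reject inputs that fall outside the ranges described in the text.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if not 1 <= len(self.image_refs) <= MAX_REFERENCE_IMAGES:
            raise ValueError(
                f"expected 1-{MAX_REFERENCE_IMAGES} reference images, got {len(self.image_refs)}"
            )
        if self.model_type not in MODEL_TYPES:
            raise ValueError(f"unknown model_type {self.model_type!r}; choose from {MODEL_TYPES}")


if __name__ == "__main__":
    req = GenerationRequest("a cat and a dog playing in the park", ["cat.jpg", "dog.jpg"])
    print(req)
```

Validating up front means a bad request fails immediately instead of after the model has been loaded.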

Running inference from the command line

- Prepare the inputs

Create a configuration file containing the prompt and reference image paths, or specify the parameters directly on the command line. For example:

`python inference.py --prompt "A man in a suit, standing in a city" --image_paths "./assets/examples/man.jpg" --model_type "flux-dev-fp8" --save_path "./output"`

- Description of common parameters

  - `--prompt`: text description defining the content of the generated image.
  - `--image_paths`: path(s) to the reference images; multiple images are supported.
  - `--model_type`: model type; `flux-dev-fp8` is recommended.
  - `--offload`: enables offloading to reduce GPU memory usage.
  - `--num_steps`: number of diffusion steps (default 25); affects generation quality.
  - `--guidance`: guidance scale (default 4); controls how closely the image follows the text prompt.

- View Results

Generated images are saved to the directory specified by `--save_path` (e.g. `output/inference`).
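The command-line steps mention keeping the prompt and reference paths in a configuration file. One way to do that, sketched below in Python, is to store the parameters as JSON and turn them into an `inference.py` command line. The JSON layout (keys named after the flags) and `build_inference_command` are our own assumptions, not a format UNO defines. We also assume that several image paths follow a single `--image_paths` flag and that `--offload` is a bare on/off switch, so check the script's `--help` output before relying on this.

```python
"""Sketch: turn a small JSON config into an inference.py command line."""
import json
import shlex
import subprocess
import sys
from typing import Any, Dict, List

# Valued flags from the parameter list (plus --width/--height from the FAQ).
VALUE_FLAGS = ("model_type", "num_steps", "guidance", "width", "height", "save_path")


def build_inference_command(config: Dict[str, Any]) -> List[str]:
    """Build argv for inference.py from a dict whose keys match the flag names."""
    cmd = [sys.executable, "inference.py", "--prompt", str(config["prompt"])]
    image_paths = config.get("image_paths", [])
    if isinstance(image_paths, str):  # allow a single path given as a plain string
        image_paths = [image_paths]
    if image_paths:
        # Assumption: multiple paths follow one --image_paths flag, space-separated.
        cmd += ["--image_paths", *image_paths]
    for key in VALUE_FLAGS:
        if key in config:
            cmd += [f"--{key}", str(config[key])]
    if config.get("offload"):
        cmd.append("--offload")  # assumption: a bare on/off switch
    return cmd


if __name__ == "__main__":
    config = json.loads("""{
        "prompt": "A man in a suit, standing in a city",
        "image_paths": ["./assets/examples/man.jpg"],
        "model_type": "flux-dev-fp8",
        "save_path": "./output"
    }""")
    cmd = build_inference_command(config)
    print(shlex.join(cmd))  # inspect the command first
    if "--run" in sys.argv:  # then execute it from the UNO repository root
        subprocess.run(cmd, check=True)
```

Run it from the root of the UNO repository; the command is only printed unless `--run` is passed.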

Key Features in Practice

Single-subject generation

- Procedure:
  - Upload an image of the subject (e.g. a photo of a person).
  - Enter a text prompt describing the target scene (e.g. "This person is walking on the beach").
  - Set the reference image resolution to 512 (the default).
  - Generate the image; UNO preserves the subject's features (e.g. face, clothing).
- Caveat: use a clear reference image in which the subject's features are well defined; avoid blurry or low-quality images.
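The text does not spell out what "reference image resolution" means in practice. One plausible reading, assumed in the Python sketch below, is that the reference image is resized so its longer side equals the chosen value (512 here, 320 in the multi-subject section) while keeping its aspect ratio. Rounding each edge down to a multiple of 16 is a further assumption, based on FLUX-style models working on a token grid 16 times smaller than the image; `fit_long_side` is a hypothetical helper, not a UNO function.

```python
"""Sketch: size a reference image so its longer side matches the chosen resolution."""
from typing import Tuple


def fit_long_side(width: int, height: int, long_side: int = 512, multiple: int = 16) -> Tuple[int, int]:
    """Scale (width, height) so the longer edge is long_side, keeping the aspect ratio.

    Each edge is then rounded down to a multiple of `multiple` (an assumption,
    not a documented UNO requirement).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    longest = max(width, height)

    def snap(edge: int) -> int:
        scaled = edge * long_side // longest  # integer math avoids float round-off
        return max(multiple, scaled // multiple * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    print(fit_long_side(1024, 768))       # single-subject setting, long side 512
    print(fit_long_side(1024, 768, 320))  # multi-subject setting, long side 320
```

For a 1024x768 photo this gives 512x384 at the single-subject setting and 320x240 at the multi-subject setting, which shows how much smaller each reference becomes when several are used.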

Multi-subject generation

- Procedure:
  - Upload multiple reference images (e.g. a photo of a cat and a photo of a dog).
  - Enter a text prompt describing the multi-subject scene (e.g. "The cat and the dog are playing in the grass.").
  - Set the reference image resolution to 320 (the multi-subject default).
  - Generate the image; UNO uses UnoPE (Universal Rotary Position Embedding) to keep the subjects' features from being confused.
- Caveat: use no more than 4 reference images, and make sure each subject is clearly distinguishable in its image.
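The text attributes UNO's resistance to feature confusion to UnoPE. As we understand the UNO paper, the core idea is that each reference image's 2-D position indices are offset diagonally, starting where the previous image's grid ends in both dimensions, so reference tokens never share a position index with the generated image or with each other. The pure-Python sketch below illustrates only that indexing idea. It is not UNO's implementation, it ignores the text tokens and the rotary embedding itself, and `diagonal_offsets` and `position_ids` are hypothetical names.

```python
"""Illustrative sketch of diagonal position-index offsets for reference images."""
from typing import List, Tuple

Grid = Tuple[int, int]  # (height, width) of an image's token grid


def diagonal_offsets(target: Grid, refs: List[Grid]) -> List[Tuple[int, int]]:
    """Return the (row, col) offset at which each reference grid's indices start.

    The target (noisy) image occupies rows [0, h) and cols [0, w). Each reference
    grid starts where the previous grid ends in both dimensions.
    """
    row_shift, col_shift = target
    offsets = []
    for ref_h, ref_w in refs:
        offsets.append((row_shift, col_shift))
        row_shift += ref_h
        col_shift += ref_w
    return offsets


def position_ids(shape: Grid, offset: Tuple[int, int]) -> List[Tuple[int, int]]:
    """Enumerate the 2-D position indices of a grid placed at the given offset."""
    h, w = shape
    r0, c0 = offset
    return [(r0 + r, c0 + c) for r in range(h) for c in range(w)]


if __name__ == "__main__":
    target = (32, 32)            # e.g. a 512x512 image as a 32x32 token grid
    refs = [(20, 20), (15, 20)]  # two references with a 320-pixel long side
    offsets = diagonal_offsets(target, refs)
    print(offsets)
    all_ids = position_ids(target, (0, 0))
    for shape, off in zip(refs, offsets):
        all_ids += position_ids(shape, off)
    print(len(all_ids) == len(set(all_ids)))  # no two tokens share a position index
```

Because every grid occupies its own band of rows and columns, the model can tell from position alone which image a token came from, which is the property that helps keep subjects apart.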

Low memory optimization

- Use the `flux-dev-fp8` model to bring the GPU memory footprint down to about 16 GB.
- Enable the `--offload` parameter to offload part of the work to the CPU, further reducing GPU memory requirements.
- These options target users of consumer GPUs such as the RTX 3090 or RTX 4090.
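A rough count shows why fp8 helps so much. FLUX.1's transformer has about 12 billion parameters, so its weights alone need about 24 GB when stored in 16-bit bf16 (2 bytes per parameter) but about 12 GB in fp8 (1 byte per parameter). The Python sketch below does only this arithmetic. It ignores activations and the other components (text encoders, VAE), so it is a lower bound for the transformer weights alone, not a prediction of peak usage.

```python
"""Back-of-the-envelope estimate of the memory needed just for model weights."""

# Storage cost per parameter: 16-bit bf16 versus 8-bit fp8.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    flux_transformer_params = 12e9  # FLUX.1 transformer: about 12 billion parameters
    for dtype in ("bf16", "fp8"):
        gb = weight_memory_gb(flux_transformer_params, dtype)
        print(f"{dtype}: ~{gb:.0f} GB of weights")
```

Halving the bytes per parameter halves the weight footprint, which is why the fp8 variant fits on 24 GB cards such as the RTX 3090 and 4090 with room left for everything else.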

Frequently Asked Questions

- Insufficient GPU memory: reduce the resolution (`--width` and `--height`) to 512x512, or use the `flux-dev-fp8` model.
- Installation failure: check that the PyTorch version is compatible with your GPU, and manually install a specific version if necessary.
- Unsatisfactory results: increase `--guidance` (to 5 or 6) or `--num_steps` (to 50) to improve image quality.
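The FAQ's advice boils down to two sets of parameter changes that pull in opposite directions. The Python sketch below applies them to a plain dict of parameters keyed by the command-line flag names. The helper names are hypothetical, and the base values (25 steps, guidance 4) are the defaults quoted in the command-line section.

```python
"""Sketch: apply the FAQ's suggested tweaks to a dict of inference parameters."""
from typing import Any, Dict

# Defaults quoted in the command-line section: 25 steps, guidance 4.
DEFAULTS: Dict[str, Any] = {"num_steps": 25, "guidance": 4.0}


def tweak_for_memory(params: Dict[str, Any]) -> Dict[str, Any]:
    """Lower the resolution to 512x512 and switch to the fp8 model."""
    return {**params, "width": 512, "height": 512, "model_type": "flux-dev-fp8"}


def tweak_for_quality(params: Dict[str, Any], guidance: float = 5.0, num_steps: int = 50) -> Dict[str, Any]:
    """Raise the guidance scale (the FAQ suggests 5 or 6) and the number of diffusion steps."""
    return {**params, "guidance": guidance, "num_steps": num_steps}


if __name__ == "__main__":
    base = dict(DEFAULTS, prompt="The cat and the dog are playing in the grass.")
    print(tweak_for_memory(base))
    print(tweak_for_quality(base, guidance=6.0))
```

Each diffusion step is another pass through the model, so raising `num_steps` from 25 to 50 should roughly double sampling time. Both helpers return new dicts, so the original parameters stay unchanged.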

Application Scenarios

- Personalized image creation

Users can upload their own photos and combine them with text descriptions to generate images of specific scenes. For example, uploading a selfie can produce an image of "yourself walking in a futuristic city", suitable for social media content.

- Virtual Character Design

Game developers and animators can upload character sketches to generate images of the character in different scenes while keeping its appearance consistent, which suits manga, animation, and game development.

- Advertising & Marketing

Marketing teams can upload product or brand mascot images to generate varied advertising scenes (e.g. a product shown in different seasons), increasing the diversity of their visual content.

- Academic Research

Researchers can use UNO's open-source code and training pipeline to explore applying diffusion models to multi-subject generation, validate new algorithms, or optimize existing models.

QA

- What hardware does UNO support?

UNO recommends NVIDIA GPUs (such as the RTX 3090 or 4090) with at least 16 GB of GPU memory. AMD GPUs and macOS MPS are supported, but PyTorch must be installed manually for them.

- How can I improve the quality of generated images?

Increase the number of diffusion steps (set `--num_steps` to 50) or raise the guidance scale (set `--guidance` to 5-6). Also make sure the reference images are clear and the text prompts are specific.

- Does UNO support commercial use?

The model weights are licensed under CC BY-NC 4.0 and are for non-commercial use only. Commercial use is subject to the terms of the original FLUX.1-dev license.

- How do I deal with feature confusion in multi-subject generation?

UNO reduces confusion through UnoPE. In addition, make sure each reference image shows its subject's features clearly, and lower the reference resolution appropriately (e.g. to 320) to improve results.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
