TreeGPT: AI Chat Interface for Visualizing Tree Conversations

General Introduction

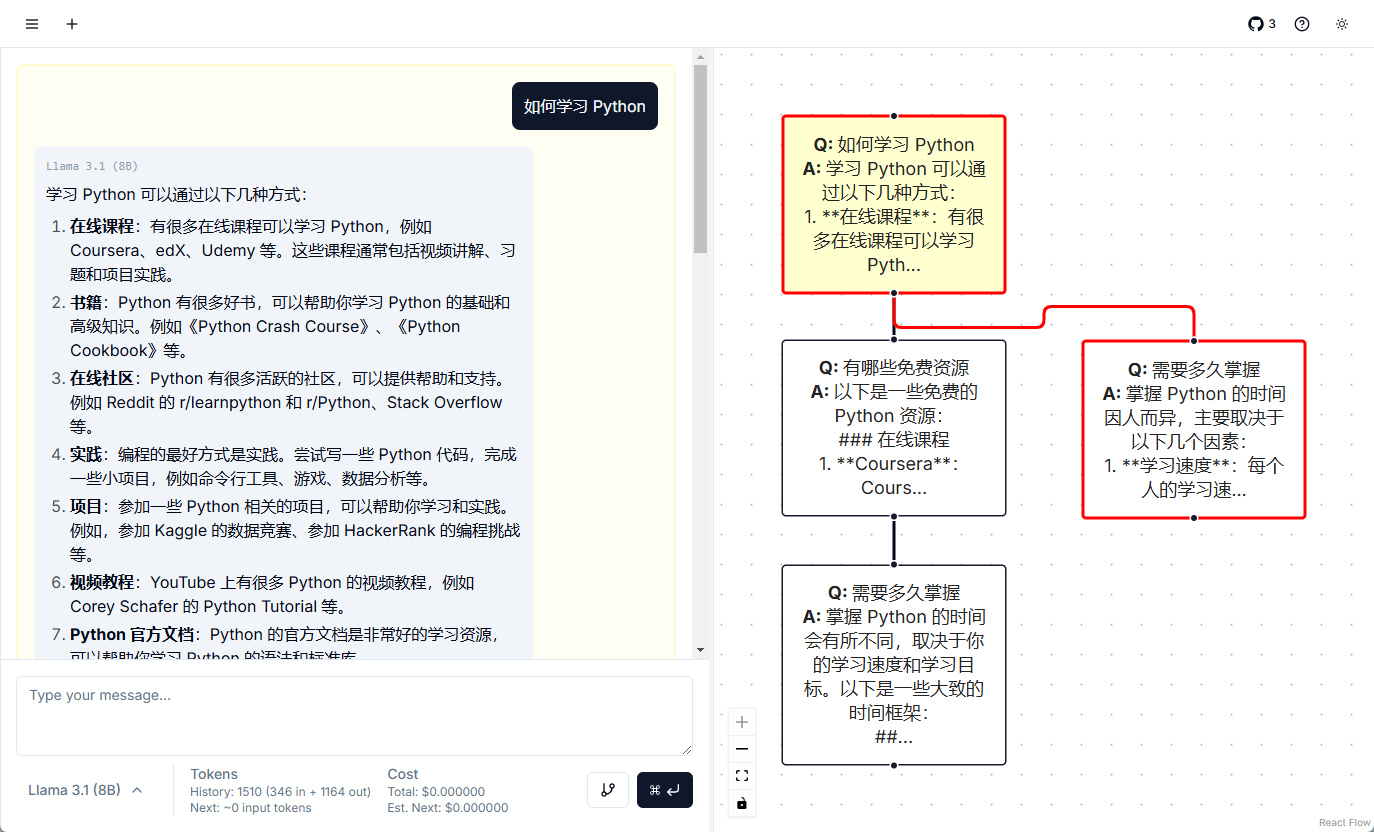

TreeGPT is an open-source chat application built on Next.js. It visualizes conversations with large language models (LLMs, such as GPT models) as a tree (a directed acyclic graph, DAG) rather than as a traditional linear chat, with the aim of making conversations faster and easier to navigate. The project is hosted at https://github.com/jamesmoore24/treegpt. You can run it locally by cloning the source code and configuring an OpenAI API key (it is then served at http://localhost:3000), or you can visit treegpt.app. TreeGPT addresses three problems with traditional chat interfaces: branching conversations are hard to manage, searching is inconvenient, and token usage is opaque. It is suited to developers, researchers, and other users who need efficient interaction.

Feature List

- Tree Dialog Visualization: Presentation of chats in an interactive tree view with support for branching navigation.

- Natural language search: Find past conversations by describing them; results are refined using embedded metadata.

- Real-time token management: Tracks token usage, provides cost estimation and output control.

- Multi-model support: Connect to LLM providers such as OpenAI, Anthropic, etc. for intelligent model selection.

- Keyboard shortcuts: Provides Vim-like key bindings to quickly switch modes and edit nodes.
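To make the tree idea concrete, here is a minimal sketch of how a branching conversation could be stored. The `ChatNode` shape and the `ConversationTree` class are assumptions made for illustration and are not taken from the TreeGPT source. The point it shows is that every node keeps a link to its parent, so the path from the root to any node reads like one ordinary linear chat.

```typescript
// Hypothetical node shape; TreeGPT's real data model may differ.
interface ChatNode {
  id: number;
  question: string;
  answer: string;
  parentId: number | null; // null marks the root
  childIds: number[];
}

class ConversationTree {
  private nodes = new Map<number, ChatNode>();
  private nextId = 1;

  // Add a question/answer pair under parentId (or as a root when null).
  addNode(question: string, answer: string, parentId: number | null = null): ChatNode {
    const node: ChatNode = { id: this.nextId++, question, answer, parentId, childIds: [] };
    if (parentId !== null) {
      const parentNode = this.nodes.get(parentId);
      if (!parentNode) throw new Error(`Unknown parent node: ${parentId}`);
      parentNode.childIds.push(node.id);
    }
    this.nodes.set(node.id, node);
    return node;
  }

  // Walk from a node up to the root, then reverse, so the result reads
  // like the linear chat history of that single branch.
  pathFromRoot(id: number): ChatNode[] {
    const path: ChatNode[] = [];
    let current = this.nodes.get(id);
    while (current) {
      path.push(current);
      current = current.parentId === null ? undefined : this.nodes.get(current.parentId);
    }
    return path.reverse();
  }
}

const tree = new ConversationTree();
const rootNode = tree.addNode("How to optimize code", "(model answer)");
const toolsNode = tree.addNode("Specific tool", "(model answer)", rootNode.id);
// A second, made-up branch from the same node.
tree.addNode("Another follow-up", "(model answer)", rootNode.id);

// ["How to optimize code", "Specific tool"]
const branchQuestions = tree.pathFromRoot(toolsNode.id).map((n) => n.question);
```

Storing only parent and child ids keeps each node small, and the branch history can be rebuilt on demand instead of being copied into every node.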

Usage Guide

TreeGPT is a Next.js application that needs to be built and run locally. Below is a detailed installation and usage guide to help users deploy it from scratch and master its core features.

Installation process

- Environment preparation

  - Install Node.js: Go to nodejs.org, then download and install v18 or later. After installation, run `node -v` to confirm the version.

  - Install npm or yarn: Node.js comes with npm. If you prefer yarn, install it by running `npm install -g yarn`.

- Clone the repository

  - Open a terminal and run `git clone https://github.com/jamesmoore24/treegpt.git`, then `cd treegpt`.

  - This downloads the TreeGPT source code and moves into the project directory.

- Install dependencies

  - In the terminal, run `npm install`, or with yarn, `yarn install`.

  - Wait for the dependency installation to complete (it may take a few minutes, depending on your network).

- Configure the OpenAI API key

  - In the project root directory, create a `.env` file containing the line `OPENAI_API_KEY=your_api_key`.

  - Get the key: Go to the OpenAI platform (platform.openai.com), generate a new key on the API Keys page, copy it, and use it to replace `your_api_key`.

  - Save the file and make sure the key is not leaked.
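A common slip at this step is leaving the placeholder in `.env` or misspelling the variable name. The sketch below is an optional check on the file's text and is not part of TreeGPT. Next.js reads `.env` files on its own, so the app does not need this parser. The helper names `parseDotEnv` and `checkOpenAiKey` are hypothetical, and the placeholder string is passed in, so use whatever text your own template contains.

```typescript
// Parse KEY=VALUE lines from the text of a .env file.
// Blank lines and lines starting with # are skipped.
function parseDotEnv(text: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    result[key] = line.slice(eq + 1).trim();
  }
  return result;
}

// Return a description of the problem, or null when the key looks usable.
// This only inspects the text; it does not contact OpenAI.
function checkOpenAiKey(
  env: Record<string, string | undefined>,
  placeholder: string
): string | null {
  const key = env["OPENAI_API_KEY"];
  if (key === undefined || key === "") return "OPENAI_API_KEY is missing";
  if (key === placeholder) return "OPENAI_API_KEY still holds the placeholder";
  return null;
}

// Example input: a .env file that was created but never filled in.
const sampleEnv = parseDotEnv("# local settings\nOPENAI_API_KEY=your_api_key\n");
const problem = checkOpenAiKey(sampleEnv, "your_api_key");
// problem === "OPENAI_API_KEY still holds the placeholder"
```

In a Node.js script, the same check could be run against `process.env` instead of parsed file text.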

Running the application

- Start the development server

  - In the terminal, run `npm run dev`, or with yarn, `yarn dev`.

  - On startup, the terminal displays an address such as http://localhost:3000.

- Open the application

  - Open your browser and go to http://localhost:3000 (or the address shown in the terminal).

  - Once the page loads, you will see the TreeGPT chat screen.

Using the Core Features

- Tree Dialog Visualization

  - Start chatting: Type a question in the input box (e.g. "How to optimize code") and press Enter; the answer appears as a node in the tree.

  - Create branches: Click any node and enter a new question (e.g. "Specific tool") to generate a child node.

  - Navigate the tree: Click a node with the mouse, or use the shortcut keys: [j] moves up, [1-9] selects a branch, and [r] returns to the root node.

  - View the overview: The interface provides a mini-map showing the complete dialog tree structure; hover over a node to preview its content.

- Natural language search

  - Press [/] to enter search mode and type a description (e.g. "algorithms discussed yesterday").

  - The system returns matching dialog nodes based on the embedded metadata.

- Real-time token management

  - The interface displays token usage and estimated cost for the current conversation.

  - The output length or context window size can be adjusted via the settings.

- Multi-model support

  - OpenAI is used by default. To switch to another provider (e.g. Anthropic), you need to configure the corresponding API key in the code (refer to the project documentation).

  - The system selects a model according to its built-in rules.

- Keyboard shortcuts

  - [``]: Switch between chat and view mode.

  - [e]: Edit the contents of the current node.

  - [dd]: Delete the current node and its subtree.

  - These commands mimic Vim operations and improve efficiency.
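The Vim-style bindings listed above include one two-key command, [dd], so a handler has to remember the first `d` until the next key arrives. The following is a minimal sketch of that idea. The `TreeAction` type and `KeyDispatcher` class are hypothetical names, and this is not TreeGPT's actual key-handling code. The mode-switch shortcut is omitted to keep the sketch short.

```typescript
// Actions the UI could react to; the names are illustrative.
type TreeAction =
  | { kind: "moveUp" }
  | { kind: "selectBranch"; index: number } // 1-based, as typed
  | { kind: "goToRoot" }
  | { kind: "search" }
  | { kind: "edit" }
  | { kind: "deleteSubtree" };

// Turns single key presses into actions. `dd` needs two presses, so the
// first `d` is remembered until the next key arrives.
class KeyDispatcher {
  private pendingD = false;

  press(key: string): TreeAction | null {
    if (this.pendingD) {
      this.pendingD = false;
      if (key === "d") return { kind: "deleteSubtree" };
      // Any other key cancels the pending `d` and is handled normally below.
    }
    if (key === "d") {
      this.pendingD = true;
      return null; // wait for the second key
    }
    if (key === "j") return { kind: "moveUp" };
    if (key === "r") return { kind: "goToRoot" };
    if (key === "/") return { kind: "search" };
    if (key === "e") return { kind: "edit" };
    if (/^[1-9]$/.test(key)) return { kind: "selectBranch", index: Number(key) };
    return null; // unbound key
  }
}

const dispatcher = new KeyDispatcher();
// j, dd, 3, then a lone d that is cancelled by e.
const actions = ["j", "d", "d", "3", "d", "e"].map((k) => dispatcher.press(k));
```

Returning plain action objects rather than calling UI code directly keeps the key logic easy to test on its own.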

Example Walkthrough

Let's say you want to discuss "Ways to Learn Python":

- Type "How to learn Python" and get an answer.

- Click on the Answer node and type "What are the free resources" to generate a branch.

- Press [j] to move up to the parent node, then enter "how long does it take to master" to form a new branch.

- The dialog tree is displayed as:

      How to learn Python
      ├── What are the free resources
      └── How long does it take to master

- Press [/] and search for "free resources" to quickly locate the relevant nodes.
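The walkthrough above can be reproduced in a few lines of code, as a rough sketch. The `TopicNode` type and both functions are made up for illustration. The search is a plain case-insensitive substring match that stands in for TreeGPT's metadata-based search, which is not reproduced here.

```typescript
interface TopicNode {
  text: string;
  children: TopicNode[];
}

// Render a tree with box-drawing characters, one node per line.
function renderTree(root: TopicNode): string {
  const lines: string[] = [root.text];
  const walk = (node: TopicNode, prefix: string): void => {
    node.children.forEach((child, i) => {
      const isLast = i === node.children.length - 1;
      lines.push(prefix + (isLast ? "└── " : "├── ") + child.text);
      // Deeper levels keep a vertical bar while siblings remain.
      walk(child, prefix + (isLast ? "    " : "│   "));
    });
  };
  walk(root, "");
  return lines.join("\n");
}

// Stand-in search: case-insensitive substring match over node text.
function searchTree(root: TopicNode, query: string): TopicNode[] {
  const needle = query.toLowerCase();
  const hits: TopicNode[] = [];
  const stack: TopicNode[] = [root];
  while (stack.length > 0) {
    const node = stack.pop() as TopicNode;
    if (node.text.toLowerCase().includes(needle)) hits.push(node);
    stack.push(...node.children);
  }
  return hits;
}

const python: TopicNode = {
  text: "How to learn Python",
  children: [
    { text: "What are the free resources", children: [] },
    { text: "How long does it take to master", children: [] },
  ],
};

const rendered = renderTree(python);
// How to learn Python
// ├── What are the free resources
// └── How long does it take to master

const matches = searchTree(python, "free resources"); // one hit: the first branch
```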

Caveats

- Network requirement: An Internet connection is required at runtime to access the LLM API.

- Key security: The `.env` file should not be uploaded to a public repository.

- Performance: When the dialog tree grows too large, periodically clean up unneeded nodes ([dd]).
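As an illustration of what cleaning up with [dd] involves, the sketch below removes a node together with everything beneath it and reports how many nodes were dropped. The `PrunableNode` type and the function names are assumptions for this example and do not describe TreeGPT's internals.

```typescript
interface PrunableNode {
  id: string;
  children: PrunableNode[];
}

// Count a node plus all of its descendants.
function countNodes(node: PrunableNode): number {
  return 1 + node.children.reduce((sum, child) => sum + countNodes(child), 0);
}

// Remove the node with targetId (and everything below it) from the tree.
// Returns how many nodes were removed; 0 means the id was not found.
// The root itself is never removed by this function.
function deleteSubtree(treeRoot: PrunableNode, targetId: string): number {
  const target = treeRoot.children.find((child) => child.id === targetId);
  if (target) {
    treeRoot.children = treeRoot.children.filter((child) => child !== target);
    return countNodes(target);
  }
  for (const child of treeRoot.children) {
    const removed = deleteSubtree(child, targetId);
    if (removed > 0) return removed;
  }
  return 0;
}

const demoTree: PrunableNode = {
  id: "root",
  children: [
    { id: "a", children: [{ id: "a1", children: [] }, { id: "a2", children: [] }] },
    { id: "b", children: [] },
  ],
};

const removedCount = deleteSubtree(demoTree, "a"); // 3: "a", "a1" and "a2"
const remaining = countNodes(demoTree); // 2: "root" and "b"
```

Reporting the number of removed nodes makes it easy to ask for confirmation before a large branch is discarded.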

With the above steps, users can easily build TreeGPT and experience its powerful tree dialog function locally.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
