ToolGen: Unified Tool Retrieval and Invocation through Generation

ToolGen is a framework for integrating tool knowledge directly into large-scale language models (LLMs), enabling seamless tool invocation and language generation by representing each tool as a unique token. It was developed by Renxi Wang et al. to improve the performance of tool retrieval and task completion.

- Tool tokenization: converts tools into unique tokens for easy model invocation.

- Tool call generation: the model generates tool calls and their parameters.

- Task completion: automates complex tasks through tool calls.

- Dataset support: provides rich datasets for model training and evaluation.

Abstract

As Large Language Models (LLMs) evolve, their inability to autonomously perform tasks by directly interacting with external tools becomes particularly evident. Traditional approaches supply tool descriptions as context input, which is limited by context length and requires a separate retrieval mechanism that is often inefficient. We propose ToolGen, a paradigm that integrates tool knowledge directly into the LLM's parameters by representing each tool as a unique token. This allows the LLM to generate tool calls and their parameters as part of next-token prediction, seamlessly blending tool invocation with language generation. Our framework allows LLMs to access and use a large number of tools without additional retrieval steps, significantly improving performance and scalability. Experimental results on more than 47,000 tools show that ToolGen not only achieves superior results in tool retrieval and autonomous task completion, but also lays the foundation for a new generation of AI agents that can adapt to tools across a wide range of domains. By fundamentally transforming tool retrieval into a generative process, ToolGen paves the way for more flexible, efficient, and autonomous AI systems. ToolGen extends the utility of LLMs by supporting end-to-end tool learning and provides opportunities for integration with other advanced techniques such as chain-of-thought reasoning and reinforcement learning.

1 Introduction

Large Language Models (LLMs) have demonstrated impressive capabilities in processing external inputs, performing operations, and autonomously accomplishing tasks (Gravitas, 2023; Qin et al. 2023; Yao et al. 2023; Shinn et al. 2023; Wu et al. 2024a; Liu et al. 2024). Among the various approaches to enable LLMs to interact with the external world, tool calls via APIs have become one of the most common and effective. However, as the number of tools increases into the tens of thousands, existing methods of tool retrieval and execution are difficult to scale efficiently.

In real-world scenarios, a common approach is to combine tool retrieval with tool execution, i.e., the retrieval model first filters out the relevant tools and then hands them over to the LLM for final selection and execution (Qin et al., 2023; Patil et al., 2023). While this combined approach is helpful when dealing with a large number of tools, there are obvious limitations: retrieval models often rely on small encoders that make it difficult to comprehensively capture the semantics of complex tools and queries, while separating retrieval from execution may lead to inefficiencies and stage bias during task completion.

Furthermore, LLMs and their tokenizers are pre-trained primarily on natural language data (Brown et al., 2020; Touvron et al., 2023) and have limited intrinsic knowledge of tool-related functionality. This knowledge gap leads to poor performance, especially when LLMs must rely on retrieved tool descriptions for decision making.

In this study, we introduce ToolGen, a new framework that integrates real-world tool knowledge directly into LLM parameters and transforms tool retrieval and execution into a unified generation task. Specifically, ToolGen makes more effective use of LLM's preexisting knowledge for tool retrieval and invocation by extending the LLM vocabulary to represent tools as specific virtual tokens and training the model to generate these tokens in a dialog context.

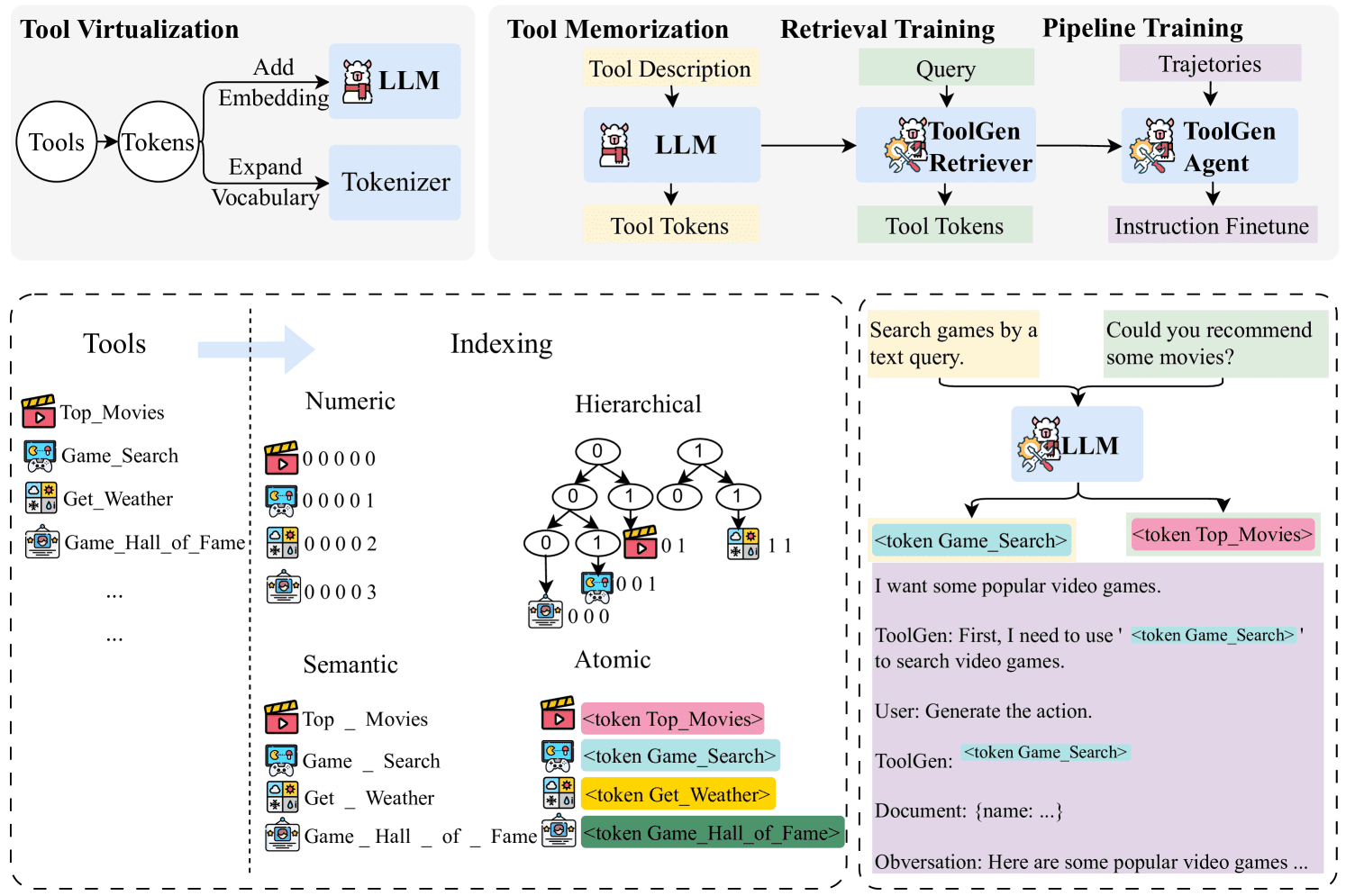

Specifically, each tool is represented as a unique virtual token in the LLM vocabulary. Starting from a pre-trained LLM, the ToolGen training process consists of three phases: tool memorization, retrieval training, and agent training. During tool memorization, the model associates each virtual tool token with its documentation. During retrieval training, the model learns to generate relevant tool tokens from user queries. Finally, in end-to-end agent tuning, the model is trained to act as an autonomous agent that generates plans and tools and determines the appropriate parameters for accomplishing tasks. By invoking tools and obtaining feedback from the external environment, the model can process user queries efficiently and in an integrated manner. Figure 1 shows how ToolGen compares to the traditional paradigm.

We validate ToolGen's superiority in two scenarios: a tool retrieval task where the model retrieves the correct tool for a given query, and an LLM-based agent task where the model accomplishes complex tasks involving real API calls. Utilizing a dataset of 47,000 real-world tools, ToolGen performs comparably to leading tool retrieval methods, but at significantly lower cost and with greater efficiency. In addition, it goes beyond the traditional tool learning paradigm, highlighting its potential for advancing more effective tool use systems.

Figure 1: Comparison of ToolGen with previous retrieval-based approaches. Previous approaches use retrievers to retrieve relevant tools by similarity matching and then place these tools into LLM prompts for selection. ToolGen performs tool retrieval by directly generating tool tokens and can accomplish the task without relying on any external retriever.

ToolGen represents a new paradigm for tool interaction, fusing retrieval and generation into a unified model. This innovation lays the foundation for a new generation of AI agents that can adapt to tools across a wide range of domains. In addition, ToolGen creates new opportunities for combining advanced techniques such as chain-of-thought reasoning and reinforcement learning with a unified generative approach to tool use, extending the capabilities of large language models in real-world applications.

To summarize, our contributions include:

- We propose ToolGen, a novel framework that integrates tool retrieval and execution into the LLM's generation process through virtual tokens.

- We design a three-phase training process that makes ToolGen efficient and scalable for tool retrieval and API calls.

- Experimental validation shows that ToolGen performs comparably to the best available tool retrieval methods on large-scale tool repositories, but at lower cost and with greater efficiency, and that it goes beyond the traditional tool learning paradigm.

2 Related work

2.1 Tool retrieval

Tool retrieval is crucial in the actual task execution of large language model agents, where tools are usually described by their documentation. Traditional approaches such as sparse retrieval (e.g., BM25 (Robertson et al., 2009)) and dense retrieval (e.g., DPR (Karpukhin et al., 2020), ANCE (Xiong et al., 2021)) rely on large document indexes and external modules, leading to inefficiencies and making them difficult to optimize within an end-to-end agent framework. Several studies have explored alternative approaches. For example, Chen et al. (2024b) rewrite the query and extract its intent for an unsupervised retrieval setting, although the results fall short of supervised approaches. Xu et al. (2024) propose iteratively refining the query based on tool feedback, which improves retrieval accuracy but increases latency.

Recently, generative retrieval has emerged as a promising new paradigm in which models directly generate relevant document identifiers rather than relying on traditional retrieval mechanisms (Wang et al., 2022; Sun et al., 2023; Kishore et al., 2023; Mehta et al., 2023; Chen et al., 2023b). Inspired by this, ToolGen represents each tool as a unique token so that tool retrieval and invocation can be handled as generation tasks. Beyond simplifying retrieval, this design integrates smoothly with other capabilities of LLMs and LLM-based agents, such as chain-of-thought reasoning (Wei et al., 2023) and ReAct (Yao et al., 2023). By integrating retrieval and task execution into a single LLM agent, it reduces latency and computational overhead and improves the efficiency and effectiveness of task completion.

2.2 Large Language Model Agent with Tool Calls

Large language models show strong potential for mastering the tools required for various tasks. However, most existing research has focused on a limited set of actions (Chen et al., 2023a; Zeng et al., 2023; Yin et al., 2024; Wang et al., 2024). For example, Toolformer (Schick et al., 2023) fine-tunes GPT-J to handle only five tools (e.g., calculators). While this approach works well for narrow tasks, it struggles in realistic scenarios with large action spaces. ToolBench (Qin et al., 2023) extends the study by introducing over 16,000 tools, emphasizing the challenges of tool selection in complex environments.

To perform tool selection, current approaches typically employ a retrieval-generation pipeline, in which the large language model first retrieves the relevant tools and then uses them (Patil et al., 2023; Qin et al., 2023). However, the pipeline approach faces two main problems: errors propagate from the retrieval step, and the large language model has difficulty fully understanding and using the tools through simple prompts.

To alleviate these problems, researchers have attempted to represent actions as tokens and transform action prediction into a generation task. For example, RT2 (Brohan et al., 2023) generates tokens representing robot actions, and Self-RAG (Asai et al., 2023) uses special tokens to decide when to retrieve a document. ToolkenGPT (Hao et al., 2023) introduces tool-specific tokens to trigger tool use, a concept closest to our approach.

Our approach differs significantly from ToolkenGPT. First, we focus on real tools that require flexible parameters for complex tasks (e.g., YouTube channel search), whereas ToolkenGPT is limited to simpler tools with fewer inputs (e.g., math functions with two numbers). In addition, ToolkenGPT relies on few-shot prompting, whereas ToolGen integrates tool knowledge directly into the large language model through full-parameter fine-tuning, allowing it to retrieve tools and perform tasks autonomously. Finally, our experiments involve a much larger set of tools: 47,000, compared with 13 to 300 for ToolkenGPT.

Other studies such as ToolPlanner (Wu et al., 2024b) and AutoACT (Qiao et al., 2024) have used reinforcement learning or multi-agent systems to enhance tool learning or task completion (Qiao et al., 2024; Liu et al., 2023; Shen et al., 2024; Chen et al., 2024a). We do not compare these approaches to our model for two reasons: (1) Most of these efforts rely on feedback mechanisms such as Reflexion (Shinn et al., 2023) or reward models, similar to ToolBench's evaluation design, in which a large language model serves as the evaluator without access to ground-truth answers. This is not the focus of our study, and our end-to-end experiments do not rely on such feedback mechanisms. (2) Our approach does not conflict with these methods and can instead be integrated with them. The exploration of such integration is left for future research.

3 ToolGen

In this section, we first introduce the notation used in the paper. Then, we describe the ToolGen approach in detail, including tool virtualization, tool memorization, retrieval training, and end-to-end agent tuning, as shown in Figure 2. Finally, we present our inference method.

Figure 2: Schematic diagram of the ToolGen framework. In the tool virtualization phase, tools are mapped to virtual tokens, and in the next three training phases, ToolGen first memorizes tools by predicting tool tokens from tool documents. Next, ToolGen learns to retrieve tools by predicting tool tokens from queries. Finally, pipeline data (i.e., trajectories) are used to fine-tune the retrieval model in the last stage, generating the ToolGen Agent model.

3.1 Preliminaries

For a given user query $q$, the goal of tool learning is to solve $q$ using tools from a large tool set $D = \{d_1, d_2, \ldots, d_N\}$, where $|D| = N$ is large enough that including every tool in $D$ in the LLM's context is impractical. Current research therefore typically uses a retriever $R$ to retrieve $k$ relevant tools from $D$, denoted $D_{k,R} = \{d_{r_1}, d_{r_2}, \ldots, d_{r_k}\} = R(q, k, D)$, where $|D_{k,R}| \ll N$. The final prompt is the concatenation of $q$ and $D_{k,R}$, denoted $\mathrm{Prompt} = [q, D_{k,R}]$. To accomplish a task (query), LLM-based agents typically employ a four-stage iteration (Qu et al., 2024): generating a plan $p_i$, selecting a tool $d_{s_i}$, determining the tool parameters $c_i$, and collecting information from the tool feedback $f_i$. We denote these steps as $p_i, d_{s_i}, c_i, f_i$ for the $i$-th iteration. The model iterates through these steps until the task is completed, at which point it generates the final answer $a$. The entire trajectory can be represented as $\mathrm{Traj} = [\mathrm{Prompt}, (p_1, d_{s_1}, c_1, f_1), \ldots, (p_t, d_{s_t}, c_t, f_t), a] = [q, R(q, D), (p_1, d_{s_1}, c_1, f_1), \ldots, (p_t, d_{s_t}, c_t, f_t), a]$. This iterative approach allows the model to adjust and refine its actions at each step based on the feedback received, improving its performance on complex tasks.

3.2 Tool virtualization

In ToolGen, we implement tool virtualization by mapping each tool to a unique new token through an approach called atomic indexing. In this approach, each tool is assigned a unique token by extending the vocabulary of the large language model. The embedding of each tool token is initialized to the average embedding of its corresponding tool name, providing a semantically meaningful starting point for each tool.

Formally, the token set is defined as $T = \{\mathrm{Index}(d) \mid \forall d \in D\}$, where $\mathrm{Index}$ is a function that maps tools to tokens. We show that atomic indexing is more efficient and reduces hallucination compared with other indexing methods (e.g., semantic and numeric mappings; see Sections 4.3 and 5.4 for discussion).
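The following is a minimal sketch of atomic indexing under simplifying assumptions: the vocabulary is a plain dictionary, embeddings are short Python lists, and tool names are split on underscores in place of a real tokenizer. The `<<name>>` token format and the function names are hypothetical and are not taken from the ToolGen implementation.

```python
from typing import Dict, List


def mean_vector(vectors: List[List[float]]) -> List[float]:
    """Element-wise average of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def add_tool_tokens(
    vocab: Dict[str, int],
    embeddings: List[List[float]],
    tool_names: List[str],
) -> Dict[str, str]:
    """Add one new token per tool and return the tool -> token mapping (Index)."""
    dim = len(embeddings[0])
    index: Dict[str, str] = {}
    for name in tool_names:
        # Toy tokenizer (assumption): split the tool name on underscores.
        pieces = [p for p in name.lower().split("_") if p in vocab]
        piece_vectors = [embeddings[vocab[p]] for p in pieces]
        # Initialize the new embedding to the mean of the name's sub-token embeddings.
        init = mean_vector(piece_vectors) if piece_vectors else [0.0] * dim
        token = f"<<{name}>>"  # hypothetical token format
        vocab[token] = len(embeddings)
        embeddings.append(init)
        index[name] = token
    return index


vocab = {"search": 0, "youtube": 1, "channel": 2}
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
index = add_tool_tokens(vocab, embeddings, ["youtube_search"])
new_vector = embeddings[vocab[index["youtube_search"]]]  # mean of "youtube" and "search"
```

Because every tool receives exactly one new vocabulary entry, selecting a tool costs a single decoding step regardless of how long the tool name is.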

3.3 Tool Memorization

After tokens are assigned to tools, the large language model still lacks any knowledge about those tools. To address this, we fine-tune the model with tool descriptions as input and the corresponding tokens as output, a process we refer to as tool memorization. We use the following loss function:

$$\mathcal{L}_{\mathrm{tool}} = \sum_{d \in D} -\log p_{\theta}\left(\mathrm{Index}(d) \mid d_{\mathrm{doc}}\right)$$

where $\theta$ denotes the large language model parameters and $d_{\mathrm{doc}}$ denotes the tool description. This step gives the large language model basic knowledge of the tools and their associated actions.

3.4 Retrieval Training

We then train the large language model to associate the hidden space of the virtual tool tokens (and their documentation) with the space of user queries, so that the model can generate the correct tool from a user's query. To this end, we fine-tune the large language model with user queries as input and the corresponding tool tokens as output:

$$\mathcal{L}_{\mathrm{retrieval}} = \sum_{q \in Q} \sum_{d \in D_q} -\log p_{\theta'}\left(\mathrm{Index}(d) \mid q\right)$$

where $\theta'$ denotes the large language model parameters after tool memorization, $Q$ is the set of user queries, and $D_q$ is the set of tools associated with each query. This process yields the ToolGen retriever, which generates the appropriate tool token for a given user query.
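Both training stages share the same shape: an input text paired with a target tool token, scored by negative log-likelihood. The sketch below illustrates this with toy data. The `prob` callable stands in for the model's probability of the target token given the input and is an assumption, as are the example tool tokens and queries.

```python
import math
from typing import Callable, Dict, List, Tuple

Pair = Tuple[str, str]  # (input text, target tool token)


def memorization_pairs(tool_docs: Dict[str, str]) -> List[Pair]:
    """Tool memorization: tool document -> tool token."""
    return [(doc, token) for token, doc in tool_docs.items()]


def retrieval_pairs(query_tools: Dict[str, List[str]]) -> List[Pair]:
    """Retrieval training: user query -> each tool token relevant to that query."""
    return [(query, token) for query, tokens in query_tools.items() for token in tokens]


def nll(pairs: List[Pair], prob: Callable[[str, str], float]) -> float:
    """Sum of -log p(target | input) over all pairs."""
    return sum(-math.log(prob(text, token)) for text, token in pairs)


# Hypothetical toy data.
tool_docs = {"<<weather>>": "Returns the forecast for a city."}
query_tools = {
    "Will it rain in Paris?": ["<<weather>>"],
    "Plan a weekend trip": ["<<weather>>", "<<flights>>"],
}

mem = memorization_pairs(tool_docs)
ret = retrieval_pairs(query_tools)
# A stand-in model that assigns probability 0.5 to every target token.
loss = nll(ret, lambda text, token: 0.5)
```

A query associated with several tools contributes one term per tool, matching the inner sum over the tools relevant to each query.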

3.5 End-to-End Agent Tuning

After retrieval training, the large language model is able to generate tool tokens from queries. In the final stage, we fine-tune the model on agent task-completion trajectories. We adopt an inference strategy similar to Agent-Flan (Chen et al., 2024c): our pipeline uses an iterative process in which the model first generates a Thought and then generates the corresponding Action token. This token is used to fetch the tool documentation, which the large language model then uses to generate the necessary parameters. The process iterates until the model generates a "done" token or reaches the maximum number of rounds. The generated trajectory is denoted as $\mathrm{Traj} = [q, (p_1, \mathrm{Index}(d_{s_1}), c_1, f_1), \ldots, (p_t, \mathrm{Index}(d_{s_t}), c_t, f_t), a]$. In this structure, the list of relevant tools is no longer needed.

3.6 Inference

During inference, the large language model may generate action tokens that fall outside the predefined set of tool tokens. To prevent this, we devise a constrained beam search generation method that restricts the output tokens to the set of tool tokens. We apply this constrained beam search both to tool retrieval (where the model selects tools based on a query) and to the end-to-end agent system, effectively reducing hallucination in the action generation step. See Section 5.4 for a detailed analysis and Appendix C for implementation details.
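The iteration described above can be sketched as a small loop. This is an illustration under stated assumptions, not the authors' pipeline: `generate` is a hypothetical callable standing in for the model, `call_tool` stands in for the external API, and the stop condition is simplified to an exact match on the "done" token.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str, str]  # (thought, tool token, parameters, feedback)


def run_agent(
    query: str,
    generate: Callable[[str, List[str]], str],
    tool_docs: Dict[str, str],
    call_tool: Callable[[str, str], str],
    max_rounds: int = 5,
) -> Tuple[List[Step], str]:
    """Iterate thought -> action token -> parameters -> feedback until done."""
    history: List[str] = [query]
    steps: List[Step] = []
    for _ in range(max_rounds):
        thought = generate("thought", history)
        history.append(thought)
        action = generate("action", history)
        if action == "done":  # simplified stop condition (assumption)
            break
        # The action token is used to look up the tool document.
        history.append(tool_docs[action])
        params = generate("parameters", history)
        feedback = call_tool(action, params)
        history.append(feedback)
        steps.append((thought, action, params, feedback))
    answer = generate("answer", history)
    return steps, answer


# Scripted stand-in for the model, for illustration only.
script = iter([
    "I need the weather.", "<<weather>>", '{"city": "Paris"}',
    "I have what I need.", "done", "It is sunny in Paris.",
])
steps, answer = run_agent(
    "What is the weather in Paris?",
    generate=lambda kind, history: next(script),
    tool_docs={"<<weather>>": "weather(city): returns the forecast"},
    call_tool=lambda token, params: "sunny",
)
```

Note that the prompt starts from the query alone. The tool document enters the context only after the model has generated the corresponding tool token.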
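With atomic indexing each tool is a single token, so one step of constrained decoding can be illustrated by masking the logits to the tool-token ids and keeping the top candidates. The sketch below shows only that single step with made-up logits. It is a simplification for illustration and not the implementation described in Appendix C.

```python
import math
from typing import List, Set, Tuple


def constrained_top_k(
    logits: List[float], allowed_ids: Set[int], k: int
) -> List[Tuple[int, float]]:
    """Return the k best (token id, log-probability) pairs among allowed ids only."""
    allowed = [(i, logit) for i, logit in enumerate(logits) if i in allowed_ids]
    # Log-softmax renormalized over the allowed tokens (numerically stable form).
    peak = max(logit for _, logit in allowed)
    log_z = peak + math.log(sum(math.exp(logit - peak) for _, logit in allowed))
    scored = [(i, logit - log_z) for i, logit in allowed]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Token 0 is an ordinary word with the highest logit; tokens 1 to 3 are tool tokens.
logits = [5.0, 1.0, 2.0, 0.5]
tool_token_ids = {1, 2, 3}
beams = constrained_top_k(logits, tool_token_ids, k=2)
beam_ids = [token_id for token_id, _ in beams]
```

Even though the unconstrained argmax would be token 0, the constrained step can only return tool tokens, which is what rules out nonexistent tools at the action step.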

4 Tool Retrieval Evaluation

4.1 Experimental setup

We use the pre-trained Llama-3-8B model (Dubey et al., 2024) as the base model, with a vocabulary size of 128,256. Atomic indexing adds 46,985 tokens during tool virtualization, resulting in a final vocabulary of 175,241. We fine-tune the model using the Llama-3 chat template with a cosine learning rate scheduler and 3% warm-up steps. The maximum learning rate is $4 \times 10^{-5}$. All models are trained with DeepSpeed ZeRO 3 (Rajbhandari et al., 2020) on 4 × A100 GPUs. We train for eight epochs of tool memorization and one epoch of retrieval training.
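As a quick check of the figures above, the sketch below verifies the vocabulary arithmetic and shows one common form of a cosine schedule with warm-up. The linear warm-up shape and the decay to zero are assumptions, since the text only states the scheduler type, the 3% warm-up, and the peak learning rate.

```python
import math

BASE_VOCAB = 128_256
TOOL_TOKENS = 46_985
FINAL_VOCAB = BASE_VOCAB + TOOL_TOKENS  # 175,241


def lr_at(step: int, total_steps: int, max_lr: float = 4e-5,
          warmup_ratio: float = 0.03) -> float:
    """Learning rate at a given step: linear warm-up, then cosine decay to zero."""
    warmup_steps = max(1, round(total_steps * warmup_ratio))
    if step < warmup_steps:
        # Linear warm-up (assumption): reach max_lr at the last warm-up step.
        return max_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * max_lr * (1.0 + math.cos(math.pi * progress))


peak = lr_at(30, total_steps=1000)    # first step after warm-up
final = lr_at(1000, total_steps=1000)  # end of training
```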

Dataset

Our experiments are based on ToolBench, a real-world tool benchmark containing more than 16,000 tool collections, each containing multiple APIs, for a total of about 47,000 unique APIs. Each API is documented using a dictionary containing the API name, description, and invocation parameters. See Appendix A for a real-world example. We treat each API as an action and map it to a token. Our retrieval and end-to-end agent tuning data is converted from ToolBench's raw data; see Appendix G for more information. Although each tool may contain multiple APIs, for simplicity this document refers to each API as a tool.

We follow the data partitioning approach of Qin et al. (2023) and categorize the 200,000 (query, relevant API) pairs into three classes: I1 (single-tool instructions), I2 (intra-category multi-tool instructions), and I3 (intra-collection multi-tool instructions), containing 87,413, 84,815, and 25,251 instances, respectively.

Baselines

We compare ToolGen to the following baselines:

- BM25: A classical TF-IDF-based unsupervised retrieval method based on the word similarity between a query and a document.

- Embedding similarity (EmbSim): sentence embeddings generated using OpenAI's sentence embedding model; specifically text-embedding-3-large used in our experiments.

- Re-Invoke (Chen et al., 2024b): an unsupervised retrieval method incorporating query rewriting and document expansion.

- IterFeedback (Xu et al., 2024): a BERT-based retriever that uses gpt-3.5-turbo-0125 as a feedback model for up to 10 rounds of iterative feedback.

- ToolRetriever (Qin et al., 2023): a BERT-based retriever trained with contrastive learning.

Settings

We conduct experiments in two settings. In the first, In-Domain Retrieval, the search space is restricted to tools within the same domain as the query. For example, when evaluating queries in the I1 domain, retrieval is limited to tools in I1. This setting is consistent with the ToolBench setting. The second setting, Multi-Domain Retrieval, is more complex: the search space is extended to tools in all three domains. In this case, the model is trained on the merged data, which increases the search space and task complexity. Unlike ToolBench, the multi-domain setting reflects realistic scenarios where retrieval tasks may involve overlapping or mixed domains. This setting assesses the model's ability to generalize across domains and handle more complex and diverse retrieval tasks.

Metrics

We evaluate retrieval performance using Normalized Discounted Cumulative Gain (NDCG) (Järvelin & Kekäläinen, 2002), a widely used metric in ranking tasks, including tool retrieval. NDCG takes into account both the relevance of the retrieved tools and their ranking positions.
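For reference, NDCG@k can be computed as in the sketch below. It assumes binary relevance (a retrieved tool is either relevant to the query or not) and the standard log2 position discount; the tool names are hypothetical.

```python
import math
from typing import List, Set


def dcg(gains: List[float], k: int) -> float:
    """Discounted cumulative gain over the top-k positions."""
    return sum(gain / math.log2(rank + 2) for rank, gain in enumerate(gains[:k]))


def ndcg_at_k(ranked_tools: List[str], relevant: Set[str], k: int) -> float:
    """NDCG@k with binary relevance: DCG of the ranking divided by the ideal DCG."""
    gains = [1.0 if tool in relevant else 0.0 for tool in ranked_tools]
    ideal_gains = [1.0] * min(len(relevant), k)
    ideal = dcg(ideal_gains, k)
    return dcg(gains, k) / ideal if ideal > 0 else 0.0


perfect = ndcg_at_k(["weather", "flights", "maps"], {"weather"}, k=1)
missed_at_1 = ndcg_at_k(["flights", "weather"], {"weather"}, k=1)
found_at_2 = ndcg_at_k(["flights", "weather"], {"weather"}, k=3)
```

The third case shows the position discount: the relevant tool is retrieved, but at rank 2, so the score is 1 / log2(3) rather than 1.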

Table 1: Tool retrieval evaluation in two settings: (1) in-domain retrieval, where models are trained and evaluated on the same domain, and (2) multi-domain retrieval, where models are trained on all domains and evaluated on the complete set of tools from all domains. BM25, EmbSim, and Re-Invoke are untrained, unsupervised baselines. IterFeedback is a retrieval system with multiple models and feedback mechanisms. ToolRetriever is trained with contrastive learning, while ToolGen is trained with next-token prediction. Items marked with * were not implemented by us; their results are taken from the original papers and are therefore listed only under the in-domain setting. For ToolGen in the in-domain setting, we allow the generation space to include all tokens, which is a more challenging scenario than for the other models. The best results in each category are marked in bold.

| Model | I1 NDCG@1 | I1 NDCG@3 | I1 NDCG@5 | I2 NDCG@1 | I2 NDCG@3 | I2 NDCG@5 | I3 NDCG@1 | I3 NDCG@3 | I3 NDCG@5 |
|---|---|---|---|---|---|---|---|---|---|
| In-Domain | | | | | | | | | |
| BM25 | 29.46 | 31.12 | 33.27 | 24.13 | 25.29 | 27.65 | 32.00 | 25.88 | 29.78 |
| EmbSim | 63.67 | 61.03 | 65.37 | 49.11 | 42.27 | 46.56 | 53.00 | 46.40 | 52.73 |
| Re-Invoke* | 69.47 | - | 61.10 | 54.56 | - | 53.79 | 59.65 | - | 59.55 |
| IterFeedback* | 90.70 | 90.95 | 92.47 | 89.01 | 85.46 | 87.10 | 91.74 | 87.94 | 90.20 |
| ToolRetriever | 80.50 | 79.55 | 84.39 | 71.18 | 64.81 | 70.35 | 70.00 | 60.44 | 64.70 |
| ToolGen | 89.17 | 90.85 | 92.67 | 91.45 | 88.79 | 91.13 | 87.00 | 85.59 | 90.16 |
| Multi-Domain | | | | | | | | | |
| BM25 | 22.77 | 22.64 | 25.61 | 18.29 | 20.74 | 22.18 | 10.00 | 10.08 | 12.33 |
| EmbSim | 54.00 | 50.82 | 55.86 | 40.84 | 36.67 | 39.55 | 18.00 | 17.77 | 20.70 |
| ToolRetriever | 72.31 | 70.30 | 74.99 | 64.54 | 57.91 | 63.61 | 52.00 | 39.89 | 42.92 |
| ToolGen | 87.67 | 88.84 | 91.54 | 83.46 | 86.24 | 88.84 | 79.00 | 79.80 | 84.79 |

4.2 Results

Table 1 shows the results of the tool retrieval. As expected, all trained models significantly outperform the untrained baseline (BM25, EmbSim, and Re-Invoke) on all metrics, showing the advantages of training on tool retrieval data.

Our proposed ToolGen model consistently performs best in both settings. In the in-domain setting, ToolGen provides highly competitive results, with performance comparable to that of the IterFeedback system using multiple models and feedback mechanisms. As a single model, ToolGen significantly outperforms ToolRetriever on all metrics and even outperforms IterFeedback in several scenarios (e.g., NDCG@5 for domain I1 and NDCG@1, @3, @5 for I2).

In the multidomain setting, ToolGen remains robust, outperforming ToolRetriever and maintaining the lead among the baseline models, despite the larger search space and the usual drop in overall performance. This shows that ToolGen, despite being a single model, can still compete with complex retrieval systems like IterFeedback, demonstrating its ability to handle complex real-world retrieval tasks with unclear domain boundaries.

4.3 Comparison of Indexing Methods

While ToolGen uses atomic indexing for tool virtualization, we also explore several alternative generative retrieval methods. In this section, we compare it to the following three approaches:

- Numeric: maps each tool to a unique number. The generated tokens are purely numeric, providing no intrinsic semantic information but a unique identifier for each tool.

- Hierarchical: clusters tools into non-overlapping groups and recursively divides these clusters to form a hierarchical structure. Each tool is represented by the index path from the root node to its leaf node in this structure, similar to the Brown clustering technique.

- Semantic: maps each tool to its name, so that the Large Language Model (LLM) is guided by the semantic content of the tool name. The tool name directly provides a meaningful representation related to its function.

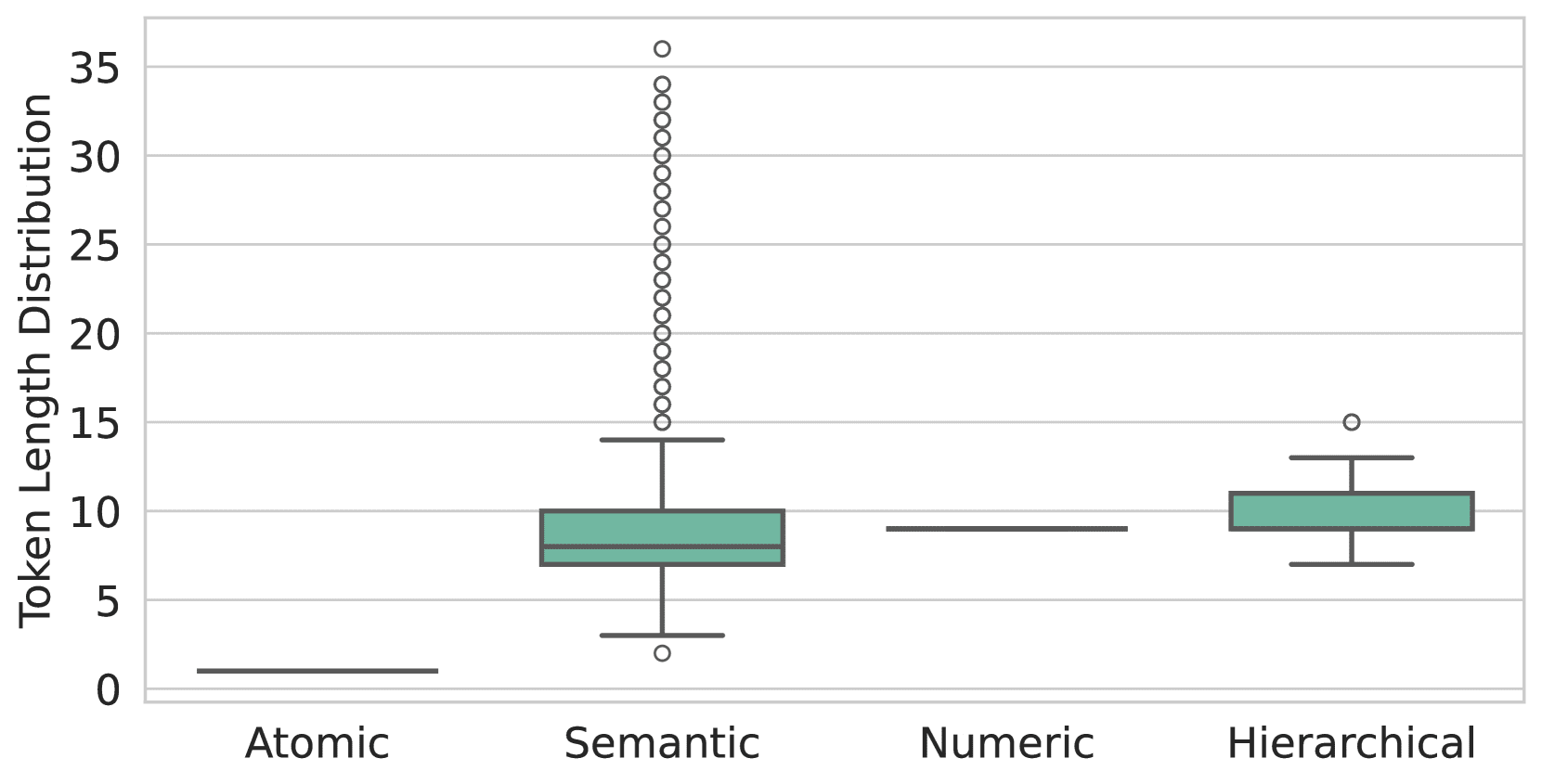

Figure 3: Distribution of the number of sub-tokens per tool under each indexing method. Atomic indexing ensures that each tool is a single token, while numeric indexing encodes each tool into $N$ tokens, where the tool number lies in the range $(10^{N-1}, 10^{N}]$. In contrast, semantic indexing and hierarchical indexing produce variable numbers of sub-tokens, with semantic indexing having longer sub-token sequences and more outliers.

Specific implementation details are described in Appendix B.

First, we analyzed the number of sub-tokens each method requires to represent a tool, as shown in Figure 3. The figure highlights the advantage of atomic indexing, where each tool is represented by a single token, whereas the other methods require multiple tokens. This efficiency allows ToolGen to generate fewer tokens and shorten inference time in both retrieval and agent scenarios.

Next, we examined the effectiveness of the different indexing methods. As shown in Table 2, semantic indexing exhibits the best retrieval performance across a number of metrics and scenarios, while atomic indexing comes a close second in many cases. We attribute this to the fact that semantic indexing better matches the pre-training data of the large language model. However, this advantage diminishes as the amount and variety of training data increase. For example, in Section 5.3, we show that atomic indexing performs better on end-to-end results. Taking these factors into account, we choose atomic indexing for ToolGen tool virtualization.

Table 2: Retrieval evaluations of different indexing methods in the multidomain setting. The best results are bolded and the second best results are underlined.

| Model | I1 NDCG@1 | I1 NDCG@3 | I1 NDCG@5 | I2 NDCG@1 | I2 NDCG@3 | I2 NDCG@5 | I3 NDCG@1 | I3 NDCG@3 | I3 NDCG@5 |
|---|---|---|---|---|---|---|---|---|---|
| Numeric | 83.17 | 84.99 | 88.73 | 79.20 | 79.23 | 83.88 | 71.00 | 74.81 | 82.95 |
| Hierarchical | 85.67 | 87.38 | 90.26 | 82.22 | 82.70 | 86.63 | 78.50 | 79.47 | 84.15 |
| Semantic | 89.17 | 91.29 | 93.29 | 83.71 | 84.51 | 88.22 | 82.00 | 78.86 | 85.43 |
| Atomic | 87.67 | 88.84 | 91.54 | 83.46 | 86.24 | 88.84 | 79.00 | 79.80 | 84.79 |

Table 3: Ablation studies for tool retrieval. The effects of removing retrieval training, tool memorization, and constrained beam search on ToolGen performance are evaluated separately.

| Model | I1 NDCG@1 | I1 NDCG@3 | I1 NDCG@5 | I2 NDCG@1 | I2 NDCG@3 | I2 NDCG@5 | I3 NDCG@1 | I3 NDCG@3 | I3 NDCG@5 |
|---|---|---|---|---|---|---|---|---|---|
| ToolGen | 87.67 | 88.84 | 91.54 | 83.46 | 86.24 | 88.84 | 79.00 | 79.80 | 84.79 |
| - Tool memorization | 84.00 | 86.77 | 89.35 | 82.21 | 83.20 | 86.78 | 77.00 | 77.71 | 84.37 |
| - Retrieval training | 10.17 | 12.31 | 13.89 | 5.52 | 7.01 | 7.81 | 3.00 | 4.00 | 4.43 |
| - Constrained beam search | 87.67 | 88.79 | 91.45 | 83.46 | 86.24 | 88.83 | 79.00 | 79.93 | 84.92 |

4.4 Ablation experiments

We conducted ablation experiments to evaluate the effects of the different training phases of ToolGen, as shown in Table 3. The results show that retrieval training is the key factor for tool retrieval performance, as it directly aligns with the retrieval task, where the input is a query and the output is a tool token. Removing tool memorization results in a slight decrease in performance, although memorization is helpful for improving generalization, as discussed further in Appendix F. Similarly, constrained beam search contributes little to the retrieval task but helps prevent hallucination, and therefore has value in end-to-end agent tasks; see Section 5.4.

5 End-to-End Evaluation

5.1 Experimental setup

We made several changes to the trajectory data from ToolBench to adapt it to the ToolGen framework. For example, since ToolGen does not require an explicit selection of relevant tools as input, we removed this information from the system prompts. For more details, see Appendix G. We then fine-tuned the retrieval model on the reformatted data to produce an end-to-end ToolGen agent.

Baselines

- GPT-3.5: We use gpt-3.5-turbo-0613 as one of the baseline models. The implementation is the same as StableToolBench (Guo et al., 2024), where the tool-calling functionality of GPT-3.5 is used to form tool agents.

- ToolLlama-2: Qin et al. (2023) introduced ToolLlama-2 by fine-tuning the Llama-2 (Touvron et al., 2023) model on ToolBench data.

- ToolLlama-3: To ensure a fair comparison, we created the ToolLlama-3 baseline model by fine-tuning the same base model Llama-3 as ToolGen on the ToolBench dataset. For the remainder of this paper, ToolLlama-3 is referred to as ToolLlama to distinguish it from ToolLlama-2.

Settings

- Using ground-truth tools (G.T.): following Qin et al. (2023), we define the ground-truth tools for a query as the tools selected by ChatGPT. For ToolLlama, we place the ground-truth tools directly in the prompt, in the same format as its training data. For ToolGen, since it was not trained on data with pre-selected tools, we add the prefix "I am using the following tool in the planning phase: [tool tokens]", where [tool tokens] are the virtual tokens corresponding to the ground-truth tools.

- Use of Retriever: In the end-to-end experiments, we use a retrieval-based setup. For the baseline model, we use the tools retrieved by ToolRetriever as the associated tools. ToolGen, on the other hand, generates tool tokens directly and therefore does not use a retriever.

All models are fine-tuned using a cosine scheduler with the maximum learning rate set to $4 \times 10^{-5}$. The context length is truncated to 6,144 and the total batch size is 512. We further use Flash-Attention (Dao et al., 2022; Dao, 2024) and DeepSpeed ZeRO 3 (Rajbhandari et al., 2020) to save memory.

ToolGen and ToolLlama follow different paradigms to accomplish a task: ToolLlama generates the thought, action, and parameters in a single round, while ToolGen separates these steps. For ToolGen, we set a cap of 16 rounds, allowing for 5 rounds of actions and 1 round for the final answer (with thought, action, and parameters as separate rounds, 5 × 3 + 1 = 16). We compare this to ToolLlama's limit of 6 rounds.

In addition, we introduced a retry mechanism for all models to prevent premature termination, the details of which are described in Appendix D. Specifically, if a model generates a response that contains "give up" or "I'm sorry", we prompt the model to regenerate the response at a higher temperature.
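The retry rule can be sketched as follows. The temperature values, the function name, and the `generate` callable are hypothetical; only the trigger phrases "give up" and "I'm sorry" come from the text, and the actual procedure is the one described in Appendix D.

```python
from typing import Callable, List, Sequence


def generate_with_retry(
    generate: Callable[[str, float], str],
    prompt: str,
    temperatures: Sequence[float] = (0.0, 0.5, 1.0),  # hypothetical values
    give_up_markers: Sequence[str] = ("give up", "I'm sorry"),
) -> str:
    """Regenerate at increasing temperature while the response looks like a refusal."""
    response = ""
    for temperature in temperatures:
        response = generate(prompt, temperature)
        lowered = response.lower()
        if not any(marker.lower() in lowered for marker in give_up_markers):
            return response
    # Every attempt gave up; return the last response unchanged.
    return response


calls: List[float] = []


def fake_generate(prompt: str, temperature: float) -> str:
    """Stand-in model: refuses at low temperature, answers otherwise."""
    calls.append(temperature)
    return "I'm sorry, I give up." if temperature < 0.5 else "The answer is 42."


result = generate_with_retry(fake_generate, "What is 6 x 7?")
```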

Evaluation metrics

For end-to-end evaluation, we use StableToolBench (Guo et al., 2024), a stable tool-learning benchmark that selects solvable queries from ToolBench and uses GPT-4 (OpenAI, 2024) to simulate the outputs of failed tools. We evaluate performance using two metrics: solvable pass rate (SoPR), the percentage of queries successfully solved, and solvable win rate (SoWR), the percentage of answers that outperform those of the reference model (GPT-3.5 in this study). In addition, we report micro-averaged scores over the categories.
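The sketch below shows how a pass rate and a micro-average can be computed. It is a simplification: the benchmark grades answers with an LLM evaluator, whereas this example takes solved/unsolved flags as given. The subset sizes (163, 106, and 61 queries for I1, I2, and I3) are an assumption not stated in the text; with them, the per-subset SoPR values reported for ToolGen with ground-truth tools in Table 4 (61.35, 49.53, 43.17) reproduce the reported average of 54.19.

```python
from typing import Sequence


def pass_rate(solved: Sequence[bool]) -> float:
    """Percentage of queries marked as solved."""
    return 100.0 * sum(solved) / len(solved)


def micro_average(scores: Sequence[float], sizes: Sequence[int]) -> float:
    """Average of per-subset scores weighted by the number of queries in each."""
    total = sum(sizes)
    return sum(score * size for score, size in zip(scores, sizes)) / total


# Assumed subset sizes (not given in the text).
ASSUMED_SIZES = [163, 106, 61]
toolgen_gt_sopr = micro_average([61.35, 49.53, 43.17], ASSUMED_SIZES)
```

A macro-average (the plain mean of the three subset scores) would give a different number, which is why the weighting matters when subsets differ in size.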

Table 4: End-to-end evaluation performance on unseen instructions under two settings. In the R. setting, GPT-3.5 and ToolLlama use ToolRetriever, while ToolGen does not use an external retriever. All SoPR and SoWR results are evaluated three times and reported as the mean.

| Model | SoPR I1 | SoPR I2 | SoPR I3 | SoPR Avg. | SoWR I1 | SoWR I2 | SoWR I3 | SoWR Avg. |
|---|---|---|---|---|---|---|---|---|
| Using ground-truth tools (G.T.) | | | | | | | | |
| GPT-3.5 | 56.60 | 47.80 | 54.64 | 50.91 | - | - | - | - |
| ToolLlama-2 | 53.37 | 41.98 | 46.45 | 48.43 | 47.27 | 59.43 | 27.87 | 47.58 |
| ToolLlama | 55.93 | 48.27 | 52.19 | 52.78 | 50.31 | 53.77 | 31.15 | 47.88 |
| ToolGen | 61.35 | 49.53 | 43.17 | 54.19 | 51.53 | 57.55 | 31.15 | 49.70 |
| Using the retriever (R.) | | | | | | | | |
| GPT-3.5 | 51.43 | 41.19 | 34.43 | 45.00 | 53.37 | 53.77 | 37.70 | 50.60 |
| ToolLlama-2 | 56.13 | 49.21 | 34.70 | 49.95 | 50.92 | 53.77 | 21.31 | 46.36 |
| ToolLlama | 54.60 | 49.96 | 51.37 | 51.55 | 49.08 | 61.32 | 31.15 | 49.70 |
| ToolGen | 56.13 | 52.20 | 47.54 | 53.28 | 50.92 | 62.26 | 34.42 | 51.51 |

5.2 Results

Table 4 shows the end-to-end evaluation performance of each model in two settings: using ground-truth tools (G.T.) and using a retriever (R.). In the G.T. setting, ToolGen achieves an average SoPR of 54.19, outperforming GPT-3.5 and ToolLlama, and the highest average SoWR of 49.70. ToolGen maintains its lead in the retriever setting, with an average SoPR of 53.28 and an average SoWR of 51.51. ToolLlama remains competitive and outperforms ToolGen on some individual subsets (for example, I3 SoPR in both settings). Ablation studies for end-to-end ToolGen are presented in Appendix G.

Table 5: End-to-end evaluation of different indexing methods.

| Indexing method | SoPR I1 | SoPR I2 | SoPR I3 | SoPR Avg. | SoWR I1 | SoWR I2 | SoWR I3 | SoWR Avg. |
|---|---|---|---|---|---|---|---|---|
| Numeric | 34.76 | 29.87 | 46.99 | 35.45 | 25.77 | 33.02 | 29.51 | 28.79 |
| Hierarchical | 50.20 | 45.60 | 32.79 | 45.50 | 38.04 | 43.40 | 29.51 | 38.18 |
| Semantic | 58.79 | 45.28 | 44.81 | 51.87 | 49.69 | 57.55 | 26.23 | 47.88 |
| Atomic | 58.08 | 56.13 | 44.81 | 55.00 | 47.85 | 57.55 | 29.51 | 47.58 |

5.3 Comparison of Indexing Methods

Similar to the comparison of indexing methods for the retrieval task (Section 4.3), Table 5 compares the indexing methods on the end-to-end agent task. In this setup, constrained decoding is removed, allowing the agent to freely generate the Thought, Action, and Parameters. Atomic indexing performs best among the four methods: it achieves the highest average SoPR (55.00), while its average SoWR is on par with semantic indexing (47.58 vs. 47.88). We attribute this to the higher hallucination rates of the other methods, as discussed in Section 5.4.

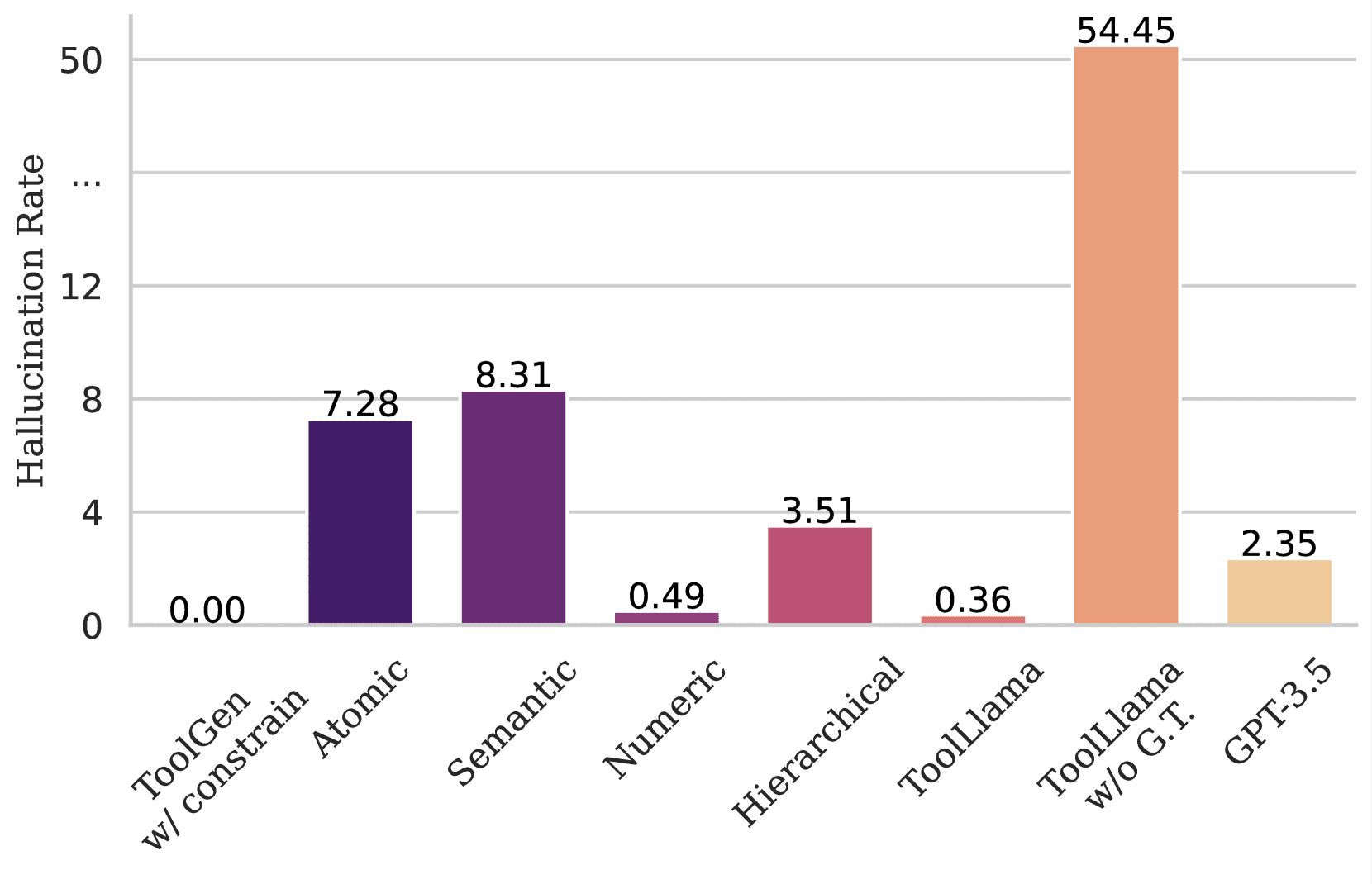

Figure 4: Hallucination rates of different models when generating nonexistent tools. ToolGen does not generate nonexistent tools when constrained decoding is used. Without this constraint, however, ToolGen generates 7% non-tool tokens in the Action generation stage when using atomic indexing, and the hallucination rate is higher with semantic indexing. For ToolLlama and GPT-3.5, hallucination occurs even when five ground-truth tools are provided in the prompt. If no tools are specified in the prompt, ToolLlama generates nonexistent tool names more than 50% of the time.

5.4 Hallucinations

We evaluate model hallucination in tool generation in an end-to-end agent scenario. To do so, we input queries in the same format that each model was trained on. Specifically, for ToolGen, we input the query directly and prompt the model to generate its response following the ToolGen agent paradigm (i.e., Thought, Tool, and Parameters in order). We test Action decoding without the beam search constraints described in Section 3.6. For ToolLlama and GPT-3.5, we input the query together with 5 ground-truth tools. In all settings, we report the proportion of generated tools that do not exist in the dataset, out of all tool generation actions. Figure 4 illustrates the hallucination rates of the different models. As the figure shows, ToolLlama and GPT-3.5 may still generate nonexistent tool names even though only five ground-truth tools are provided. In contrast, ToolGen completely avoids hallucination through its constrained decoding design.
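The reported quantity, the share of generated tools that are absent from the dataset, can be computed as in the sketch below. The function name and the example tool identifiers are hypothetical.

```python
from typing import Iterable, Sequence


def hallucination_rate(generated_tools: Sequence[str],
                       known_tools: Iterable[str]) -> float:
    """Percentage of generated tool names that do not exist in the tool set."""
    known = set(known_tools)
    if not generated_tools:
        return 0.0
    missing = sum(1 for tool in generated_tools if tool not in known)
    return 100.0 * missing / len(generated_tools)


# Hypothetical example: one of four generated actions names an unknown tool.
known = {"<<A&&x>>", "<<B&&y>>", "<<Finish>>"}
generated = ["<<A&&x>>", "<<B&&y>>", "<<C&&z>>", "<<Finish>>"]
rate = hallucination_rate(generated, known)
```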

6 Conclusion

In this paper, we introduce ToolGen, a large language model (LLM) framework that unifies tool retrieval and execution by embedding tool-specific virtual tokens into the model's vocabulary, thereby transforming tool interaction into a generation task. Through a three-stage training process, ToolGen enables LLMs to efficiently retrieve and execute tools in real-world scenarios. This unified approach sets a new benchmark for scalable and efficient AI agents capable of handling large tool libraries. Going forward, ToolGen opens the door to integrating advanced techniques such as chain-of-thought reasoning, reinforcement learning, and ReAct, further enhancing the autonomy and versatility of LLMs in real-world applications.

References

- Asai et al. (2023) Akari Asai, Zeqiu Wu, Yizhong Wang, Avirup Sil, and Hannaneh Hajishirzi. Self-rag: Learning to retrieve, generate, and critique through self-reflection, 2023. URL https://arxiv.org/abs/2310.11511.

- Brohan et al. (2023) Anthony Brohan, Noah Brown, Justice Carbajal, Yevgen Chebotar, Xi Chen, Krzysztof Choromanski, Tianli Ding, Danny Driess, Avinava Dubey, Chelsea Finn, Pete Florence, Chuyuan Fu, Montse Gonzalez Arenas, Keerthana Gopalakrishnan, Kehang Han, Karol Hausman, Alexander Herzog, Jasmine Hsu, Brian Ichter, Alex Irpan, Nikhil Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Isabel Leal, Lisa Lee, Tsang-Wei Edward Lee, Sergey Levine, Yao Lu, Henryk Michalewski, Igor Mordatch, Karl Pertsch, Kanishka Rao, Krista Reymann, Michael Ryoo, Grecia Salazar, Pannag Sanketi, Pierre Sermanet, Jaspiar Singh, Anikait Singh, Radu Soricut, Huong Tran, Vincent Vanhoucke, Quan Vuong, Ayzaan Wahid, Stefan Welker, Paul Wohlhart, Jialin Wu, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, Tianhe Yu, and Brianna Zitkovich. Rt-2: Vision-language-action models transfer web knowledge to robotic control, 2023. URL https://arxiv.org/abs/2307.15818.

- Brown et al. (2020) Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. Language models are few-shot learners. In Hugo Larochelle, Marc'Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin (eds.), Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, 2020. URL https://proceedings.neurips.cc/paper/2020/hash/1457c0d6bfcb4967418bfb8ac142f64a-Abstract.html.

- Chen et al. (2023a) Baian Chen, Chang Shu, Ehsan Shareghi, Nigel Collier, Karthik Narasimhan, and Shunyu Yao. Fireact: Toward language agent fine-tuning. arXiv preprint arXiv:2310.05915, 2023a.

- Chen et al. (2023b) Jiangui Chen, Ruqing Zhang, Jiafeng Guo, Maarten de Rijke, Wei Chen, Yixing Fan, and Xueqi Cheng. Continual Learning for Generative Retrieval over Dynamic Corpora. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, CIKM '23, pp. 306-315, New York, NY, USA, 2023b. Association for Computing Machinery. ISBN 9798400701245. doi: 10.1145/3583780.3614821. URL https://dl.acm.org/doi/10.1145/3583780.3614821.

- Chen et al. (2024a) Junzhi Chen, Juhao Liang, and Benyou Wang. Smurfs: Leveraging multiple proficiency agents with context-efficiency for tool planning, 2024a. URL https://arxiv.org/abs/2405.05955.

- Chen et al. (2024b) Yanfei Chen, Jinsung Yoon, Devendra Singh Sachan, Qingze Wang, Vincent Cohen-Addad, Mohammadhossein Bateni, Chen-Yu Lee, and Tomas Pfister. Re-invoke: Tool invocation rewriting for zero-shot tool retrieval. arXiv preprint arXiv:2408.01875, 2024b.

- Chen et al. (2024c) Zehui Chen, Kuikun Liu, Qiuchen Wang, Wenwei Zhang, Jiangning Liu, Dahua Lin, Kai Chen, and Feng Zhao. Agent-flan: Designing data and methods of effective agent tuning for large language models, 2024c. URL https://arxiv.org/abs/2403.12881.

- Dao (2024) Tri Dao. FlashAttention-2: Faster attention with better parallelism and work partitioning. In International Conference on Learning Representations (ICLR), 2024.

- Dao et al. (2022) Tri Dao, Daniel Y. Fu, Stefano Ermon, Atri Rudra, and Christopher Ré. FlashAttention: Fast and memory-efficient exact attention with IO-awareness. In Advances in Neural Information Processing Systems (NeurIPS), 2022.

- Dubey et al. (2024) Abhimanyu Dubey, Abhinav Jauhri, Abhinav Pandey, Abhishek Kadian, Ahmad Al-Dahle, Aiesha Letman, Akhil Mathur, Alan Schelten, Amy Yang, Angela Fan, et al. The llama 3 herd of models. arXiv preprint arXiv:2407.21783, 2024.

- Gravitas (2023) Gravitas. AutoGPT, 2023. URL https://github.com/Significant-Gravitas/AutoGPT.

- Guo et al. (2024) Zhicheng Guo, Sijie Cheng, Hao Wang, Shihao Liang, Yujia Qin, Peng Li, Zhiyuan Liu, Maosong Sun, and Yang Liu. StableToolBench: Towards Stable Large-Scale Benchmarking on Tool Learning of Large Language Models, 2024. URL https://arxiv.org/abs/2403.07714.

- Hao et al. (2023) Shibo Hao, Tianyang Liu, Zhen Wang, and Zhiting Hu. Toolkengpt: Augmenting frozen language models with massive tools via tool embeddings. In Alice Oh, Tristan Naumann, Amir Globerson, Kate Saenko, Moritz Hardt, and Sergey Levine (eds.), Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023, 2023. URL http://papers.nips.cc/paper_files/paper/2023/hash/8fd1a81c882cd45f64958da6284f4a3f-Abstract-Conference.html.

- Järvelin & Kekäläinen (2002) Kalervo Järvelin and Jaana Kekäläinen. Cumulated gain-based evaluation of ir techniques. ACM Transactions on Information Systems (TOIS), 20(4):422-446, 2002.

- Karpukhin et al. (2020) Vladimir Karpukhin, Barlas Oguz, Sewon Min, Patrick Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Wen-tau Yih. Dense passage retrieval for open-domain question answering. In Bonnie Webber, Trevor Cohn, Yulan He, and Yang Liu (eds.), Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 6769-6781, Online, 2020. Association for Computational Linguistics. doi: 10.18653/v1/2020.emnlp-main.550. URL https://aclanthology.org/2020.emnlp-main.550.

- Kishore et al. (2023) Varsha Kishore, Chao Wan, Justin Lovelace, Yoav Artzi, and Kilian Q. Weinberger. Incdsi: Incrementally updatable document retrieval. In Andreas Krause, Emma Brunskill, Kyunghyun Cho, Barbara Engelhardt, Sivan Sabato, and Jonathan Scarlett (eds.), International Conference on Machine Learning, ICML 2023, 23-29 July 2023, Honolulu, Hawaii, USA, volume 202 of Proceedings of Machine Learning Research, pp. 17122-17134. PMLR, 2023. URL https://proceedings.mlr.press/v202/kishore23a.html.

- Liu et al. (2024) Xiao Liu, Hao Yu, Hanchen Zhang, Yifan Xu, Xuanyu Lei, Hanyu Lai, Yu Gu, Hangliang Ding, Kaiwen Men, Kejuan Yang, et al. Agentbench: Evaluating llms as agents. In The Twelfth International Conference on Learning Representations, 2024.

- Liu et al. (2023) Zhiwei Liu, Weiran Yao, Jianguo Zhang, Le Xue, Shelby Heinecke, Rithesh Murthy, Yihao Feng, Zeyuan Chen, Juan Carlos Niebles, Devansh Arpit, et al. Bolaa: Benchmarking and orchestrating llm-augmented autonomous agents. arXiv preprint arXiv:2308.05960, 2023.

- Mehta et al. (2023) Sanket Vaibhav Mehta, Jai Gupta, Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Jinfeng Rao, Marc Najork, Emma Strubell, and Donald Metzler. DSI++: Updating transformer memory with new documents. In Houda Bouamor, Juan Pino, and Kalika Bali (eds.), Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pp. 8198-8213, Singapore, 2023. Association for Computational Linguistics. doi: 10.18653/v1/2023.emnlp-main.510. URL https://aclanthology.org/2023.emnlp-main.510.

- OpenAI (2024) OpenAI. Gpt-4 technical report, 2024. URL https://arxiv.org/abs/2303.08774.

- Patil et al. (2023) Shishir G. Patil, Tianjun Zhang, Xin Wang, and Joseph E. Gonzalez. Gorilla: Large language model connected with massive apis, 2023. URL https://arxiv.org/abs/2305.15334.

- Qiao et al. (2024) Shuofei Qiao, Ningyu Zhang, Runnan Fang, Yujie Luo, Wangchunshu Zhou, Yuchen Eleanor Jiang, Chengfei Lv, and Huajun Chen. Autoact: Automatic agent learning from scratch for qa via self-planning, 2024. URL https://arxiv.org/abs/2401.05268.

- Qin et al. (2023) Yujia Qin, Shihao Liang, Yining Ye, Kunlun Zhu, Lan Yan, Yaxi Lu, Yankai Lin, Xin Cong, Xiangru Tang, Bill Qian, Sihan Zhao, Lauren Hong, Runchu Tian, Ruobing Xie, Jie Zhou, Mark Gerstein, Dahai Li, Zhiyuan Liu, and Maosong Sun. ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs, 2023. URL https://arxiv.org/abs/2307.16789.

- Qu et al. (2024) Changle Qu, Sunhao Dai, Xiaochi Wei, Hengyi Cai, Shuaiqiang Wang, Dawei Yin, Jun Xu, and Ji-Rong Wen. Tool learning with large language models: A survey. arXiv preprint arXiv:2405.17935, 2024.

- Rajbhandari et al. (2020) Samyam Rajbhandari, Jeff Rasley, Olatunji Ruwase, and Yuxiong He. Zero: Memory optimizations toward training trillion parameter models, 2020. URL https://arxiv.org/abs/1910.02054.

- Robertson et al. (2009) Stephen Robertson, Hugo Zaragoza, et al. The probabilistic relevance framework: Bm25 and beyond. Foundations and Trends® in Information Retrieval, 3(4):333-389, 2009.

- Schick et al. (2023) Timo Schick, Jane Dwivedi-Yu, Roberto Dessì, Roberta Raileanu, Maria Lomeli, Luke Zettlemoyer, Nicola Cancedda, and Thomas Scialom. Toolformer: Language models can teach themselves to use tools, 2023. URL https://arxiv.org/abs/2302.04761.

- Shen et al. (2024) Weizhou Shen, Chenliang Li, Hongzhan Chen, Ming Yan, Xiaojun Quan, Hehong Chen, Ji Zhang, and Fei Huang. Small llms are weak tool learners: A multi-llm agent, 2024. URL https://arxiv.org/abs/2401.07324.

- Shinn et al. (2023) Noah Shinn, Federico Cassano, Ashwin Gopinath, Karthik Narasimhan, and Shunyu Yao. Reflexion: Language agents with verbal reinforcement learning. In Proceedings of the 37th International Conference on Neural Information Processing Systems, pp. 8634-8652, 2023.

- Sun et al. (2023) Weiwei Sun, Lingyong Yan, Zheng Chen, Shuaiqiang Wang, Haichao Zhu, Pengjie Ren, Zhumin Chen, Dawei Yin, Maarten de Rijke, and Zhaochun Ren. Learning to tokenize for generative retrieval. In Alice Oh, Tristan Naumann, Amir Globerson, Kate Saenko, Moritz Hardt, and Sergey Levine (eds.), Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, December 10 - 16, 2023, 2023. URL http://papers.nips.cc/paper_files/paper/2023/hash/91228b942a4528cdae031c1b68b127e8-Abstract-Conference.html.

- Touvron et al. (2023) Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, Aurelien Rodriguez, Armand Joulin, Edouard Grave, and Guillaume Lample. Llama: Open and efficient foundation language models, 2023. URL https://arxiv.org/abs/2302.13971.

- Wang et al. (2024) Renxi Wang, Haonan Li, Xudong Han, Yixuan Zhang, and Timothy Baldwin. Learning from failure: Integrating negative examples when fine-tuning large language models as agents. arXiv preprint arXiv:2402.11651, 2024.

- Wang et al. (2022) Yujing Wang, Yingyan Hou, Haonan Wang, Ziming Miao, Shibin Wu, Qi Chen, Yuqing Xia, Chengmin Chi, Guoshuai Zhao, Zheng Liu, Xing Xie, Hao Sun, Weiwei Deng, Qi Zhang, and Mao Yang. A neural corpus indexer for document retrieval. In Sanmi Koyejo, S. Mohamed, A. Agarwal, Danielle Belgrave, K. Cho, and A. Oh (eds.), Advances in Neural Information Processing Systems 35: Annual Conference on Neural Information Processing Systems 2022, NeurIPS 2022, New Orleans, LA, USA, November 28 - December 9, 2022, 2022. URL http://papers.nips.cc/paper_files/paper/2022/hash/a46156bd3579c3b268108ea6aca71d13-Abstract-Conference.html.

- Wei et al. (2023) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed Chi, Quoc Le, and Denny Zhou. Chain-of-thought prompting elicits reasoning in large language models, 2023. URL https://arxiv.org/abs/2201.11903.

- Wu et al. (2024a) Qingyun Wu, Gagan Bansal, Jieyu Zhang, Yiran Wu, Beibin Li, Erkang Zhu, Li Jiang, Xiaoyun Zhang, Shaokun Zhang, Jiale Liu, Ahmed Hassan Awadallah, Ryen W White, Doug Burger, and Chi Wang. Autogen: Enabling next-gen llm applications via multi-agent conversation framework. In COLM, 2024a.

- Wu et al. (2024b) Qinzhuo Wu, Wei Liu, Jian Luan, and Bin Wang. ToolPlanner: A Tool Augmented LLM for Multi Granularity Instructions with Path Planning and Feedback, 2024b. URL https://arxiv.org/abs/2409.14826.

- Xiong et al. (2021) Lee Xiong, Chenyan Xiong, Ye Li, Kwok-Fung Tang, Jialin Liu, Paul N. Bennett, Junaid Ahmed, and Arnold Overwijk. Approximate nearest neighbor negative contrastive learning for dense text retrieval. In 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, May 3-7, 2021. OpenReview.net, 2021. URL https://openreview.net/forum?id=zeFrfgyZln.

- Xu et al. (2024) Qiancheng Xu, Yongqi Li, Heming Xia, and Wenjie Li. Enhancing tool retrieval with iterative feedback from large language models. arXiv preprint arXiv:2406.17465, 2024.

- Yao et al. (2023) Shunyu Yao, Jeffrey Zhao, Dian Yu, Nan Du, Izhak Shafran, Karthik Narasimhan, and Yuan Cao. ReAct: Synergizing reasoning and acting in language models. In International Conference on Learning Representations (ICLR), 2023.

- Yin et al. (2024) Da Yin, Faeze Brahman, Abhilasha Ravichander, Khyathi Chandu, Kai-Wei Chang, Yejin Choi, and Bill Yuchen Lin. Agent lumos: Unified and modular training for open-source language agents. In Lun-Wei Ku, Andre Martins, and Vivek Srikumar (eds.), Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 12380-12403, Bangkok, Thailand, August 2024. Association for Computational Linguistics. doi: 10.18653/v1/2024.acl-long.670. URL https://aclanthology.org/2024.acl-long.670.

- Zeng et al. (2023) Aohan Zeng, Mingdao Liu, Rui Lu, Bowen Wang, Xiao Liu, Yuxiao Dong, and Jie Tang. Agenttuning: Enabling generalized agent abilities for llms, 2023.

A Real tool example

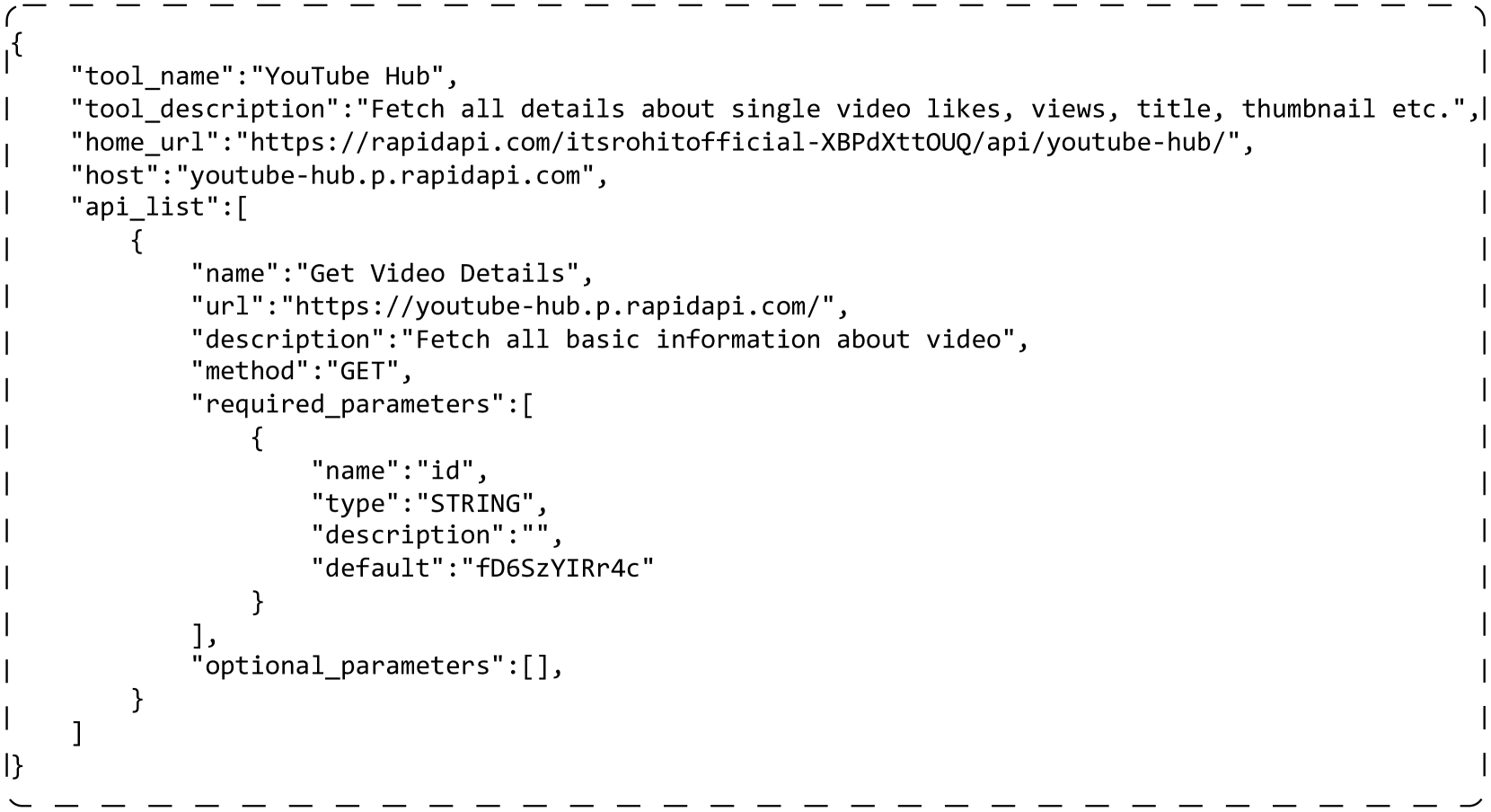

Figure 5 shows an example of a real tool. Each tool contains multiple APIs. The following fields are used in our experiments: "tool_name" is the name of the tool. "tool_description" describes the tool, such as what it does. Within each API, "name" is the name of the API, "description" describes the API, and "method" is the HTTP method used to call the API. "required_parameters" lists the parameters that must be filled in when calling the API, and "optional_parameters" lists additional parameters that may be set.

{

"tool_name":"YouTube Hub",

"tool_description":"Get detailed information about a single video, such as likes, views, title, and thumbnail.",

"home_url":"https://rapidapi.com/itsrohitofficial-XBPdXttOUQ/api/youtube-hub/",

"host":"youtube-hub.p.rapidapi.com",

"api_list":[

{

"name":"Get Video Details",

"url":"https://youtube-hub.p.rapidapi.com/",

"description":"Get all basic information about a video",

"method":"GET",

"required_parameters":[

{

"name":"id",

"type":"STRING",

"description":"",

"default":"fD6SzYIRr4c"

}

],

"optional_parameters":[]

}

]

}

Figure 5: An example of a real tool. The tool contains an API and unnecessary fields have been removed for simplicity.

B Tool virtualization implementation

ToolGen represents each tool with a single unique token, which proves effective for both tool retrieval and tool calling. We also introduce other methods for indexing tools: semantic, numeric, and hierarchical. Below we describe how each type of indexing is implemented.

Atomic

Atomic indexing is the method used in ToolGen. In contrast to the other methods, it represents each tool with a single token and therefore does not hallucinate nonexistent tools. We combine the tool name and the API name in the form <<tool name&&API name>> to form a single token. For example, the tool in Appendix A yields the token <<YouTube Hub&&Get Video Details>>.

Semantic

Semantic indexing maps each tool to the name used in ToolBench, which is also a combination of the tool name and the API name. However, this name is split into multiple tokens, so the model can perceive its semantic meaning. For the example in Appendix A, the resulting mapping is get_video_details_for_youtube_hub.

Numeric

Numeric indexing maps each tool to a unique number. We first obtain a list of all tools, which contains approximately 47,000 entries. Each tool is then represented by a five-digit number whose digits are separated by spaces. If the example in Appendix A is the 128th element in the list, we use 0 0 1 2 8 to represent the tool. Since the Llama-3 vocabulary encodes each digit as a single token, each tool is represented by five tokens.

Hierarchical

Hierarchical indexing also maps each tool to a number. Unlike numeric indexing, we inject structural information into the tool representation through iterative clustering. In each iteration, we cluster the tools into ten clusters, each assigned a number from 0 to 9. For each cluster, we repeat this clustering process until only one tool remains in the cluster. These steps form a clustering tree. We take the sequence of numbers from the root to a leaf as the representation of the tool in that leaf. The example in Appendix A may be assigned a number longer than five digits, such as 0 1 2 2 3 3 3.
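The numeric and hierarchical schemes can be sketched as below. The recursion follows the description above, but the `round_robin_split` function is only a placeholder for the clustering step, which in practice would group similar tools together; the text here does not say which clustering algorithm is used. All function names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Split = Callable[[Sequence[str]], List[Sequence[str]]]


def numeric_index(position: int, width: int = 5) -> str:
    """Zero-padded position with digits separated by spaces."""
    return " ".join(f"{position:0{width}d}")


def round_robin_split(items: Sequence[str]) -> List[Sequence[str]]:
    # Placeholder for clustering: deal the items into ten groups in turn.
    return [items[i::10] for i in range(10)]


def hierarchical_index(items: Sequence[str], split: Split,
                       prefix: Tuple[int, ...] = ()) -> Dict[str, str]:
    """Recursively split into up to ten groups until each leaf holds one tool."""
    if len(items) == 1:
        return {items[0]: " ".join(str(digit) for digit in prefix)}
    codes: Dict[str, str] = {}
    for digit, group in enumerate(split(items)):
        if group:  # skip empty clusters
            codes.update(hierarchical_index(group, split, prefix + (digit,)))
    return codes


tools = [f"tool{i}" for i in range(25)]
codes = hierarchical_index(tools, round_robin_split)
```

Because the depth of the tree depends on how the tools cluster, hierarchical codes vary in length, unlike the fixed-width numeric codes.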

C Constrained beam search details

When retrieving tools and completing end-to-end agent tasks, we use constrained beam search to restrict the generated actions to valid tool tokens. The detailed steps are given in Algorithm 1. The basic idea is to restrict the search space at each beam search step. To do this, we first construct a disjunctive trie, where each node represents a tool token ID and the children of a node are all feasible IDs that can follow the current ID. Using this trie, we can determine all possible next token IDs from the IDs searched so far. At each beam search step, we mask out the logits of all infeasible tokens, forcing only feasible IDs to be sampled or searched.

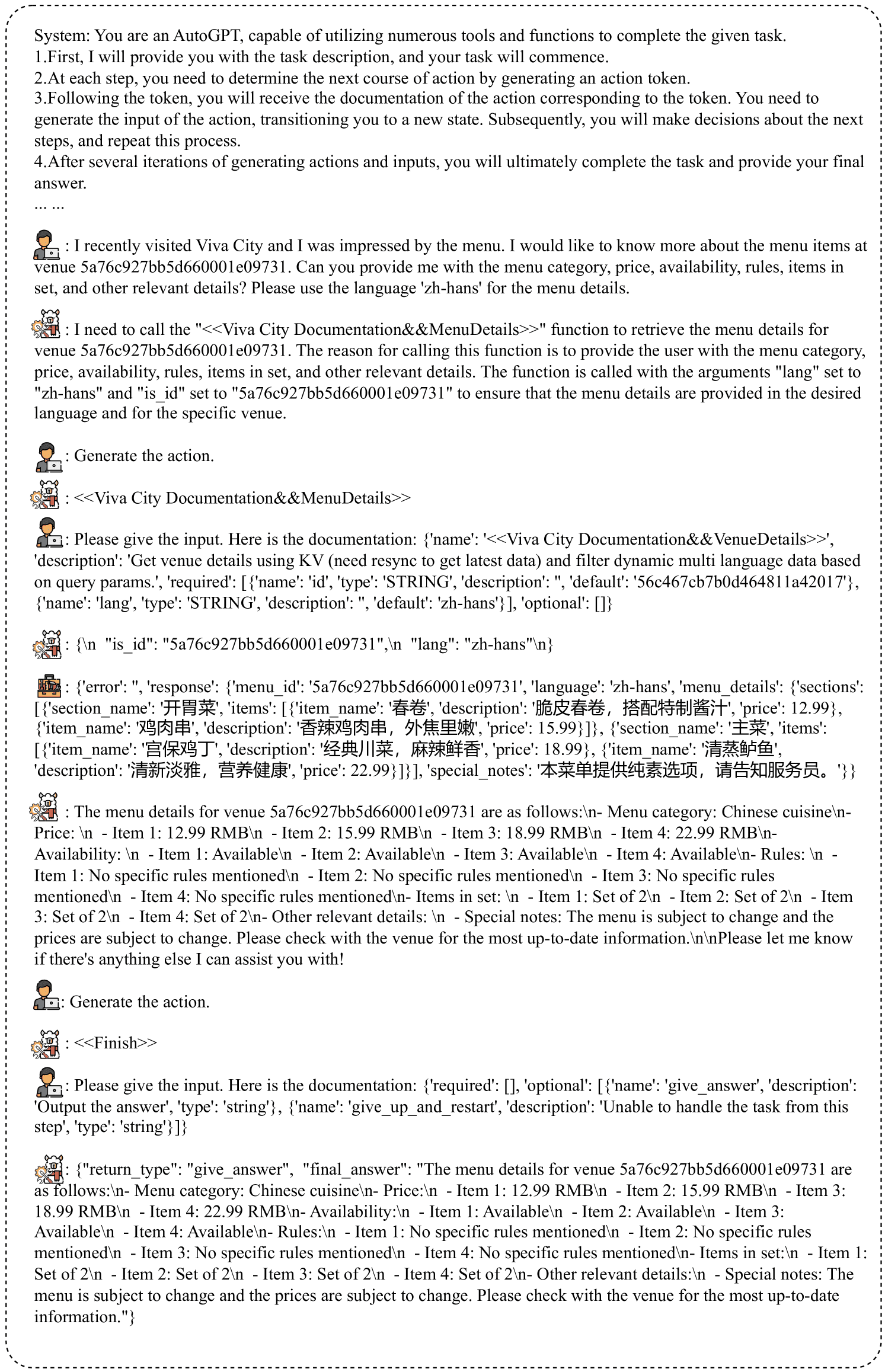

For retrieval, this constraint can be applied directly during generation. For the end-to-end agent task, since we decompose each inference step into three dialog turns, we can easily detect when ToolGen needs to generate an action and apply the constraint at that point. Figure 6 shows an example of end-to-end inference with ToolGen, where no relevant tools are provided for ToolGen to choose from: it simply generates the tool token and completes the task.
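The trie-based constraint can be illustrated with plain Python data structures. This is a minimal sketch, not Algorithm 1 itself: the token IDs are made up, and a real implementation would apply the mask to the model's logits inside the beam search loop.

```python
import math
from typing import Dict, Iterable, List, Sequence, Set

Trie = Dict[int, "Trie"]


def build_trie(token_id_sequences: Iterable[Sequence[int]]) -> Trie:
    """Insert each valid tool's token-ID sequence into a nested-dict trie."""
    trie: Trie = {}
    for sequence in token_id_sequences:
        node = trie
        for token_id in sequence:
            node = node.setdefault(token_id, {})
    return trie


def allowed_next_ids(trie: Trie, prefix: Sequence[int]) -> Set[int]:
    """Return the IDs that may follow the prefix generated so far."""
    node = trie
    for token_id in prefix:
        if token_id not in node:
            return set()
        node = node[token_id]
    return set(node)


def mask_logits(logits: Sequence[float], allowed: Set[int]) -> List[float]:
    """Set the logit of every infeasible token to negative infinity."""
    return [x if i in allowed else -math.inf for i, x in enumerate(logits)]


# Hypothetical vocabulary: tools encoded as [5, 7], [5, 9], and [3].
trie = build_trie([[5, 7], [5, 9], [3]])
first_step = allowed_next_ids(trie, [])
second_step = allowed_next_ids(trie, [5])
```

With atomic indexing every tool is a single token, so the trie has depth one and the mask reduces to allowing only tool-token IDs; the multi-level trie matters for the semantic, numeric, and hierarchical schemes.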

Table 6: Statistics of the dataset for the three stages of training. For tool memorization, there are some duplicates, resulting in a slightly larger sample size than the number of tools we used.

| Dataset | Tool memorization | Retrieval training (I1) | Retrieval training (I2) | Retrieval training (I3) | Retrieval training (All) | End-to-end agent tuning |
|---|---|---|---|---|---|---|
| #num | 49,936 | 194,086 | 222,783 | 72,833 | 489,702 | 183,336 |

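System: You are an AutoGPT, capable of utilizing numerous tools and functions to complete the given task.

1. First, I will provide you with the task description, and your task will commence.

2. At each step, you need to determine the next course of action by generating an action token.

3. Following the token, you will receive the documentation of the action corresponding to the token. You need to generate the input of the action, transitioning you to a new state. Subsequently, you will decide on the next step and repeat this process.

4. After several iterations of generating actions and inputs, you will ultimately complete the task and provide your final answer.

... ...

User: I recently visited Viva City and was impressed by the menu. I would like to know more about the menu items for venue 5a76c927bb5d660001e09731. Can you provide the menu categories, prices, availability, rules, items in set, and other relevant details? Please provide the menu details in the language 'zh-hans'.

A: I need to call the "<<Viva City Documentation&&MenuDetails>>" function to retrieve the menu details for venue 5a76c927bb5d660001e09731. The reason for calling this function is to provide the user with the menu categories, prices, availability, rules, items in set, and other relevant details. The function is called with the arguments "lang" set to "zh-hans" and "is_id" set to "5a76c927bb5d660001e09731", to ensure that the menu details are provided in the desired language and for the specific venue.

User: Generate the action.

A: <<Viva City Documentation&&MenuDetails>>

User: Please give the input. Here is the documentation: {'name': '<<Viva City Documentation&&VenueDetails>>', 'description': 'Get venue details using KV (needs resync to get the latest data) and filter dynamic multi-language data based on query parameters.', 'required': [{'name': 'id', 'type': 'STRING', 'description': '', 'default': '56c467cb7b0d464811a42017'}, {'name': 'lang', 'type': 'STRING', 'description': '', 'default': 'zh-hans'}], 'optional': []}

A: {\n "is_id": "5a76c927bb5d660001e09731",\n "lang": "zh-hans"\n}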

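Tool: {'error': '', 'response': {'menu_id': '5a76c927bb5d660001e09731', 'language': 'zh-hans', 'menu_details': {'sections': [{'section_name': 'Appetizers', 'items': [{'item_name': 'Spring Rolls', 'description': 'Crispy spring rolls served with a special sauce', 'price': 12.99}, {'item_name': 'Chicken Skewers', 'description': 'Spicy chicken skewers, crisp outside and tender inside', 'price': 15.99}]}, {'section_name': 'Main Courses', 'items': [{'item_name': 'Kung Pao Chicken', 'description': 'A classic Sichuan dish, spicy and fragrant', 'price': 18.99}, {'item_name': 'Steamed Sea Bass', 'description': 'Light, delicate, and nutritious', 'price': 22.99}]}], 'special_notes': 'This menu offers vegan options. Please inform your server.'}}}

A: The menu details for venue 5a76c927bb5d660001e09731 are as follows:\n- Menu category: Chinese cuisine\nPrices:\n - Item 1: 12.99 RMB\n - Item 2: 15.99 RMB\n - Item 3: 18.99 RMB\n - Item 4: 22.99 RMB\nAvailability:\n - Item 1: available\n - Item 2: available\n - Item 3: available\n - Item 4: available\n- Rules:\n - Item 1: no specific rules mentioned\n - Item 2: no specific rules mentioned\n - Item 3: no specific rules mentioned\n - Item 4: no specific rules mentioned\n- Items in set:\n - Item 1: 2 sets\n - Item 2: 2 sets\n - Item 3: 2 sets\n - Item 4: 2 sets\n- Other relevant details:\n - Special notes: The menu may change and prices may vary. Please confirm the latest information with the venue.\n\nPlease let me know if there is anything else I can help you with!

User: Generate the action.

A: <<Finish>>

User: Please give the input. Here is the documentation: {'required': [], 'optional': [{'name': 'give_answer', 'description': 'Output the answer', 'type': 'string'}, {'name': 'give_up_and_restart', 'description': 'Unable to handle the task from this step', 'type': 'string'}]}

A: {"return_type": "give_answer", "final_answer": "The menu details for venue 5a76c927bb5d660001e09731 are as follows:\n- Menu category: Chinese cuisine\n- Prices:\n - Item 1: 12.99 RMB\n - Item 2: 15.99 RMB\n - Item 3: 18.99 RMB\n - Item 4: 22.99 RMB\n- Availability:\n - Item 1: available\n - Item 2: available\n - Item 3: available\n - Item 4: available\n- Rules:\n - Item 1: no specific rules mentioned\n - Item 2: no specific rules mentioned\n - Item 3: no specific rules mentioned\n - Item 4: no specific rules mentioned\n- Items in set:\n - Item 1: 2 sets\n - Item 2: 2 sets\n - Item 3: 2 sets\n - Item 4: 2 sets\n- Other relevant details:\n - Special notes: The menu may change and prices may vary. Please confirm the latest information with the venue."}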

Figure 6: An example of inference with ToolGen. First, a system prompt is given, with no relevant tools listed. The user then gives a task query and ToolGen generates a Thought, after which we use the user role to prompt the model to generate an Action. After the Action is generated, we again use the user role to provide the tool documentation. The model then generates the tool inputs based on this documentation.


E Ablation study

Table 7 shows the ablation results for the end-to-end evaluation. For unseen instructions, the ToolGen agent performs slightly better without tool memorization or retrieval training. However, for unseen tools, both SoPR and SoWR drop without the first two stages of training. This suggests that the first two training stages contribute to ToolGen's generalization ability, with retrieval training being more important than tool memorization.

Table 7: Ablation results for the end-to-end evaluation of ToolGen. Here Inst. denotes unseen queries (instructions), while Tool. and Cat. denote tools not seen during training.

| Model | SoPR I1-Inst. | SoPR I2-Inst. | SoPR I3-Inst. | SoPR Avg. | SoWR I1-Inst. | SoWR I2-Inst. | SoWR I3-Inst. | SoWR Avg. |
|---|---|---|---|---|---|---|---|---|
| ToolGen | 54.60 | 52.36 | 43.44 | 51.82 | 50.31 | 54.72 | 26.23 | 47.28 |
| w/o retrieval training | 56.95 | 46.70 | 50.27 | 52.42 | 49.69 | 50.94 | 34.43 | 47.27 |
| w/o memorization | 56.03 | 47.96 | 57.38 | 53.69 | 49.08 | 59.43 | 34.43 | 49.70 |

| Model | SoPR I1-Tool. | SoPR I1-Cat. | SoPR I2-Cat. | SoPR Avg. | SoWR I1-Tool. | SoWR I1-Cat. | SoWR I2-Cat. | SoWR Avg. |
|---|---|---|---|---|---|---|---|---|
| ToolGen | 56.54 | 49.46 | 51.96 | 52.66 | 40.51 | 39.87 | 37.90 | 39.53 |
| w/o retrieval training | 49.47 | 40.31 | 37.90 | 42.84 | 36.71 | 30.07 | 36.29 | 34.18 |
| w/o memorization | 58.86 | 46.19 | 49.87 | 51.70 | 37.34 | 38.56 | 42.74 | 39.32 |

F Generalization capability

For the ToolGen agent, we measure performance on queries that require tools not seen during training. Table 8 shows the end-to-end evaluation of the models on unseen tools. The ToolGen agent performs worse than ToolLlama, which indicates poor generalization when completing full tasks. This generalization problem is prevalent in generative retrieval and is beyond the scope of this paper. We therefore leave it for future research.

Table 8: Generalization results for ToolGen. We test ToolGen and compare it with other models on queries that require tools not seen during training.

| Model | Setting | SoPR I1-Tool. | SoPR I1-Cat. | SoPR I2-Cat. | SoPR Avg. | SoWR I1-Tool. | SoWR I1-Cat. | SoWR I2-Cat. | SoWR Avg. |
|---|---|---|---|---|---|---|---|---|---|
| GPT-3.5 | G.T. | 58.90 | 60.70 | 54.60 | 58.07 | - | - | - | - |
| ToolLlama | G.T. | 57.38 | 58.61 | 56.85 | 57.68 | 43.04 | 50.31 | 54.84 | 49.04 |
| ToolGen | G.T. | 52.32 | 40.46 | 39.65 | 47.67 | 39.24 | 38.56 | 40.32 | 39.30 |
| GPT-3.5 | Retrieval | 57.59 | 53.05 | 46.51 | 52.78 | 46.20 | 54.25 | 54.81 | 51.58 |
| ToolLlama | Retrieval | 57.70 | 61.76 | 45.43 | 54.96 | 48.73 | 50.98 | 44.35 | 48.30 |
| ToolGen | | 56.54 | 49.46 | 51.96 | 52.66 | 40.51 | 39.87 | 37.90 | 39.53 |

Adapting ToolBench data to ToolGen

Our ToolGen data is adapted and converted from ToolBench data. Specifically, we use tool documents as the data for tool memorization training, where the inputs are tool documents and the outputs are the corresponding tool tokens.

For retrieval training, we use the data annotated for tool retrieval in ToolBench, where a query is annotated with multiple related tools. We take the query as input and convert the relevant tools into virtual tokens, which are then used as the output of the retrieval training.
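As a rough sketch, the two kinds of training samples described above can be represented as short user/assistant conversations. The message schema and the choice to join multiple tool tokens with spaces are assumptions for illustration; the text does not specify how several relevant tools are serialized.

```python
from typing import Dict, List, Sequence

Message = Dict[str, str]


def memorization_sample(tool_document: str, tool_token: str) -> List[Message]:
    """Tool memorization: tool document in, virtual tool token out."""
    return [
        {"role": "user", "content": tool_document},
        {"role": "assistant", "content": tool_token},
    ]


def retrieval_sample(query: str, tool_tokens: Sequence[str]) -> List[Message]:
    """Retrieval training: query in, tokens of the relevant tools out."""
    return [
        {"role": "user", "content": query},
        # Joining with spaces is an assumed serialization.
        {"role": "assistant", "content": " ".join(tool_tokens)},
    ]


sample = retrieval_sample(
    "Can you fetch the top stories on web development from Medium.com?",
    ["<<Medium&&/search/topics>>"],
)
```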

For end-to-end agent tuning, we use interaction trajectories as the source, with the following transformations: (1) Each trajectory lists the available tools for solving the query in its system prompt. When completing a task, ToolLlama relies on the tools retrieved into the system prompt, whereas ToolGen can generate tools directly. Therefore, we remove the tools from the system prompt. (2) We replace all tool names in the trajectory with the corresponding virtual tool tokens. (3) In the original trajectory, the agent model generates Thought, Action, and Action Input sequentially (the ReAct format). We decompose each ReAct step into three dialog turns. In the first turn, the agent model generates a Thought, and we use the user role to prompt the model to generate an Action. In the second turn, the model generates the Action, i.e., a virtual tool token, and we then fetch the documentation corresponding to that token so that the model knows which parameters to specify. In the third turn, the model generates the parameters for the tool.
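Transformation (3) can be sketched as a function that expands one ReAct step into the three assistant turns and the two user prompts between them. The function name and message schema are hypothetical, and the English prompt strings are illustrative wording rather than the exact prompts used in training.

```python
from typing import Dict, List

Message = Dict[str, str]


def decompose_react_step(thought: str, tool_token: str,
                         tool_document: str, parameters: str) -> List[Message]:
    """Expand one (Thought, Action, Action Input) step into three assistant turns."""
    return [
        # Turn 1: the model produces its Thought.
        {"role": "assistant", "content": thought},
        {"role": "user", "content": "Generate the action."},
        # Turn 2: the model produces the Action as a virtual tool token.
        {"role": "assistant", "content": tool_token},
        {"role": "user",
         "content": f"Please give the input. Here is the documentation: {tool_document}"},
        # Turn 3: the model produces the parameters for the tool.
        {"role": "assistant", "content": parameters},
    ]


turns = decompose_react_step(
    thought="I should look up the address for the given postcode.",
    tool_token="<<Find By PostCode&&Find By PostCode>>",
    tool_document="{'required': [{'name': 'postcode', 'type': 'string'}]}",
    parameters='{"postcode": "PL11DN"}',
)
```

Isolating the Action in its own turn is what makes it easy to apply the tool-token constraint only while that turn is being generated.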

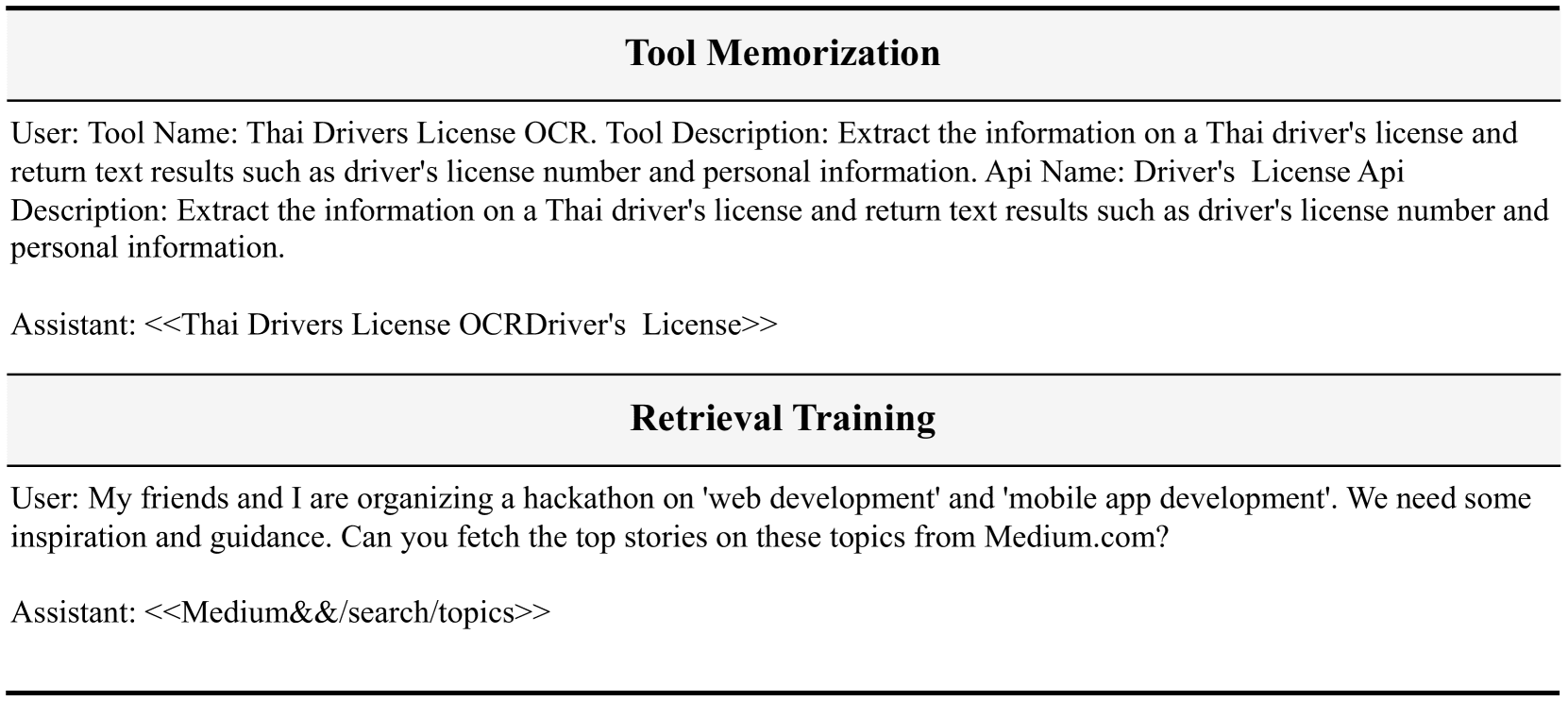

The number of samples in each dataset is shown in Table 6. Samples for tool memory and retrieval training are shown in Fig. 7, and samples for end-to-end agent tuning are shown in Figure 8.

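# Tool Memory

User: Tool Name: Thai Drivers License OCR. Tool Description: Extracts the information on a Thai driver's license and returns text results such as the driver's license number and personal information. API Name: Driver's License. API Description: Extracts the information on a Thai driver's license and returns text results such as the driver's license number and personal information.

A: <<Thai Drivers License OCR&&Driver's License>>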

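# Retrieval Training

User: My friends and I are organizing a hackathon on "web development" and "mobile app development". We need some inspiration and guidance. Can you fetch the top stories on these topics from Medium.com?

A: <<Medium&&/search/topics>>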

Fig. 7: Dataset examples for tool memory and retrieval training. We use the user role to represent inputs and the assistant role to represent outputs.
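Because the assistant outputs in these samples follow a `<<Tool&&API>>` pattern, a generated token can be split back into its parts when a call needs to be dispatched. The sketch below assumes that `&&` separates the tool name from the API name and that a token without `&&` (such as `<<Finish>>`) has no API part; `parse_tool_token` is a hypothetical helper, not part of ToolGen.

```python
import re
from typing import Optional, Tuple

# <<tool>> or <<tool&&api>>; the separator convention is an assumption.
_TOKEN = re.compile(r"<<(.+?)(?:&&(.+))?>>")


def parse_tool_token(token: str) -> Tuple[str, Optional[str]]:
    """Split a virtual tool token into (tool_name, api_name or None)."""
    match = _TOKEN.fullmatch(token.strip())
    if match is None:
        raise ValueError(f"not a virtual tool token: {token!r}")
    return match.group(1), match.group(2)


print(parse_tool_token("<<Medium&&/search/topics>>"))
print(parse_tool_token("<<Finish>>"))
```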

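# End-to-End Agent Tuning

System:

You are an AutoGPT, capable of utilizing numerous tools and functions to complete the given task.

1. First, I will provide you with the task description, and your task will start.

2. At each step, you need to determine the next course of action by generating an action token.

3. Following the token, you will receive the documentation of the action corresponding to that token. You need to generate the input of the action, which helps you move to a new state. You will then decide on the next step and repeat this process.

4. After several iterations of generating actions and inputs, you will ultimately complete the task and provide your final answer.

Remember:

1. State changes are irreversible, and you cannot return to a previous state.

2. Keep your actions concise, limiting them to those that best suit the current query.

3. You can make multiple attempts. If you plan to keep trying different conditions, try one condition per attempt.

4. If you believe you have gathered enough information, generate the action "<<Finish>> with argument give_answer" to provide your answer for the task.

5. If you feel unable to handle the task from this step, generate the action "<<Finish>> with argument give_up_and_restart".

Let's begin!

Task description: You should use actions to handle real-time user queries. Remember:

1. Always generate "<<Finish>>" at the end of the task. The final answer should contain enough information to show to the user. If you cannot handle the task, or you find that the actions always fail (the function is not valid now), use the action <<Finish>> with give_up_and_restart.

2. Only generate actions and inputs.

User:

Could you fetch the addresses for the postcode 'PL11DN'? I would like to know the number of items found, the district, ward, county, country, and geocode details (eastings, northings, latitude, and longitude).

Begin!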

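Assistant:

Based on the user's query, they want to fetch the addresses for the postcode 'PL11DN' and retrieve specific details such as the number of items found, district, ward, county, country, and geocode details. The current API call is for the function 'find_by_postcode' with the argument 'postcode' set to 'PL11DN'. This API is useful because it can provide addresses for a given UK postcode. By using this API, I will be able to retrieve the information required for the user's query.

User:

Generate the action.

A:

<<Find By PostCode&&Find By PostCode>>

User:

Please give the input. Here is the documentation: {'name': '<<Find By PostCode&&Find By PostCode>>', 'description': '"Find By PostCode" API gets addresses for the given UK postcode. This API provides a JSON interface to search UK addresses for a postcode.', 'required': [{'name': 'postcode', 'type': 'string', 'description': '', 'default': 'PL11DN'}], 'optional': []}

A:

{
  "postcode": "PL11DN"
}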

Tool:

{"error": "", "response": "{'status': 200, 'message': 'Success', 'noOfItems': 37, 'result': {'postcode': 'PL1 1DN', 'district': 'Plymouth', 'ward': 'St Peter and the Waterfront', 'county': 'Devon', 'country': 'England', 'geocode': {'eastings': '248025', 'northings': '0054420', 'lattitude': '50.370001', 'longitude': '-4.138397'}, 'addresses': [{'po_box': '', 'line_2': '', 'postcode_type': 'S', 'line_3': '', 'su_organisation_indicator': ' ', 'building_number': '5', 'udprn': '18911184', 'thoroughfare': 'St Andrews Cross', 'line_1': '5 St Andrews Cross', 'postcode_outcode': 'PL1', 'postcode_compact': 'PL11DN', 'dependant_thoroughfare': '', 'premise': '5', 'post_town': 'Plymouth', 'building_name': '', 'organisation_name': '', 'postcode': 'PL1 1DN', 'department_name': '', 'postcode_incode': '1DN', 'address': '5 St Andrews Cross, Plymouth, PL1 1DN', 'double_dependant_locality': '', 'sub_building_name': '', 'dependant_locality': '', 'delivery_point_suffix': '4T'}, {'po_box': '', 'line_2': '2 St Andrews Cross', 'p...

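User:

Generate the action.

A:

<<Finish>>

User:

Please give the input. Here is the documentation: {'required': [], 'optional': [{'name': 'give_answer', 'description': 'Output the answer', 'type': 'string'}, {'name': 'give_up_and_restart', 'description': 'Unable to handle the task from this step', 'type': 'string'}]}

A:

{
  "return_type": "give_answer",
  "final_answer": "Number of items found: 37\nDistrict: Plymouth\nWard: St Peter and the Waterfront\nCounty: Devon\nCountry: England\nGeocode details:\n- Eastings: 248025\n- Northings: 0054420\n- Latitude: 50.370001\nLongitude: -4.138397"
}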


Figure 8: Example of end-to-end agent tuning.

Copyright notice: this article is the copyright of AI Sharing Circle; please do not reproduce it without permission.
