Think&Cite: Improving Text Citation Accuracy Using Tree Search Techniques

summaries

Although Large Language Models (LLMs) perform well, they are prone to hallucinating and generating factually inaccurate information. This challenge has motivated efforts in attribute text generation, prompting LLMs to generate content that contains supporting evidence. In this paper, we propose a new framework called Think&Cite and formulate attribute text generation as a multi-step reasoning problem with integrated search. Specifically, we propose Self-Guided Monte Carlo Tree Search (SG-MCTS), which utilizes the self-reflective capability of LLMs to reflect on the intermediate states of MCTS to guide the tree expansion process. To provide reliable and comprehensive feedback, we introduced the Progress Reward Model (PRM), which measures the progress of tree search from the root node to the current state from both generative and attribute perspectives. We conducted extensive experiments on three datasets and the results show that our method significantly outperforms the baseline method.

1 Introduction

Large-scale language models (LLMs) (Zhao et al., 2023) perform well in many natural language processing tasks. Despite these advances, LLMs often generate responses that contain illusory and inaccurate information (Ji et al., 2023; Huang et al., 2023; Zhang et al., 2023). This problem compromises their reliability and, more importantly, users' trust in LLMs. To improve the reliability of LLMs, a new generation paradigm, attribute text generation, has been proposed that enables LLMs to generate responses that contain in-text citations to provide evidence (Gao et al., 2023b), as shown in Figure 1.

Figure 1: Given a question, the model generates text by citing passages from the corpus as supporting evidence.

Most existing work (Slobodkin et al., 2024; Sun et al., 2024; Fierro et al., 2024) simply prompts LLMs to provide citations when generating text. In addition, other work (Li et al., 2024; Huang et al., 2024) attempts to fine-tune LLMs on large amounts of supervised training data containing text with annotated citations.Despite these recent efforts, the development of LLMs that learn to generate faithful content with reliable citations remains an open challenge. First, existing methods use an autoregressive generation paradigm, which can be described as "System 1", a fast and instinctive mode of thinking, but not sufficiently accurate (Kahneman, 2011). As a result, any intermediate generative error (e.g., misrepresentation or misquotation) may result in an incorrect final response. Inspired by complex reasoning research (Zhang et al., 2024; Wang et al., 2024), we aimed to develop a model for the "System 2" paradigm for citing external evidence, which requires more in-depth, deliberate, and logical thinking (Kahneman, 2011). Second, attribute text generation often involves long text generation. liu et al. (2023) found that long responses to existing LLMs often contain unsupported statements and inaccurate quotes. We argue that the lack of explicit generation planning in previous work has hindered the progress of such systems.

In this paper, we present Think&Cite, a new framework for integrating search algorithms into attribute text generation. We conceptualize the generation task as a multi-step inference problem in which the model generates a sentence at each step through an iterative think-express-reference paradigm. To enhance this generative process, we propose Self-Guided Monte Carlo Tree Search (SG-MCTS), which extends classical MCTS with two innovations. First, our approach utilizes the self-reflective capability of LLMs to reflect on the intermediate states of the MCTS in real time, thus guiding the tree expansion process and actively avoiding inadequate inference paths. This is different from previous work that mainly reflects on the final outcome or the complete trajectory. Second, we propose the Progress Reward Model (PRM) to measure the progress of tree search from the root node to the current state from both generative and attribute perspectives. Compared to evaluating only individual steps, the progress-based reward model can provide a reliable and comprehensive assessment to guide the MCTS search process.

To the best of our knowledge, we are the first to apply a tree search algorithm to the task of attribute text generation. We conducted extensive experiments on three datasets to validate the effectiveness of our approach. The results show that our model significantly outperforms previous hints and fine-tuned baselines.

2 Related work

Text Generation with Attribution. Large Language Models (LLMs) have been used for text generation with attribution due to their excellent language generation capabilities (Gao et al., 2023b; Huang et al., 2024; Sun et al., 2024; Li et al., 2024; Slobodkin et al., 2024). LLMs for text generation with attribution can be roughly divided into two categories. The generated work can be broadly categorized into two types. The first type involves fine-tuning the LLM using preference learning (Li et al., 2024) and reinforcement learning (Huang et al., 2024), which teaches the LLM to generate supportive and relevant citations in order to obtain higher rewards. However, this approach relies on humans to organize high-quality datasets with annotated in-text citations. Another class of work directly instructs LLM to generate text with attributions through attribution-then-generation planning (Slobodkin et al., 2024) or using an external validator to guide generation (Sun et al., 2024). However, this approach generates text and citations in an autoregressive manner, where any inaccurate intermediate generation is prone to failure in the subsequent process. In contrast, our approach proposes self-guided tree search with progressive rewards to consider multiple paths.

LLM with tree search: Integrating tree search algorithms with LLM has attracted significant attention. Recent studies have investigated the use of tree search methods to enhance the performance of LLM during inference (Zhang et al., 2024; Wang et al., 2024; Ye and Ng, 2024).Sutton (2019) emphasized the superiority of scaling in learning and search over other methods. Empirical evidence further suggests that extended inference time computation can significantly improve LLM performance without additional training (Brown et al., 2024; Snell et al., 2024).A search (Hart et al., 1968) and Monte Carlo Tree Search (MCTS) (Browne et al., 2012) are used as planning techniques (Hart et al., 1968) and Monte Carlo Tree Search (MCTS) (Browne et al., 2012) are used as planning techniques to improve the performance of LLMs in solving complex inference problems. These search algorithms have been widely used in reinforcement learning (Silver et al., 2017) and in many real-world applications such as AlphaGo (Silver et al., 2016). Our work is the first to apply a tree search algorithm (i.e., Monte Carlo tree search) to solve the task of text generation with attribution. In addition, we propose self-guided MCTS, which relies on the reflective power of LLM to improve tree expansion.

3 Formulation of the problem

Our proposed framework aims to allow pre-trained LLM Mθ Generating responses with in-text citations that serve as evidence of the output content is called text generation with attribution (Slobodkin et al., 2024; Gao et al., 2023a).

Formally, given an input question x and a corpus of text passages D, the model Mθ Need to generate a reply y = (y 1 , ... , y T ), the response consists of T sentences, where each sentence yt Cite the list of paragraphs from D, denoted as Ct = {C t,1 , ... , C t,m } Due to the marginal benefit of combining more citations (Gao et al., 2023b), in this paper we allow up to three citations per sentence (m ≤ 3) and these citations are enclosed in square brackets, e.g., [1][2]. We also focus on knowledge-intensive scenarios where the problem involves world knowledge and where most sentences in the LLM contain multiple facts and require supporting citations as evidence. Following previous work (Gao et al., 2023b; Piktus et al., 2021), we partition the corpus D into 100-word paragraphs for retrieval, which makes it easier for humans to verify and does not introduce too much irrelevant information.

through an iterative "think-express-cite" paradigm. To enhance this generative process, we propose Self-Guided Monte Carlo Tree Search (SG-MCTS), which extends classical MCTS through two innovations. first, our approach utilizes the LLM's ability to self-reflect on the intermediate states of the MCTS in real-time, thereby guiding the tree expansion process and proactively avoiding insufficient inference paths. This is in contrast to previous work that primarily reflects on the final outcome or the complete trajectory. Second, we propose the Progress Reward Model (PRM) to measure the progress of the tree search from the root node to the current state in terms of two aspects, i.e., generation progress and attribution progress. Compared to evaluating only individual steps, the progress-based reward model can provide a reliable and comprehensive evaluation to guide the MCTS search process.

To the best of our knowledge, we are the first to apply a tree search algorithm to the task of text generation with attribution. We conducted extensive experiments on three datasets to validate the effectiveness of our approach. The results show that our model significantly outperforms previous cue-based and fine-tuned baselines.

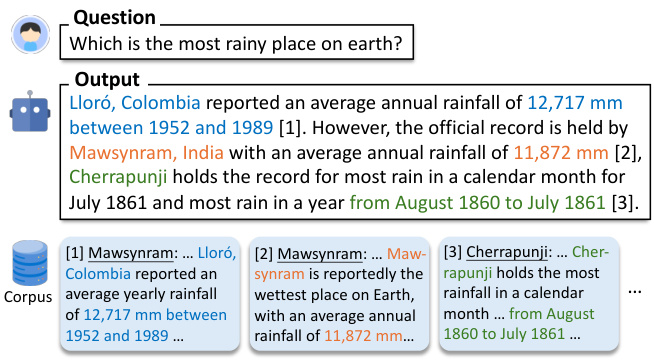

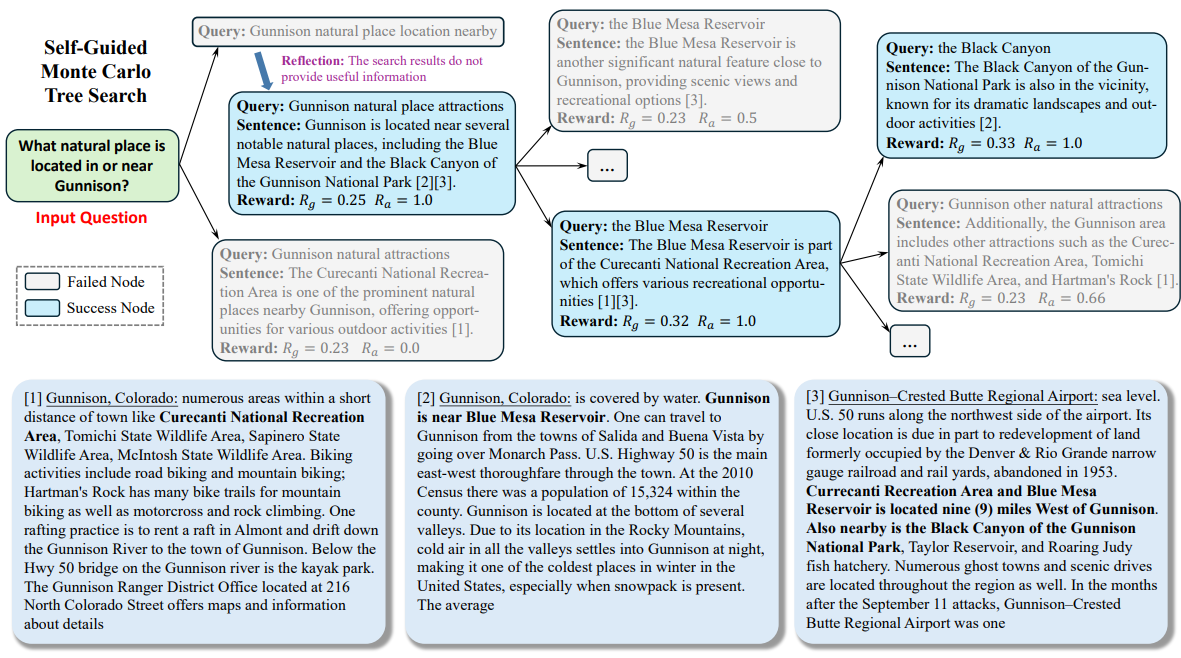

Figure 2: The overall framework of our proposed Think&Cite approach.

4 Methodology

The proposed THINK&CITE framework is built on a language agent for attribute text generation that uses Self-Guided Monte Carlo Tree Search (SG-MCTS) to plan and search for multiple generative paths and Progress Reward Modeling (PRM) to provide asymptotically fine-grained signaling of the search process. Figure 2 depicts the overall architecture of our approach.

4.1 Attribute Text Generation Agent

Inspired by previous work (Yao et al., 2022; Chen et al., 2023), we develop a linguistic agent to solve the task of text generation with attribution, which performs an iterative think-verbalize-cite process that leverages the inference and planning capabilities of the LLM.

Iterative Think-Verbalize-Cite. In order to generate the tth sentence, the agent first actively thinks about the next generated blueprint (e.g., content topic or abstract) as a search query q t The agent then uses the search tool to retrieve the most relevant top-K paragraphs D from the given corpus D through the Search operation (i.e., "Search: {query}"). Then, the agent uses the search tool to retrieve the most relevant top-K paragraphs D from a given corpus D through a Search operation (i.e., "Search: {query}"). t . Based on the retrieved passages, the agent references, via the Generate operation (i.e., "Generate: {sentence}"), the text from the Dt List of paragraphs Ct to express a sentence y t . The historical query, retrieved paragraphs, generated sentences, and citations (denoted as H = {(q i , D i , y i , C i )}^t^ i=1 ) will be used as a context for the next step in thinking and expressing. If the agent considers the task solved, it can output "End" to terminate the process. In this way, the agent can thoughtfully plan and retrieve different information, which can dynamically take into account shifts in content focus as the generation process proceeds, in contrast to previous work that relied on static reference corpora (Slobodkin et al. ..., 2024) is different. Moreover, this paradigm is similar to recent work on iterative retrieval-enhanced generation (Jiang et al., 2023; Shao et al., 2023), but differs in that our work requires the model to predict content blueprints for the next generation in order to retrieve the relevant information and carefully select appropriate references in order to merge them at the appropriate locations in the generated text.

4.2 Self-guided Monte Carlo tree search

We formulate text generation with attribution as a multi-step inference problem in which the model is thoughtful about the attribution of text. Monte Carlo tree search has become an effective search algorithm for many decision-making tasks (Silver et al., 2016; Ye et al., 2021). In this work, we propose Self-Guided Monte Carlo Tree Search (SG-MCTS), which utilizes the self-reflective capability of the LLM to guide the search process of MCTS. Previous work (Shinn et al., 2023; Zhou et al., 2024; Yu et al., 2024) typically reflects on the final result or complete trajectory, which is inefficient and sparse. In contrast, our approach aims to criticize and reflect on the intermediate state of MCTS to guide tree expansion in real-time and actively ignore erroneous generation paths.

Typically, MCTS is based on a strategy model πθ constructs the search tree T, which is usually the LLM M θ . In this tree, the node st = [q t , D t , y t , C t ] denotes the state of the tth tree level, including the search query q t D t Sentences, Expressions yt and cited paragraphs C t . The root node s0 = [x] denotes the input problem. In each iteration, SG-MCTS follows four steps, i.e., selection, reflection-guided extension, evaluation, and backpropagation.

Selection phase. The selection phase aims to identify a node s from the search tree Tt for subsequent expansion. The Upper Confidence Constraint (UCT) algorithm applied to trees (Kocsis and Szepesvári, 2006) is used to select the best node with the highest UCT score:

UCT(s t ) = V(s t ) + w √(ln N(p) / N(s)) t )) (1)

where V(s t ) is estimated at the assessment stage st The value function (expected reward), N(s t ) is st the visit count, w is the weight of the control exploration, and p is the st of the parent node.

Reflection-guided extension phase . In the extension phase, by generating multiple successor nodes s t+1 The nodes selected are extended by the think-verbalize-cite process s t The Think step first generates a blueprint as a search query q̂ t+1 It extracts the subject and content of the next sentence, which will be used to retrieve the paragraph D̂. t+1 . However, the strategy model can go wrong in the extension phase, e.g., by generating non-specific or irrelevant queries, which may hinder subsequent evidence retrieval and ultimately lead to incorrect sentence generation. Therefore, we introduce the Reflection step, where the strategy model is based on the question x and the retrieved passage D̂t+1 Reflective Inquiry q̂t+1 to recognize errors:

u = M θ (q̂ t+1 , D̂ t+1 , x), (2)

where the reflection text u contains suggestions about certain aspects of the search, e.g., that the query should be more focused on the search topic. Based on the reflection, the strategy model reformulates a new query qt+1 to retrieve the relevant paragraph D t+1 ::

q t+1 , Dt+1 = M θ (u, q̂ t+1 , D̂ t+1 , H), (3)

where H is the history trace. Note that the above process can be iterated until the model determines that the retrieved evidence is supportive or the maximum number of iterations is reached. Finally, the Verbalize and Cite steps are extracted from Dt+1 Generate the next sentence y t+1 and with accurate citations C t+1 ::

y t+1 , Ct+1 = M θ (q t+1 , D t+1 , H). (4)

The new node consists of the query, the retrieved corpus, the generated sentence and the cited paragraph, denoted as st+1 = [q t+1 , D t+1 , y t+1 , C t+1 ] Compared to simple expansions in a typical MCTS, our approach improves defective expansion nodes to avoid low-quality generation. Since MCTS trees are constructed incrementally, improving the quality of the next operation allows the model to navigate more favorable paths through the vast search space, thus improving the overall search quality of the tree.

Evaluation phase. The evaluation phase aims to use the progress reward model (see Section 4.3) to compute the new extension node st+1 The expected reward of R(s t+1 ). Progress assessment involves two aspects: generation and attribution. Generating progress incentives Rg Measurement of sentences generated so far y 1 , ... , yt+1 The quality of the text of the Vesting progress rewards Ra Evaluating the generated sentences y 1 , ... , yt+1 and cited paragraphs C 1 , ... , Ct+1 attributional consistency between them. Finally, the total reward is computed as the sum of the two: R(s t+1 ) = R g + R a The

Backpropagation phase. In the backpropagation phase, the new node st+1 of the reward R(s t+1 ) is propagated back to its parent s t that updates each node on the path from the root node to its parent s 0 , s 1 , ... , st The value function of the

N new (s i ) = N old (s i ) + 1, 0 ≤ i ≤ t (5)

V new (s i ) = (V old (s i )N old (s i ) + R(s t+1 )) / N new (s i ) (6)

where N old (s i ) and V old (s i ) are nodes si of the previously accessed count and value functions.

4.3 Progress Reward Model

Previous outcome reward models (Cobbe et al., 2021; Hosseini et al., 2024) and process reward models (Lightman et al., 2024; Dai et al., 2024) have focused on evaluating the final outcome or intermediate steps. In this work, we propose that after taking the next step, the measurement tree search from the root s0 to state st+1 The Progress. Since text with attribution consists of text content and its citations, we designed two aspects of progress rewards, generation progress rewards and attribution progress rewards, to assess the quality of the generated text content and the relevance of the citations, respectively.

4.3.1 Generating progress incentives

In direct preference optimization (DPO) (Rafailov et al., 2023), the marker-level logarithmic ratios can be interpreted as

implicit marker-level rewards under the maximum entropy reinforcement learning (RL) formulation. Therefore, we propose to utilize the existing DPO alignment model to measure generation of the next sentence yt+1 Post-generated sentences y1:t+1 = y 1 , ... , yt+1 The quality score of R g The

Specifically, we define a sentence-level Markov decision process (MDP) where the state st = (x, y 1 , ... , y t ) denotes the inputs and sentences generated so far, and the initial state s0 = x is an input problem. The action at = yt+1 denotes the next sentence to be generated. Thus, the RLHF optimization objective can be rewritten as a maximum entropy RL problem at the sentence level:

E atπθ(-|st)~ [∑^T^t=1 r'(s t , a t )] + βE s0x~ [H(π θ (-|s 0 ))],

where the sentence-level reward function r' can be computed as:

r'(s t , a t ) = { βlog π ref (a t |s t ), if st+1 it's not the end of the line

{ r'(y|x) + βlog π ref (a t |s t ) If s t+1 It's the end of the line.

The maximum entropy RL formula derives the optimal value function V and the Q function Q as:

Q(s t , a t ) = r'(s t , a t ) + V(s t+1 ),

V(s t ) = log ∑a exp(Q(s t , a)), when t ≤ T .

Therefore, the optimal policy π is derived as:

⇒ βlog π(a t |s t ) = Q(s t , a t ) - V(s t ),

⇒ βlog (π(a t |s t ) / π ref (a t |s t )) = V(s t+1 ) - V(s t ).

This motivated us to use the DPO strategy to derive the partial sum of rewards to formulate partial responses y1:t+1 Progress incentives R g ::

∑^t^k=0 βlog (π(a k |s k ) / π ref (a k |s k )) = V(s t+1 ) - V(s 0 ),

⇒ R g (y 1:t+1 ) = ∑^t^k=0 wk log (π(y k+1 |x, y 1:k ) / π ref (y k+1 |x, y 1:k )),

where y1:k denotes y 1 , ... , y k wk = 1 / (t+1) is the weight of each sentence-level log-likelihood ratio.

4.3.2 Attribute progress rewards

We use two citation metrics used in previous work (Gao et al., 2023b), citation recall and precision, to represent the attribution progress reward R a The

Specifically, citation recall measures partial response y1:t+1 of sentences that could be supported by the corresponding cited passage. We used the NLI model (Honovich et al., 2022) to check whether the cited passages could derive model responses. For each sentence yi (1 ≤ i ≤ t + 1), we will Ci in the cited paragraphs are joined as a premise and the generated sentence yi as assumptions for the NLI model. We set citation recall to 1 if the premises contain assumptions and 0 otherwise. citation precision evaluates the percentage of citations that support the corresponding sentence. We use the same NLI model as above to compute the precision score. For each citation c i,j If (1) Ci All citations in y contain the generated sentence yi and (2) Ci \ {c i,j } without the sentence y i Otherwise, the precision score is set to 0. We compute the precision score (0 or 1) for each citation and average it over all citations. Finally, we compute the F1 score as the attribution progress reward R a (y 1:t+1 , C 1 , ... , C t+1 ) to provide a balanced attribution quality metric between generated sentences and quoted passages.

5 Experiments

5.1 Experimental setup

Dataset. For evaluation, we use the ALCE benchmark test (Gao et al., 2023b), which consists of three datasets: (1) ASQA (Stelmakh et al., 2022), a long-form QA dataset containing ambiguous questions that require multiple answers to cover different aspects; (2) QAMPARI (Amouyal et al. 2022), a factual QA dataset in which the answer to each question is a list of entities extracted from different passages; (3) ELI5 (Fan et al., 2019), a long-form QA dataset containing how/why/what questions. For ASQA and QAMPARI, most of the questions can be answered via Wikipedia, so we use the 2018/12/20 Wikipedia snapshot as the corpus. For ELI5, since its questions are thematically diverse, we use Sphere (Piktus et al., 2021) (a filtered version of Common Crawl) as the corpus. Following Gao et al. (2023b), we use GTR (Ni et al., 2022) for Wikipedia and Sphere BM25 (Robertson et al., 2009) to retrieve the first 100 passages as a corpus for each question. See Appendix A for more details.

Evaluation Metrics. We use the evaluation metrics from the original ALCE benchmark test. To evaluate the correctness of the output, we use Exact Match (EM) Recall from ASQA, Recall-5 from QAMPARI, and Statement Recall from ELI5 to measure the percentage of golden answers (key pieces of information) in the output. We further compute Precision as a correctness metric for the QAMPARI dataset to measure the percentage of correct answers in the generated answers. To assess the citation quality of the output, we compute Citation Recall, which measures the percentage of sentences in the output that can be deduced from the passages they cite, and Citation Precision, which measures the percentage of citations that can help support the output sentences.

Baseline. We compare our approach with that based on ChatGPT and GPT-40 were compared to the following baselines:

Vanilla RAG The model was directly instructed to generate responses based on the given first 5 paragraphs and to cite them accordingly. We use context learning with two presentations (Brown et al., 2020).

The Summary/Snippet RAG provides a summary or snippet of the paragraph instead of the full text. The model will generate responses with citations based on the first 10 paragraph summaries or snippets.

Interact allows the model to further access the full text of certain paragraphs in the Summary/Snippet RAG method. The model can present an action "Check: Document [1] [2]" to get the full text of the corresponding document.

Inline Search allows the model to request an action "Search: {query}" to retrieve the most relevant paragraphs from the top 100. This method is similar to ours in that it acts as a direct comparison.

ReRank randomly draws four responses for each question and selects the best response based on a citation recall metric.

The above baseline has been applied and evaluated in the original ALCE benchmark test as reported in (Gao et al., 2023b). Furthermore, we compare our approach with previous work on text generation with attribution.FG-Reward (Huang et al., 2024) suggests using fine-grained rewards as training signals to fine-tune LLaMA-2-7B (Touvron et al., 2023) to generate attributable responses.VTG (Sun et al., 2024) uses changing memories and a two-layer verifier to generate attributable responses. 2024) uses evolving memories and a two-layer validator to guide the generative model (i.e., text-davinci-003).APO (Li et al., 2024) collates a preference pair dataset and applies preference optimization to LLaMA-2-13B for text generation with attribution.

Implementation details. We use LLaMA-3.1-8B-Instruct and GPT-40 as our strategy models to evaluate the performance of our approach. For reward models, we use the DPO model, Llama-3-8B-SFR-Iterative-DPO-R¹, to compute generative progress rewards and the NLI model, T5-XXL-TRUE-NLI-Mixture (Honovich et al., 2022), to compute attributed progress rewards. For each search query, we retrieve the top 3 passages from the corpus as candidate references D t . In the UCT algorithm (Equation 1), the weight w is set to 0.2. For SG-MCTS, we extend three child nodes for each parent node and set the maximum tree level to 6 and the maximum number of iterations for MCTS to 30.

5.2 Main results

Table 1 shows the results of our method and baseline on the three datasets.

First, it can be observed that the three retrieval-enhanced generation (RAG) methods exhibit moderate performance, although the use of summaries or snippets improves correctness. However, this improvement comes at the cost of citation quality, as paragraph information is highly compressed.ReRank leads to a consistent improvement in citation quality across the three datasets (e.g., vanilla RAG improves citation recall from 73.61 TP3T to 84.81 TP3T in ASQA). As a direct comparison, Inline Search is similar to our approach, but performs worse compared to the other cue baselines. This is due to simply requesting search queries without considering evidence quality and relevance.

Second, by fine-tuning the LLM on supervised training data with annotated citations, FG-Reward and APO show increased citation quality in the ASQA and ELI5 datasets, but not improved performance in QAMPARI. In addition, VTG employs a generative validator and an in-memory validator to assess the logical support of the evidence, leading to strong citation quality (e.g., citation recall of 86.71 TP3T in ASQA). However, fine-tuned LLMs are limited by the quality and quantity of supervised training data, where supporting evidence requires significant cost to link to the correct source. Furthermore, these methods still rely on autoregressive generation, a fast but less accurate mode of thinking. As a result, any intermediate generation errors (e.g., misrepresentations or inadequate citations) will lead to problems with the final response.

| ASQA | QAMPARI | ELI5 | |

|---|---|---|---|

| Correctness | Citation | Correctness | Citation |

| EM Rec. | Rec. | Prec. | Recall-5 |

| ChatGPT | |||

| Vanilla RAG | 40.4 | 73.6 | 72.5 |

| w/ ReRank | 40.2 | 84.8 | 81.6 |

| Summary RAG | 43.3 | 68.9 | 61.8 |

| w/ Interact | 39.1 | 73.4 | 66.5 |

| Snippet RAG | 41.4 | 65.3 | 57.4 |

| w/Interact | 41.2 | 64.5 | 57.7 |

| Inline Search | 32.4 | 58.3 | 58.2 |

| GPT-40 | |||

| Vanilla RAG | 41.3 | 68.5 | 75.6 |

| w/ ReRank | 42.1 | 83.4 | 82.3 |

| Summary RAG | 46.5 | 70.2 | 67.2 |

| w/ Interact | 48.1 | 73.1 | 72.8 |

| Snippet RAG | 45.1 | 68.9 | 66.5 |

| w/Interact | 45.2 | 67.8 | 66.7 |

| Inline Search | 40.3 | 65.7 | 66.9 |

| FG-Reward | 40.1 | 77.8 | 76.3 |

| VTG | 41.5 | 86.7 | 80.0 |

| APO | 40.5 | 72.8 | 69.6 |

| Ours (LLaMA) | 45.2 | 82.3 | 80.6 |

| Ours (GPT-40) | 50.1 | 89.5 | 87.1 |

Table 1: Evaluation results of attribute text generation on three datasets. "Rec." and "Prec." are abbreviations for recall and precision. Bold and underlined fonts indicate the best and second best results in each dataset, respectively.

Finally, our approach significantly outperforms all previous approaches in all three datasets.Think&Cite formulates the attribute text generation task as a multi-step inference problem and introduces a slow and deliberate mode of reflection to search for the best solution. By proposing a self-guided MCTS algorithm, Think&Cite utilizes the self-reflective capability of LLMs to guide the tree expansion process. In addition, the proposed progress reward model can further provide comprehensive and reliable feedback to help the model explore better generated responses.

5.3 Further analysis

We report further analysis of our method on ASQA using GPT-40, as we have similar findings on other datasets.

Ablation Study. To validate the effectiveness of our proposed framework, we analyzed its key design elements for ablation. We designed four variants: (1) w/o SG-MCTS removes the self-guided MCTS and directly generates answers step-by-step; (2) w/o Reflection removes the reflection step and employs the vanilla MCTS algorithm; (3) w/o GP Reward removes the need to generate progress rewards R g ; (4) w/o AP Reward Removed vesting progress rewards R a We show the results in Table 2. We show the results in Table 2. All variants perform worse than the original method, indicating the effectiveness of each component. Specifically, the performance of w/o SG-MCTS is significantly worse, which suggests that integrating a search algorithm into text generation with attribution is highly beneficial. The use of vanilla MCTS (w/o Reflection) leads to worse citation quality, due to the introduction of false references without reflecting on the retrieved results. Similarly, both w/o GP Reward and w/o AP Reward lead to worse performance, suggesting that both generation and citation quality checking are critical.

| Method | Correctness | Citation |

|---|---|---|

| EM Rec. | Rec. | Prec. |

| Think&Cite | 50.1 | 89.5 |

| w/oSG-MCTS | 42.1 | 78.2 |

| w/oReflection | 46.5 | 83.6 |

| w/oGPReward | 47.1 | 86.2 |

| w/oAPReward | 46.7 | 81.3 |

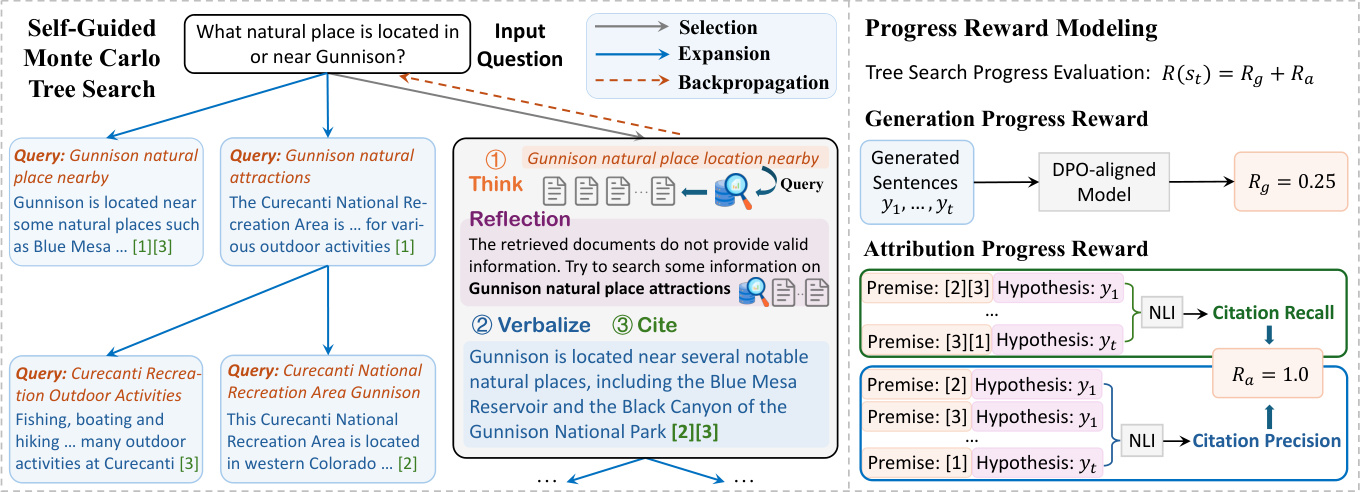

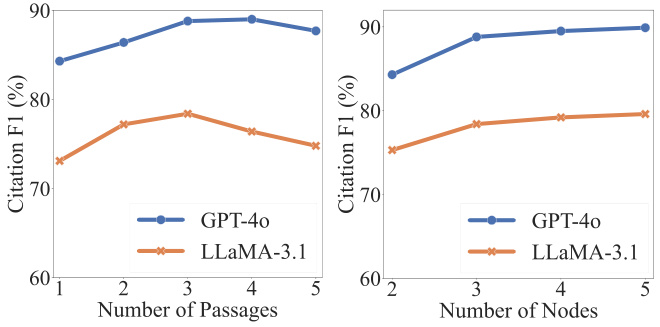

Self-reflection and simulation. In each simulation, SG-MCTS follows four key steps and employs self-reflection to improve the quality of the intermediate states in the extension by criticizing and improving the error queries. To check the effectiveness of reflection, we compared the performance between increasing the maximum number of simulations and increasing the maximum number of reflection operations. We first changed the maximum number of simulations to {10, 20, 30, 40} and fixed the maximum number of reflections to 10. Similarly, we changed the maximum number of reflections to {5, 10, 15, 20} and fixed the maximum number of simulations to 30. We show the F1 scores based on the citation recall and precision in Figure 3. The figure shows that increasing both the number of simulations and the number of reflections improves the performance of text generation with attribution. This is expected, as more extensive exploration improves the probability of finding the correct generation. However, more reflection steps can "overthink" the model, introducing noise and leading to performance degradation.SG-MCTS outperforms vanilla MCTS without reflection because there may be incorrect searches in the parent node, causing the reasoning process in the extended child node to continue along the wrong path. The reflection step improves incorrect retrievals due to insufficient queries, thus allowing subsequent exploration to proceed more accurately.

Figure 3: Results on ASQA regarding the number of simulations (left side) or the number of reflection steps (right side).

Hyperparameter analysis. Two hyperparameters are critical for correctness and citation quality: each query qt Number of paragraphs retrieved |D t | and extended child nodes in tree search st+1 The number of paragraphs retrieved. As shown in Figure 4, the quality of citations can be initially improved by increasing the number of retrieved paragraphs. However, further increases above a certain threshold lead to worse performance, mainly because merging more paragraphs introduces noise that negatively affects the reliability of the generated content. On the other hand, we observe that increasing the number of expanded nodes leads to a consistent improvement, although the improvement later stabilizes. Since expanding more nodes leads to higher computational costs, we extract three child nodes for each parent node.

Figure 4: Results on ASQA regarding the number of paragraphs (left side) or the number of extended nodes (right side).

5.4 Case Studies

In order to facilitate the understanding of the whole workflow of our method, we performed a qualitative analysis in ASQA. We show an example in Appendix C. Throughout the search process, LLM treats the input problem as the root node and gradually expands the search tree to reach the termination state. As shown, the model first generates a query (i.e., "Location of nearby Gunnison natural attractions") to retrieve passages. Since the paragraph does not contain valid information needed to answer the question, the model reflects and proposes a new query (i.e., "Gunnison natural attraction sites") for retrieval. Based on the retrieved paragraphs, the model generates sentences and references the second and third paragraphs (i.e., "[2][3]"). By following a multi-step generation process, the model can think deeply about the topic and output reliable content with accurate citations.

6 Conclusion

In this work, we present Think&Cite, a new framework for attribute text generation with integrated tree search.Think&Cite builds on the iterative think-express-cite generation paradigm. To enhance the generation process, we propose Self-Guided Monte Carlo Tree Search, which leverages the self-reflective capabilities of LLMs to criticize and correct the intermediate states of MCTSs to guide tree expansion. In addition, we propose the Progress Reward Model to measure the progress of tree search and provide reliable feedback. Extensive experiments on three datasets show that our proposed Think&Cite outperforms traditional hinting and fine-tuning methods.

limitations

The scope of our experiments is limited by the large computational cost of tree-based search methods. Future work could explore a wider range of attribute text generation datasets. In our model, Monte Carlo tree search is used for self-guided generation. Future work can explore additional search algorithms to evaluate the generality and robustness of our proposed framework.

appendice

A data set

We evaluate our approach on the ALCE benchmark, which consists of three datasets. Specifically, the ASQA dataset (Stelmakh et al., 2022) contains 948 questions with answers that can be found from Wikipedia; the QAMPARI dataset (Amouyal et al., 2022) contains 1,000 questions based on Wikipedia; and the ELI5 dataset (Fan et al., 2019) contains 1,000 questions and the answers can be found in Sphere (Piktus et al., 2021). Detailed information about these three datasets is presented in Table 3.

| data set | Corpus (# paragraphs) | Type of problem |

|---|---|---|

| ASQA | Wikipedia (21 million) | factual |

| QAMPARI | Wikipedia (21 million) | Factual (tabulation) |

| ELI5 | Sphere (899 million) | Why/How/What |

B Tips

We instructed LLM to perform self-guided tree search to generate attribute texts in a sample less learning manner.The context learning hints for the ASQA, QAMPARI and ELI5 datasets are shown in Tables 4, 5, and 6, respectively.In these hints, we first defined four operations in the iterative think-express-reference paradigm for LLM. Then, we constructed four contextual examples that were selected to be consistent with the ALCE benchmark (Gao et al., 2023b).

C Case studies

We show an example from ASQA in Figure 5.

Your goal is to write an accurate, interesting and concise answer to a given question using only the search results provided and citing them correctly. Use a fair and journalistic tone. Always state a citation for any fact. You can use four actions:

- """Search: keywords""", which requests some keywords and retrieves the most relevant documents based on the search and generation history.

- """Reflect: ideas"""" and reflect on the search results if a valid document was not obtained to answer the question.

- """Output: sentence """which outputs a long answer based on the retrieved document. In the answer, correctly cite by using [1][2][3]. Cite at least one document and up to three documents per sentence. If multiple documents support the sentence, only the least sufficient subset of documents are cited.

- """End""", it ends the quizzing task.

Here are some examples:

Question : Who set the record for the longest distance shot?

Search : Longest Distance Shot Record

Document[1] (Caption: David Akers) set a record for the longest field goal by kicking a 63-yard field goal over the crossbar.

Documentation[2] (Title: Dirk Borgognone) Dirk Borgognone, Dirk Ronald Borgognone (born January 9, 1968) is a former national...

Document[3] (Title: Russell Elkseben) against the University of Colorado. Despite early troubles in the game, including...

Reflection : The search results did not provide valid information to answer the question. It is better to search for the record for the longest field goal in the NFL.

Search : Record for longest field goal in the NFL

Document[4] (Title: Field Goal) toward their own end zone. The longest field goal in NFL history is 64 yards, a record set by...

Document [5] (Title: Field Goal Range) end zone at each end) was only 66 yards. Scaccia, playing indoor soccer, attempted a 64-yard...

Document[6] (Title: Range of Shots) This achievement is not an official record. All of the above shots were successful...

Output : The record for the longest field goal in an NFL game is 64 yards set by Matt Prater[4].

Search : Record for longest shot at any level of play, college

Document[7] (Title: Field Goal) toward their own end zone. The longest field goal in NFL history is 64 yards, a record set by...

Documentation[8] (Title: Range of Shots) The 35 to 40 yard line (closer in crosswinds) usually attempts the more risky...

Document[9] (Title: Field goal range) NFL, and has been banned by the NCAA since 1989) is 68 yards, held by Fabrizio Scaccia...

Output : However, the record for the longest field goal at any level of competition is 69 yards, kicked by college kicker Ove Johansson in a 1976 Abilene Christian University soccer game against East Texas State University [8]. The End.

Your goal is to write an accurate, interesting and concise answer to a given question using only the search results provided and citing them correctly. Use a fair and journalistic tone. Always state a citation for any fact. You can use four actions:

- """Search: keywords""", which requests some keywords and retrieves the most relevant documents based on the search and generation history.

- """Reflect: ideas"""" and reflect on the search results if a valid document was not obtained to answer the question.

- """Output: sentence """which outputs a long answer based on the retrieved document. In the answer, correctly cite by using [1][2][3]. Cite at least one document and up to three documents per sentence. If multiple documents support the sentence, only the least sufficient subset of documents are cited.

- """End""", it ends the quizzing task.

Here are some examples:

Question : What books has Nevil Shute written?

Search : Neville Shute Themes Social Disorders

Document[1] (Title: Nevil Shute) Early stages. My congratulations." His reputation as a writer led the Ministry of Information to send him to...

Document [2] (Title: Nevil Shute) The theme is crossing social barriers such as class ("The Lonely Road" and "The Landing"), race...

Documentation[3] (Title: Nevil Shute) Aircraft construction company, Airspeed Ltd. His novels grew in popularity, but he became more famous...

Output : Beyond the Black Stump [2], Lonely Road [2], Chessboard [2], In the Damp [2], Toolroom Trustee [2], Around the Bend [2].

Search : Nevil Shute Simple Readable Style

Documents[4] (Nevil Shute) Aircraft construction company, Airspeed Ltd. His novels grew in popularity, but he became more famous...

Document[5] (Checkerboard) People of Burma", both of which are central to the book's story. Schutt is concerned about the sales of the book...

Documentation[6] (wet) He used the idea of voting several times in the short story "The Curious Republic of Gondor"...

Reflection : The search results did not provide any useful information to answer the question. Better to search for books by Nevil Shute from the 1950s.

Search : 1950s books by Nevil Shute

Document[7] (Nevil Shute) Early stages. My congratulations." His reputation as a writer led the Ministry of Information to send him to...

Document [8] (Nevil Shute) The theme is crossing social barriers such as class ("The Lonely Road" and "The Landing"), race ("The Chessboard")...

Documents[9] (Nevil Shute) Aircraft construction company, Airspeed Ltd. His novels grew in popularity, but he became more famous...

Output : Malazan [7], Stephen Morris [7].

close

Your goal is to write an accurate, interesting and concise answer to a given question using only the search results provided and citing them correctly. Use a fair and journalistic tone. Always state a citation for any fact. You can use four actions:

- """Search: keywords""", which requests some keywords and retrieves the most relevant documents based on the search and generation history.

- """Reflect: ideas"""" and reflect on the search results if a valid document was not obtained to answer the question.

- """Output: sentence """which outputs a long answer based on the retrieved document. In the answer, correctly cite by using [1][2][3]. Cite at least one document and up to three documents per sentence. If multiple documents support the sentence, only the least sufficient subset of documents are cited.

- """End""", it ends the quizzing task.

Here are some examples:

Question : What causes bipolar disorder?

Search : What is bipolar disorder?

Document [1] (Title: Bipolar Disorder Type II) Research in setting personal perceptual and behavioral boundaries. The research suggests the following...

Document [2] (Title: Biology of Bipolar Disorder) Brain regions located in these areas are hypothesized to be the mechanism of bipolar disorder...

Document [3] (Title: Biology of Bipolar Disorder) regardless of polarity. Inflammation has been reported in bipolar disorder...

Reflection : The search results did not provide valid information to answer the question. It is better to search for symptoms of bipolar disorder.

Search : What are the symptoms of bipolar disorder? How long do they last?

Document [4] (Title: Bipolar Disorder | Definition in the Medical Dictionary) Bipolar Disorder | Definition of Bipolar Disorder...

Document [5] (Title: Mania and Bipolar Disorder) can go from depressed to "very happy" all in one day, or even in a few days, which...

Documentation[6] (Title: For Individuals - Adam Schwartz) For Individuals 2014 Adam Schwartz The information is extensive and covers...

Output : Bipolar disorder is a mood disorder that causes extreme mood swings ranging from euphoria to depression [4]. Mood swings may range from days to months [5].

Search : What causes bipolar disorder?

Documentation[7] (Title: Bipolar Disorder) Bipolar disorder is usually a cyclical disorder in which an individual exhibits depression...

Document [8] (Title: Depression Bipolar Disorder Symptoms 2019 | Beating Depression) Depression Bipolar Disorder Symptoms 2019.

Documentation[9] (Title: Mental Disorders) may include parental factors, including parental rejection, lack of parental warmth...

OUTPUT : We are still unsure of the exact factors that contribute to this disease, but genetics is thought to be a major factor [7].

close

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...