Text2Edit: A Native Multimodal Model for Text-Driven Video Ad Creation (Unreleased)

General Introduction

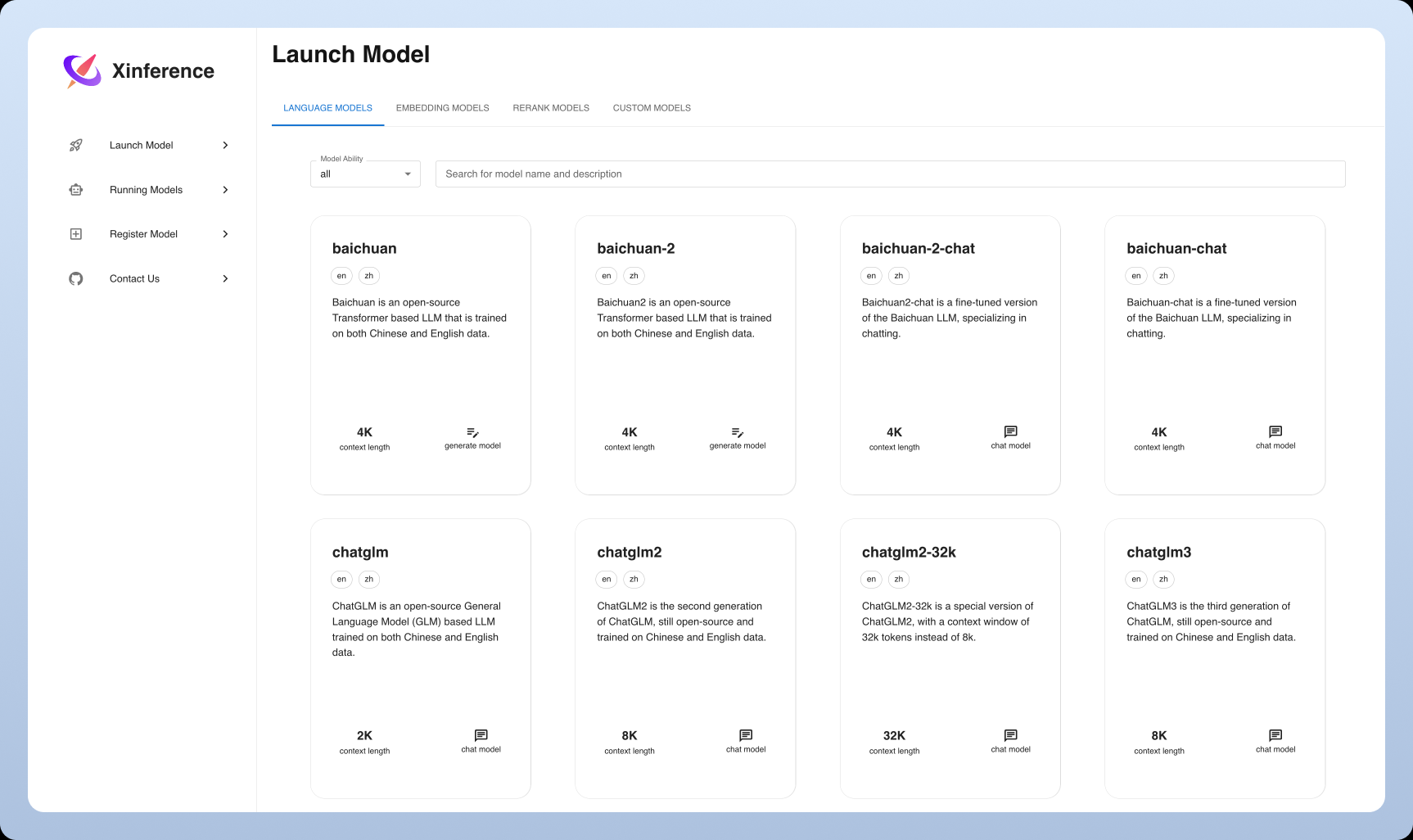

Text2Edit is an open-source project hosted on GitHub that aims to provide efficient text editing and advertisement generation. Its main goal is to help users quickly process text content and generate high-quality advertising material through an easy-to-use interface. The project is maintained by a group of developers, and the code base is open, so users can freely access it and contribute. The project's main programming languages are JavaScript, HTML and CSS, chosen for cross-platform compatibility.

Technical characteristics

1. Multimodal Large Language Models (MLLMs)

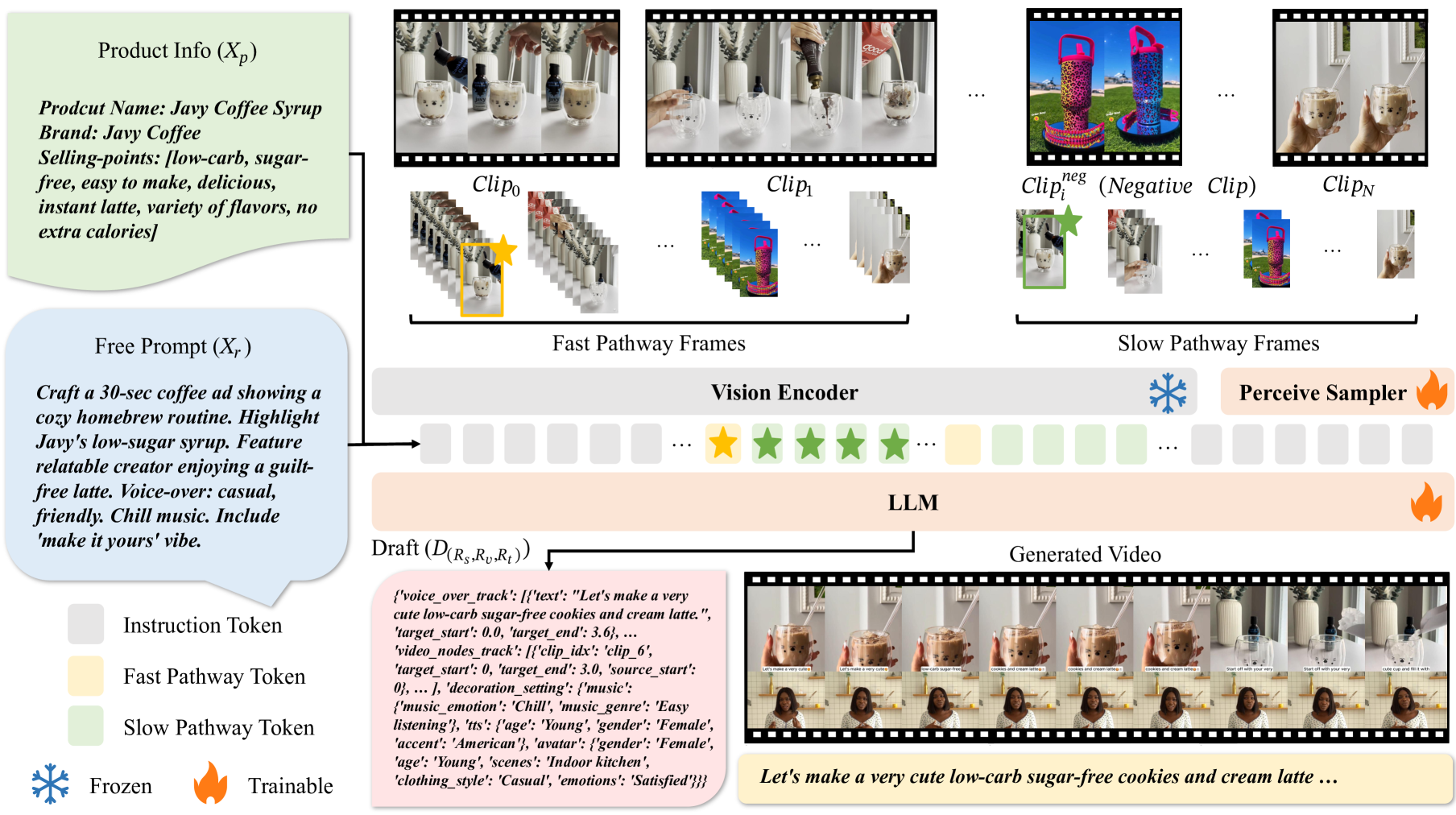

The multimodal large language model (MLLM) is the foundation of this project. It can process information in several modalities at once, including text, images and video.

2. High-frame-rate sampling and slow-fast processing techniques

To better understand the spatio-temporal information in a video, the project combines high-frame-rate sampling with slow-fast processing:

- High-frame-rate sampling: video frames are sampled at 2 frames per second (fps), so the model sees temporal changes at half-second granularity. This improves its ability to follow motion and scene changes in the video.

- Slow-fast processing: the model processes video frames along two paths at the same time.

  - Slow path: frames are processed at a lower frame rate (e.g., 0.5 fps), but more tokens are assigned to each frame in order to capture detailed spatial information.

  - Fast path: frames are processed at a high frame rate (e.g., 2 fps), but fewer tokens are assigned to each frame, with the focus on capturing rapidly changing scenes.

This dual-path strategy balances the spatio-temporal and semantic information in the video and improves the model's understanding of the video content.
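The two paths can be illustrated with a small sampling plan. This is a minimal sketch, not the project's implementation: the frame rates come from the examples above, while the per-frame token budgets (`slow_tokens=64`, `fast_tokens=16`), the `FrameSample` type and the function names are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class FrameSample:
    timestamp: float  # seconds from the start of the video
    path: str         # "slow" or "fast"
    tokens: int       # token budget assigned to this frame


def sample_timestamps(duration_s: float, fps: float) -> list[float]:
    """Return evenly spaced timestamps for the given sampling rate."""
    step = 1.0 / fps
    count = int(duration_s * fps)
    return [round(i * step, 3) for i in range(count)]


def slow_fast_plan(
    duration_s: float,
    slow_fps: float = 0.5,   # example rate from the text
    fast_fps: float = 2.0,   # example rate from the text
    slow_tokens: int = 64,   # assumed budget: few frames, many tokens each
    fast_tokens: int = 16,   # assumed budget: many frames, few tokens each
) -> list[FrameSample]:
    """Build a combined plan for both paths, ordered by time."""
    slow = [FrameSample(t, "slow", slow_tokens)
            for t in sample_timestamps(duration_s, slow_fps)]
    fast = [FrameSample(t, "fast", fast_tokens)
            for t in sample_timestamps(duration_s, fast_fps)]
    return sorted(slow + fast, key=lambda s: (s.timestamp, s.path))


plan = slow_fast_plan(10.0)
slow_count = sum(1 for s in plan if s.path == "slow")
fast_count = sum(1 for s in plan if s.path == "fast")
total_tokens = sum(s.tokens for s in plan)
```

With these assumed budgets, a 10-second clip yields 5 slow frames and 20 fast frames, and each path contributes 320 tokens (640 in total). The trade-off is that the fast path buys four times the temporal resolution by spending a quarter of the tokens on each frame.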

3. Text-driven editing

The text-driven editing mechanism lets users control the outcome of video editing through text input. Users can specify the video length, storyline, target audience, script style, the product selling points to emphasize, and other information. Based on these text prompts, the model generates draft video edits that match the user's requirements, which keeps the output both controllable and flexible.
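As an illustration of how such controls might be collected and turned into a text prompt, here is a minimal sketch. The `EditRequest` type, its field names and the prompt layout are hypothetical; the source lists the kinds of information a user can provide but does not specify a prompt format.

```python
from dataclasses import dataclass, field


@dataclass
class EditRequest:
    """Hypothetical container for the controls mentioned in the text."""
    duration_s: int
    storyline: str
    target_audience: str
    script_style: str
    selling_points: list[str] = field(default_factory=list)


def build_prompt(req: EditRequest) -> str:
    """Serialize the request as a plain-text prompt (assumed layout)."""
    lines = [
        f"Video length: {req.duration_s} seconds",
        f"Storyline: {req.storyline}",
        f"Target audience: {req.target_audience}",
        f"Script style: {req.script_style}",
    ]
    if req.selling_points:
        lines.append("Selling points to emphasize:")
        lines.extend(f"- {point}" for point in req.selling_points)
    return "\n".join(lines)


request = EditRequest(
    duration_s=30,
    storyline="problem, product reveal, call to action",
    target_audience="commuters",
    script_style="upbeat",
    selling_points=["long battery life", "lightweight"],
)
prompt = build_prompt(request)
```

Keeping the controls in a structured object before serializing them makes it easy to validate inputs (for example, rejecting a non-positive duration) before anything is sent to the model.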

4. Specific implementation of video editing

- Embedding and processing of video frames: video frames are first converted into embedding vectors by a visual encoder such as CLIP or OpenCLIP. These vectors are fed into the LLM together with the text embedding vectors, and the model processes the combined sequence through a self-attention mechanism to generate video editing drafts.
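The idea of attending over one joint sequence of frame and text embeddings can be shown with a toy example. This is a sketch under strong simplifying assumptions: the vectors are hand-written stand-ins for encoder output, and the attention uses a single head with identity projections, whereas a real LLM uses learned query, key and value projections, many heads and many layers.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product self-attention, identity projections."""
    d = len(seq[0])
    out = []
    for q in seq:
        # Similarity of this position to every position in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        weights = softmax(scores)
        # Weighted average of all positions (values are the inputs themselves).
        out.append([sum(w * v[i] for w, v in zip(weights, seq))
                    for i in range(d)])
    return out


# Stand-ins for visual-encoder output (one vector per sampled frame).
frame_embeddings = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
# Stand-in for the embedded text prompt.
text_embeddings = [[0.0, 0.0, 1.0, 0.0]]

# Frames and text share one sequence, so every position can attend to both.
sequence = frame_embeddings + text_embeddings
mixed = self_attention(sequence)
```

After attention, each output vector is a weighted mix of all frame and text vectors, which is how information from the prompt can influence how each frame is interpreted.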

- Draft generation and post-processing: the drafts output by the model include the arrangement of video clips, narration scripts and decorative elements (e.g., background music, digital human avatars). Post-processing steps such as speech synthesis and music retrieval then turn these drafts into a renderable video.
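The two steps above can be sketched as a draft data structure plus a post-processing pass. Everything here is hypothetical: the `Clip` and `Draft` types, the field names and the output layout are illustrative, and speech synthesis and music retrieval are passed in as plain callables rather than tied to any real service.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Clip:
    source: str          # identifier of the source video
    start_s: float       # in-point within the source, in seconds
    end_s: float         # out-point within the source, in seconds
    narration: str = ""  # narration script for this clip, if any


@dataclass
class Draft:
    clips: list[Clip]
    music_query: str = ""            # description of the desired music
    use_digital_human: bool = False  # whether to overlay an avatar


def postprocess(
    draft: Draft,
    tts: Callable[[str], str],         # text -> audio reference (assumed)
    find_music: Callable[[str], str],  # query -> track reference (assumed)
) -> dict:
    """Lay clips end to end and attach voice-over and music references."""
    timeline = []
    cursor = 0.0
    for clip in draft.clips:
        voiceover: Optional[str] = tts(clip.narration) if clip.narration else None
        timeline.append({
            "source": clip.source,
            "in": clip.start_s,
            "out": clip.end_s,
            "at": cursor,  # position on the output timeline
            "voiceover": voiceover,
        })
        cursor += clip.end_s - clip.start_s
    music = find_music(draft.music_query) if draft.music_query else None
    return {"timeline": timeline, "music": music, "duration_s": cursor,
            "digital_human": draft.use_digital_human}


draft = Draft(
    clips=[Clip("a.mp4", 0.0, 3.0, "Meet the product."),
           Clip("b.mp4", 10.0, 12.5)],
    music_query="upbeat",
)
# Stub services for illustration only.
project = postprocess(draft, tts=lambda text: f"audio:{len(text)}",
                      find_music=lambda query: f"track:{query}")
```

Separating the draft from post-processing means the model only has to produce a compact, text-like description, while audio and rendering are handled by dedicated components.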

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
