Tarsier: an open-source video understanding model for generating high-quality video descriptions

General Introduction

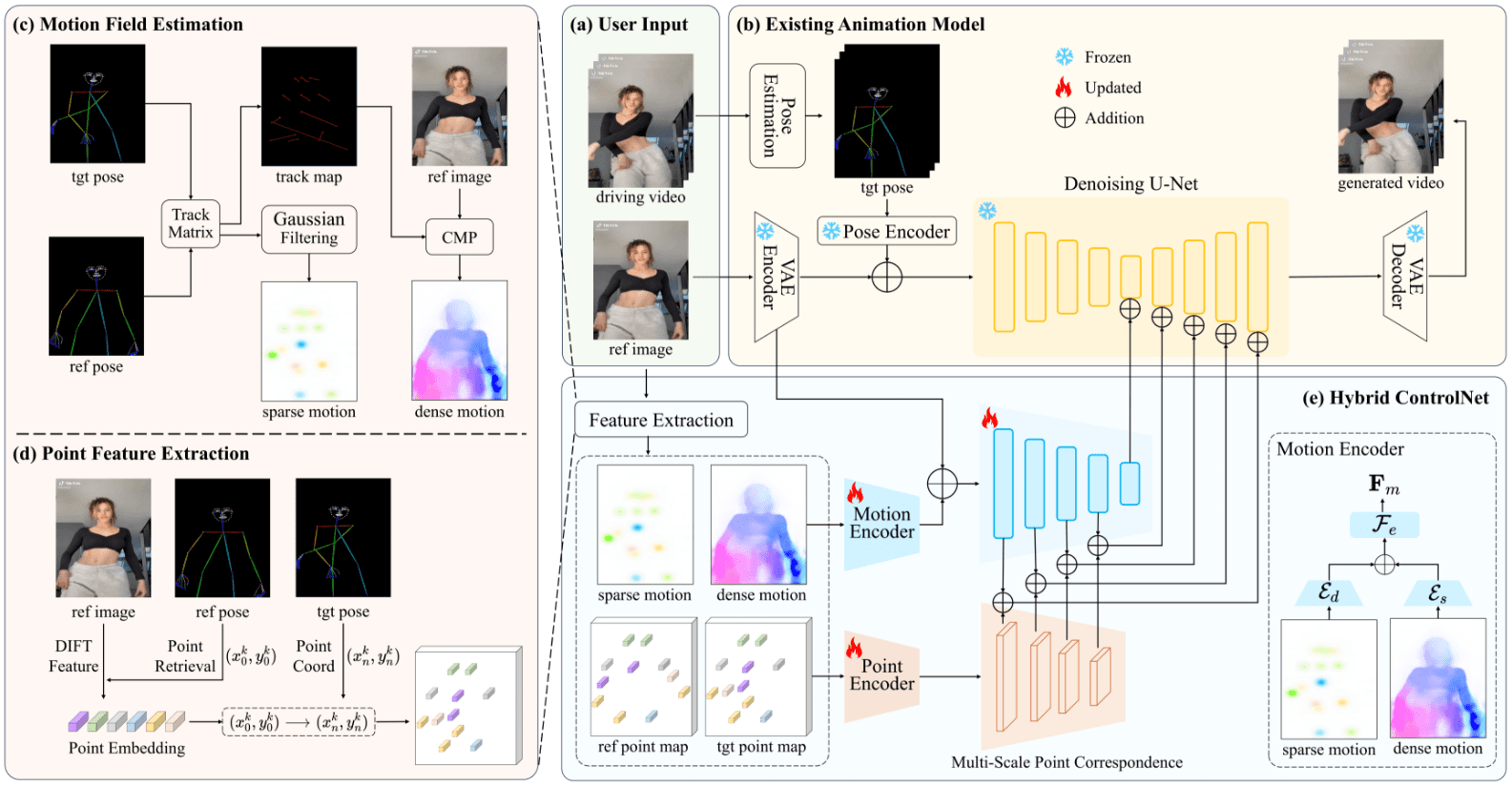

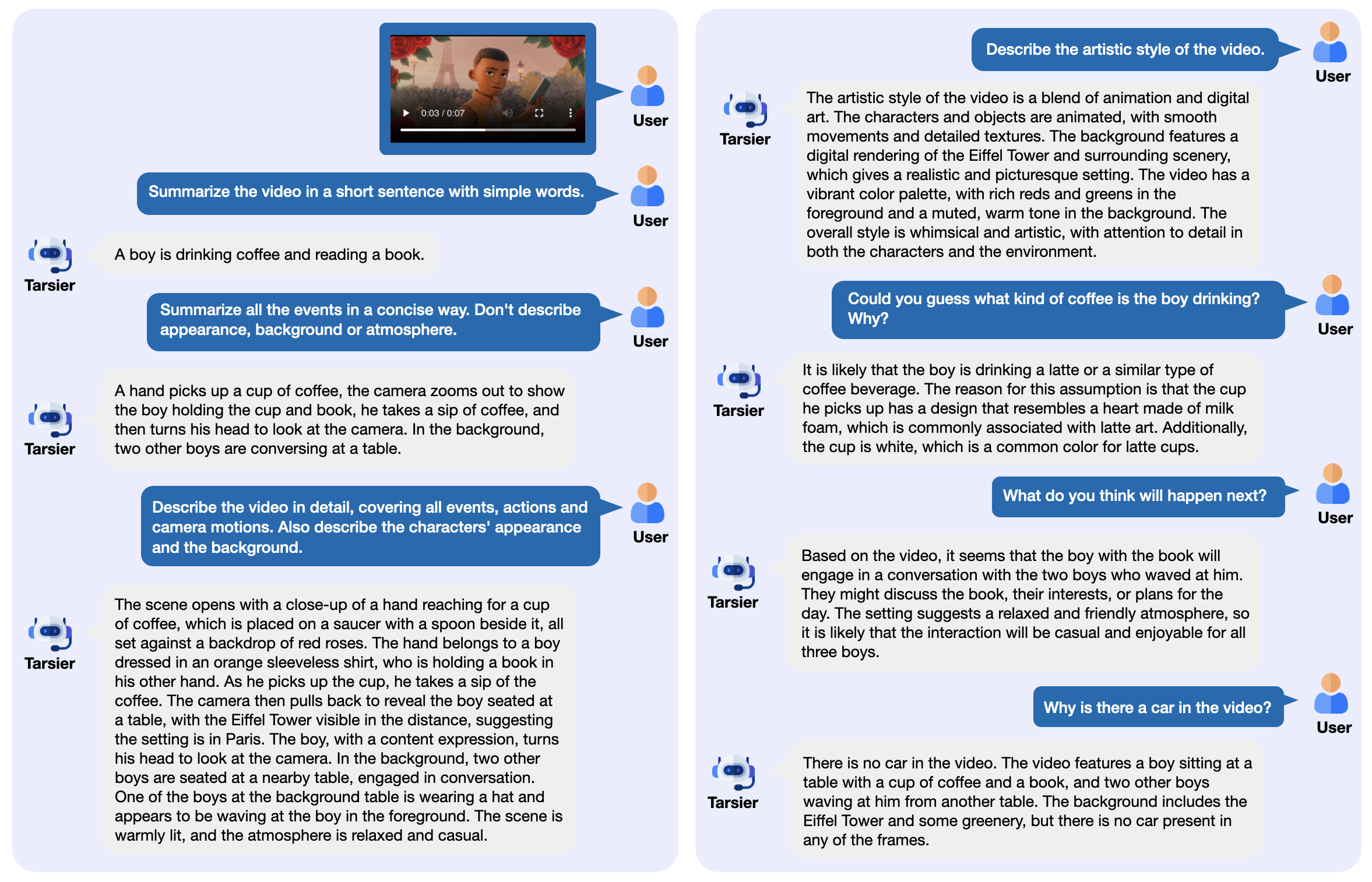

Tarsier is a family of open-source video-language models developed by ByteDance, designed mainly for generating high-quality video descriptions. Its architecture is simple: a CLIP-ViT encoder processes the video frames, and a large language model (LLM) models the temporal relationships between them. The latest version, Tarsier2-7B (released in January 2025), achieves state-of-the-art results on 16 public benchmarks and is competitive with models such as GPT-4o. Tarsier supports video description, question answering, and zero-shot captioning, and its code, models, and data are publicly available on GitHub. The project also introduces DREAM-1K, a benchmark of 1,000 diverse video clips for evaluating video description capabilities.
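Because a clip contains far more frames than a visual encoder is usually given, video-language models typically pass it a fixed number of sampled frames. The sketch below shows one common scheme, uniform sampling at segment centers. The function is hypothetical and is not taken from Tarsier's preprocessing code, which may sample frames differently.

```python
def sample_frame_indices(total_frames: int, num_samples: int) -> list[int]:
    """Pick num_samples frame indices spread evenly over a clip.

    The clip is split into num_samples equal segments and the center
    frame of each segment is kept. Hypothetical helper for illustration.
    """
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    # Never request more frames than the clip actually has.
    num_samples = min(num_samples, total_frames)
    segment = total_frames / num_samples
    # (i + 0.5) * segment is the center of segment i; int() floors it,
    # so every index stays strictly below total_frames.
    return [int((i + 0.5) * segment) for i in range(num_samples)]


if __name__ == "__main__":
    print(sample_frame_indices(100, 4))
```

Segment centers avoid always picking the very first and last frames, which are often fades or black frames.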

Feature List

- Generate detailed video descriptions: analyze video content and output detailed text.

- Video Q&A: answer questions about a video, such as what happens or specific details.

- Zero-shot captioning: generate captions for videos without task-specific training.

- Multi-task video understanding: performs well on multiple tasks such as question answering and captioning.

- Open-source deployment: model weights and code are provided for running locally or in the cloud.

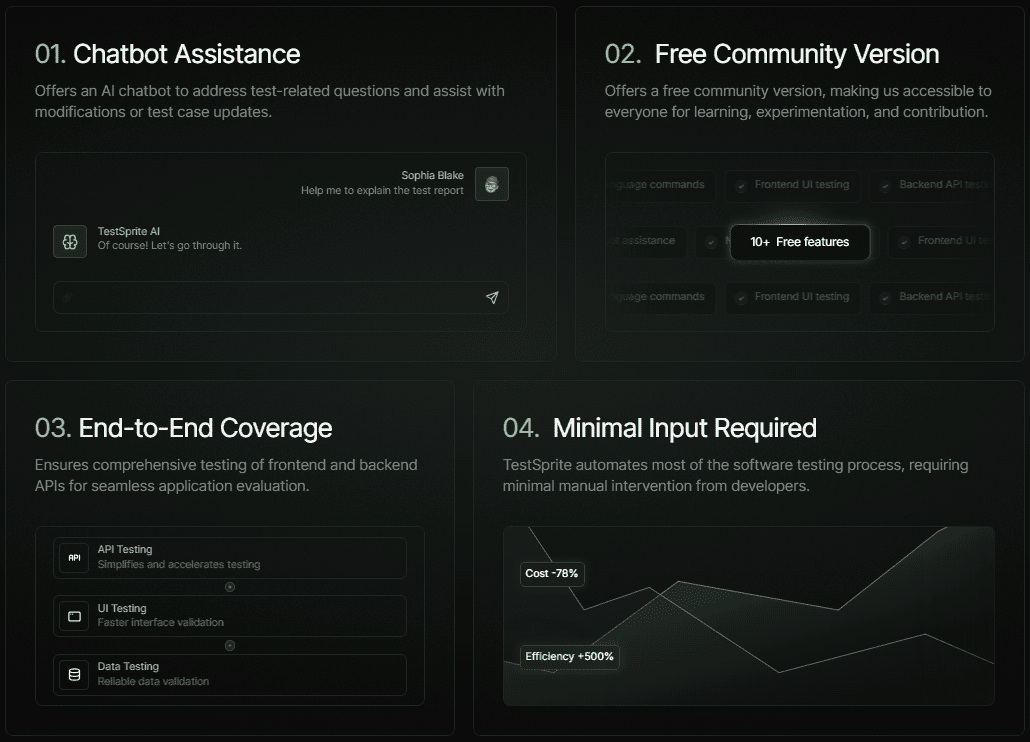

- Evaluation tools: includes the DREAM-1K dataset and the AutoDQ evaluation method.

Usage Guide

Tarsier is suitable for users with a technical background, such as developers or researchers. Detailed installation and usage instructions are provided below.

Installation process

- Preparing the environment

Requires Python 3.9 or later. A virtual environment is recommended:

conda create -n tarsier python=3.9

conda activate tarsier

- Clone the repository

Download the Tarsier project code:

git clone https://github.com/bytedance/tarsier.git

cd tarsier

git checkout tarsier2

- Install dependencies

Run the installation script:

bash setup.sh

This will install all the necessary libraries, such as PyTorch and Hugging Face's tools.

- GPU support (optional)

If you have an NVIDIA GPU, install PyTorch with CUDA:

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu118

- Download the model

Download models from Hugging Face, e.g. Tarsier2-7B:

huggingface-cli download omni-research/Tarsier2-7b

Other models, such as Tarsier-34b and Tarsier2-Recap-7b, are also available from the official links.

- Verify the installation

Run the quick test script:

python3 -m tasks.inference_quick_start --model_name_or_path path/to/Tarsier2-7b --input_path assets/videos/coffee.gif

The output should be a description of the video, such as "A man picks up a coffee cup with heart-shaped foam and takes a sip".
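The quick-start test confirms that the whole pipeline works; if it fails, it can help to check the prerequisites separately. The sketch below checks the Python 3.9 requirement and whether PyTorch is installed and can see a CUDA GPU. check_environment is a hypothetical helper, not part of the Tarsier repository.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 9)) -> dict:
    """Report whether the interpreter meets the minimum Python version and
    whether PyTorch (and CUDA) is available, without failing if torch is absent.
    Hypothetical helper, not part of the Tarsier code."""
    report = {
        "python_ok": sys.version_info >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check also runs without PyTorch
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If cuda_available is False on a machine with an NVIDIA GPU, the CPU-only PyTorch build was probably installed; the CUDA install command from the GPU step replaces it.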

Main Functions

Generate Video Description

- Steps

- Prepare a video file (supports formats such as MP4, GIF, etc.).

- Run command:

python3 -m tasks.inference_quick_start --model_name_or_path path/to/Tarsier2-7b --instruction "Describe the video in detail." --input_path your/video.mp4

- The description is printed to the terminal, covering the actions and scenes in the video.

- Notes

- Videos that are too long may require more memory, so it is recommended to test with a short video first.

- Parameters such as the frame rate can be adjusted in configs/tarser2_default_config.yaml.
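To describe several videos in one go, the quick-start command can be wrapped in a short script. This sketch reuses only the flags shown above (--model_name_or_path, --instruction, --input_path); the helper names, the folder layout, and the list of extensions are assumptions. Run it from the root of the cloned tarsier repository so that tasks.inference_quick_start can be found, and note that it simply collects whatever the script prints.

```python
import subprocess
import sys
from pathlib import Path

# Extensions to pick up (assumption); adjust to what your FFmpeg build decodes.
VIDEO_EXTENSIONS = {".mp4", ".gif", ".avi"}


def build_command(model_path: str, video_path: str,
                  instruction: str = "Describe the video in detail.") -> list[str]:
    """Build the quick-start command for one video, using the flags from the guide."""
    return [
        sys.executable, "-m", "tasks.inference_quick_start",
        "--model_name_or_path", model_path,
        "--instruction", instruction,
        "--input_path", video_path,
    ]


def describe_folder(model_path: str, folder: str) -> dict[str, str]:
    """Run the quick-start script on every video file in a folder (hypothetical helper)."""
    results = {}
    for video in sorted(Path(folder).iterdir()):
        if not video.is_file() or video.suffix.lower() not in VIDEO_EXTENSIONS:
            continue
        completed = subprocess.run(
            build_command(model_path, str(video)),
            capture_output=True, text=True, check=True,
        )
        # Keep the script's printed output as-is; it may include log lines.
        results[video.name] = completed.stdout.strip()
    return results


if __name__ == "__main__":
    # Usage: python describe_folder.py path/to/Tarsier2-7b your/videos
    for name, text in describe_folder(sys.argv[1], sys.argv[2]).items():
        print(f"{name}: {text}")
```

Each video runs in a fresh process, so the model is reloaded every time; this is slow but keeps memory use bounded, in line with the advice above to start with short clips.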

Video Q&A

- Steps

- Pass your question as the instruction, together with the video:

python3 -m tasks.inference_quick_start --model_name_or_path path/to/Tarsier2-7b --instruction "What is the person in the video doing?" --input_path your/video.mp4

- The model answers directly, e.g. "He's drinking coffee".

- Notes

- Questions should be specific and avoid ambiguity.

- Chinese and other languages are supported; Chinese works best.

Zero-shot caption generation

- Steps

- Modify the configuration file to enable caption mode (set task: caption in configs/tarser2_default_config.yaml).

- Run:

python3 -m tasks.inference_quick_start --model_name_or_path path/to/Tarsier2-7b --config configs/tarser2_default_config.yaml --input_path your/video.mp4

- The output is a short caption, such as "Drinking coffee alone".
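If you prefer to switch modes from a script rather than by hand, the configuration edit described above can be done with the standard library. This is a plain-text sketch that assumes task is a top-level key of configs/tarser2_default_config.yaml, as the step above implies; check the actual file before relying on it. A YAML library would be more robust for anything beyond a single key.

```python
import re
from pathlib import Path


def set_top_level_key(yaml_text: str, key: str, value: str) -> str:
    """Set a top-level 'key: value' line in YAML text, appending it if missing.

    Only unindented keys are matched, so nested keys with the same name
    are left untouched. Hypothetical helper for illustration.
    """
    pattern = re.compile(rf"^{re.escape(key)}:.*$", re.MULTILINE)
    replacement = f"{key}: {value}"
    if pattern.search(yaml_text):
        # A function replacement avoids backslash escapes in value being interpreted.
        return pattern.sub(lambda _match: replacement, yaml_text, count=1)
    separator = "" if not yaml_text or yaml_text.endswith("\n") else "\n"
    return f"{yaml_text}{separator}{replacement}\n"


def enable_caption_mode(config_path: str) -> None:
    """Rewrite the config file in place with task: caption (assumed key name)."""
    path = Path(config_path)
    path.write_text(set_top_level_key(path.read_text(), "task", "caption"))


if __name__ == "__main__":
    enable_caption_mode("configs/tarser2_default_config.yaml")
```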

Local service deployment

- Steps

- Install vLLM (recommended version 0.6.6):

pip install vllm==0.6.6

- Start the service:

python -m vllm.entrypoints.openai.api_server --model path/to/Tarsier2-7b

- Call the API:

curl http://localhost:8000/v1/completions -H "Content-Type: application/json" -d '{"prompt": "Describe this video", "video_path": "your/video.mp4"}'

- Advantages

- Videos can be processed in batches.

- Easy to integrate into other systems.
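The curl call above posts to the plain completions endpoint. vLLM's OpenAI-compatible server normally accepts images and videos through the chat completions endpoint instead, as content parts inside a message. The sketch below builds such a request with only the standard library and sends one request per video for batch use. It assumes that your vLLM version accepts video_url content parts for this model and that each video is reachable by URL from the server; the helper names and example URLs are placeholders.

```python
import json
import urllib.request

API_URL = "http://localhost:8000/v1/chat/completions"  # vLLM's default host and port


def build_chat_payload(model: str, video_url: str, prompt: str) -> dict:
    """Build an OpenAI-style chat request with one video part and one text part.

    Assumes the server accepts 'video_url' content parts for this model.
    """
    return {
        "model": model,  # vLLM serves the model under its --model path by default
        "messages": [{
            "role": "user",
            "content": [
                {"type": "video_url", "video_url": {"url": video_url}},
                {"type": "text", "text": prompt},
            ],
        }],
        "max_tokens": 512,
    }


def describe(model: str, video_url: str, prompt: str = "Describe this video.") -> str:
    """POST the request and return the text of the first choice."""
    body = json.dumps(build_chat_payload(model, video_url, prompt)).encode("utf-8")
    request = urllib.request.Request(
        API_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Batch use: one request per video (placeholder URLs).
    for url in ["https://example.com/video1.mp4", "https://example.com/video2.mp4"]:
        print(describe("path/to/Tarsier2-7b", url))
```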

Featured Functions

DREAM-1K Assessment

- Steps

- Download the DREAM-1K dataset:

wget https://tarsier-vlm.github.io/DREAM-1K.zip

unzip DREAM-1K.zip

- Run the evaluation:

bash scripts/run_inference_benchmark.sh path/to/Tarsier2-7b output_dir dream

- The output includes metrics such as F1 scores that show the quality of the description.
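F1 combines precision and recall into a single number by taking their harmonic mean, so a description only scores well if it is both accurate (high precision) and complete (high recall). A minimal sketch of the formula; the function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if not (0.0 <= precision <= 1.0 and 0.0 <= recall <= 1.0):
        raise ValueError("precision and recall must lie in [0, 1]")
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # A precise but incomplete description is penalized: P=0.9, R=0.3 gives F1=0.45.
    print(round(f1_score(0.9, 0.3), 2))
```

Unlike the arithmetic mean (0.6 here), the harmonic mean is pulled toward the weaker of the two scores.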

AutoDQ Evaluation

- Steps

- Make sure the ChatGPT dependencies are installed (an Azure OpenAI configuration is required).

- Run the evaluation script:

python evaluation/metrics/evaluate_dream_gpt.py --pred_dir output_dir/dream_predictions

- Outputs an automatic evaluation score that measures description accuracy.
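AutoDQ, as described by the Tarsier authors, scores a description by extracting the events in both the generated and the reference description and then asking an LLM which events are entailed by the other text. Precision is the share of predicted events supported by the reference; recall is the share of reference events covered by the prediction. The sketch below mimics only that bookkeeping: it assumes the event lists are already extracted and uses a deliberately naive stand-in judge (case-insensitive exact match) in place of the ChatGPT call. None of these names come from the Tarsier codebase.

```python
from typing import Callable


def naive_entails(other_events: list[str], event: str) -> bool:
    """Stand-in judge: an event counts as supported if it appears verbatim
    (case-insensitive) among the other side's events. AutoDQ uses an LLM here."""
    normalized = {e.strip().lower() for e in other_events}
    return event.strip().lower() in normalized


def event_precision_recall(
    predicted: list[str],
    reference: list[str],
    entails: Callable[[list[str], str], bool] = naive_entails,
) -> tuple[float, float]:
    """Precision: share of predicted events supported by the reference.
    Recall: share of reference events supported by the prediction."""
    precision = (
        sum(entails(reference, e) for e in predicted) / len(predicted) if predicted else 0.0
    )
    recall = (
        sum(entails(predicted, e) for e in reference) / len(reference) if reference else 0.0
    )
    return precision, recall


if __name__ == "__main__":
    pred = ["a man picks up a cup", "he drinks coffee", "he reads a newspaper"]
    ref = ["A man picks up a cup", "He drinks coffee"]
    p, r = event_precision_recall(pred, ref)
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Passing a different entails function is where a real LLM-based judge would plug in.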

Frequently Asked Questions

- Installation failure: check the Python version and your network connection, and upgrade pip (pip install -U pip).

- Slow model loading: make sure you have enough disk space; at least 50GB is recommended.

- No GPU output: run nvidia-smi to check that CUDA is working properly.
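For the disk-space item above, a quick check with the standard library. The 50 GB threshold is the recommendation from the FAQ; checking the current directory is an assumption, so point it at wherever the models are downloaded (for example the Hugging Face cache). The helper names are illustrative.

```python
import shutil

RECOMMENDED_FREE_GB = 50  # recommendation from the FAQ above


def free_space_gb(path: str = ".") -> float:
    """Free space on the filesystem containing path, in decimal gigabytes."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path: str = ".", required_gb: float = RECOMMENDED_FREE_GB) -> bool:
    """True if the filesystem holding path has at least required_gb free."""
    return free_space_gb(path) >= required_gb


if __name__ == "__main__":
    gb = free_space_gb()
    status = "OK" if gb >= RECOMMENDED_FREE_GB else "below the recommended 50 GB"
    print(f"{gb:.1f} GB free: {status}")
```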

Online Demo

- Visit the Tarsier2-7B online demo and upload a video to try the description and Q&A functions.

With these steps, you can use Tarsier for a range of video tasks, from generating descriptions to deploying a service.

Application Scenarios

- Video Content Organization

Media workers can use Tarsier to generate video summaries and quickly organize their material.

- Educational Video Assistance

Teachers can generate subtitles or quizzes for course videos to support teaching and learning.

- Short Video Analysis

Marketers can analyze the content of short videos, such as those on TikTok, and extract key messages for promotion.

QA

- What video formats are supported?

MP4, GIF, AVI, and other formats are supported, as long as FFmpeg can decode them.

- What are the hardware requirements?

At least 16GB of RAM and 4GB of video memory; an NVIDIA GPU (e.g. an RTX 3090) is recommended.

- Can it be used commercially?

Yes. Tarsier is released under the Apache 2.0 license, which permits commercial use subject to its terms.
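For a rough sense of how much GPU memory the model weights need: the weights alone take the parameter count times the bytes per parameter. At a nominal 7 billion parameters in 16-bit precision (2 bytes each), that is about 14 GB, before activations and the video's visual tokens are added. The sketch below does this arithmetic. The 7e9 figure is the nominal size implied by the model name rather than an exact parameter count, and the int8/int4 rows ignore quantization overhead.

```python
# Nominal storage size per parameter; int4 packs two weights per byte.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory for model weights only, in decimal GB.

    Excludes activations, the KV cache, and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # 7e9 is the nominal size implied by "7B", not an exact count (assumption).
    for dtype in ("bf16", "int8", "int4"):
        print(f"7B weights in {dtype}: {weight_memory_gb(7e9, dtype):.1f} GB")
```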

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
