SVLS: An Enhanced SadTalker for Generating Digital Humans from Portrait Video

General Introduction

SadTalker-Video-Lip-Sync is a video lip-sync tool built on SadTalker. It generates lip movements driven by speech audio and applies configurable facial-region enhancement to improve the clarity of the generated lips. It also uses the DAIN frame interpolation algorithm to insert intermediate frames into the generated video, which makes lip transitions smoother, more realistic and more natural. With a few simple command-line operations, users can quickly generate high-quality lip-synced videos for a wide range of video production and editing needs.

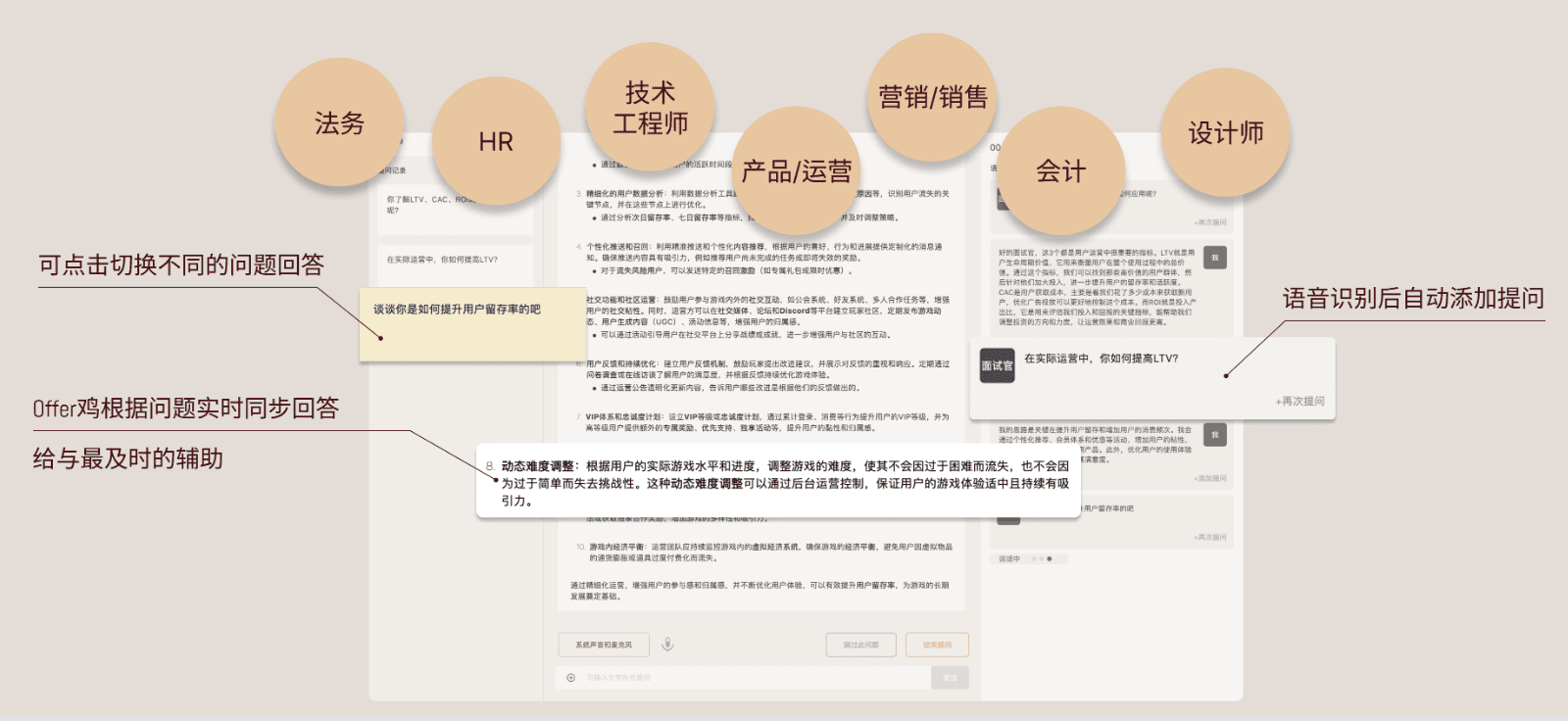

Figure: SadTalker original vs. SadTalker Enhanced

Feature List

- Speech-driven lip generation: Drives the lip movements in a video from an audio file.

- Facial region enhancement: Configurable image enhancement of the lip region or the full face to improve video clarity.

- DAIN frame interpolation: Uses the DAIN deep-learning model to insert frames into the video, improving smoothness.

- Multiple enhancement options: Supports three modes: no enhancement, lip enhancement and full-face enhancement.

- Pre-trained models: Provides pre-trained models so users can get started quickly.

- Simple command-line operation: Easy to configure and run through command-line parameters.

Usage Guide

Environment Setup

- Install the necessary dependencies:

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

conda install ffmpeg

pip install -r requirements.txt

- To use the DAIN model for frame interpolation, also install PaddlePaddle:

python -m pip install paddlepaddle-gpu==2.3.2.post112 -f https://www.paddlepaddle.org.cn/whl/linux/mkl/avx/stable.html
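Before running inference, it can help to confirm that the packages installed above are actually visible to Python. The following is a minimal sketch and is not part of the project. The function name `check_environment` is hypothetical. The check covers two things: that `torch`, `torchvision`, `torchaudio` and `paddle` (the module provided by `paddlepaddle-gpu`) are importable, and that `ffmpeg` is on `PATH`. It does not verify versions or CUDA support.

```python
import importlib.util
import shutil


def check_environment() -> dict:
    """Report which prerequisites from the setup steps look available.

    Only importability and PATH lookup are checked; versions and CUDA
    support are not verified.
    """
    return {
        # Installed by the pip step (PyTorch 1.12.1 with CUDA 11.3 wheels)
        "torch": importlib.util.find_spec("torch") is not None,
        "torchvision": importlib.util.find_spec("torchvision") is not None,
        "torchaudio": importlib.util.find_spec("torchaudio") is not None,
        # Installed via conda; must be callable as an executable
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # paddlepaddle-gpu provides the "paddle" module; only needed for DAIN
        "paddle": importlib.util.find_spec("paddle") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:12s} {'OK' if ok else 'MISSING'}")
```

A missing `paddle` entry only matters if you plan to pass `--use_DAIN`.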

Project structure

- checkpoints: stores pre-trained models
- dian_output: stores the output of DAIN frame interpolation
- examples: sample audio and video files
- results: generated results
- src: source code
- sync_show: demos of the synthesized results
- third_part: third-party libraries
- inference.py: inference script
- README.md: project documentation
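A quick check of this layout can catch a missing model download before inference fails. This is a hypothetical helper: `check_layout` and `REQUIRED_ENTRIES` are illustrative names, not project code. It assumes that `dian_output` and `results` are output folders that may be created at run time, so it treats them as optional. It also treats any entry under `checkpoints` as a sign that the models were downloaded, without checking specific filenames.

```python
from pathlib import Path

# Entries from the project structure expected in a fresh checkout.
# dian_output/ and results/ are treated as optional output folders (assumption).
REQUIRED_ENTRIES = ["checkpoints", "examples", "src", "third_part", "inference.py"]


def check_layout(repo_root):
    """Return (missing_entries, checkpoints_non_empty) for the given repo root."""
    root = Path(repo_root)
    missing = [name for name in REQUIRED_ENTRIES if not (root / name).exists()]
    ckpt_dir = root / "checkpoints"
    # Any file or folder inside checkpoints/ counts as "models downloaded";
    # specific model filenames are not checked.
    has_models = ckpt_dir.is_dir() and any(ckpt_dir.iterdir())
    return missing, has_models


if __name__ == "__main__":
    missing, has_models = check_layout(".")
    if missing:
        print("Missing:", ", ".join(missing))
    if not has_models:
        print("checkpoints/ is empty; download the pre-trained models first")
```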

Model Inference

Use the following command for model inference:

python inference.py --driven_audio <audio.wav> --source_video <video.mp4> --enhancer <none, lip, face> --use_DAIN --time_step 0.5

- --driven_audio: input audio file
- --source_video: input video file
- --enhancer: enhancement mode (none, lip or face)
- --use_DAIN: enable DAIN frame interpolation
- --time_step: interpolation time step (default 0.5, i.e. 25 fps -> 50 fps)
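When scripting several runs, you can assemble the command programmatically from the documented flags. The sketch below uses only the five flags listed above. `build_inference_cmd` is a hypothetical helper. Passing `--time_step` only together with `--use_DAIN` is an assumption, based on its description as the interpolation rate.

```python
import sys

VALID_ENHANCERS = ("none", "lip", "face")


def build_inference_cmd(audio, video, enhancer="none", use_dain=False, time_step=0.5):
    """Assemble an inference.py command line from the documented flags."""
    if enhancer not in VALID_ENHANCERS:
        raise ValueError(f"enhancer must be one of {VALID_ENHANCERS}, got {enhancer!r}")
    cmd = [sys.executable, "inference.py",
           "--driven_audio", audio,
           "--source_video", video,
           "--enhancer", enhancer]
    if use_dain:
        # --use_DAIN is a switch with no value; --time_step is only added when
        # DAIN runs (assumption: it has no effect otherwise)
        cmd += ["--use_DAIN", "--time_step", str(time_step)]
    return cmd
```

For example, `build_inference_cmd("examples/audio.wav", "examples/video.mp4", enhancer="lip", use_dain=True)` returns a list. The paths here are placeholders. You can pass that list to `subprocess.run(cmd, check=True)` from the repository root.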

Synthesis Results

The generated videos are shown in the ./sync_show directory:

- original.mp4: original video
- sync_none.mp4: synthesis without any enhancement
- none_dain_50fps.mp4: DAIN interpolation only, 25 fps to 50 fps
- lip_dain_50fps.mp4: lip-region enhancement plus DAIN, 25 fps to 50 fps
- face_dain_50fps.mp4: full-face enhancement plus DAIN, 25 fps to 50 fps
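The 25 fps to 50 fps figures follow from the default `time_step` of 0.5. This sketch assumes that DAIN emits one frame every `time_step` of the original frame spacing. That interpretation is inferred from the 0.5 -> 50 fps example and is not confirmed by the project. Under it, the output rate is the source rate divided by `time_step`. The function name `interpolated_fps` and the restriction of `time_step` to (0, 1] are choices made for this sketch.

```python
def interpolated_fps(source_fps: float, time_step: float = 0.5) -> float:
    """Estimate the output frame rate after DAIN interpolation.

    Assumes one output frame every `time_step` of the original frame
    spacing, so time_step=0.5 doubles the rate (25 fps -> 50 fps).
    """
    if not 0 < time_step <= 1:
        raise ValueError("time_step must be in (0, 1]")
    return source_fps / time_step
```

With the default step, `interpolated_fps(25)` gives 50.0, matching the `*_dain_50fps.mp4` demos. Under the same assumption, a step of 0.25 would give 100.0.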

Pre-trained models

Download links for the pre-trained models:

- Baidu Netdisk: link (extraction code: klfv)

- Google Drive: link

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
