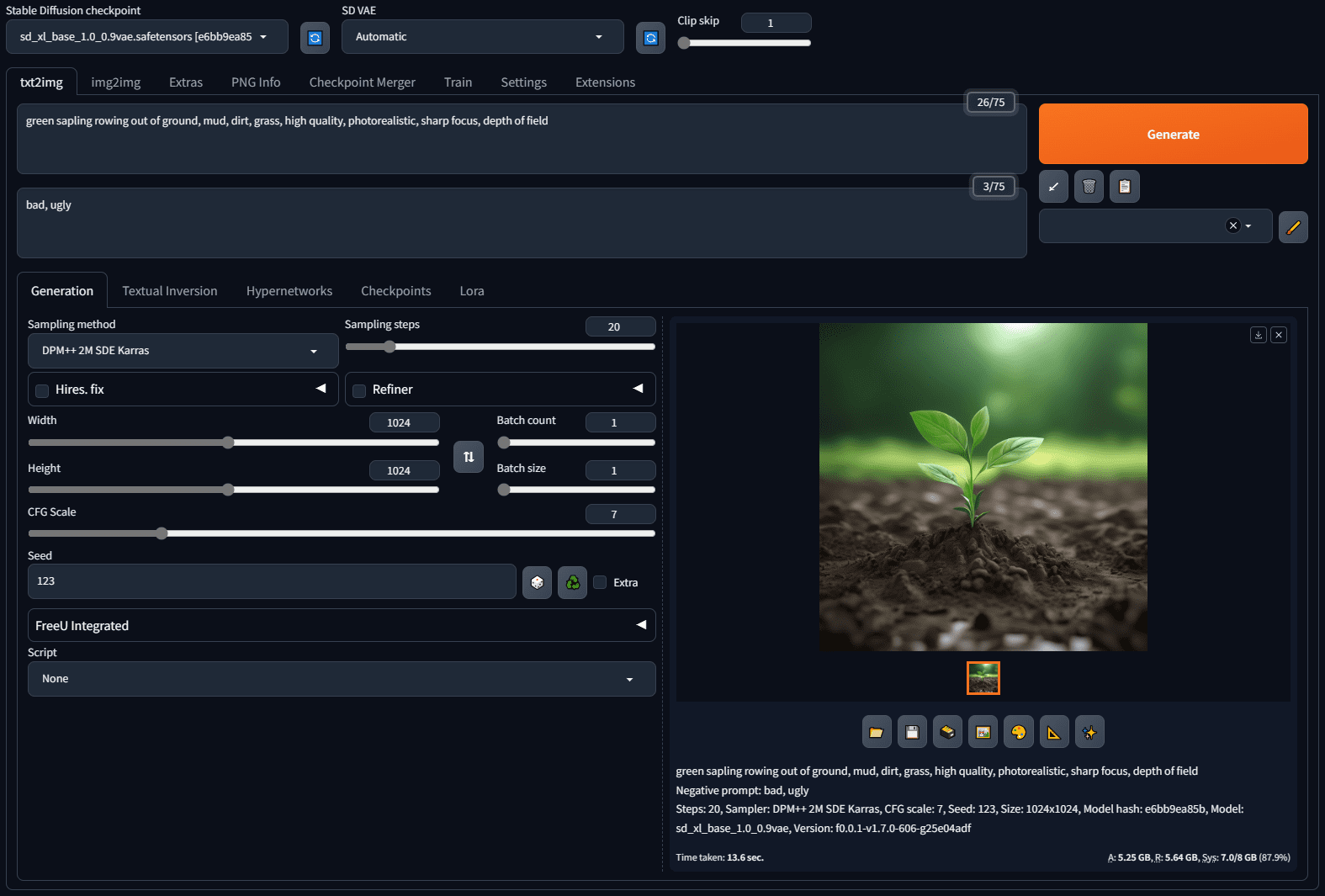

Stable Diffusion WebUI Forge: Optimized and Accelerated Image Generation Models

General Introduction

Stable Diffusion WebUI Forge is a platform built on top of the Stable Diffusion WebUI (the Gradio-based version) that aims to simplify development, optimize resource management, and speed up inference. It provides a number of accelerations and optimizations not found in the original WebUI. Features supported by Forge include GPU inference acceleration, optimized resource management, a new way to integrate UNet patches, easier extension development, and automated resource management. In addition, the developers provide sample ControlNet extensions to help users develop their own features.

Function List

GPU Inference Acceleration

Resource management optimization

UNet patches: a new approach to integration

Easy to extend and develop

Automated resource management

How to Use

Visit the GitHub repository for the latest code

Install and run according to the documentation

Image Generation with Stable Diffusion WebUI Forge

Developing Custom Extensions with UNet Patches and ControlNet Samples

Compared to the original WebUI (for SDXL inference at 1024px), you can expect the following speedups:

If you use a common GPU with around 8GB of video memory, you can expect inference speed (it/s) to increase by about 30 to 45%, peak GPU memory (as shown in Task Manager) to drop by about 700MB to 1.3GB, maximum diffusion resolution (without running out of memory) to increase by about 2x to 3x, and maximum diffusion batch size (without running out of memory) to increase by about 4x to 6x.

If you use a less powerful GPU with around 6GB of video memory, you can expect inference speed (it/s) to increase by about 60 to 75%, peak GPU memory (as shown in Task Manager) to drop by about 800MB to 1.5GB, maximum diffusion resolution (without running out of memory) to increase by about 3x, and maximum diffusion batch size (without running out of memory) to increase by about 4x.

If you use a high-performance GPU such as the 4090 with 24GB of video memory, you can expect inference speed (it/s) to increase by about 3 to 6%, peak GPU memory (as shown in Task Manager) to drop by about 1GB to 1.4GB, maximum diffusion resolution (without running out of memory) to increase by about 1.6x, and maximum diffusion batch size (without running out of memory) to increase by about 2x.

If you use ControlNet with SDXL, the maximum number of ControlNets you can run (without running out of memory) will increase by about 2x, and SDXL+ControlNet speed will increase by about 30 to 45%.
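To make the percentage ranges above concrete, the following minimal sketch converts them into projected it/s values. The speedup ranges are the ones quoted in this article; the baseline of 2.0 it/s and the function name `projected_speed` are assumptions chosen only for illustration, not measurements.

```python
from typing import Dict, Tuple

# Speedup ranges quoted in the article, stored as fractions (0.30 == 30%).
SPEEDUP_RANGES: Dict[str, Tuple[float, float]] = {
    "8GB": (0.30, 0.45),
    "6GB": (0.60, 0.75),
    "24GB": (0.03, 0.06),
}


def projected_speed(baseline_its: float, gpu_class: str) -> Tuple[float, float]:
    """Return the (low, high) projected it/s for a baseline measured in the original WebUI."""
    low, high = SPEEDUP_RANGES[gpu_class]
    return baseline_its * (1.0 + low), baseline_its * (1.0 + high)


if __name__ == "__main__":
    baseline = 2.0  # hypothetical it/s in the original WebUI, not a measurement
    for gpu_class in SPEEDUP_RANGES:
        low, high = projected_speed(baseline, gpu_class)
        print(f"{gpu_class}: {low:.2f} to {high:.2f} it/s")
```

Actual results depend on the model, resolution, and driver setup, so treat the output as a rough expectation rather than a guarantee.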

Another very important change in Forge is the UNet Patcher. With it, methods such as Self-Attention Guidance, Kohya High Res Fix, FreeU, StyleAlign, and Hypertile can be implemented in about 100 lines of code.

Thanks to the UNet Patcher, many new things are now possible and supported in Forge, including SVD, Z123, masked IP-Adapter, masked ControlNet, PhotoMaker, and more.

No more monkeypatching UNet or conflicting with other extensions!
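The idea behind a patcher can be illustrated with a toy sketch: instead of overwriting methods on a shared model (monkeypatching), each extension clones a lightweight wrapper and registers its own patch, so extensions do not interfere with one another. Everything below (`ToyUNet`, `ToyUNetPatcher`, `add_block_patch`) is hypothetical and written for illustration; it is not Forge's actual API, and the "model" is just arithmetic on a number.

```python
from typing import Callable, List, Optional, Sequence

BlockPatch = Callable[[float, int], float]


class ToyUNet:
    """Stand-in for a real UNet: each 'block' simply adds 1.0 to its input."""

    def __init__(self, num_blocks: int = 3) -> None:
        self.num_blocks = num_blocks

    def forward(self, x: float, block_patches: Sequence[BlockPatch] = ()) -> float:
        for index in range(self.num_blocks):
            x = x + 1.0
            # Apply every registered patch to this block's output, in order.
            for patch in block_patches:
                x = patch(x, index)
        return x


class ToyUNetPatcher:
    """Hypothetical patcher: shares the model, but owns its own patch list."""

    def __init__(self, model: ToyUNet, patches: Optional[List[BlockPatch]] = None) -> None:
        self.model = model
        self.patches: List[BlockPatch] = list(patches or [])

    def clone(self) -> "ToyUNetPatcher":
        # The model is shared (no weight copy); only the patch list is copied.
        return ToyUNetPatcher(self.model, self.patches)

    def add_block_patch(self, patch: BlockPatch) -> None:
        self.patches.append(patch)

    def __call__(self, x: float) -> float:
        return self.model.forward(x, self.patches)


base = ToyUNetPatcher(ToyUNet())

# Extension A doubles the output of the first block only.
ext_a = base.clone()
ext_a.add_block_patch(lambda x, index: x * 2.0 if index == 0 else x)

# Extension B subtracts 0.5 after every block.
ext_b = base.clone()
ext_b.add_block_patch(lambda x, index: x - 0.5)

# Patches can also be stacked by cloning an already patched wrapper.
ext_ab = ext_a.clone()
ext_ab.add_block_patch(lambda x, index: x - 0.5)
```

Because each clone keeps its own patch list, `base` still behaves like the unpatched model after `ext_a` and `ext_b` are created, which is the property that monkeypatching a shared object cannot offer.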

Forge also adds a number of samplers, including DDPM, DDPM Karras, DPM++ 2M Turbo, DPM++ 2M SDE Turbo, LCM Karras, and Euler A Turbo (LCM has been available in the original WebUI since 1.7.0).

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
