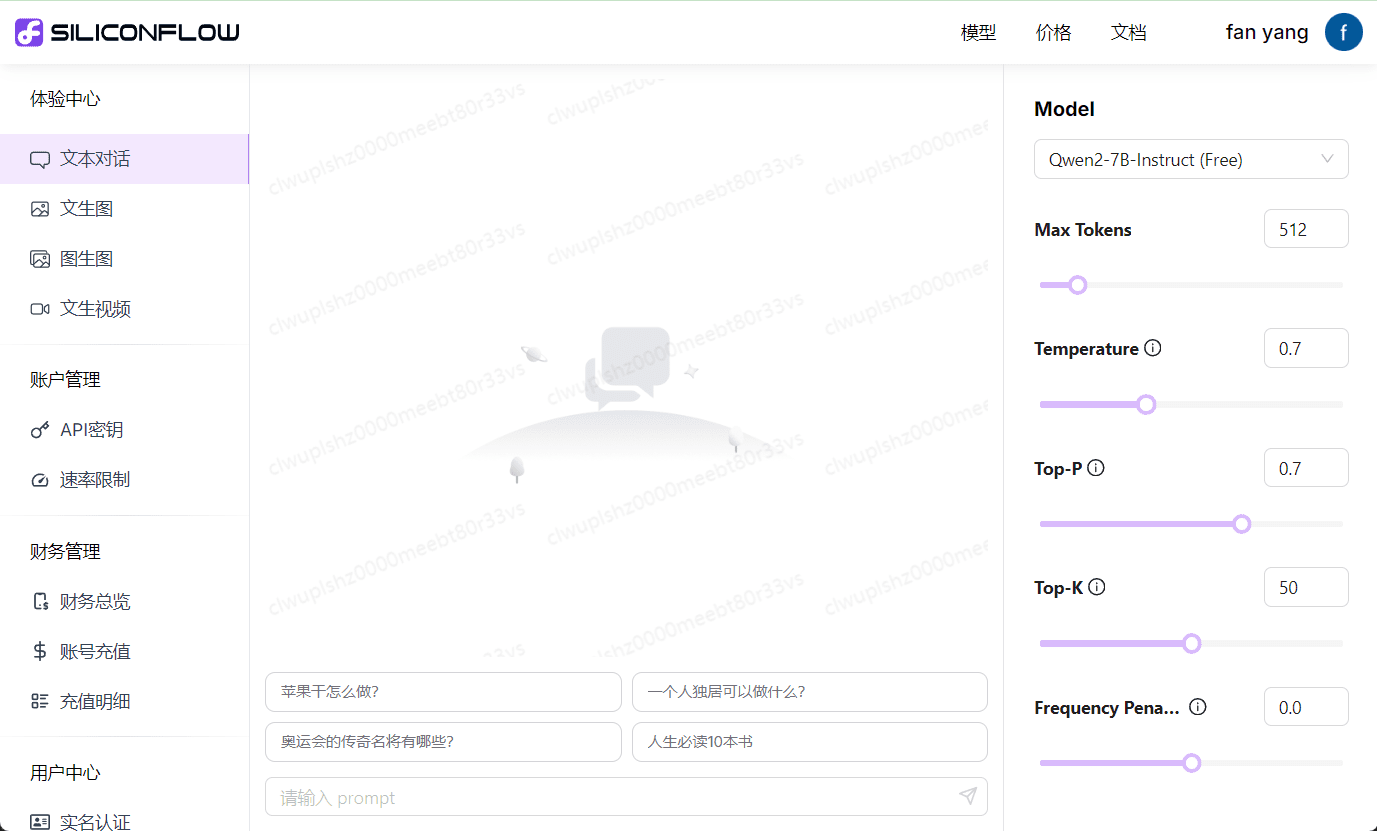

SILICONFLOW (Silicon Flow): accelerating AGI for humanity, with free access to large model APIs

General Introduction

SiliconCloud provides cost-effective GenAI services built on leading open-source base models.

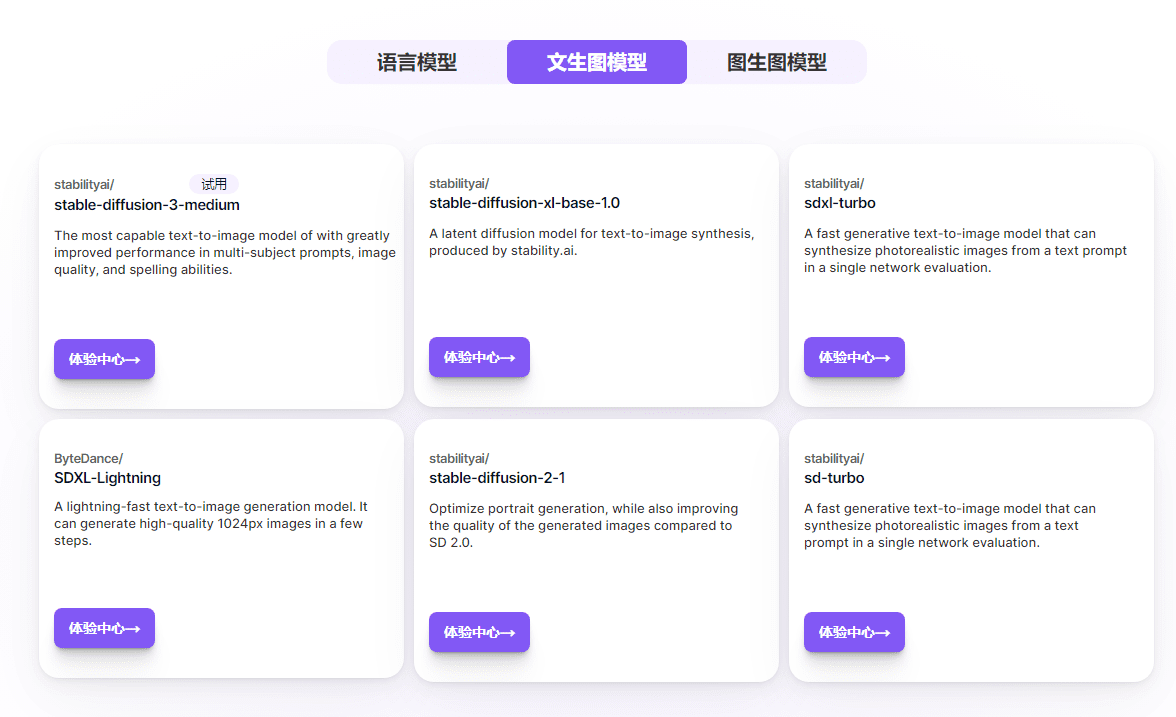

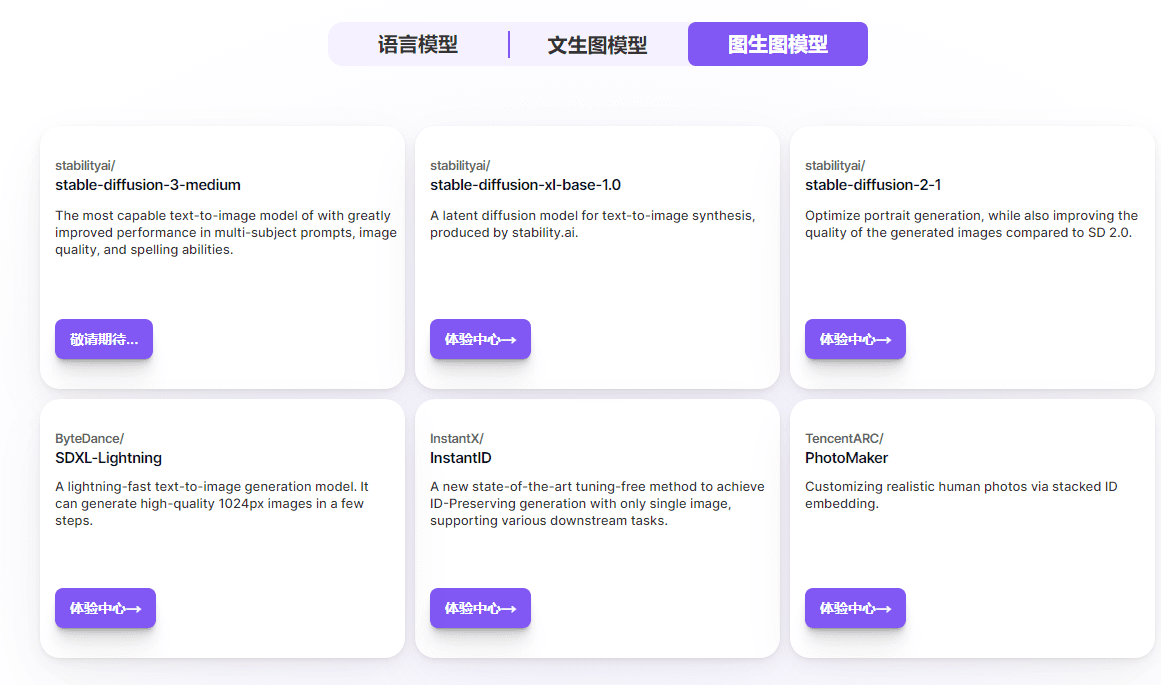

Unlike most large model cloud platforms, which only offer APIs for their own models, SiliconCloud hosts a wide range of open-source large language models and image generation models, including Qwen, DeepSeek, GLM, Yi, Mistral, LLaMA 3, SDXL, and InstantID. Users can freely switch to whichever model best suits each application scenario.

In addition, SiliconCloud offers out-of-the-box inference acceleration for large models, making your GenAI applications faster and more responsive.

For developers, SiliconCloud provides one-click access to top open-source models. This lets developers build applications faster and more smoothly while significantly reducing the cost of trial and error.

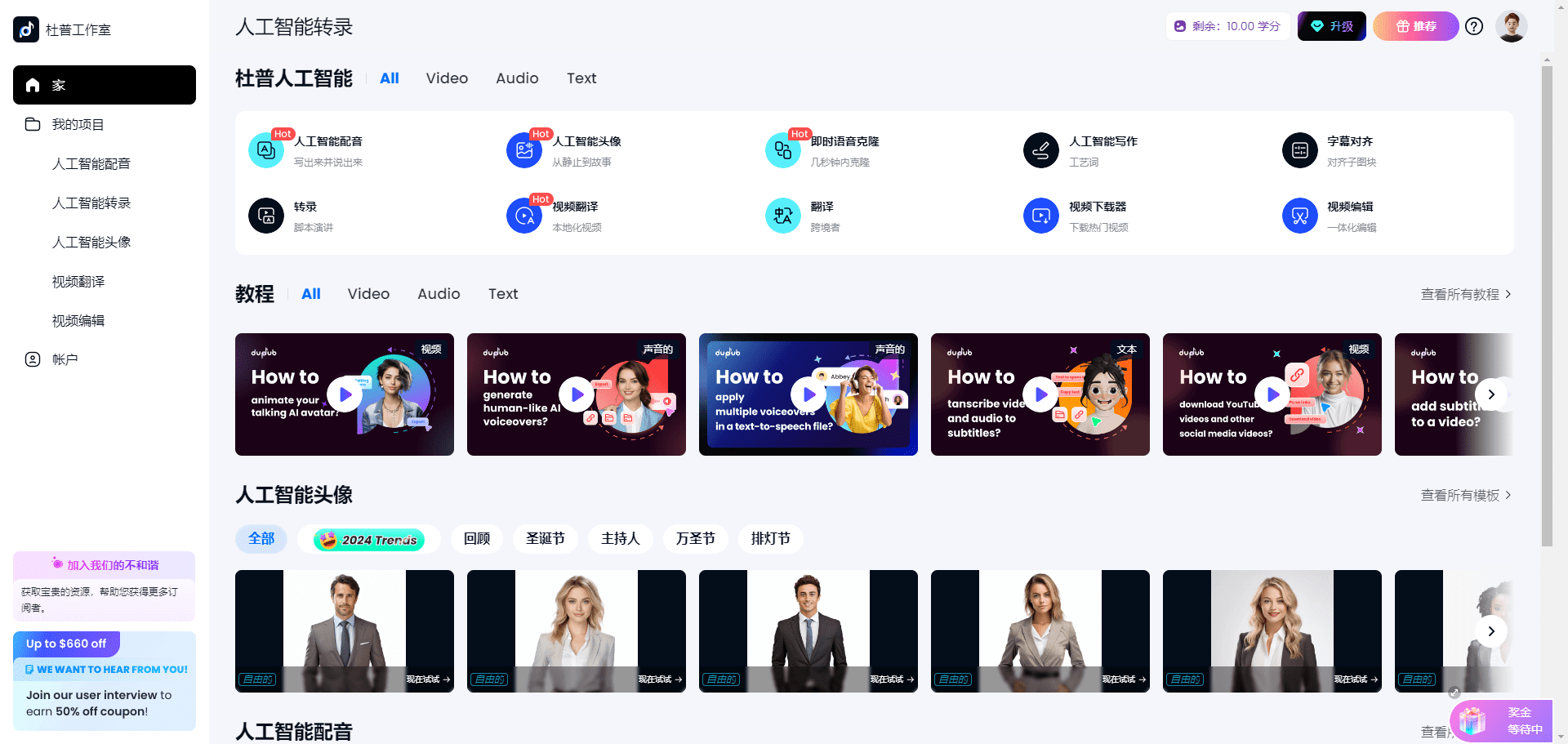

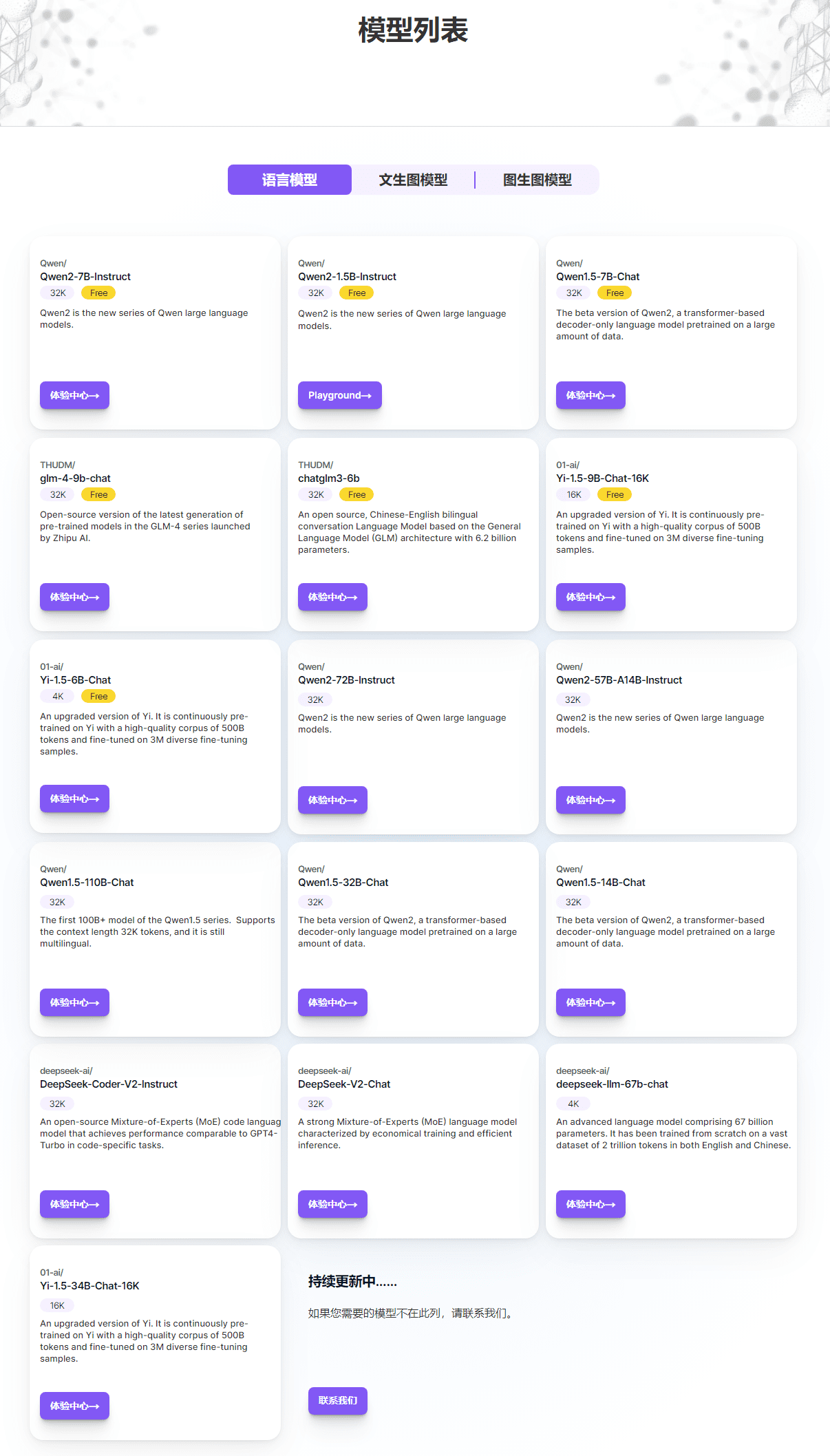

On the SiliconCloud Models page, you can view the language models, text-to-image models, and image-to-image models currently supported, and click "Experience Center" to try a model directly.

Model List

Free Model List

| Model | Context window |
|---|---|
| Qwen/Qwen2-7B-Instruct | 32K |
| Qwen/Qwen2-1.5B-Instruct | 32K |
| Qwen/Qwen1.5-7B-Chat | 32K |
| thudm/glm-4-9b-chat | 32K |
| THUDM/chatglm3-6b | 32K |
| 01-ai/Yi-1.5-9B-Chat-16K | 16K |
| 01-ai/Yi-1.5-6B-Chat | 4K |
| google/gemma-2-9b-it | 8K |
| internlm/internlm2_5-7b-chat | 32K |
| meta-llama/Meta-Llama-3-8B-Instruct | 8K |
| meta-llama/Meta-Llama-3.1-8B-Instruct | 8K |
| mistralai/Mistral-7B-Instruct-v0.2 | 32K |
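Since switching models is free, it can help to keep the table above in code when choosing a model for a task. The sketch below copies the free list as shown (context windows in thousands of tokens, exactly as listed) and filters it by a minimum window; the names `FREE_MODELS` and `models_with_context` are hypothetical, and the list may change on the platform.

```python
# Free model list copied from the table above; values are the listed
# context windows in "K" (thousands of tokens), kept in table order.
FREE_MODELS: dict[str, int] = {
    "Qwen/Qwen2-7B-Instruct": 32,
    "Qwen/Qwen2-1.5B-Instruct": 32,
    "Qwen/Qwen1.5-7B-Chat": 32,
    "thudm/glm-4-9b-chat": 32,
    "THUDM/chatglm3-6b": 32,
    "01-ai/Yi-1.5-9B-Chat-16K": 16,
    "01-ai/Yi-1.5-6B-Chat": 4,
    "google/gemma-2-9b-it": 8,
    "internlm/internlm2_5-7b-chat": 32,
    "meta-llama/Meta-Llama-3-8B-Instruct": 8,
    "meta-llama/Meta-Llama-3.1-8B-Instruct": 8,
    "mistralai/Mistral-7B-Instruct-v0.2": 32,
}


def models_with_context(min_k: int) -> list[str]:
    """Return free models whose listed context window is at least min_k (in K)."""
    return [name for name, window_k in FREE_MODELS.items() if window_k >= min_k]
```

For example, `models_with_context(16)` drops the 4K and 8K models, which matters when translating long documents in one request.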

Using the SiliconCloud API

Base URL: https://api.siliconflow.cn/v1
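As a rough illustration of using this base URL, the sketch below builds a chat request with only the Python standard library. It assumes the endpoint is `/chat/completions` under the base URL (the address used in the Immersive Translation section), that requests follow the OpenAI-style `model`/`messages` JSON shape with a `Bearer` token, and that replies carry text in `choices[0].message.content`; check the SiliconFlow API reference before relying on any of these. The helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "https://api.siliconflow.cn/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat completions endpoint."""
    payload = {
        "model": model,  # e.g. a name from the free model list
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed bearer-token auth
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the first reply's text (assumed OpenAI-style response)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it easy to inspect the exact URL, headers, and body before spending any quota.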

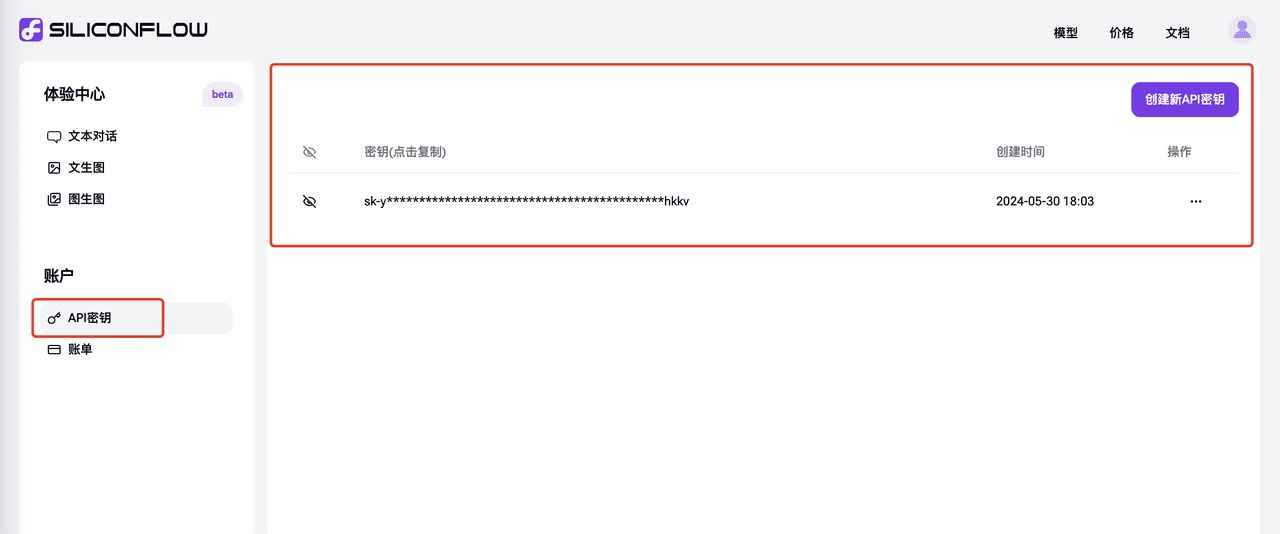

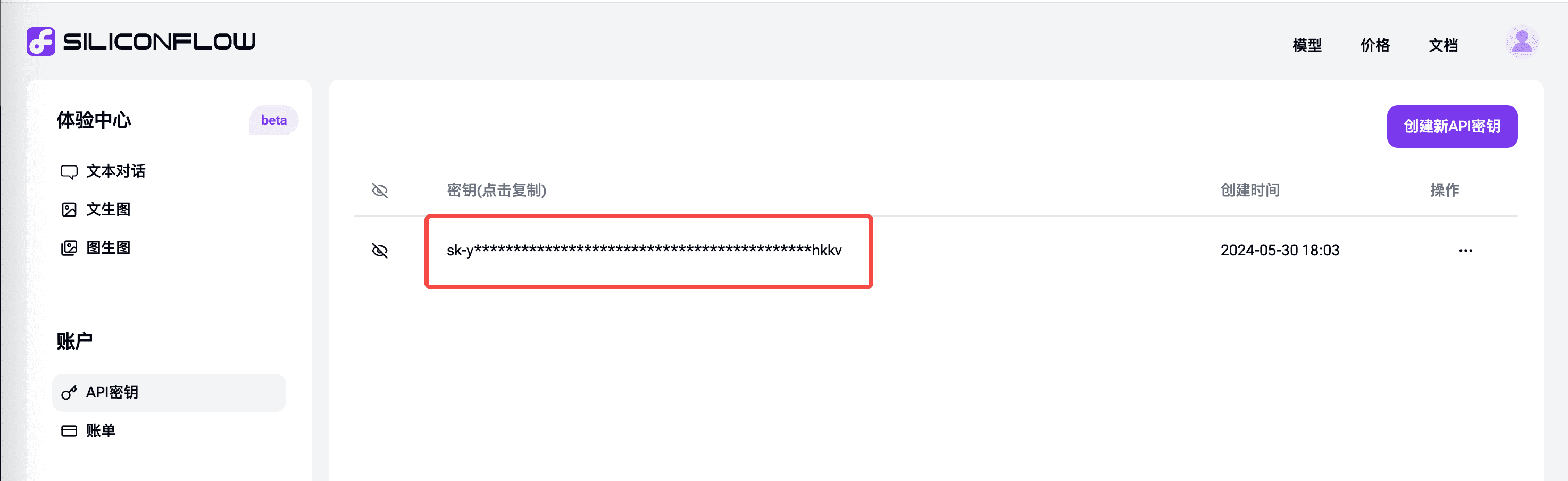

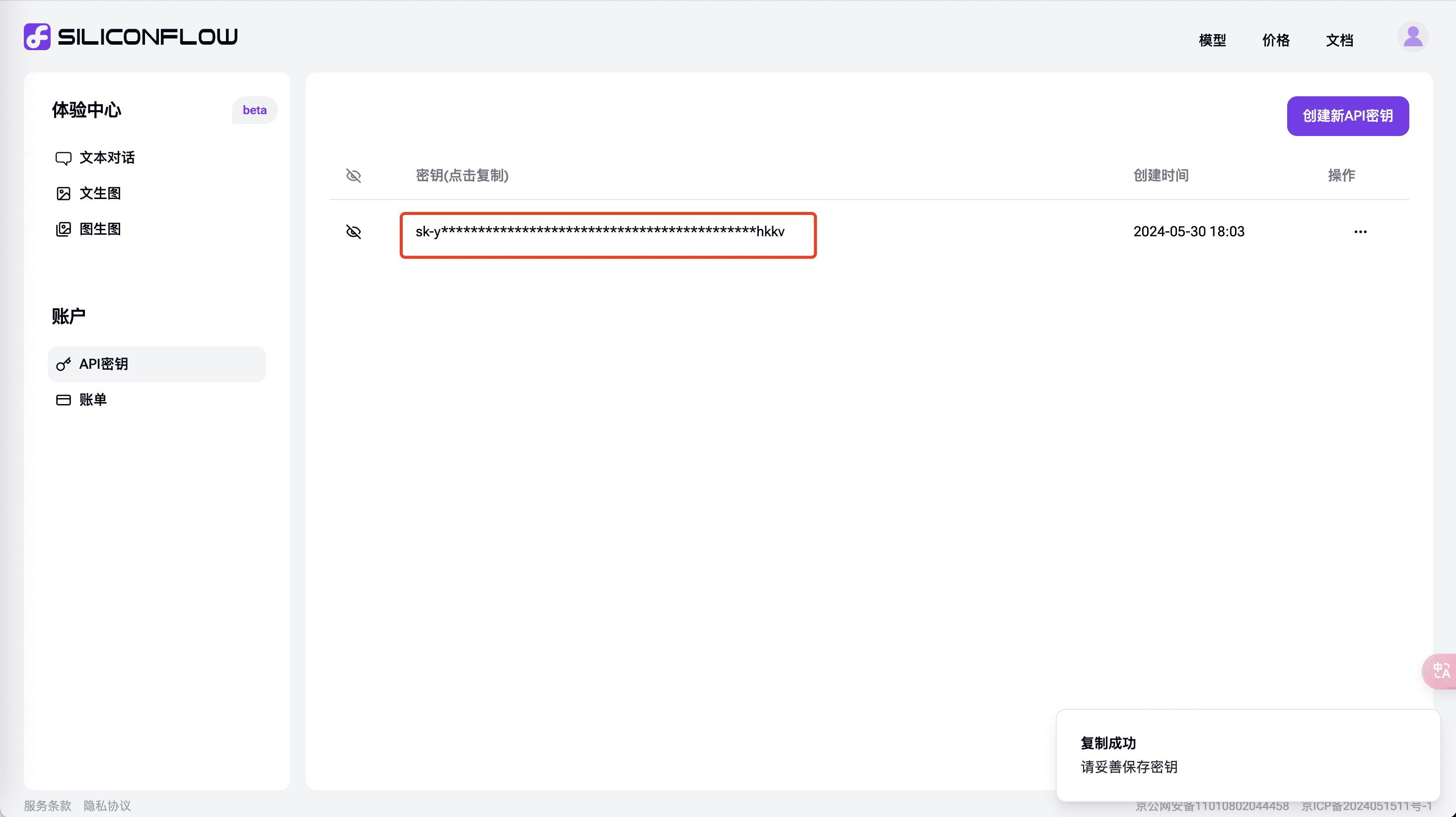

Generate API key

In the "API Keys" tab, click "Create New API Key", then click "Copy" to use the key in your application.
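Once copied, the key is best kept out of source code. A minimal sketch, assuming you store it in an environment variable named `SILICONFLOW_API_KEY` (a hypothetical name; any name works) and that the API expects a `Bearer` authorization header:

```python
import os


def auth_header(env_var: str = "SILICONFLOW_API_KEY") -> dict[str, str]:
    """Read the copied API key from an environment variable and build the auth header."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(f"Set {env_var} to the key copied from the API Keys tab")
    return {"Authorization": f"Bearer {key}"}
```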

Using the SiliconCloud API in Immersive Translation

Use SiliconCloud's API for Fast Cross-Language Translation in Immersive Translation

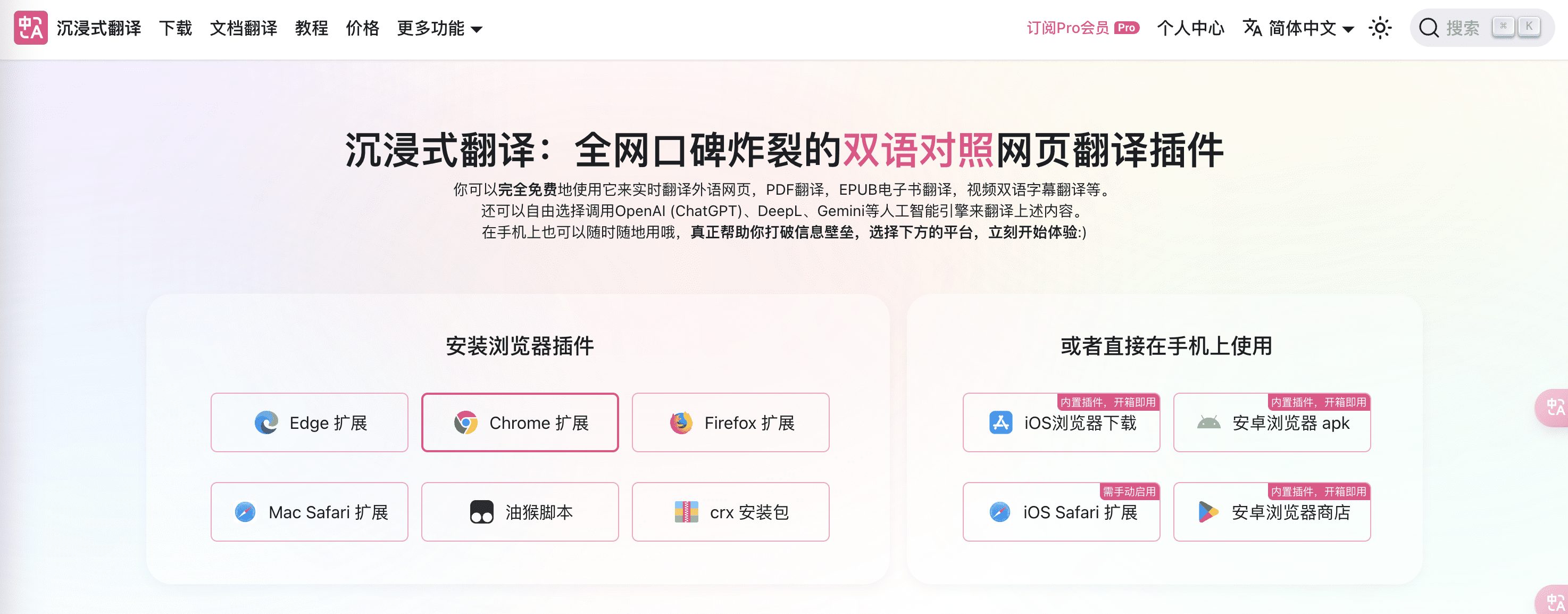

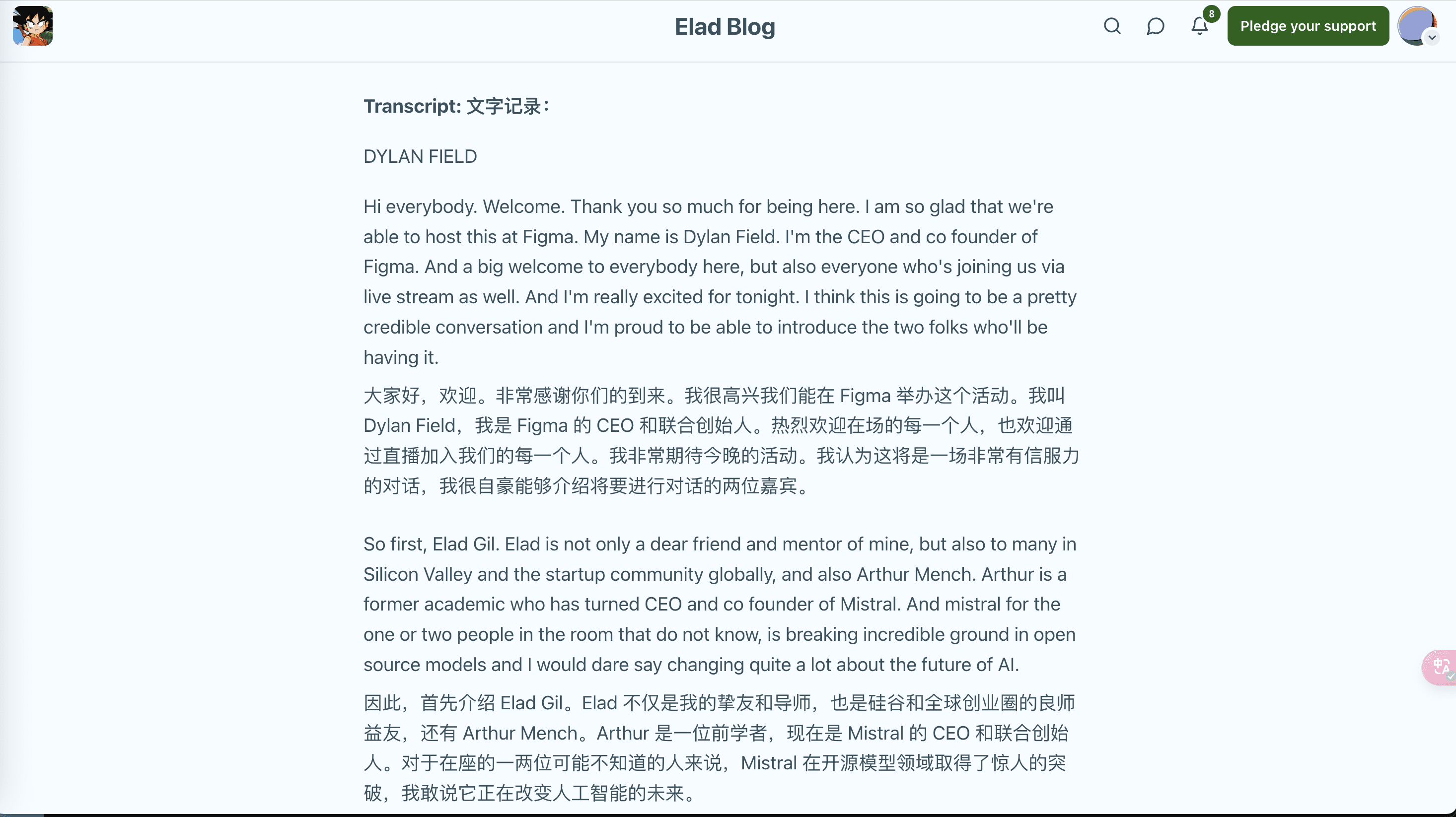

Immersive Translation is a hugely popular bilingual webpage translation plugin. It uses the cross-language understanding of large language models to translate foreign-language content in real time, and it works for webpage reading, PDF translation, EPUB e-book translation, bilingual video subtitles, and other scenarios. It is available as extensions for a variety of browsers and as apps. Since its launch in 2023, this widely acclaimed AI bilingual translation extension has helped more than 1 million users overcome language barriers and access knowledge from around the world.

SiliconFlow's SiliconCloud was recently among the first platforms to offer a series of large models such as GLM4, Qwen2, DeepSeek V2, and Yi, and these models run very fast. How can the two be combined to improve translation in Immersive Translation?

First, the results

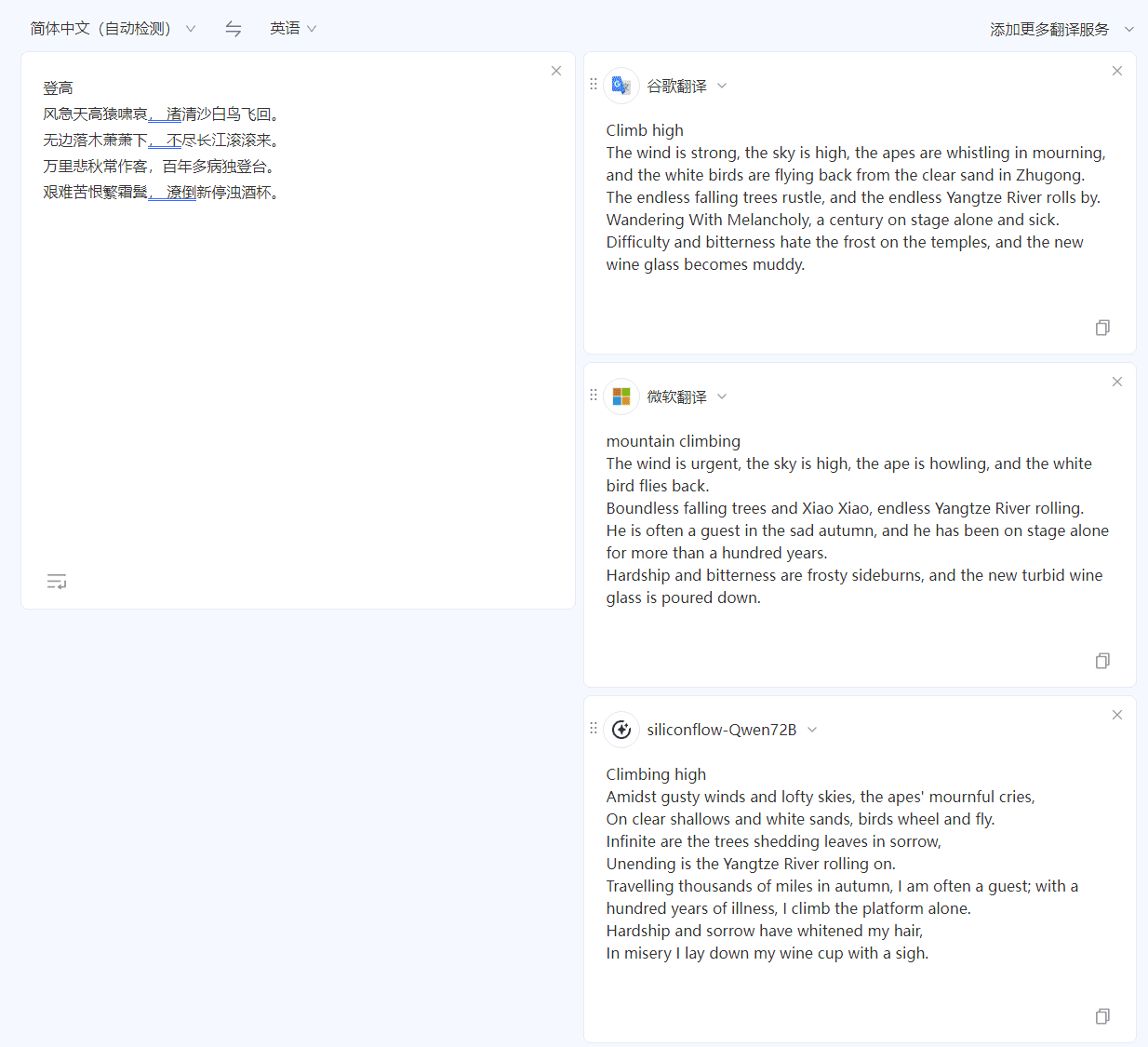

Take familiar Chinese-English translation as an example. In a Chinese-to-English test using Du Fu's classic poem "Ascending Heights", the Qwen 72B model served by SiliconCloud showed a more accurate understanding of the Chinese than Google Translate and Microsoft Translator, and its translation stayed closer to the style of the original.

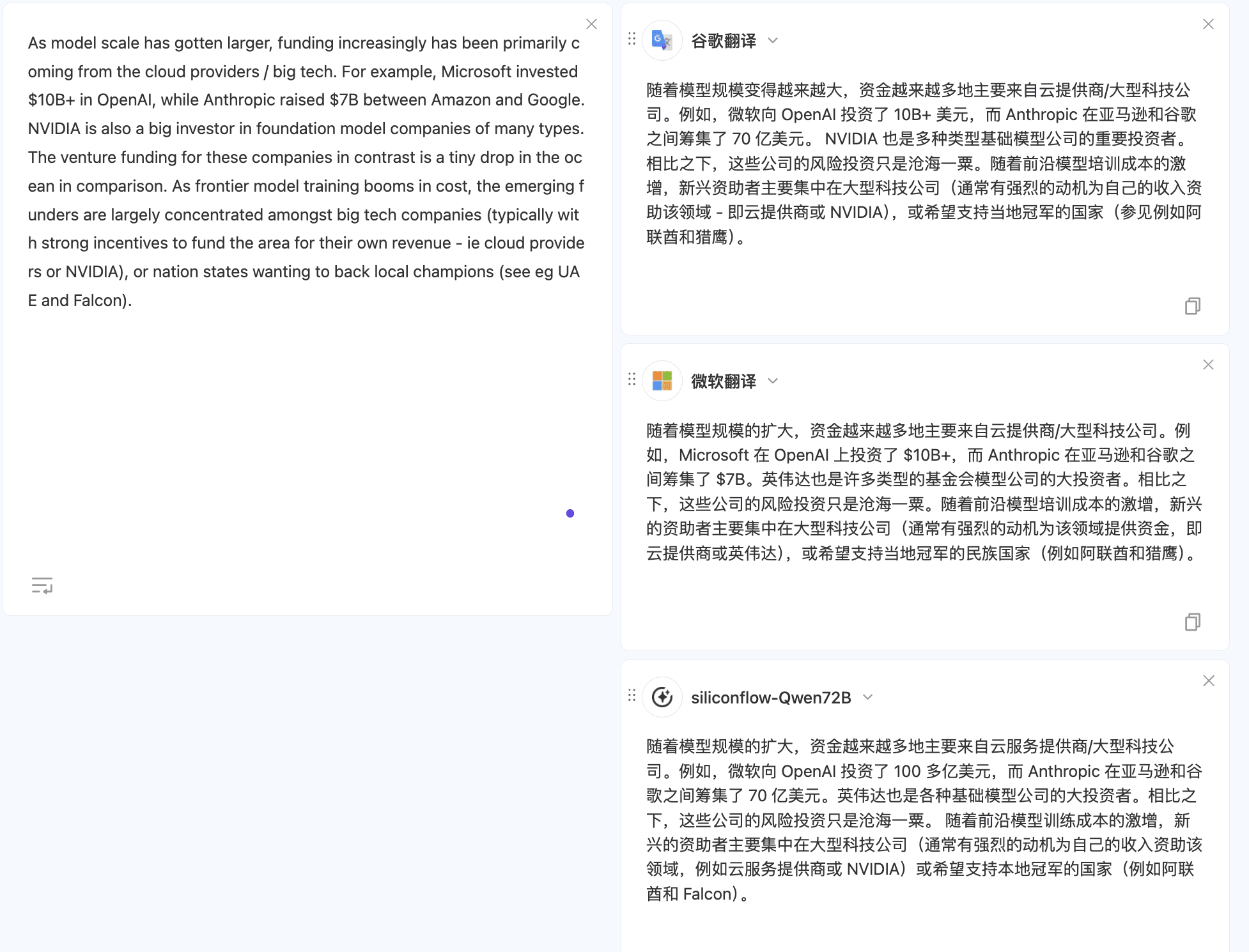

When translating the same text from English to Chinese, the SiliconCloud model also produced better, smoother translations.

To try other scenarios, you can use Immersive Translation's "Translated Document Multi-Model Comparison" feature. How to configure the SiliconCloud models is described in the configuration process below.

Configuration process: default configuration

- Select "Install Browser Plug-in" on the Immersive Translation website and follow the instructions. If you have already installed the plug-in, skip this step.

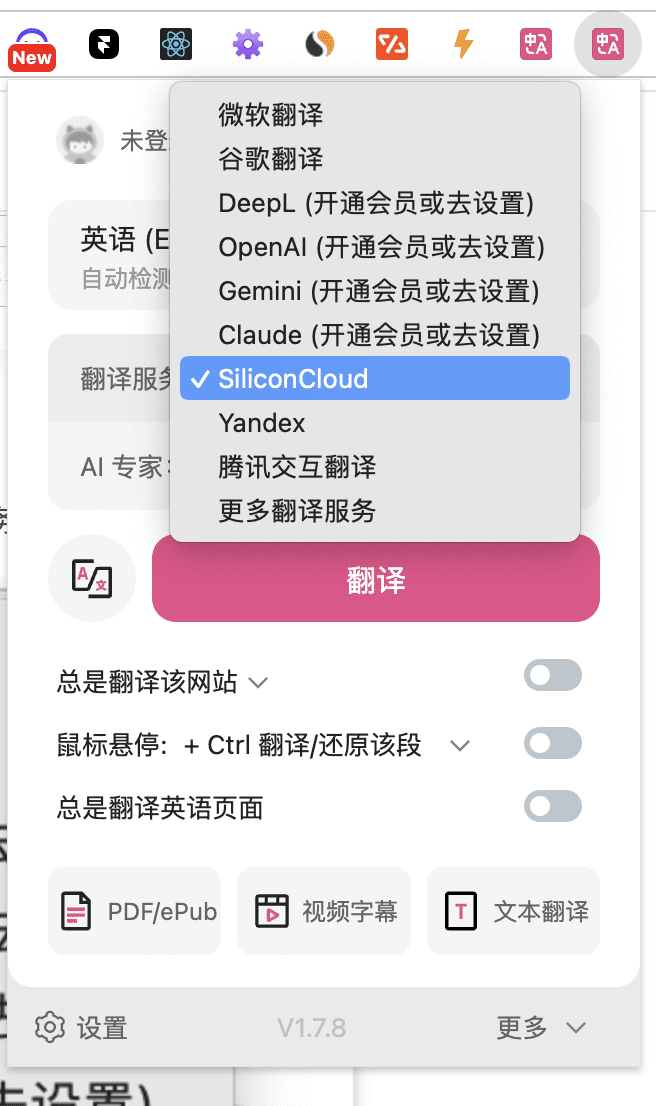

- Click the Immersive Translation extension icon and set "Translation Service" to "SiliconCloud" to use SiliconCloud's translation service.

This completes the default setup.

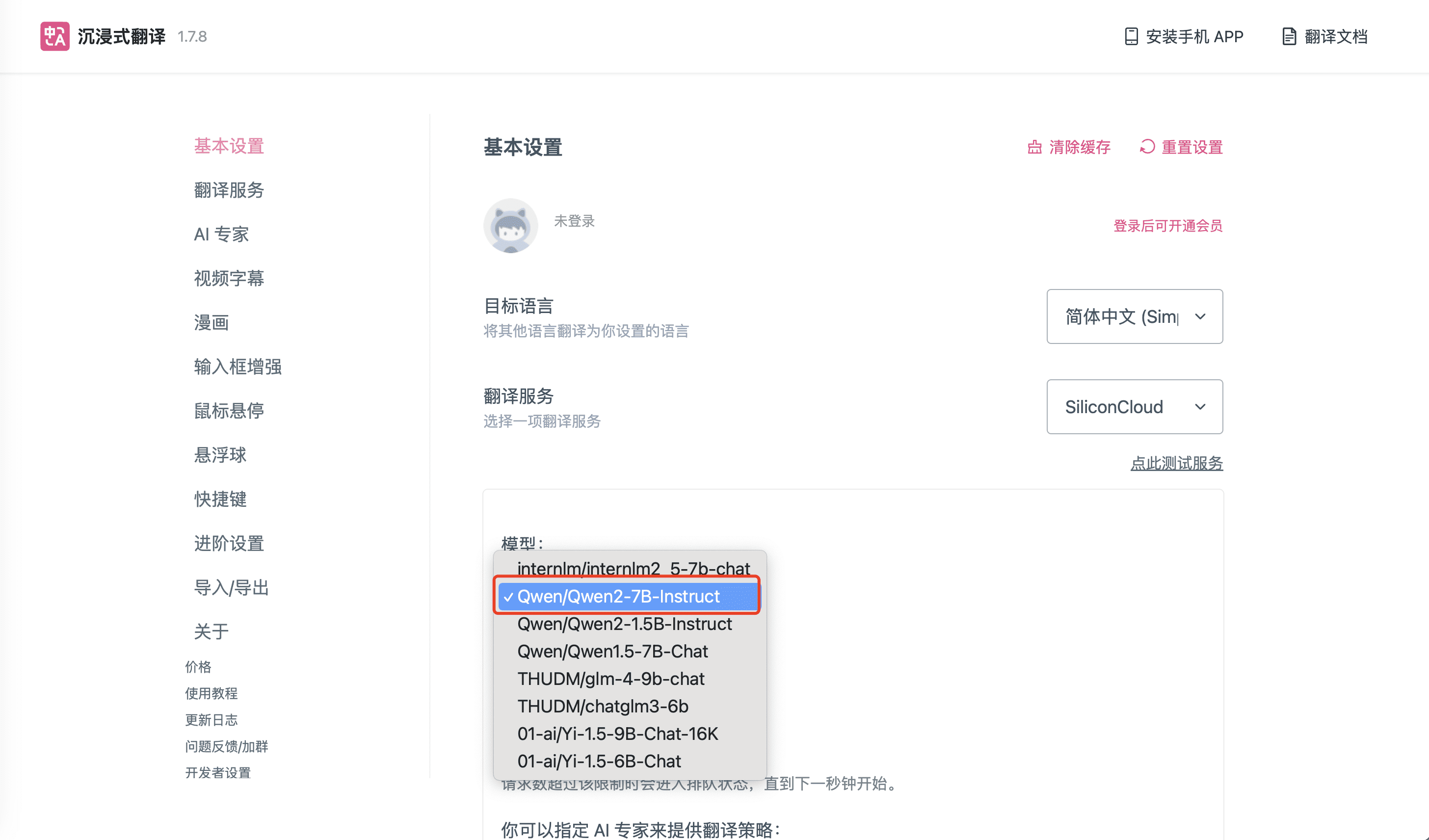

Selecting other free SiliconCloud models beyond the default:

- The default model in the setup above is SiliconCloud's "Qwen/Qwen2-7B-Instruct". To switch to another SiliconCloud model, click "Settings" in the extension icon menu and adjust the SiliconCloud options there.

Selecting SiliconCloud models of other sizes beyond the default:

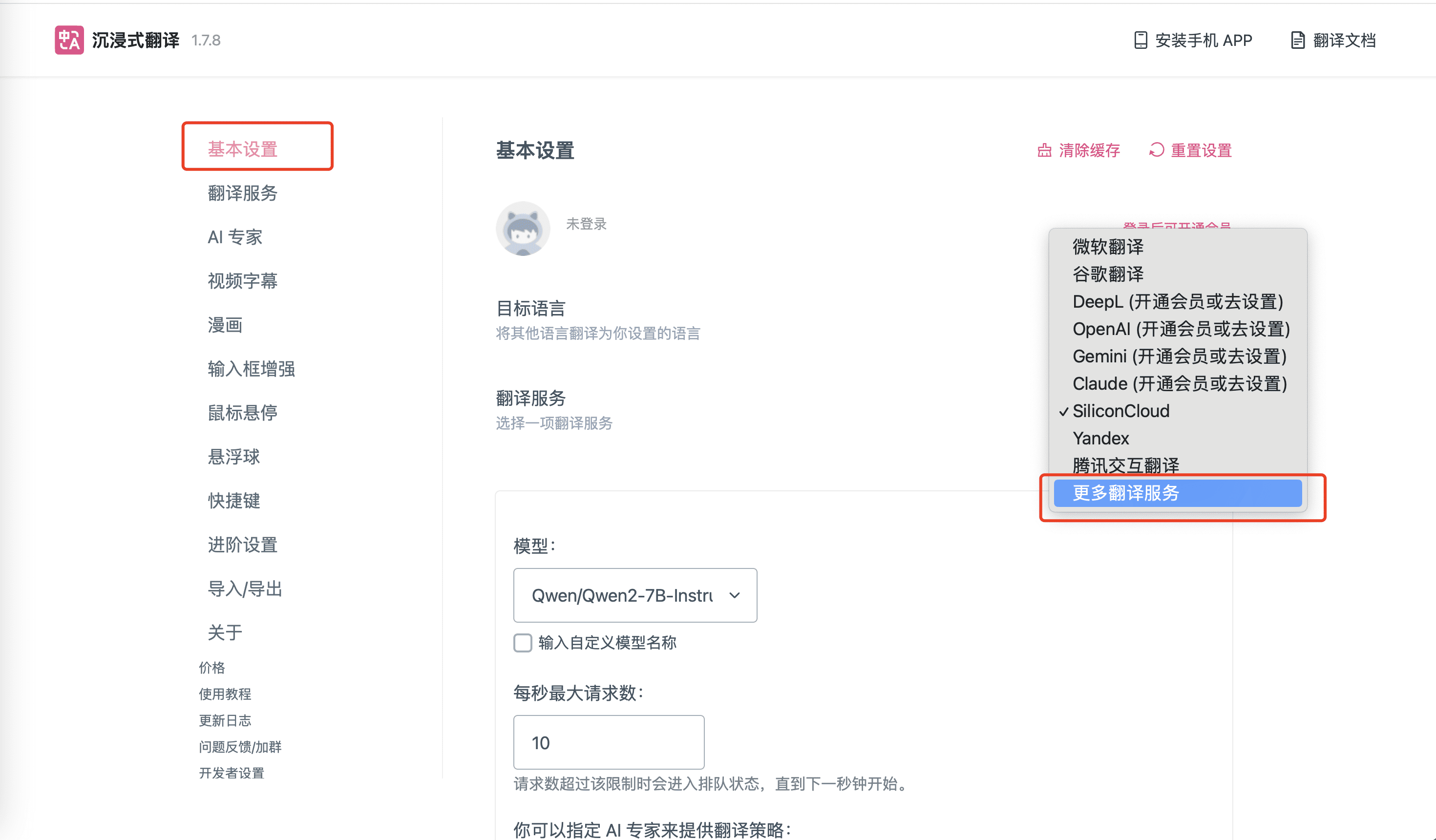

- To use a SiliconCloud model other than the 8 models above, click the Immersive Translation extension icon, open "Settings", and select "More Translation Services", as shown in the figure below.

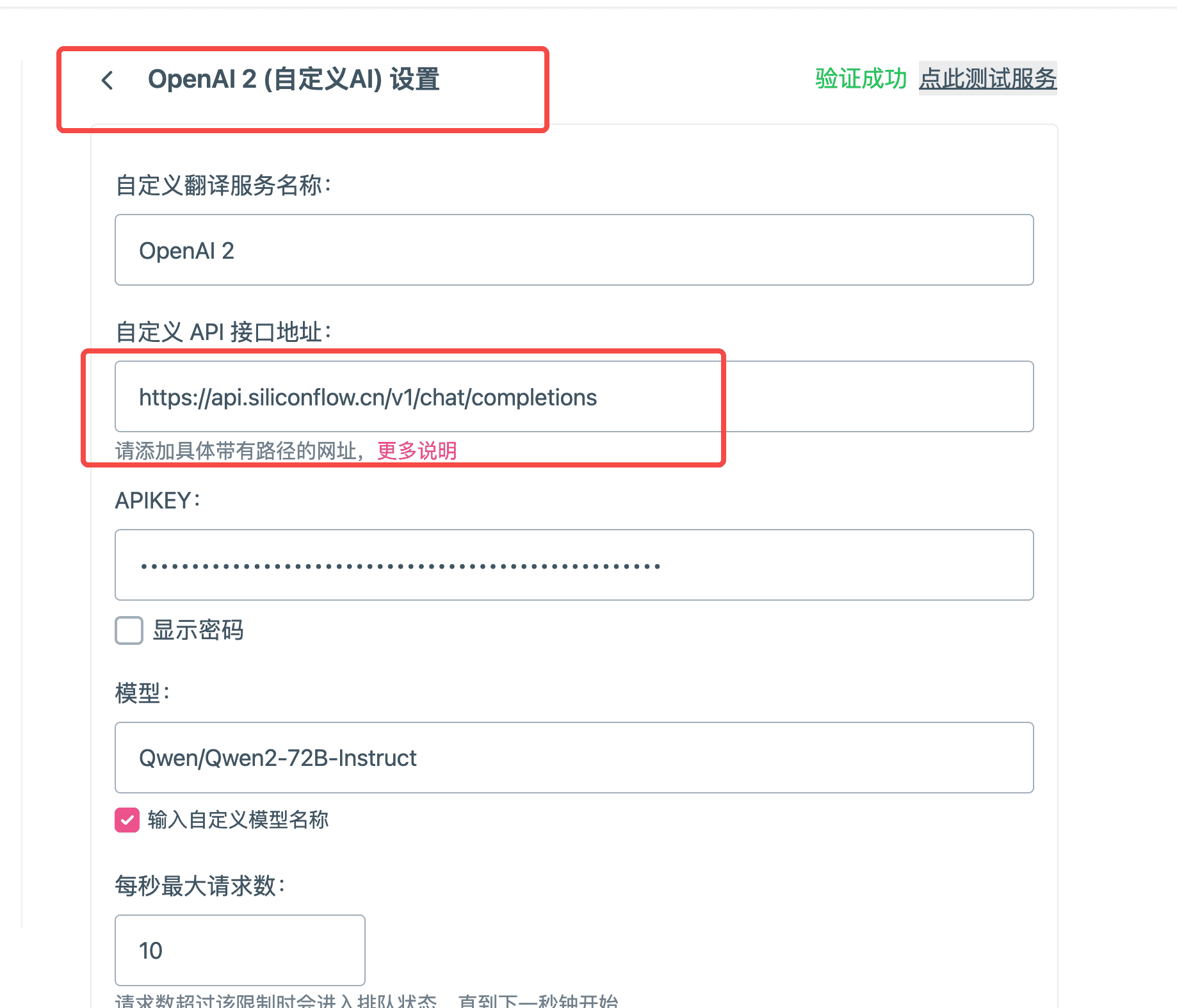

- Add the SiliconCloud model's details there:

- Custom translation service name: any name that suits you; here we use "Qwen/Qwen2-72B-Instruct".

- Custom API address: https://api.siliconflow.cn/v1/chat/completions

- For more details, see https://docs.siliconflow.cn/reference/chat-completions-1

- API key: click "Copy" on the API Keys page of your SiliconCloud account and paste the key here.

- Model name: the model's name on SiliconCloud, here "Qwen/Qwen2-72B-Instruct". The platform's Model List shows the names of all currently supported models.

- Click "Test" in the upper-right corner to verify that the configuration works.

- With these items configured, you can use SiliconCloud's large language models for translation in the Immersive Translation plugin.
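To see roughly what a custom translation service sends to the API address above, here is a minimal sketch of a translation request body and reply parser. The system-prompt wording, the function names, and the OpenAI-style request and response shapes are assumptions for illustration; Immersive Translation's actual prompts are not documented here.

```python
API_ADDRESS = "https://api.siliconflow.cn/v1/chat/completions"  # from the settings above
MODEL_NAME = "Qwen/Qwen2-72B-Instruct"


def translation_payload(text: str, target_lang: str = "English", model: str = MODEL_NAME) -> dict:
    """Build a chat request body asking the model to translate `text` (hypothetical prompt)."""
    system_prompt = (
        f"You are a translator. Translate the user's text into {target_lang}. "
        "Output only the translation."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": text},
        ],
    }


def extract_translation(response: dict) -> str:
    """Pull the translated text out of an (assumed) OpenAI-style chat response."""
    return response["choices"][0]["message"]["content"].strip()
```

Swapping `model` for another name from the model list is all it takes to compare models on the same text.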

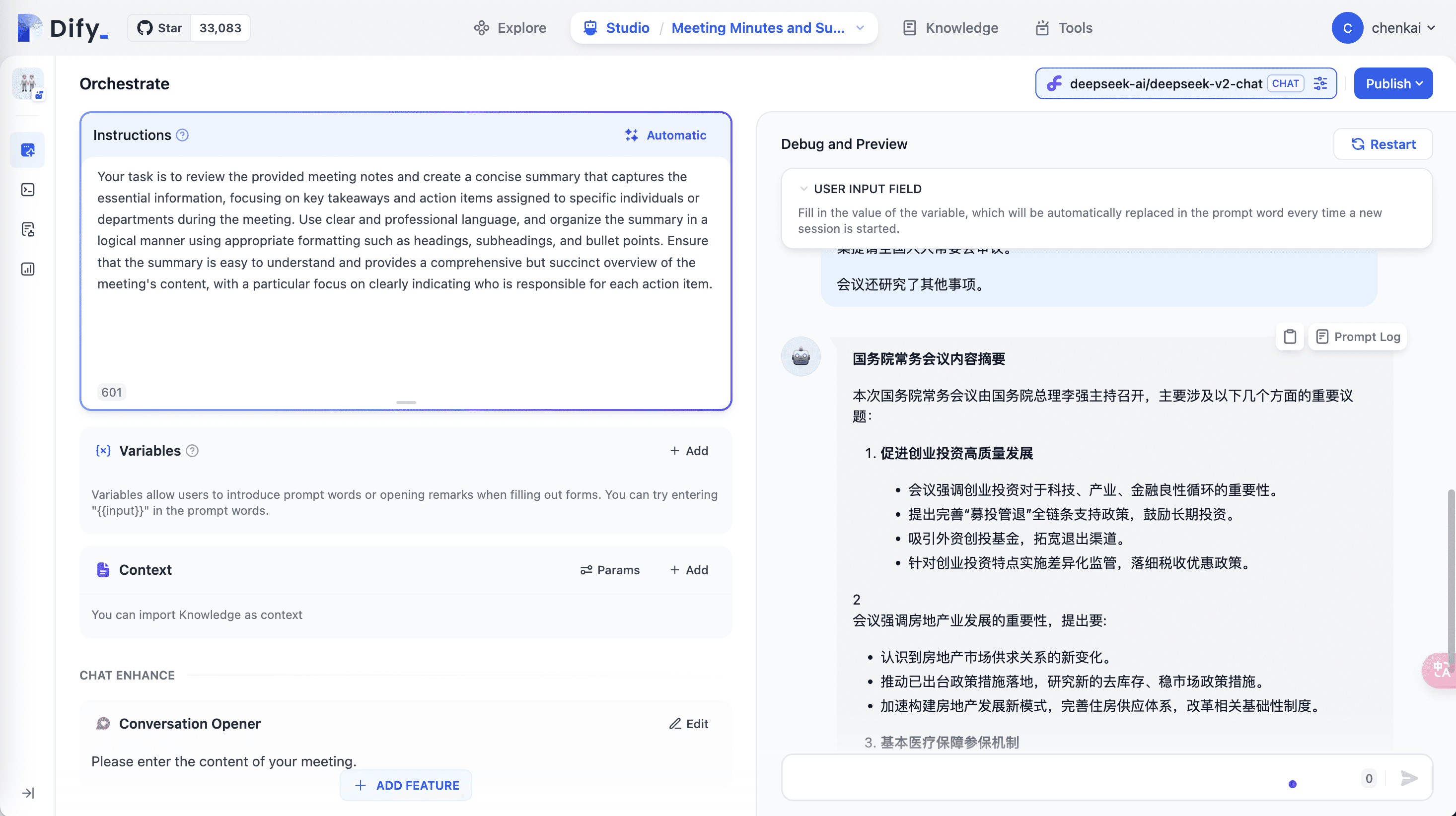

Using the SiliconCloud API in Dify

Using the SiliconCloud models built into Dify

As frequent users of Dify workflows, we were eager to connect SiliconCloud as soon as it began offering the latest models such as GLM4, Qwen2, DeepSeek V2, and Yi, all running very fast.

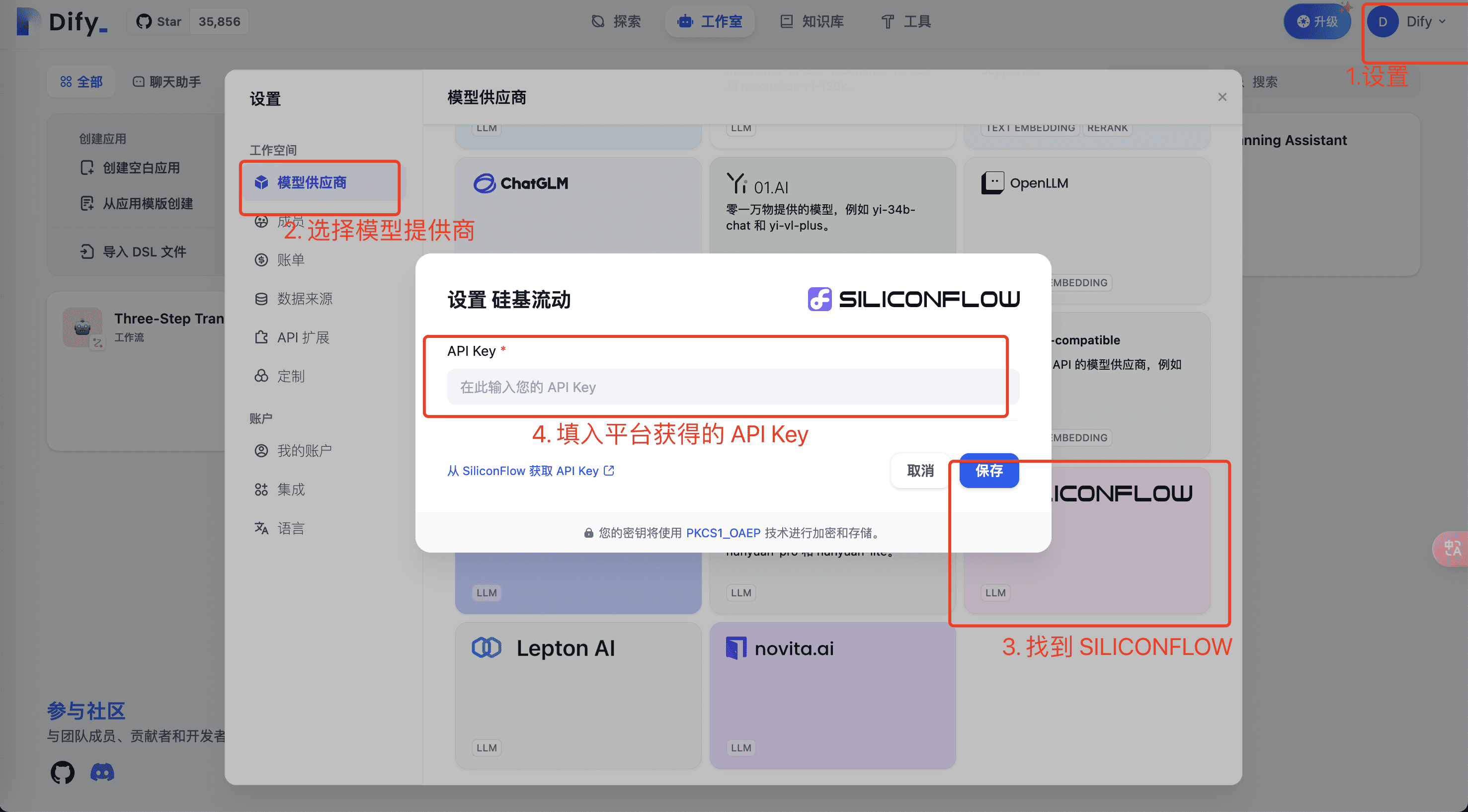

- In Dify's Settings, configure the model provider with the API key of your SiliconCloud account.

- Fill in the SiliconCloud model and API key information and click "Save" to verify it. Get your API key from the SiliconCloud API Keys page and paste it into the field above.

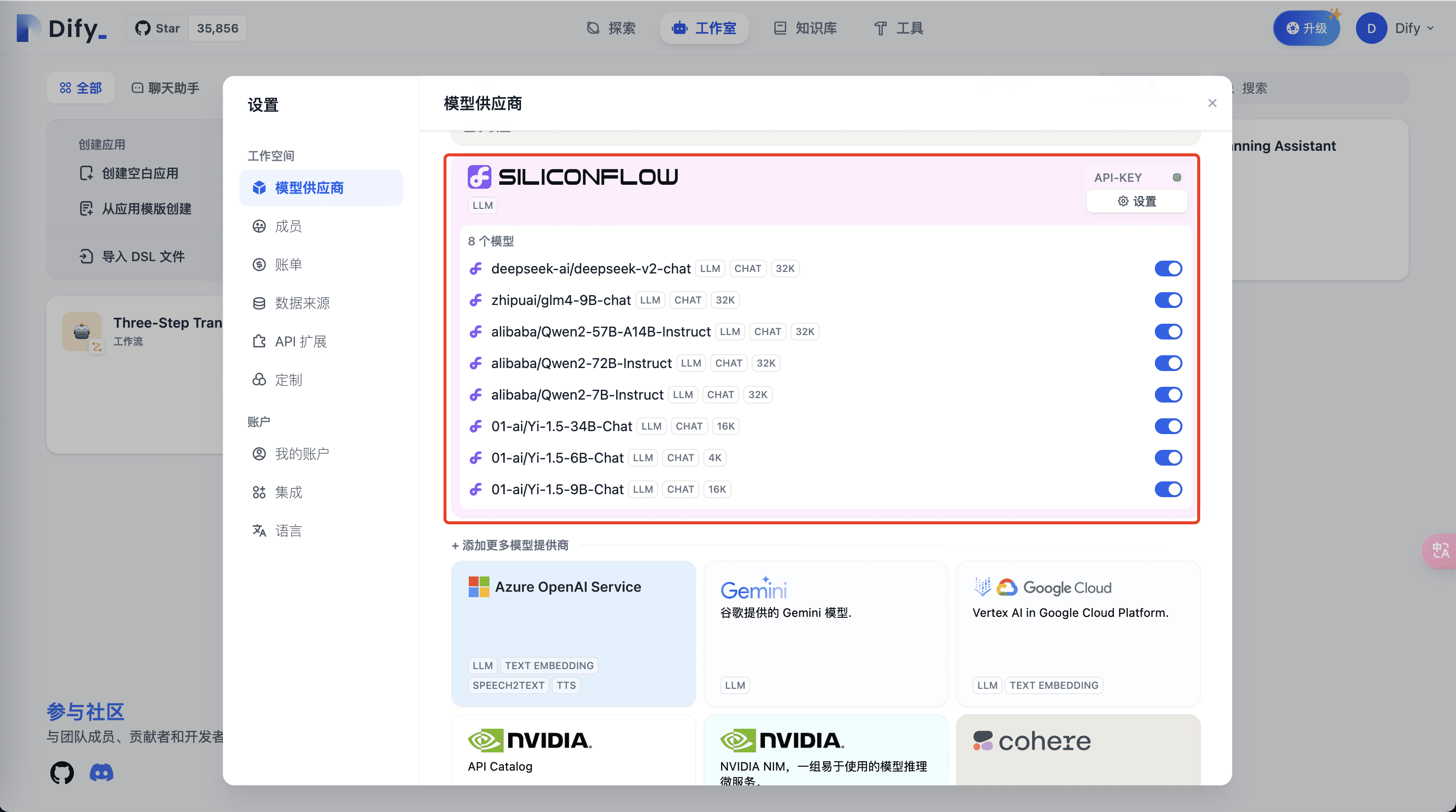

- SiliconFlow's models then appear at the top of the model provider list.

- Use the SiliconCloud models in your applications.

After these steps, you can use SiliconFlow's wide range of fast LLMs in the applications you build with Dify.

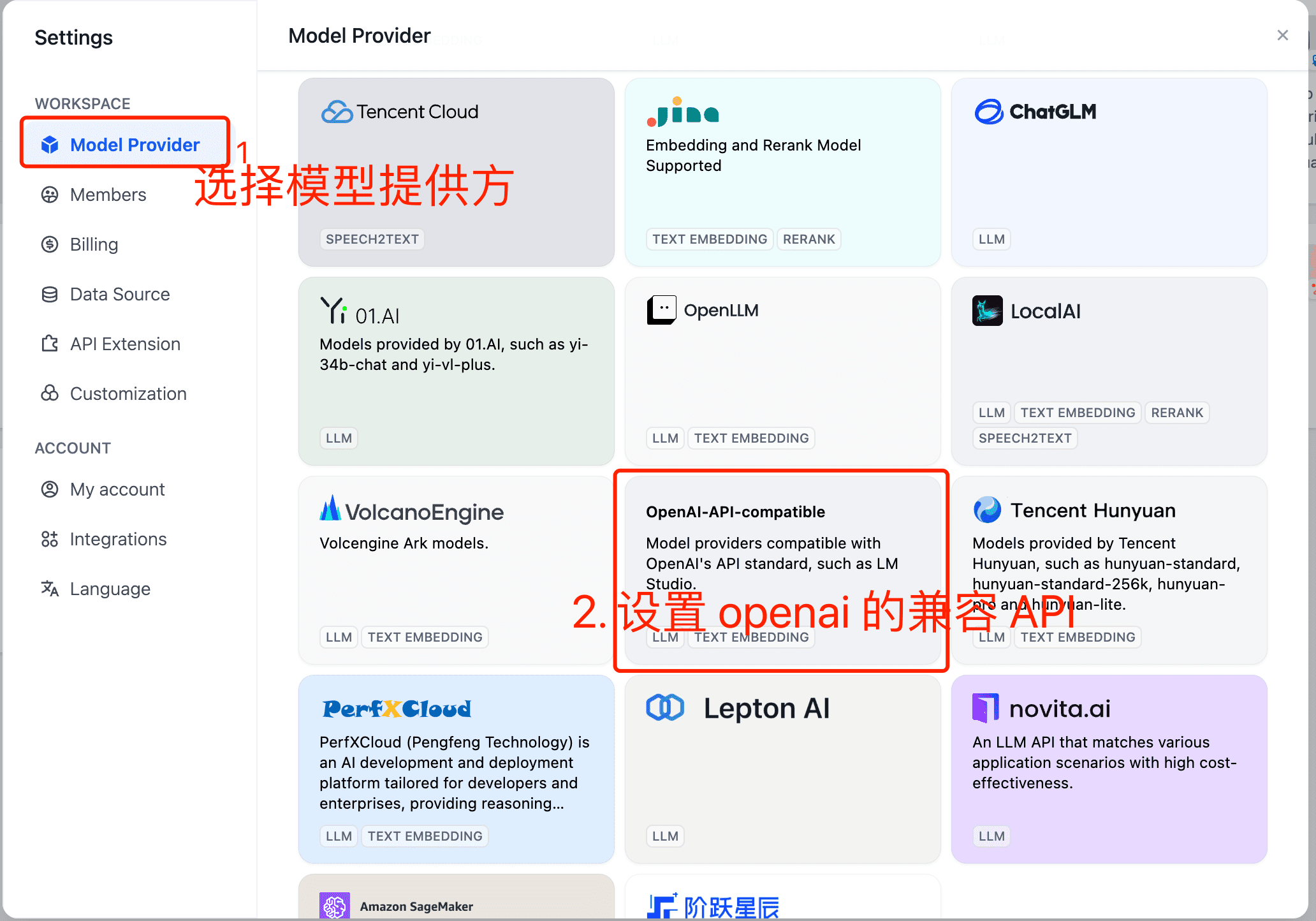

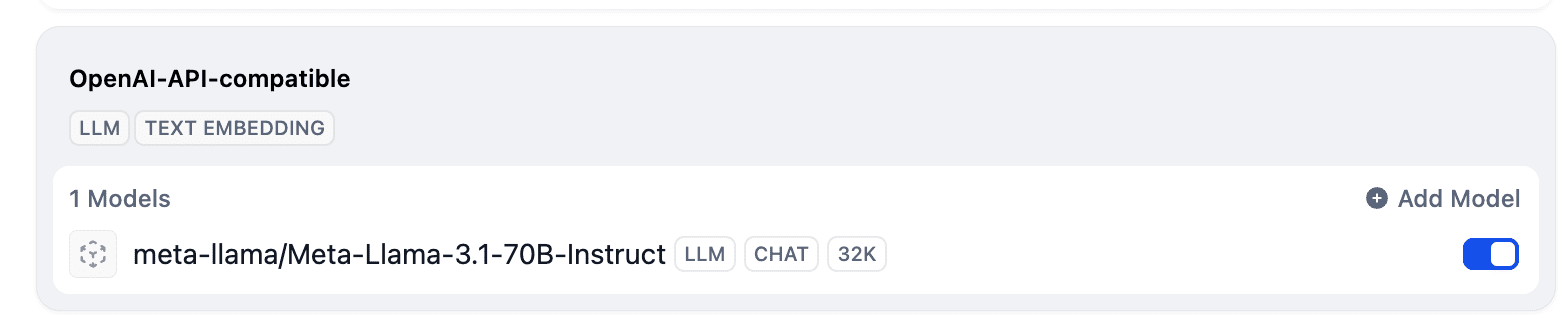

SiliconCloud models not yet in the Dify source code

New models are still being added to SiliconCloud, and because the two projects release on different schedules, some models may not appear in Dify right away. In that case, set them up as follows.

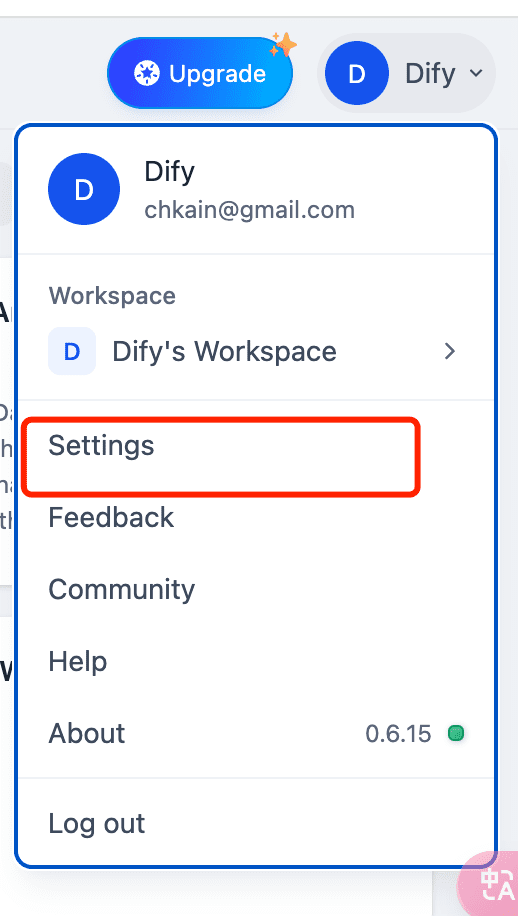

- Open Dify's settings.

- In Settings, select "Model Provider" and choose the OpenAI-compatible API provider.

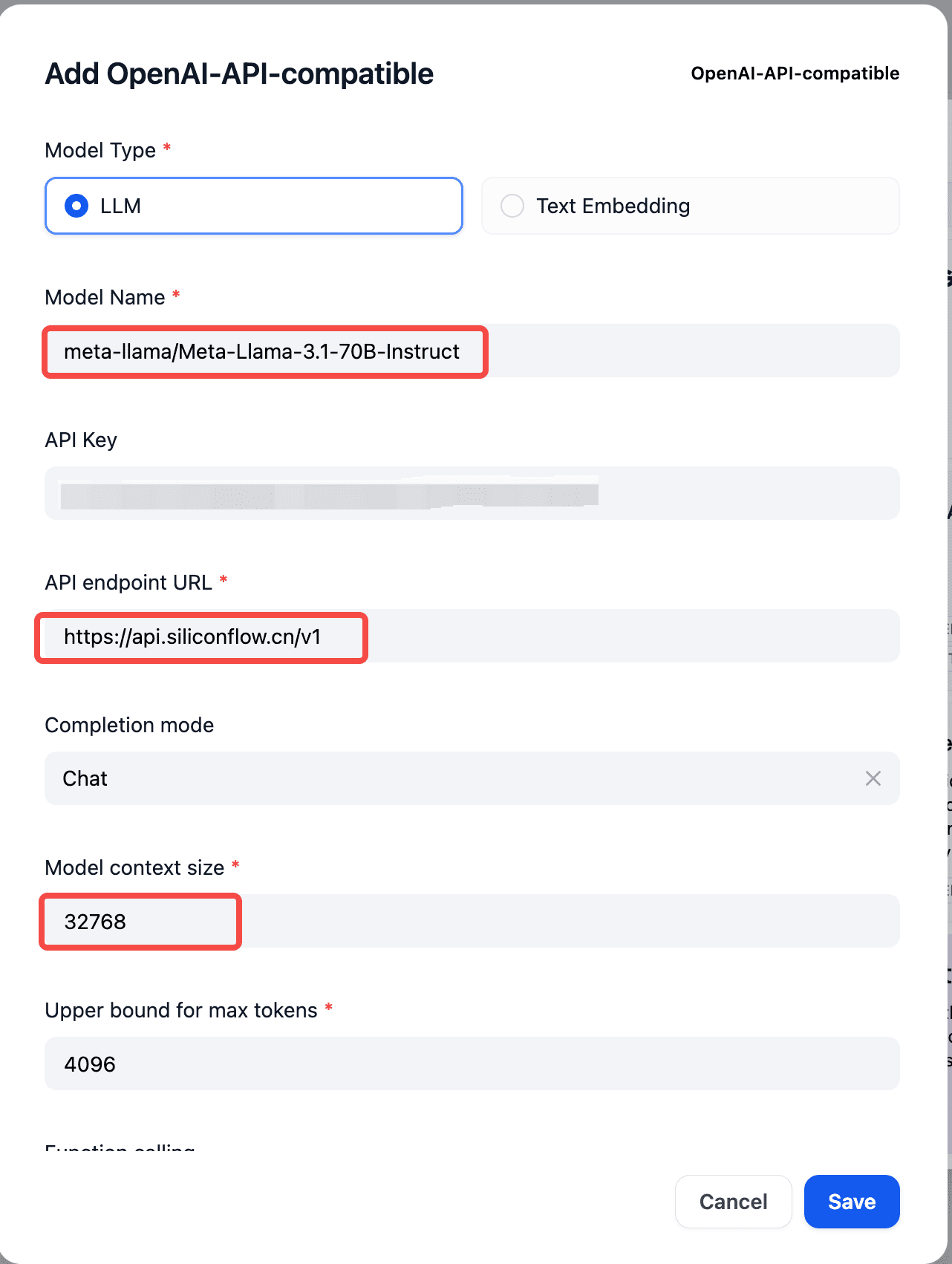

- Enter the SiliconCloud model name, API key, and API endpoint URL.

Model Name: look it up in the documentation at https://docs.siliconflow.cn/reference/chat-completions-1

API Key: get it from https://cloud.siliconflow.cn/account/ak. Note that if you use overseas models, you must complete real-name authentication as described in https://docs.siliconflow.cn/docs/use-international-outstanding-models

API endpoint URL: https://api.siliconflow.cn/v1

- Once setup is complete, the models you added appear in the model list.
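Note that Dify's "API endpoint URL" is the base URL (`https://api.siliconflow.cn/v1`), while Immersive Translation asks for the full `/chat/completions` address. A common mistake is pasting one where the other is expected. The sketch below, with hypothetical helper names, normalizes either form to the base URL and joins paths safely; that an OpenAI-compatible client appends paths such as `/chat/completions` or `/models` to the base is an assumption about typical client behavior, not something this article confirms for Dify.

```python
BASE_URL = "https://api.siliconflow.cn/v1"
CHAT_SUFFIX = "/chat/completions"


def normalise_base(url: str) -> str:
    """Turn either the base URL or the full chat URL into the base URL."""
    url = url.strip().rstrip("/")
    if url.endswith(CHAT_SUFFIX):
        url = url[: -len(CHAT_SUFFIX)]
    return url


def endpoint(base_url: str, path: str) -> str:
    """Join a base URL and an API path without losing the "/v1" segment.

    urllib.parse.urljoin("https://api.siliconflow.cn/v1", "chat/completions")
    would replace "v1" with "chat", so plain string joining is used instead.
    """
    return base_url.rstrip("/") + "/" + path.lstrip("/")
```

For example, `endpoint(normalise_base(url), "chat/completions")` yields the full chat address regardless of which form was pasted.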

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
