Guarding AI's Bottom Line: Man Who Spread the "Buried Boy" Picture Detained! These Tricks Teach You to Tell Whether a Photo Is Real

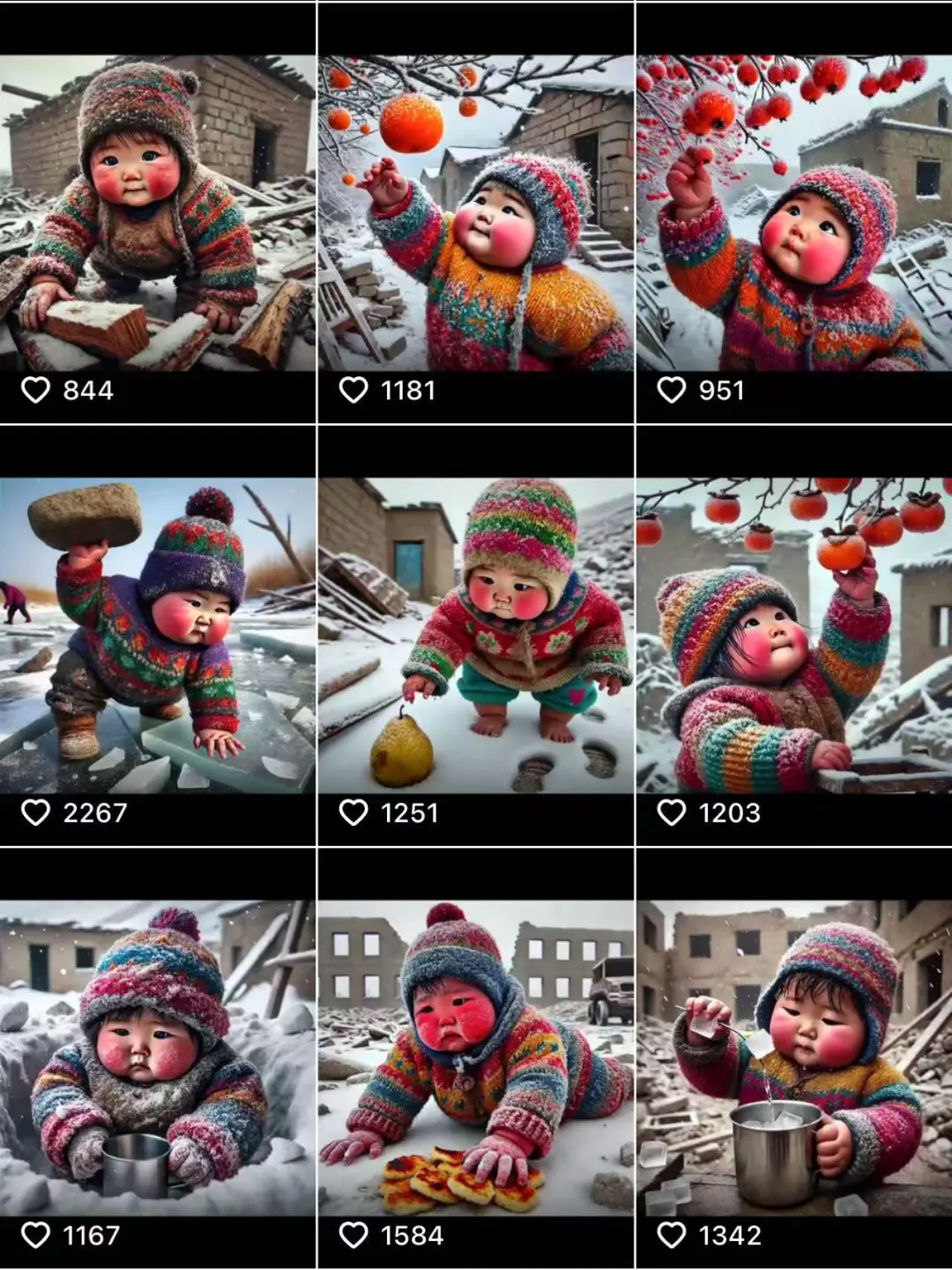

On January 7, a magnitude 6.8 earthquake struck Tingri County, Tibet. Many people followed the rescue efforts closely and prayed for the safety of those in the affected areas. Amid this outpouring of goodwill and concern, a picture of a "little boy buried under the rubble" quickly went viral online.

Captioned "Rikaze earthquake" (Rikaze, also known as Shigatse, is the prefecture-level city that includes Tingri County), the picture moved countless people to tears and drew tens of thousands of likes and reposts. Few realized that it was a fake generated by AI.

The Truth Emerges: Stolen AI Content

At first glance, the picture shows a young boy in a hat, buried in rubble, in a dire and heartbreaking situation. It does indeed look like a photo taken at a disaster scene.

However, sharp-eyed netizens spotted a flaw: the boy in the picture had an extra finger. Police later confirmed that the picture was AI-generated and had nothing to do with the earthquake.

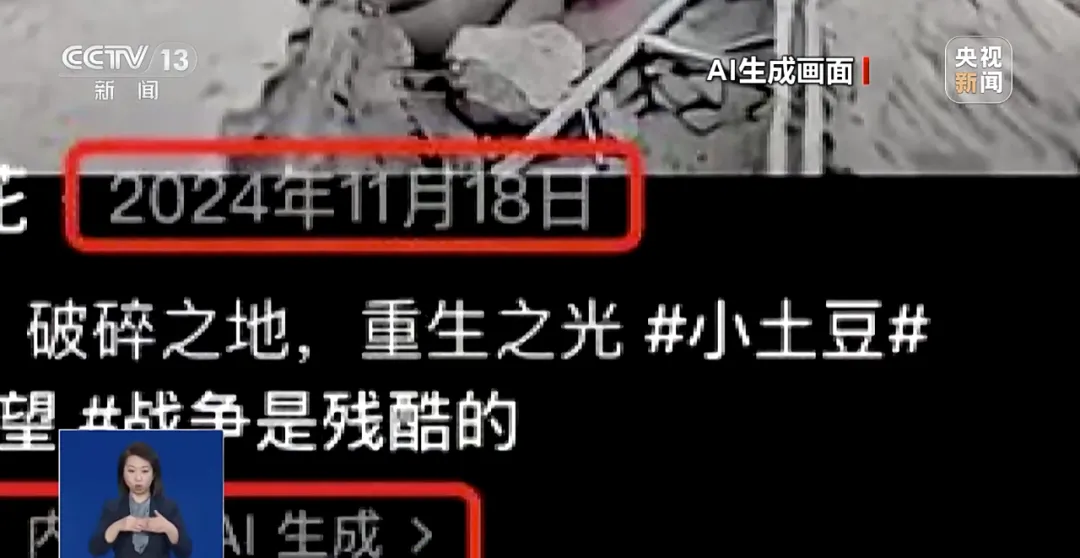

The image came from an AI content creator, Mr. He, and was originally part of a short video he released last November. Mr. He intended the AI-generated content to draw attention to children caught up in war; he did not expect the footage to be maliciously stolen and linked to the earthquake.

Rumor-mongers can't escape liability

The person who misappropriated the image was a netizen from Qinghai. To drive traffic to his account, he paired the AI-generated picture with the earthquake and tagged it with keywords such as "Rikaze earthquake" to attract attention.

This behavior not only misled the public but also added unnecessary confusion to the real rescue effort in the disaster area. The netizen was ultimately detained by police in accordance with the law.

Under the Public Security Administration Punishment Law and the relevant articles of the Criminal Law, people who spread false information about a disaster may face administrative detention or even criminal penalties. This rumor farce is another warning to everyone: the Internet is not a place beyond the law.

Is AI technology becoming a hotbed of rumors?

What many people don't realize is how cheap it has become to manufacture a rumor. With a simple prompt, a disaster video realistic enough to pass as genuine, complete with narration, can be generated in under 30 seconds.

Although the state has issued regulations requiring AI-generated content to be clearly labeled, enforcement has fallen short of expectations. In this case, the picture originally carried an "AI-generated" watermark, but the rumor-monger removed it easily.

How can ordinary people recognize "AI fake pictures"?

We need to stay vigilant about AI-generated content. Here are a few tricks to help you quickly check whether an image is authentic:

1. Observing details

AI-generated images often get details wrong, especially complex or unusual elements:

- Hands and fingers: AI often renders hands abnormally, with extra fingers, missing fingers, distorted shapes, or incorrect proportions.

- Eyes and facial features: AI-generated faces may have asymmetrical eyes, blurred edges, or disjointed features.

- Text and symbols: Text in AI-generated images is often garbled, with irregular or illegible characters.

- Background details: The background may contain confusing, disjointed objects or elements that defy the laws of physics (e.g., shadows floating detached from the objects that cast them).

2. Checking texture and logical consistency

AI-generated images sometimes lack the textures and fine detail common in the real world, and sometimes break basic logic:

- Over-smoothing: Skin or object surfaces look unnaturally smooth.

- Repeated details: Near-identical patterns recur in local areas, such as leaves or bricks.

- Light and shadow: Shadows fall in directions that do not match the position of the light source.

- Reflections: A reflection in a mirror or in water does not match the real object.

- Object proportion and placement: Objects are oddly sized or positioned, such as furniture that floats or is out of proportion.

3. Analyzing the resolution and quality of images

- Inconsistent resolution: AI-generated images may appear blurry in some areas and too sharp in others.

- Color distribution: AI images may be oversaturated or overly uniform in color, lacking the subtle transitions of a real scene.

- Pixel artifacts: When zoomed in, AI-generated images may show irregular pixel textures.

- Edge handling: Object edges may be too sharp or too blurry, so they do not blend naturally with the background.
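The "inconsistent resolution" tip can be roughly automated. The following is a minimal Python sketch, not a reliable AI detector. It assumes the photo has already been decoded into a grayscale 2D list of pixel values (loading is not shown), splits it into square tiles, scores each tile's sharpness with the variance of a simple Laplacian filter (a common blur-detection heuristic, chosen here for illustration), and flags tiles that are far sharper or blurrier than the median tile. The function names, the 16-pixel tile size, and the 4x threshold are hypothetical choices, not part of any official tool.

```python
from statistics import median


def laplacian_variance(img, top, left, size):
    """Sharpness score for one size x size tile of a grayscale image.

    Uses the variance of a 4-neighbour Laplacian. Only pixels that have
    all four neighbours are scored, so the image's outer border is skipped.
    """
    h, w = len(img), len(img[0])
    responses = []
    for y in range(max(top, 1), min(top + size, h - 1)):
        for x in range(max(left, 1), min(left + size, w - 1)):
            lap = (img[y - 1][x] + img[y + 1][x]
                   + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def sharpness_outliers(img, size=16, ratio=4.0):
    """Return sorted (tile_row, tile_col) pairs for tiles whose sharpness
    differs from the median tile by more than `ratio` in either direction.

    `img` is assumed to be a list of rows of grayscale values (0-255).
    Partial tiles at the right and bottom edges are ignored.
    """
    h, w = len(img), len(img[0])
    scores = {}
    for top in range(0, h - size + 1, size):
        for left in range(0, w - size + 1, size):
            scores[(top // size, left // size)] = laplacian_variance(img, top, left, size)
    if not scores:
        return []  # image is smaller than a single tile
    typical = median(scores.values())
    flagged = []
    for tile, score in scores.items():
        if typical == 0:
            # Mostly featureless image: any textured tile stands out.
            if score > 0:
                flagged.append(tile)
        elif score > typical * ratio or score < typical / ratio:
            flagged.append(tile)
    return sorted(flagged)
```

For example, on a 32x32 black-and-white checkerboard whose bottom-right 16x16 quarter has been painted flat gray, `sharpness_outliers(img)` returns `[(1, 1)]`. Treat flagged tiles only as places to zoom in and look more closely: genuine photos with a shallow depth of field or deliberate background blur will trip this check too.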

4. Paying attention to physics and motion details

If the image involves motion or dynamic elements:

- Motion blur: AI-generated motion blur may not match how the object is actually moving.

- Trajectories: Fast-moving objects may lack a believable trajectory or the overlapping ghost images a real camera would capture.

- Physical dynamics: Natural motion captured in real photos (e.g., wind blowing through leaves) can be hard for AI to render convincingly.

5. Understanding the styles of AI image tools

Different AI tools generate images in different styles:

- Midjourney: Images tend to look highly artistic, often with added blur or oversharpening.

- Stable Diffusion: Edges and small objects are sometimes distorted.

- DALL-E: Logical or detail errors may appear when it generates complex scenes.

Technology is just a tool; how well it is used depends on people's hearts and minds

The "buried boy" incident is a mirror that reflects both sides of AI technology: it can help us create new possibilities, or, when misused, become an engine for rumors.

But that doesn't mean we should "stay away" from AI technology. On the contrary, we need legal regulation, better technology, and a sense of responsibility in every one of us. In the Internet era, the line between truth and falsehood can be very blurred, but your calm, rational judgment can leave carefully woven lies with no soil to grow in.

© Copyright notes

This article is copyrighted by AI Sharing Circle. All rights reserved; please do not reproduce without permission.
