Using the DeepSeek-R1 API: Frequently Asked Questions

DeepSeek-R1 API

The standard model name is: deepseek-reasoner
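As a rough illustration of where the model name goes, the sketch below builds a request body by hand. It assumes an OpenAI-style chat-completions layout (a `model` string plus a `messages` list), which this article does not spell out; `build_chat_request` is a hypothetical helper, not part of any SDK.

```python
import json

MODEL_NAME = "deepseek-reasoner"  # the standard model name given above


def build_chat_request(user_text, system_text=None):
    """Return a JSON-serializable request body (assumed OpenAI-style layout)."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return {"model": MODEL_NAME, "messages": messages}


body = build_chat_request(
    "Which is larger, 9.11 or 9.8?",
    system_text="You are a careful assistant.",
)
print(json.dumps(body, indent=2))
```

The body would then be sent with whatever HTTP client or SDK you already use; authentication and the endpoint are left out here on purpose.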

DeepSeek-R1 supports cache hits

Cache hits mainly help in two cases: high-frequency inputs that reuse the same few-shot examples, and large document inputs that are queried many times. Content shorter than 64 tokens is not cached.

The input portion of a request, that is, the system and user messages, is what can count as a cache hit.

Cached content is time-sensitive and typically persists from a few hours to a few days.
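One way to arrange requests so that repeated input can be reused is to keep the unchanging part byte-for-byte identical and put only the new question at the end. The sketch below assumes that caching matches on a shared leading portion of the input, which the text above does not state explicitly; the prompt wording and `build_messages` are illustrative only.

```python
# Fixed part reused verbatim across requests (system prompt plus few-shot examples).
SHARED_PREFIX = [
    {"role": "system", "content": "Classify each review as positive or negative."},
    {"role": "user", "content": "Review: The battery lasts all day."},
    {"role": "assistant", "content": "positive"},
    {"role": "user", "content": "Review: The screen cracked within a week."},
    {"role": "assistant", "content": "negative"},
]


def build_messages(new_review):
    """Append only the changing part after the unchanged prefix."""
    return SHARED_PREFIX + [{"role": "user", "content": f"Review: {new_review}"}]


first = build_messages("Fast shipping and works great.")
second = build_messages("Stopped charging after two days.")

# Everything except the final message is identical between the two requests.
print(first[:-1] == second[:-1])
```

Because very short content is not cached, a shared prefix only pays off when it is reasonably long, as with few-shot examples or a large document.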

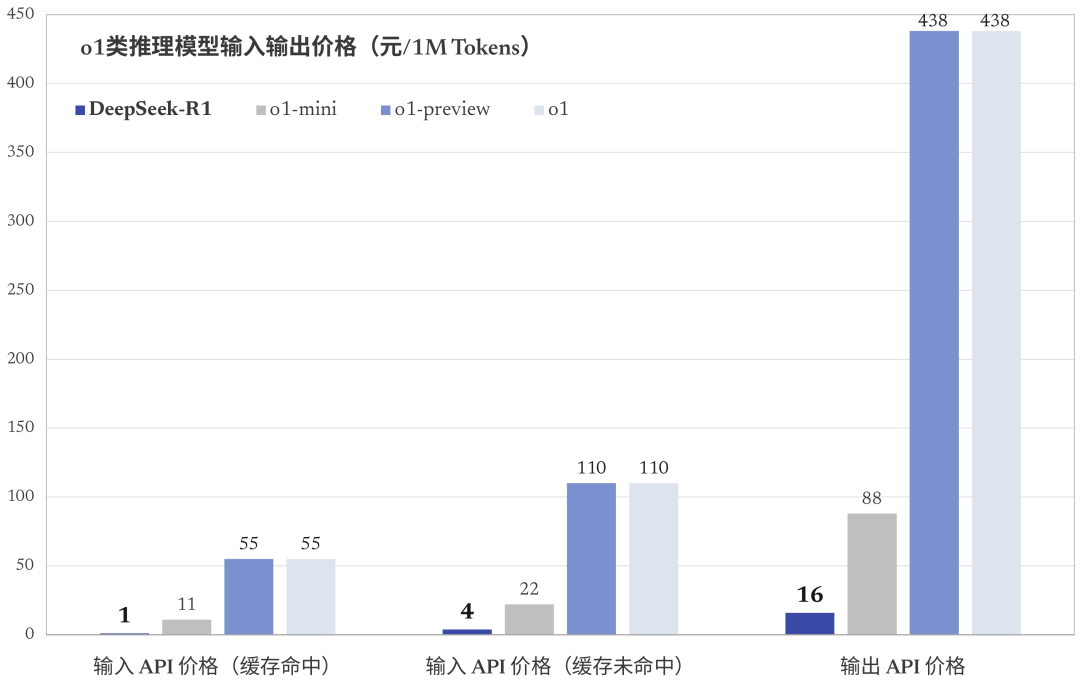

Price for input tokens that hit the cache: ¥1 per million tokens
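To see how the cache-hit price affects an input bill, here is a small arithmetic sketch. The function is hypothetical, prices are passed in per million tokens in whatever currency the price list uses, and the cache-miss price of 4.0 is only a placeholder for illustration, since this article gives the cache-hit price alone.

```python
def input_cost(hit_tokens, miss_tokens, hit_price_per_million, miss_price_per_million):
    """Cost of the input side of a request, split by cache hit and cache miss."""
    hit_cost = hit_tokens / 1_000_000 * hit_price_per_million
    miss_cost = miss_tokens / 1_000_000 * miss_price_per_million
    return hit_cost + miss_cost


# 800k input tokens served from cache, 200k not; miss price is a placeholder.
with_cache = input_cost(800_000, 200_000, hit_price_per_million=1.0, miss_price_per_million=4.0)
without_cache = input_cost(0, 1_000_000, hit_price_per_million=1.0, miss_price_per_million=4.0)
print(f"with cache: {with_cache:.2f}, without cache: {without_cache:.2f}")
```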

DeepSeek-R1 Output Issues

DeepSeek-R1 output consists of chain-of-thought output and answer output. Both count as output tokens and are billed at the same rate.
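The two parts of the output can be pulled apart as in the sketch below. It uses the field names `reasoning_content` and `content` that this article gives in the context section, and assumes an OpenAI-style response layout (`choices[0].message`); the sample response is hand-written, not real API output.

```python
# Hand-written sample shaped like an OpenAI-style chat completion (an assumption).
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "reasoning_content": "Compare the decimals: 0.11 is less than 0.80.",
                "content": "9.8 is larger.",
            }
        }
    ]
}


def split_output(response):
    """Return (chain_of_thought, answer) from the first choice."""
    message = response["choices"][0]["message"]
    # Default to "" in case a response carries no chain of thought.
    return message.get("reasoning_content", ""), message["content"]


thought, answer = split_output(sample_response)
print("Chain of thought:", thought)
print("Answer:", answer)
```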

The API supports a context of up to 64K tokens, and chain-of-thought output is not counted toward that length.

The chain-of-thought output can be set to a maximum of 32K tokens (reasoning_effort); the answer output can be set to a maximum of 8K tokens (max_tokens).
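A client can guard against asking for more answer tokens than these limits allow. The sketch below is a hypothetical helper, not part of the API: it reads "8K" as 8192 and "64K" as 65536, and it assumes the prompt and the answer share the 64K context, none of which the text above confirms.

```python
MAX_ANSWER_TOKENS = 8 * 1024     # "8K" read as 8192 (assumption)
MAX_CONTEXT_TOKENS = 64 * 1024   # "64K" read as 65536 (assumption)


def answer_budget(requested_max_tokens, prompt_tokens):
    """Clamp a requested max_tokens value to the limits described above."""
    if prompt_tokens >= MAX_CONTEXT_TOKENS:
        raise ValueError("prompt alone exceeds the context limit")
    remaining = MAX_CONTEXT_TOKENS - prompt_tokens
    return max(1, min(requested_max_tokens, MAX_ANSWER_TOKENS, remaining))


print(answer_budget(20_000, prompt_tokens=1_000))   # capped by the answer limit
print(answer_budget(8_000, prompt_tokens=65_000))   # capped by the remaining context
```

The chain of thought is left out of the calculation because, as stated above, it does not count toward the context length.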

DeepSeek-R1 Context Splicing

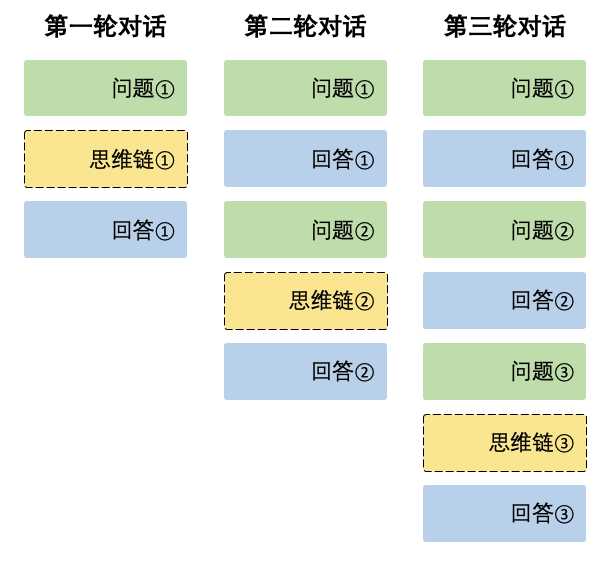

In each round of the dialog, the model outputs chain-of-thought content (reasoning_content) and the final answer (content). In the next round, the chain-of-thought content from the previous round is not spliced into the context, as the figure above shows.

Do not keep the previous round's chain of thought in the context; if it is retained, the output answer becomes confused.
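A minimal sketch of a multi-round history in which only the final answer (content) from each round is carried forward and reasoning_content is left out. It assumes OpenAI-style message dictionaries; `append_assistant_turn` is a hypothetical helper and the reply is hand-written.

```python
def append_assistant_turn(history, assistant_message):
    """Add the assistant's answer to the history without its chain of thought."""
    history.append({"role": "assistant", "content": assistant_message["content"]})
    return history


history = [{"role": "user", "content": "Which is larger, 9.11 or 9.8?"}]

# Hand-written stand-in for the model's first-round reply.
reply = {
    "role": "assistant",
    "reasoning_content": "Compare the decimals: 0.11 is less than 0.80.",
    "content": "9.8 is larger.",
}

append_assistant_turn(history, reply)
history.append({"role": "user", "content": "By how much?"})

for message in history:
    print(message["role"], "->", message["content"])
```

Copying only `content` rather than appending the whole reply is the simplest way to make sure the chain of thought never reaches the next request.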

About using third-party DeepSeek-R1 APIs

Note that third-party APIs may be incompatible with the official format, for example SiliconFlow.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
