Sherpa-ONNX: Offline Speech Recognition and Synthesis with ONNXRuntime

General Introduction

sherpa-onnx is an open-source project developed by the Next-gen Kaldi team that provides efficient offline speech recognition and speech synthesis. It runs on multiple platforms, including Android, iOS, and Raspberry Pi, and can process speech in real time without an internet connection. Built on ONNX Runtime, it offers speech-to-text (ASR), text-to-speech (TTS), and voice activity detection (VAD) for embedded systems and mobile devices. In addition to fully offline use, the project supports communication between a WebSocket server and client.

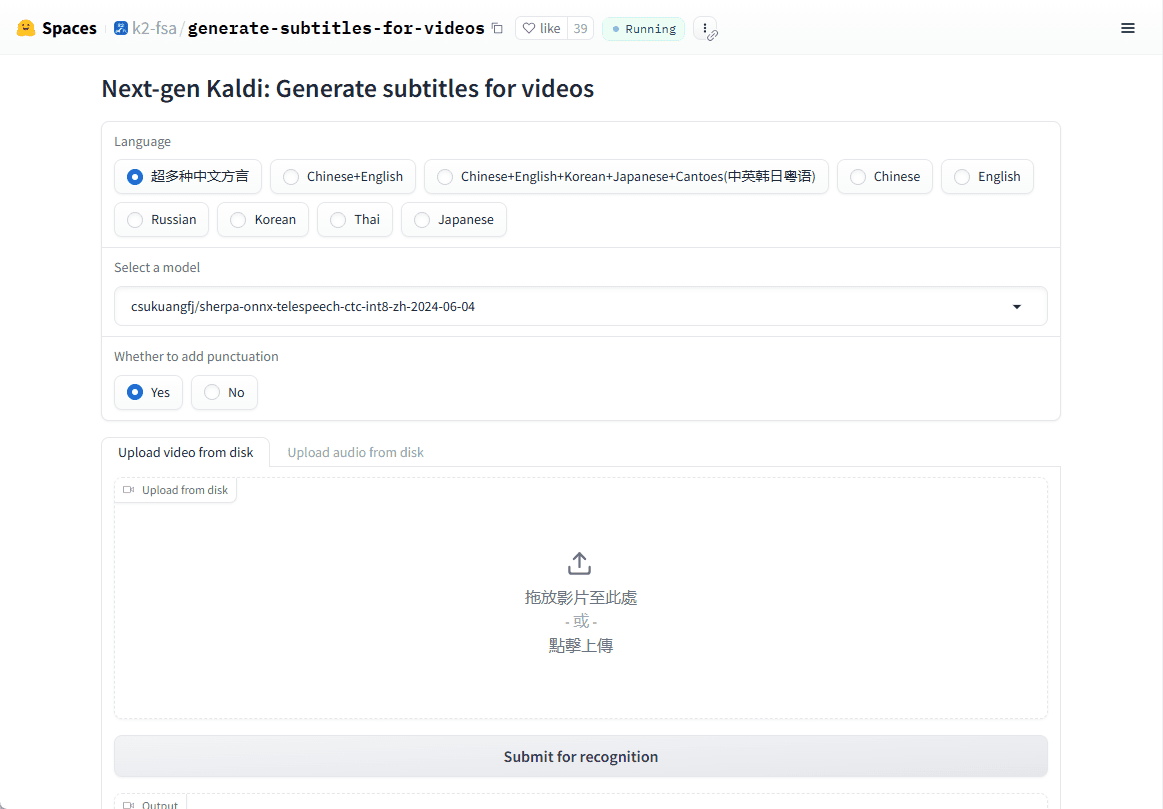

Online demo: https://huggingface.co/spaces/k2-fsa/generate-subtitles-for-videos

Feature List

- Offline Speech Recognition (ASR): Supports real-time speech-to-text in multiple languages, without the need for an Internet connection.

- Offline speech synthesis (TTS): Provides high-quality text-to-speech service, again without the need for internet.

- Voice Activity Detection (VAD): Real-time detection of voice activity, suitable for a variety of voice interaction scenarios.

- Multi-platform support: Available for Linux, macOS, Windows, Android, iOS, and many other operating systems.

- Cross-language model support: Supports advanced speech models such as Zipformer and Paraformer to improve the accuracy of recognition and synthesis.

- Low resource consumption: Optimized models run smoothly on resource-limited devices.

Usage Guide

Installation process

sherpa-onnx is open source: you can clone the source code from GitHub and compile it yourself, or use the pre-compiled binaries.

- Clone the repository:

git clone https://github.com/k2-fsa/sherpa-onnx.git

cd sherpa-onnx

- Compile the source code:

- For Linux and macOS users:

mkdir build

cd build

cmake -DCMAKE_BUILD_TYPE=Release ..

make -j4

- For Windows users, you may need to use Visual Studio or another compiler supported by CMake.

- Download pre-compiled files:

- Visit the GitHub release page (e.g. https://github.com/k2-fsa/sherpa-onnx/releases) and select the precompiled version for your operating system to download.
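Whether you build from source or unpack a release archive, it can help to confirm that the executables used later in this article are actually present. The following is a minimal sketch, assuming the binaries live in build/bin (the default argument) and using only the executable names that appear in this article. The function name check_binaries is hypothetical, and some executables may be absent depending on how the project was configured.

```shell
#!/usr/bin/env bash
# Report which of the executables referenced in this article exist
# (and are executable) in a given bin directory.
# Returns 0 if all are present, 1 if at least one is missing.
check_binaries() {
  local bin_dir="${1:-build/bin}"   # assumed default build output directory
  local missing=0
  local name
  for name in sherpa-onnx sherpa-onnx-microphone sherpa-onnx-offline-tts sherpa-onnx-vad; do
    if [ -x "$bin_dir/$name" ]; then
      echo "found:   $name"
    else
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

From the repository root, call it as check_binaries build/bin, or pass the directory where you unpacked a pre-compiled release.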

Usage

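Speech Recognition (ASR) Example:

The commands below assume you run them from the repository root, so that the path build/bin resolves correctly.

- Command-line mode:

Download a pre-trained model (e.g. sherpa-onnx-streaming-zipformer-bilingual-zh-en):

wget https://github.com/k2-fsa/sherpa-onnx/releases/download/asr-models/sherpa-onnx-streaming-zipformer-bilingual-zh-en.tar.bz2

tar xvf sherpa-onnx-streaming-zipformer-bilingual-zh-en.tar.bz2

Then run:

./build/bin/sherpa-onnx --tokens=sherpa-onnx-streaming-zipformer-bilingual-zh-en/tokens.txt --encoder=sherpa-onnx-streaming-zipformer-bilingual-zh-en/encoder.onnx --decoder=sherpa-onnx-streaming-zipformer-bilingual-zh-en/decoder.onnx --joiner=sherpa-onnx-streaming-zipformer-bilingual-zh-en/joiner.onnx your_audio.wav

A Zipformer transducer model consists of an encoder, a decoder, and a joiner, so all three must be passed. The archive and file names shown here are illustrative; check the release page and the extracted directory for the actual names.

- Real-time recognition:

Real-time speech recognition using a microphone:

./build/bin/sherpa-onnx-microphone --tokens=sherpa-onnx-streaming-zipformer-bilingual-zh-en/tokens.txt --encoder=sherpa-onnx-streaming-zipformer-bilingual-zh-en/encoder.onnx --decoder=sherpa-onnx-streaming-zipformer-bilingual-zh-en/decoder.onnx --joiner=sherpa-onnx-streaming-zipformer-bilingual-zh-en/joiner.onnx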
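Because the file names inside a model archive can differ from those shown above, a recognizer invocation often fails simply because a path is wrong. The sketch below checks that a list of expected files exists in the model directory before the recognizer is run. The function name require_model_files is hypothetical, and the list of files to check is supplied by the caller, so nothing about the archive layout is assumed.

```shell
#!/usr/bin/env bash
# Check that every listed file exists inside a model directory.
# Usage: require_model_files MODEL_DIR FILE [FILE...]
# Prints one line to stderr per missing file.
# Returns 0 when all files are present, 1 otherwise.
require_model_files() {
  local model_dir="$1"
  shift
  local status=0
  local f
  for f in "$@"; do
    if [ ! -f "$model_dir/$f" ]; then
      echo "missing model file: $model_dir/$f" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, require_model_files sherpa-onnx-streaming-zipformer-bilingual-zh-en tokens.txt encoder.onnx decoder.onnx can be chained with && in front of the recognition command, so the recognizer only starts when every file it needs is in place.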

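Speech Synthesis (TTS) Example:

- Download a pre-trained TTS model (e.g. a VITS model):

wget https://github.com/k2-fsa/sherpa-onnx/releases/download/tts-models/sherpa-onnx-tts-vits.tar.bz2

tar xvf sherpa-onnx-tts-vits.tar.bz2

- Run TTS:

./build/bin/sherpa-onnx-offline-tts --vits-model=sherpa-onnx-tts-vits/model.onnx --vits-tokens=sherpa-onnx-tts-vits/tokens.txt --output-filename=./output.wav "你好,世界"

The input text here is "Hello, world" in Chinese. The archive and file names are illustrative, and some VITS models need additional files such as a lexicon; check the release page and the extracted directory for what your model requires.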

Voice Activity Detection (VAD):

- Run the VAD:

./build/bin/sherpa-onnx-vad --model=path/to/vad_model.onnx your_audio.wav

Notes

- Model selection: Choose the model type (streaming or non-streaming) that fits your needs. Models differ in accuracy and in how well they keep up with real-time input.

- Hardware requirements: Although sherpa-onnx is designed for low resource consumption, complex models may need more computing power, especially on mobile devices.

- Language support: Pre-trained models may cover one or several languages, so make sure the model you choose supports your language.
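The streaming versus non-streaming choice also affects which executable you run. As a small sketch: the streaming recognizer used in this article is sherpa-onnx, while the non-streaming counterpart is assumed here to be sherpa-onnx-offline. That name does not appear in this article, so verify it against your build/bin directory. The function name asr_binary_for is hypothetical.

```shell
#!/usr/bin/env bash
# Map a model type to the name of the recognizer executable.
# "sherpa-onnx-offline" is an assumption not taken from this article;
# confirm it exists in your build before relying on it.
asr_binary_for() {
  case "$1" in
    streaming)     echo "sherpa-onnx" ;;
    non-streaming) echo "sherpa-onnx-offline" ;;
    *)
      echo "unknown model type: $1" >&2
      return 1
      ;;
  esac
}
```

A script can then build the path with "build/bin/$(asr_binary_for streaming)" and keep the model type in a single variable.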

With these steps and tips, you can start using sherpa-onnx for speech-related application development, whether it's a real-time dialog system or offline speech processing.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
