Cherry Studio, AnythingLLM, and Chatbox: a full review of three popular AI assistant tools

AI assistant tools: how to choose?

With AI technology developing rapidly and the range of available tools growing, many users want to know how to choose an AI assistant tool that can manage multiple models and support efficient collaboration. This article compares three popular AI assistant tools, Cherry Studio, AnythingLLM, and Chatbox, along three dimensions: functional positioning, unique advantages, and applicable scenarios. The goal is to help readers pick the tool that fits their needs and improve their work efficiency.

To show the character of each tool more concretely, here is a brief summary of the author's impressions after installing and trying all three:

- Cherry Studio: A friendly interface that is simple yet feature-rich, with support for building a local knowledge base. The built-in "agent" feature is particularly good, covering technical writing, DevOps engineering, viral copywriting, fitness coaching, and many other professional areas.

- AnythingLLM: A relatively professional interface, with a built-in embedding model and vector database, and a powerful local knowledge base.

- Chatbox: Focused on conversational AI, with a simple, easy-to-use interface. It supports reading documents but does not currently support a local knowledge base. The built-in "my partner" feature offers a small but practical set of assistants, such as "charting", "travel assistant", "quaqqa machine", and "Little Red Book Copy Generator".

1. Cherry Studio: "Commander in Chief" for multi-model collaboration

Cherry Studio is positioned as an "all-in-one commander" for multi-model collaboration, aiming to give users a one-stop solution for managing and applying large models.

Unique Advantages

- Flexible multi-model switching: Cherry Studio is compatible with major cloud models such as OpenAI, DeepSeek, and Gemini, and integrates with Ollama for local deployment, so users can call cloud and local models as needed to suit different scenarios.

- A large library of pre-configured assistants: More than 300 pre-configured assistants are available, covering diverse domains such as writing, programming, and design. Users can customize each assistant's role and functions, and can even compare the outputs of multiple models in the same conversation to quickly find the best answer.

- Powerful multimodal file handling: Supports processing text, PDF, images, and other file formats, and integrates WebDAV file management, code highlighting, and Mermaid chart visualization, which helps users work more efficiently on complex data-processing tasks.
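
The multi-model switching described above can be pictured as a small provider registry. The following is a minimal Python sketch, not Cherry Studio's actual implementation: it assumes each backend exposes an OpenAI-compatible `/chat/completions` endpoint (Ollama serves one under `http://localhost:11434/v1` by default), and the `Provider` class, the `build_chat_request` and `build_comparison` helpers, and the model names are hypothetical placeholders. It only builds the requests and sends nothing.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    base_url: str        # root of an OpenAI-compatible API
    model: str           # placeholder default model name
    needs_api_key: bool


# Illustrative registry; the model names are placeholders, not recommendations.
PROVIDERS = {
    "openai": Provider("openai", "https://api.openai.com/v1", "gpt-4o-mini", True),
    "ollama": Provider("ollama", "http://localhost:11434/v1", "llama3", False),
}


def build_chat_request(provider_key: str, prompt: str, api_key: str = "") -> dict:
    """Return the URL, headers, and JSON body for one chat request (nothing is sent)."""
    p = PROVIDERS[provider_key]
    headers = {"Content-Type": "application/json"}
    if p.needs_api_key:
        if not api_key:
            raise ValueError(f"{p.name} requires an API key")
        headers["Authorization"] = f"Bearer {api_key}"
    body = {"model": p.model, "messages": [{"role": "user", "content": prompt}]}
    return {
        "url": f"{p.base_url}/chat/completions",
        "headers": headers,
        "body": json.dumps(body),
    }


def build_comparison(prompt: str, keys: dict) -> list:
    """Build the same prompt for every provider so outputs can be compared side by side."""
    return [build_chat_request(k, prompt, keys.get(k, "")) for k in PROVIDERS]
```

Because both backends accept the same request shape, switching between a cloud model and a local one is just a matter of choosing a different registry entry, and a side-by-side comparison is the same prompt built once per entry.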

Applicable Scenarios

- Developers comparing and debugging across models: Developers can compare the code-generation or document-generation quality of different models side by side and choose the most suitable one.

- Content creators switching copywriting styles quickly: Helps copywriters, marketers, and other content creators quickly generate copy in different styles and spark new ideas.

- Enterprises balancing data privacy and performance: Meets organizations' dual needs for data security and high-performance computing by using local models to protect data privacy while using high-performance cloud models for complex tasks.
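
One way to picture the privacy and performance split in the last scenario is a simple routing rule that keeps sensitive prompts on a local model and sends everything else to the cloud. The Python sketch below is purely illustrative and is not a Cherry Studio feature: the `SENSITIVE_PATTERNS` list and the `choose_backend` function are hypothetical, and a real organization would define its own notion of what counts as sensitive.

```python
import re

# Hypothetical patterns an organization might treat as sensitive.
SENSITIVE_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),                            # email addresses
    re.compile(r"\b(?:confidential|internal only)\b", re.IGNORECASE),   # policy keywords
]


def choose_backend(prompt: str) -> str:
    """Return "local" when the prompt looks sensitive, otherwise "cloud"."""
    if any(pattern.search(prompt) for pattern in SENSITIVE_PATTERNS):
        return "local"
    return "cloud"
```

Under these assumed patterns, a prompt such as "Summarize this confidential contract" would be routed to the local model, while "Explain quicksort" would go to the cloud model.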

Suggestions for selection

Cherry Studio is especially suited for technical teams, users who need to multitask, and those who have high requirements for data privacy and functionality scalability.

For more information, please visit the Cherry Studio website: https://cherry-ai.com

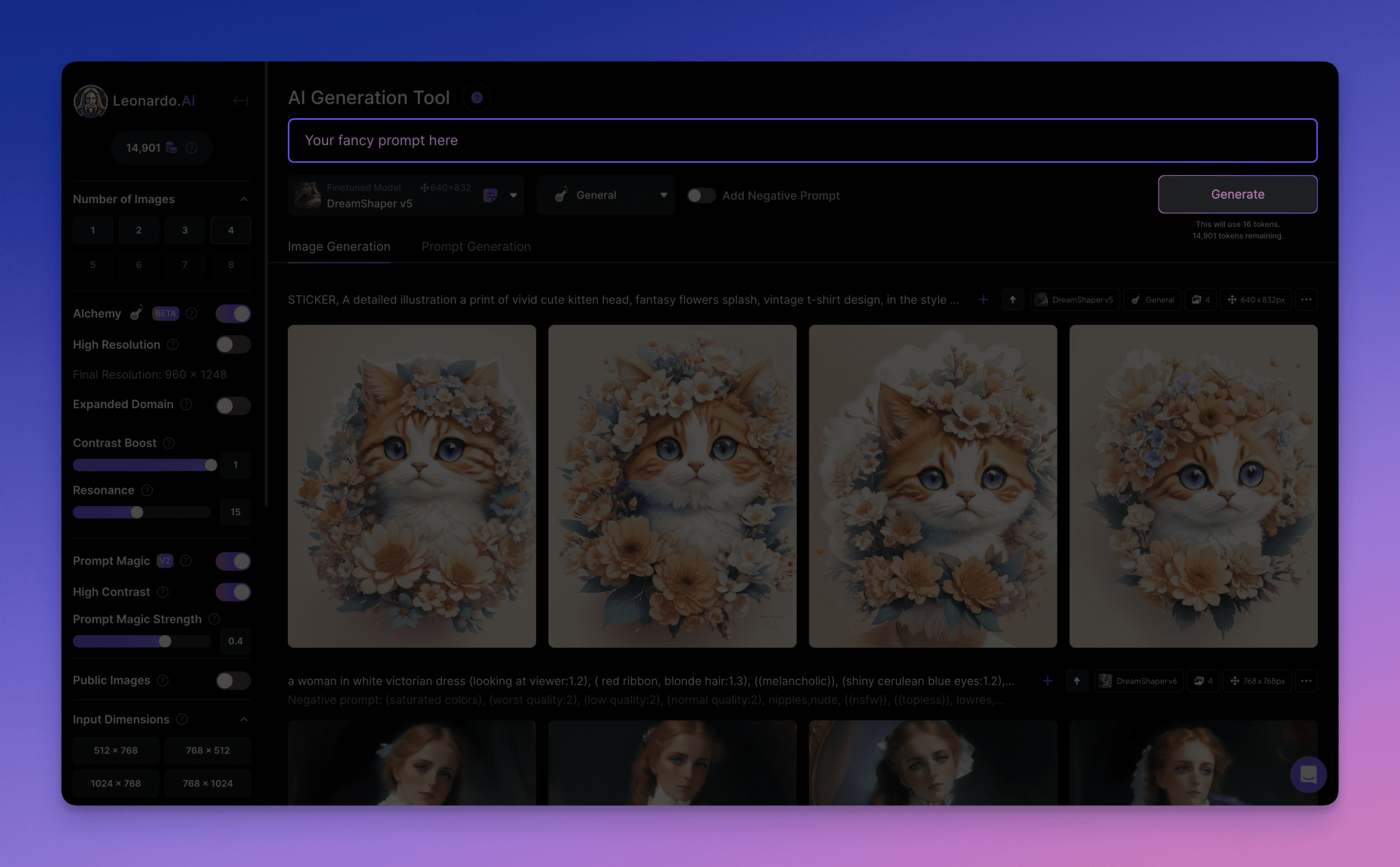

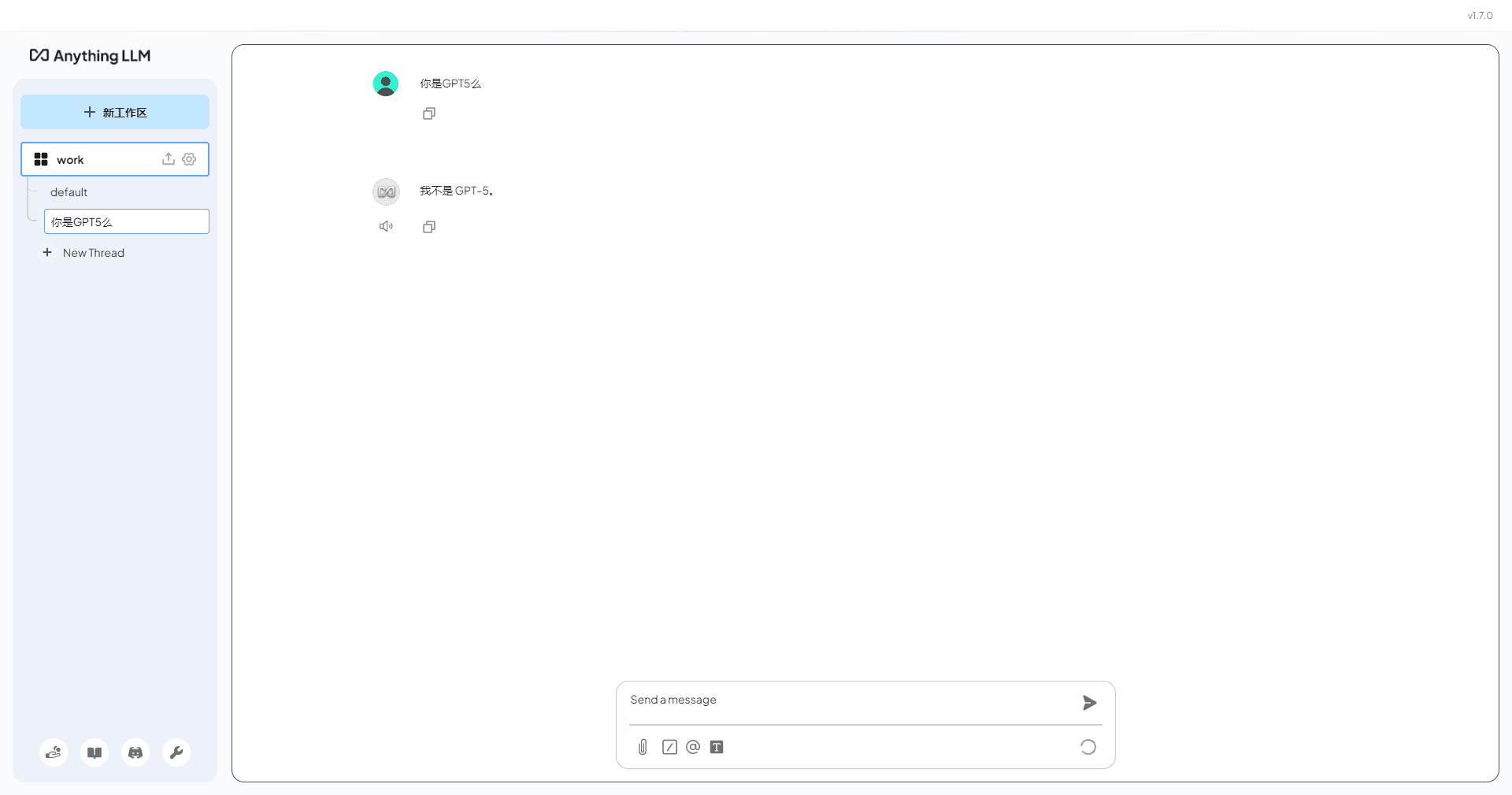

2. AnythingLLM: the "smart brain" of enterprise-level knowledge bases

AnythingLLM is positioned as the "intelligent brain" of enterprise-level knowledge bases, focusing on intelligent document processing and knowledge management.

Unique Advantages

- Intelligent document Q&A: AnythingLLM supports indexing documents in PDF, Word, and other formats. Using vector retrieval, it can quickly and accurately locate the relevant document fragments and, together with a large model, generate answers that are closely tied to that context, which makes document processing much more efficient.

- Flexible local deployment options: AnythingLLM connects to local inference engines such as Ollama without relying on cloud services, which protects sensitive enterprise data and meets the strict requirements of security-sensitive scenarios.

- Integrated retrieval and generation: AnythingLLM first retrieves relevant content from the knowledge base and then calls the large model to generate the answer. This retrieval-augmented generation (RAG) approach keeps answers grounded in the source documents, improving their accuracy and reducing the "hallucination" problem that a large model may show on its own.
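
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not AnythingLLM's implementation: a real deployment uses an embedding model and a vector database, whereas this sketch substitutes bag-of-words cosine similarity so that it runs with the standard library alone. The function names `tokenize`, `cosine`, `retrieve`, and `build_prompt` are hypothetical, and the final prompt would be handed to whichever large model is configured.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list, top_k: int = 2) -> list:
    """Step 1: rank knowledge-base chunks by similarity to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list, top_k: int = 2) -> str:
    """Step 2: place the retrieved chunks in the prompt that is sent to the model."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, top_k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )
```

Instructing the model to answer only from the retrieved context is what ties the generated answer back to the knowledge base, which is the mechanism behind the reduced hallucination described above.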

Applicable Scenarios

- Automated Q&A over internal document repositories: For example, quickly answering employee questions about the employee handbook or technical documentation, which reduces the load on human support staff.

- Literature summarization and information extraction for academic research: Helps researchers quickly review and understand large volumes of literature, extract key information, and improve research efficiency.

- Managing large collections of notes and eBooks for individual users: Helps individual users efficiently manage and make use of large numbers of notes, eBooks, and other knowledge resources.

Suggestions for selection

AnythingLLM is especially suited for enterprises, research organizations, and teams that need to build private knowledge bases with high document processing requirements.

For more information, please visit the AnythingLLM website: https://anythingllm.com

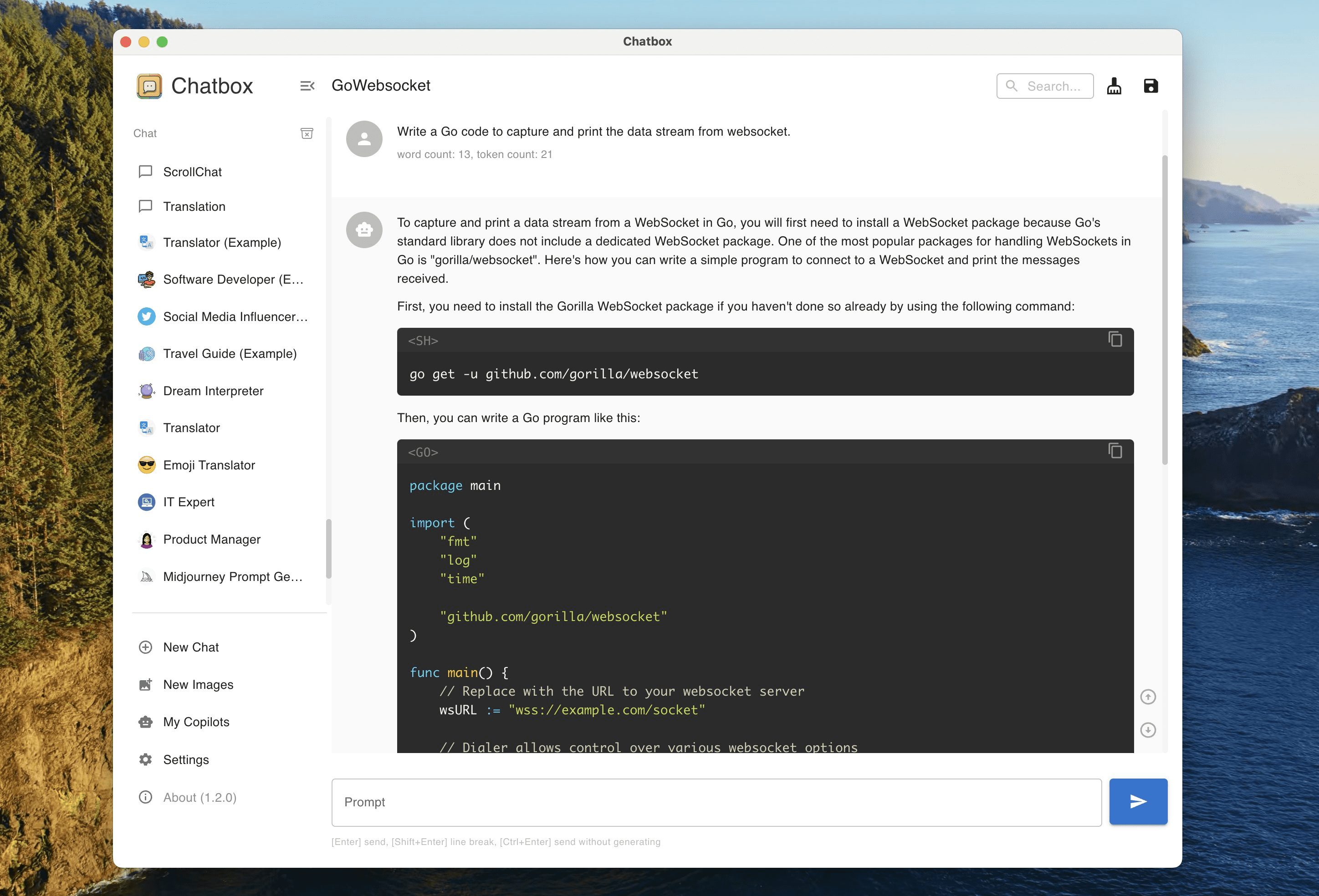

3. Chatbox: a minimalist "lightweight chat specialist"

Chatbox is a minimalist "lightweight chat specialist" that focuses on delivering a simple and efficient conversational AI experience.

Unique Advantages

- Zero-configuration quick start: Chatbox is ready to use right after installation, with no tedious configuration. It offers a simple, easy-to-understand interface similar to ChatGPT, which makes it a good fit for novice users who want to get started with large AI models.

- Local-model friendly: Chatbox supports local models well and is compatible with local inference tools such as Ollama, so users can easily run open-source models on their own devices and try cutting-edge technology without complicated network configuration.

- Lightweight yet capable: Chatbox keeps resource usage as low as possible while remaining fully functional. It runs smoothly even in CPU-only environments and does not require a powerful machine, so low-spec devices still get a good experience.

Applicable Scenarios

- Individual users quickly testing local models: Makes it easy for individual users to try out and test the output of various local large models, such as text generation and code generation.

- Developers doing ad hoc model debugging or generating code snippets: Helps developers debug models and generate code snippets quickly, improving development efficiency.

- A teaching and demonstration tool for education: The easy-to-use interface makes Chatbox well suited to teaching and demonstrating AI technology in the classroom, helping students understand and learn it more intuitively.

Suggestions for selection

Chatbox is especially suited for individual developers, educators, and users who only need basic conversational functionality.

For more information, please visit the Chatbox website: https://chatboxai.app

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
