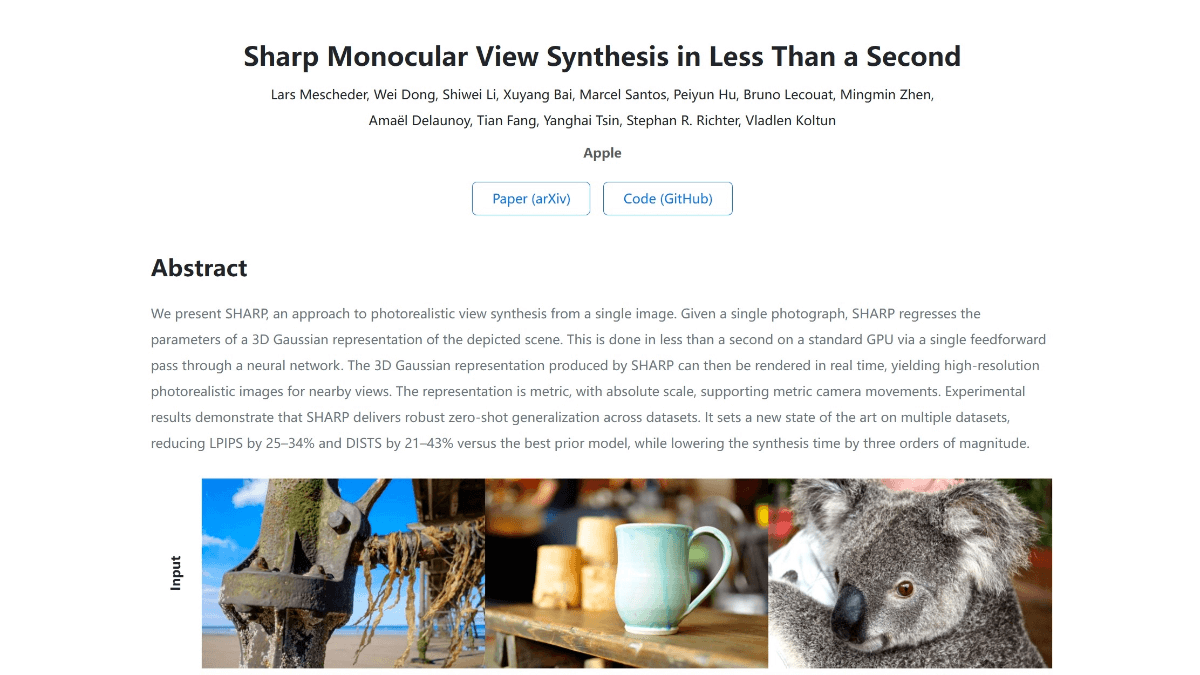

SHARP: Apple's open-source technology for synthesizing 3D scenes from a single image

What is SHARP?

SHARP (Sharp Monocular View Synthesis in Less Than a Second) is Apple's open-source monocular view synthesis technology. It generates a photorealistic 3D scene representation from a single photo in under a second. A neural network converts the input image into a 3D Gaussian representation. This representation can be rendered in real time to produce high-resolution, detailed images, and because it has absolute (metric) scale, it supports metric camera motion.
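The article only says that SHARP outputs "a 3D Gaussian representation." To make that concrete, here is a minimal sketch that assumes the per-primitive parameterization used in standard 3D Gaussian splatting: a center, per-axis scales, a rotation quaternion, an opacity, and a color. The covariance is built as Σ = R S Sᵀ Rᵀ. SHARP's exact attributes may differ, and the names `Gaussian3D`, `covariance`, and `density` are hypothetical illustrations, not SHARP's API.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Gaussian3D:
    """One primitive of a 3D Gaussian scene (hypothetical layout, modeled on 3D Gaussian splatting)."""
    mean: np.ndarray      # (3,) center position; metric units (meters) assumed
    scale: np.ndarray     # (3,) per-axis standard deviations
    rotation: np.ndarray  # (4,) quaternion (w, x, y, z); normalized before use
    opacity: float        # in [0, 1]
    color: np.ndarray     # (3,) RGB in [0, 1]


def quat_to_rotmat(q: np.ndarray) -> np.ndarray:
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def covariance(g: Gaussian3D) -> np.ndarray:
    """Sigma = R S S^T R^T, symmetric positive semi-definite by construction."""
    m = quat_to_rotmat(g.rotation) @ np.diag(g.scale)
    return m @ m.T


def density(g: Gaussian3D, p: np.ndarray) -> float:
    """Opacity-weighted, unnormalized Gaussian falloff at point p."""
    d = p - g.mean
    return float(g.opacity * np.exp(-0.5 * d @ np.linalg.inv(covariance(g)) @ d))


if __name__ == "__main__":
    g = Gaussian3D(mean=np.array([0.0, 0.0, 2.0]), scale=np.array([0.1, 0.05, 0.02]),
                   rotation=np.array([1.0, 0.0, 0.0, 0.0]), opacity=0.8,
                   color=np.array([0.9, 0.2, 0.2]))
    print(covariance(g))       # approximately diag(0.01, 0.0025, 0.0004)
    print(density(g, g.mean))  # equals the opacity at the center
```

Storing scale and rotation separately, instead of a raw covariance matrix, keeps Σ valid (positive semi-definite) no matter what values a network predicts. This is the main reason 3D Gaussian splatting uses this parameterization.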

Features of SHARP

- Fast synthesis: Generates a 3D scene representation from a single photo in under a second, a significant speed improvement.

- High-resolution rendering: Renders high-resolution, detail-rich images with realistic results.

- Real-time performance: The generated 3D representation can be rendered in real time, making it suitable for dynamic scenes and interactive applications.

- Metric camera motion support: The representation has absolute scale, so camera motion can be specified precisely in metric units.

- Strong generalization: Performs well on multiple datasets and generalizes well zero-shot.

- Open source: Apple provides the complete code and resources for developers to use and build on in further research.

SHARP's core strengths

- Very fast processing: Converts a single photo into a 3D scene in under a second, up to three orders of magnitude faster than traditional methods, enabling near-real-time 3D modeling.

- High-quality imaging: Generates 3D scenes with high resolution and fine texture and structural detail. On multiple benchmarks, its image quality is substantially ahead of the strongest previous models.

- Real-time rendering: Renders photorealistic images at more than 100 frames per second on standard GPUs, making it suitable for dynamic, interactive scenarios such as AR/VR applications.

- Metric accuracy: The generated 3D representation has absolute scale and supports metric camera motion, so it can accurately simulate real-world camera movement in applications that require high accuracy.

- Strong generalization: Trained on large amounts of data, SHARP generalizes zero-shot to different scenes and datasets, giving it wide applicability.

- Open-source support: Apple has open-sourced the complete code and related resources for SHARP, giving developers what they need to build applications quickly and develop the work further.
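Absolute scale matters for camera motion because parallax depends on true depth. For a pinhole camera with focal length f (in pixels) that moves sideways by t meters, a point at depth Z meters shifts by f·t/Z pixels. The following is a minimal illustration of that geometry, not SHARP code. The intrinsics and the names `project` and `translate_camera` are hypothetical, and the camera is assumed to translate without rotating.

```python
import numpy as np


def project(points_cam: np.ndarray, f_px: float, cx: float, cy: float) -> np.ndarray:
    """Pinhole projection of (N, 3) camera-space points in meters to (N, 2) pixel coordinates."""
    x, y, z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    return np.stack([f_px * x / z + cx, f_px * y / z + cy], axis=1)


def translate_camera(points_world: np.ndarray, cam_pos: np.ndarray) -> np.ndarray:
    """World-to-camera transform for a camera at cam_pos with identity rotation (pure shift)."""
    return points_world - cam_pos


if __name__ == "__main__":
    f_px, cx, cy = 1000.0, 640.0, 360.0  # hypothetical intrinsics
    pts = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 2.0], [0.0, 0.0, 4.0]])  # depths in meters
    step = np.array([0.1, 0.0, 0.0])  # move the camera 0.1 m to the right

    before = project(translate_camera(pts, np.zeros(3)), f_px, cx, cy)
    after = project(translate_camera(pts, step), f_px, cx, cy)
    print(after[:, 0] - before[:, 0])  # approximately [-100, -50, -25] pixels

    # A reconstruction at half the true scale gives the same 0.1 m move twice the parallax.
    wrong = project(translate_camera(pts * 0.5, step), f_px, cx, cy)
    print(wrong[:, 0] - before[:, 0])  # approximately [-200, -100, -50] pixels
```

With a scale-ambiguous reconstruction, a requested 10 cm camera move would produce visibly wrong motion. A metric representation lets such a move match what a real camera would record.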

Where can I find SHARP's official resources?

- Project website: https://apple.github.io/ml-sharp/

- GitHub repository: https://github.com/apple/ml-sharp

- arXiv technical paper: https://arxiv.org/pdf/2512.10685

Who SHARP is for

- 3D content creators: SHARP can quickly generate 3D scenes from a single image, which suits designers, artists, and developers who need to create 3D content efficiently.

- AR/VR developers: Real-time rendering and metric camera motion support the development of augmented and virtual reality applications with a better user experience.

- Game developers: SHARP can quickly generate 3D models of game scenes and improve development efficiency, especially for teams that need to iterate and prototype quickly.

- Computer vision researchers: The open-source code and resources give researchers an experimental platform for studying monocular view synthesis and 3D reconstruction.

- Spatial computing practitioners: Useful in scenarios that require accurate 3D modeling and spatial analysis, such as architectural visualization and interior design.

- Educators and students: A teaching tool that helps students understand and practice 3D modeling and computer vision techniques.

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
