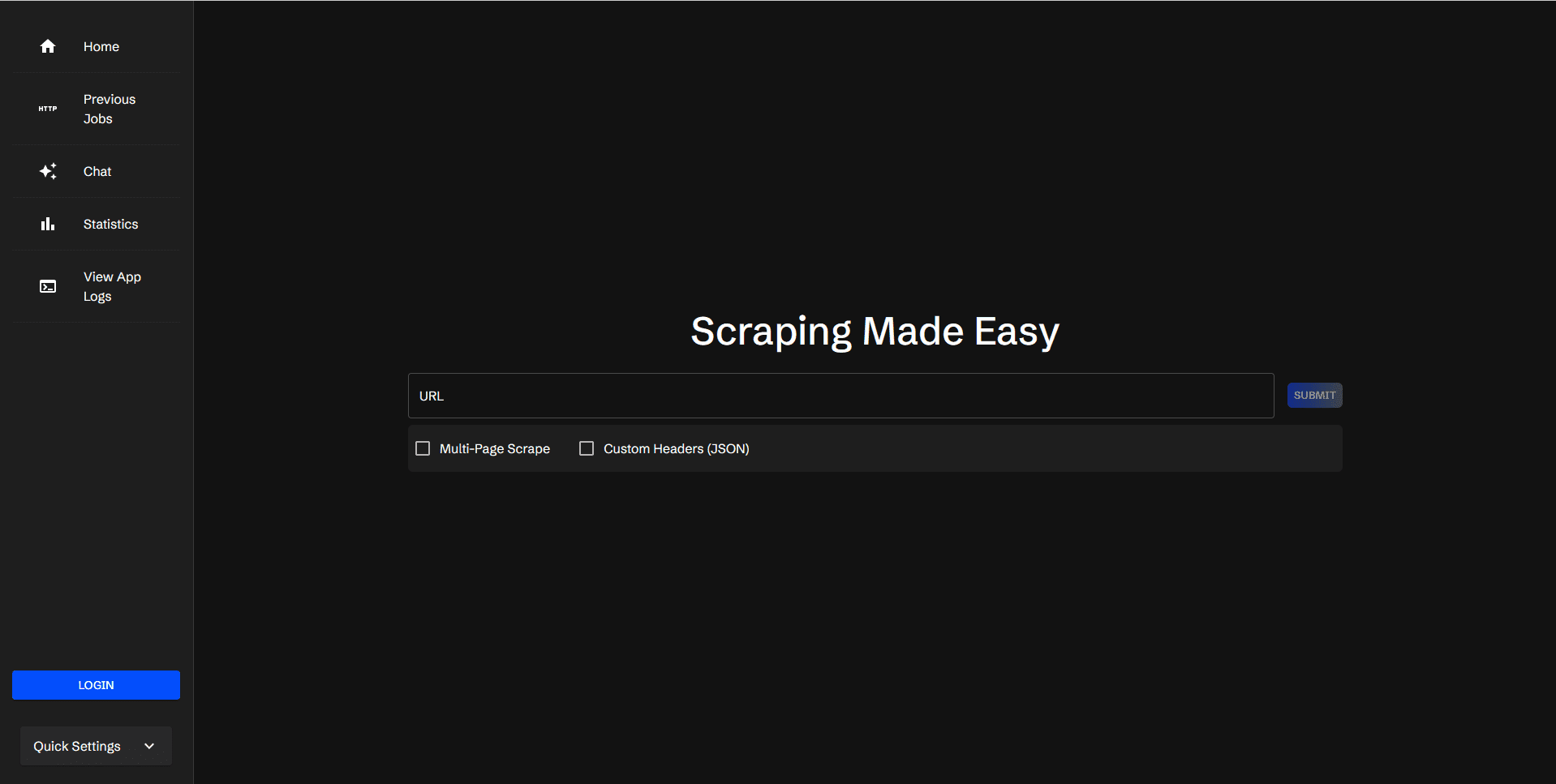

Scraperr: self-hosted web data scraping tool

General Introduction

Scraperr is a self-hosted web data scraping tool that lets users specify XPath elements to extract data from web pages. Users submit a URL along with the elements to scrape, and the results are displayed in a table that can be downloaded as a CSV file for use in Excel or other spreadsheet tools. Scraperr supports user login for managing scraping tasks, and also provides log viewing and task statistics.
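Here is a minimal Python sketch of the URL-plus-XPath workflow. Each named element is an XPath expression, the i-th match of every element forms one table row, and the rows are serialized as CSV. It is an illustration under stated assumptions, not Scraperr's code. It uses the standard library's `xml.etree.ElementTree`, which understands only a subset of XPath (no `text()`, and paths must start with `.`) and parses only well-formed markup. The sample page, the `ELEMENTS` mapping, and the `scrape`/`to_csv` helpers are all hypothetical. Real-world HTML generally needs an HTML-aware parser such as lxml.

```python
"""Hypothetical sketch of XPath-based extraction into a table and CSV.

Uses xml.etree.ElementTree (limited XPath, well-formed markup only);
this is NOT Scraperr's own implementation.
"""
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical sample page; it must be well-formed for ElementTree to parse it.
PAGE = """
<html><body>
  <div class="item"><h2>Widget</h2><span class="price">9.99</span></div>
  <div class="item"><h2>Gadget</h2><span class="price">19.50</span></div>
</body></html>
"""

# Hypothetical "elements": a column name paired with an XPath expression.
ELEMENTS = {
    "name": ".//div[@class='item']/h2",
    "price": ".//div[@class='item']/span[@class='price']",
}


def scrape(page: str, elements: dict[str, str]) -> list[dict[str, str]]:
    """Return one row per position, pairing the i-th match of each element."""
    root = ET.fromstring(page.strip())
    columns = {
        name: [(node.text or "").strip() for node in root.findall(xpath)]
        for name, xpath in elements.items()
    }
    # zip stops at the shortest column, so unmatched trailing values are dropped.
    return [dict(zip(columns, values)) for values in zip(*columns.values())]


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize rows to CSV text, as a results download might."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]) if rows else [])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = scrape(PAGE, ELEMENTS)
print(to_csv(rows))
```

Pairing matches by position is the simplest way to turn several independent XPath results into rows. It assumes every element matches exactly once per record.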

Feature List

- Submit and queue URLs for web crawling

- Add and manage crawl elements using XPath

- Crawl all pages under the same domain name

- Add custom JSON headers to outgoing requests

- Display the scraped results in a table

- Download a CSV file containing the results

- Rerun scraping tasks

- View the status of queued tasks

- Mark tasks as favorites and view favorite tasks

- User login/registration to organize tasks

- View application logs

- View task statistics

- AI integration that can include scrape results in the context of a conversation
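Restricting a crawl to one domain mostly comes down to resolving each discovered link and comparing its host with the starting URL's host. The sketch below assumes "same domain" means an exact host match, so `blog.example.com` would not count as `example.com`. Scraperr's actual rule is not documented in this article, and `same_domain_links` is a hypothetical helper.

```python
"""Hypothetical sketch: keep only links on the same host as the start URL."""
from urllib.parse import urldefrag, urljoin, urlparse


def same_domain_links(start_url: str, hrefs: list[str]) -> list[str]:
    """Resolve hrefs against start_url; keep unique http(s) links on the same host."""
    start_host = urlparse(start_url).netloc.lower()
    seen: set[str] = set()
    result: list[str] = []
    for href in hrefs:
        # Resolve relative links and drop #fragments so one page is not queued twice.
        absolute, _fragment = urldefrag(urljoin(start_url, href))
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc.lower() == start_host:
            if absolute not in seen:
                seen.add(absolute)
                result.append(absolute)
    return result


links = same_domain_links(
    "https://example.com/blog/",
    ["post-1", "/about#team", "https://example.com/about",
     "https://other.org/", "mailto:me@example.com"],
)
print(links)
```

A full crawler would repeat this for every fetched page and keep a shared set of visited URLs, so each page is scraped only once.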
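The custom-headers feature presumably takes a JSON object that maps header names to values. The hypothetical `build_request` helper below is a hedged sketch of how such headers could be applied to a request. It parses the JSON with the standard library and attaches the result to a `urllib.request.Request`. How Scraperr itself issues requests is outside the scope of this article.

```python
"""Hypothetical sketch: apply user-supplied JSON headers to an HTTP request."""
import json
import urllib.request


def build_request(url: str, headers_json: str) -> urllib.request.Request:
    """Parse a JSON object of headers and attach it to a GET request."""
    headers = json.loads(headers_json) if headers_json.strip() else {}
    if not isinstance(headers, dict):
        raise ValueError("headers must be a JSON object")
    # HTTP header values are text; coerce any non-string JSON values with str().
    return urllib.request.Request(url, headers={k: str(v) for k, v in headers.items()})


req = build_request(
    "https://example.com/",
    '{"User-Agent": "my-scraper/1.0", "Accept-Language": "en-US"}',
)
print(req.header_items())
# The request is only built here; urllib.request.urlopen(req) would send it.
```

Note that `urllib.request.Request` normalizes header names with `str.capitalize()`, so `Accept-Language` is stored as `Accept-language`.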

How to Use

Installation process

- Clone the repository:

```bash
git clone https://github.com/jaypyles/scraperr.git
cd scraperr
```

- Set environment variables and labels in the `docker-compose.yml` file (these entries go under the file's `services:` key), for example:

```yaml
scraperr:
  labels:
    - "traefik.enable=true"
    - "traefik.http.routers.scraperr.rule=Host(`localhost`)"  # change to your own domain if not running locally
    - "traefik.http.routers.scraperr.entrypoints=web"
scraperr_api:
  environment:
    - LOG_LEVEL=INFO
    - MONGODB_URI=mongodb://root:example@webscrape-mongo:27017  # MongoDB connection string
    - SECRET_KEY=your_secret_key  # placeholder: replace with your own random string
    - ALGORITHM=HS256  # algorithm used to sign authentication tokens
    - ACCESS_TOKEN_EXPIRE_MINUTES=600  # how long an access token stays valid
```

- Start the service:

```bash
docker-compose up -d
```
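`SECRET_KEY=your_secret_key` in the compose example is a placeholder. Given `ALGORITHM=HS256`, it is presumably the key used to sign authentication tokens, so a long random string is a sensible choice. One way to generate one, assuming Python 3.6+ is available on the host:

```python
"""Generate a random value for the SECRET_KEY placeholder (one possible approach)."""
import secrets

# 32 random bytes, hex-encoded, give a 64-character string.
secret_key = secrets.token_hex(32)
print(f"SECRET_KEY={secret_key}")
```

Paste the printed line into the `environment` list in place of the placeholder before running `docker-compose up -d`.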

Usage Process

- Submit a URL for scraping:
  - After logging in to Scraperr, go to the Scraping Tasks page.
  - Enter the URL to scrape and the corresponding XPath elements.
  - Once the task is submitted, the system automatically queues it and starts scraping.
- Manage crawl elements:
  - On the scraping task page, you can add, edit, or delete XPath elements.
  - Crawling all pages under the same domain is also supported.
- View crawl results:
  - When the crawl finishes, the results are displayed in a table.
  - Users can download a CSV file containing the results or re-run the task.
- Manage tasks:
  - Users can view the status of queued tasks, mark tasks as favorites, and view their favorite tasks.
  - The Task Statistics view shows statistics for the tasks that have run.
- View logs:
  - On the Application Logs page, users can view system logs with detailed information about scraping tasks.
- AI integration:
  - Scrape results can be included in the context of an AI conversation; Ollama and OpenAI are currently supported.
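The task lifecycle described above (submit, queue, run, re-run, favorite, statistics) can be modeled with a small in-memory queue. This is a hypothetical sketch for illustration only. The `Task` and `TaskQueue` classes, the status names, and the rule that a re-run creates a new task are assumptions. They are not Scraperr's actual data model or API.

```python
"""Hypothetical in-memory model of a scraping-task queue (not Scraperr's code)."""
from __future__ import annotations

from collections import Counter, deque
from dataclasses import dataclass, field
from itertools import count
from typing import Callable, Optional

_next_id = count(1)  # simple auto-incrementing task IDs


@dataclass
class Task:
    url: str
    elements: dict[str, str]  # column name -> XPath expression
    status: str = "queued"    # assumed lifecycle: queued -> running -> completed/failed
    favorite: bool = False
    id: int = field(default_factory=lambda: next(_next_id))


class TaskQueue:
    def __init__(self) -> None:
        self.tasks: dict[int, Task] = {}
        self.pending: deque[int] = deque()

    def submit(self, url: str, elements: dict[str, str]) -> Task:
        """Record a new task and place it at the back of the queue."""
        task = Task(url, dict(elements))
        self.tasks[task.id] = task
        self.pending.append(task.id)
        return task

    def rerun(self, task_id: int) -> Task:
        """Re-run by queuing a fresh task with the same URL and elements."""
        old = self.tasks[task_id]
        return self.submit(old.url, old.elements)

    def run_next(self, scrape: Callable[[Task], None]) -> Optional[Task]:
        """Pop the oldest queued task and run it with the given scraper function."""
        if not self.pending:
            return None
        task = self.tasks[self.pending.popleft()]
        task.status = "running"
        try:
            scrape(task)
            task.status = "completed"
        except Exception:
            task.status = "failed"
        return task

    def toggle_favorite(self, task_id: int) -> None:
        self.tasks[task_id].favorite = not self.tasks[task_id].favorite

    def favorites(self) -> list[Task]:
        return [t for t in self.tasks.values() if t.favorite]

    def statistics(self) -> Counter:
        """Count tasks per status, as a statistics view might."""
        return Counter(t.status for t in self.tasks.values())


queue = TaskQueue()
first = queue.submit("https://example.com/", {"title": ".//h1"})
queue.toggle_favorite(first.id)
queue.rerun(first.id)
queue.run_next(lambda task: None)  # stand-in scraper that always succeeds
print(queue.statistics())
```

Treating a re-run as a new task keeps the original results and history intact. That is one reasonable design, not necessarily the one Scraperr uses.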
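For the AI integration, including scrape results in a conversation amounts to serializing them into the prompt context. The sketch below assumes a simple approach: the results go into a system message as CSV text. It builds messages in the `role`/`content` shape accepted by both OpenAI's Chat Completions API and Ollama's `/api/chat` endpoint. `build_messages` is hypothetical, the prompt wording is arbitrary, and the code does not send anything. This article does not describe how Scraperr formats its context.

```python
"""Hypothetical sketch: put scrape results into a chat conversation's context."""
import csv
import io


def build_messages(rows: list[dict[str, str]], question: str) -> list[dict[str, str]]:
    """Return chat messages in the role/content shape used by OpenAI- and Ollama-style chat APIs."""
    buffer = io.StringIO()
    if rows:
        writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
        writer.writeheader()
        writer.writerows(rows)
    context = buffer.getvalue() or "(no results)"
    return [
        {"role": "system", "content": "Answer using only this scraped data (CSV):\n" + context},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    [{"name": "Widget", "price": "9.99"}, {"name": "Gadget", "price": "19.50"}],
    "Which item is cheaper?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Large result sets may exceed a model's context window, so truncating or summarizing rows before building the messages is a reasonable precaution.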

© Copyright Notice

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
