What is the purpose of enabling OpenRouter Transforms in Roo Cline?

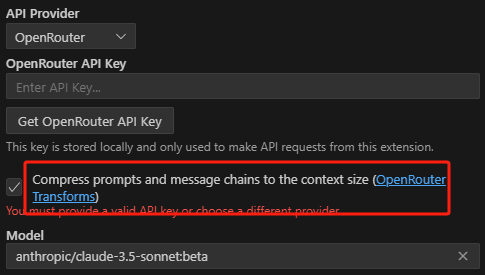

Open Roo Cline. When you set the model service provider to OpenRouter, you will see a setting for OpenRouter Transforms.

The setting points you to an explanation of what OpenRouter Transforms are. The short version: when the context is too long, the transform removes the middle part before sending it to the model.

The rest of this article explains OpenRouter's transforms parameter and the role of the middle-out transform in detail.

Background: model context length limits

First, you need to understand one concept: the model context length limit.

- Large Language Models (LLMs) do not have unlimited memory of previous conversations when processing text.

- Each model has a maximum context length (think of it as a "memory" window) beyond which the model cannot remember previous inputs.

- For example, a model may have a context length of 8k tokens (about 6,000 words). If you enter more than 8k tokens of text, the model cannot take in all of it: the earliest part of the text may be lost, resulting in a lower-quality response.
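To make the limit concrete, here is a minimal sketch that estimates whether a conversation exceeds a context length. It assumes the rough ratio implied above (8k tokens for about 6,000 words, or 0.75 words per token); real tokenizers differ by model and language, and the function names are hypothetical.

```python
# Rule of thumb taken from the example above: 8,000 tokens ~ 6,000 words.
# This is only an approximation; a model's real tokenizer will give other counts.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate a token count from the number of whitespace-separated words."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def exceeds_context(messages: list[dict], context_limit: int = 8000) -> bool:
    """Return True if the estimated size of all messages exceeds the limit."""
    total = sum(estimate_tokens(m["content"]) for m in messages)
    return total > context_limit


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "word " * 7000},  # roughly 9,300 estimated tokens
]
print(exceeds_context(history))
```

A check like this only tells you that the prompt is too long; what to do about it is the subject of the next section.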

OpenRouter's solution: transforms

To solve this problem, OpenRouter provides a transforms parameter that preprocesses the prompts sent to the model. Its main purposes are:

- Handle prompts that exceed the context length: If your prompt (including the dialog history) is too long for the model's context length, OpenRouter can compress or truncate it to fit within the model's limit via the transforms parameter.

- Customize prompt handling: transforms is not limited to length limits and may in the future provide more ways of processing prompts, for example:

  - Automatically translating prompts

  - Adding specific instructions

The middle-out transform

middle-out is the only transform currently available in transforms. It compresses or removes messages in the middle of the prompt to fit the context length limit of the model.

Specifically, it works as follows:

- Detect excessive length: middle-out checks whether the total length of your prompt (or list of messages) exceeds the context length of the model.

- Compress the middle section: If it does, middle-out prioritizes compressing or deleting messages in the middle of the prompt. This is based on the observation that LLMs tend to pay more attention to the beginning and the end of a text and less to the middle. Sacrificing messages from the middle therefore usually has the least impact on the quality of the model's response.

- Retain the head and tail: middle-out tries to keep the beginning and the end of the prompt as intact as possible, because these parts usually contain important information such as:

  - The original instructions

  - Recent user input

- Reduce the number of messages: In addition to compressing length, middle-out also reduces the number of messages in the messages list, because some models (such as Anthropic's Claude) also limit the number of messages.
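The steps above can be sketched roughly as follows. This is an illustration of the idea only, not OpenRouter's actual implementation: it simply deletes whole messages from the middle (no compression), and the function name, the `count_tokens` callback, and the `max_messages` parameter are all assumptions made for the example.

```python
def middle_out(messages, max_tokens, count_tokens, max_messages=None):
    """Drop messages from the middle until the list fits both limits.

    Illustrative only: the first and the last message are always kept.
    """
    kept = list(messages)

    def too_big(msgs):
        over_tokens = sum(count_tokens(m["content"]) for m in msgs) > max_tokens
        over_count = max_messages is not None and len(msgs) > max_messages
        return over_tokens or over_count

    # Remove the middle-most message repeatedly; stop once only head and tail remain.
    while too_big(kept) and len(kept) > 2:
        del kept[len(kept) // 2]
    return kept


# Six messages of 10 "tokens" each (words stand in for tokens here).
demo = [
    {"role": "user" if i % 2 == 0 else "assistant",
     "content": f"message {i} " + "x " * 8}
    for i in range(6)
]
word_count = lambda text: len(text.split())

trimmed = middle_out(demo, max_tokens=40, count_tokens=word_count)
print([m["content"].split()[1] for m in trimmed])
```

With a budget of 40, the two middle messages are removed and the first two and last two survive, which mirrors the "retain the head and tail" behavior described above.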

Default behavior and disabling middle-out

- Enabled by default: If you use an OpenRouter endpoint with a context length less than or equal to 8k, the middle-out transform is enabled by default. That is, if your prompt exceeds the context length, OpenRouter will automatically compress it for you.

- Explicitly disabled: If you don't want OpenRouter to automatically compress your prompts, you can set transforms: [] in the request body to indicate that no transformations are used.
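As a sketch of what this looks like in a request body, the helper below builds the JSON payload for the three cases: field omitted (default behavior applies), transforms set to an empty list (disabled), and middle-out requested explicitly. The helper name and the model ID are placeholders for illustration; no request is actually sent.

```python
import json


def build_body(model, messages, transforms=None):
    """Build a chat-completion request body.

    transforms=None            -> field omitted, so the default behavior applies
    transforms=[]              -> no transforms are used
    transforms=["middle-out"]  -> middle-out is requested explicitly
    """
    body = {"model": model, "messages": messages}
    if transforms is not None:
        body["transforms"] = list(transforms)
    return body


messages = [{"role": "user", "content": "Hello"}]

# "example/model-id" is a placeholder, not a real model name.
disabled = build_body("example/model-id", messages, transforms=[])
enabled = build_body("example/model-id", messages, transforms=["middle-out"])

print(json.dumps(disabled, indent=2))
```

Note the difference between omitting the field and sending an empty list: only the empty list states explicitly that no transforms should be applied.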

When to use middle-out

- The dialog history is too long: When a conversation runs for many rounds, the accumulated history may exceed the context length of the model. middle-out can help you keep the conversation coherent.

- Small model context length: When you use a model with a small context length, middle-out allows you to enter longer text without causing the model to "forget" the earlier input.

- Limit loss of information: Although middle-out removes some information from the middle, it is designed to retain as much important information as possible and to minimize the impact of what is lost.

When not to use middle-out

- Customized processing: If you wish to have full control over how prompts are handled, or use a custom compression algorithm, you can choose not to use middle-out and then handle the length of the prompts yourself.

- Full context is required: In some scenarios the model needs the full context, and removing information from the middle would cause serious problems. middle-out is not appropriate in these cases.
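For the customized-processing case above, one possible alternative to middle-out is to trim the history yourself before sending it. The sketch below keeps every system message plus the newest messages that fit a token budget; it is just one strategy among many, and the function name and the `count_tokens` callback are assumptions for the example.

```python
def keep_recent(messages, max_tokens, count_tokens):
    """Keep all system messages plus the newest other messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # Budget left for the conversation after the system messages are counted.
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept = []
    # Walk backwards from the newest message; stop at the first one that does not fit.
    for m in reversed(rest):
        cost = count_tokens(m["content"])
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + kept[::-1]


count_words = lambda text: len(text.split())  # words stand in for tokens
chat = [{"role": "system", "content": "be brief"}] + [
    {"role": "user", "content": f"turn {i} " + "y " * 8} for i in range(4)
]

trimmed_chat = keep_recent(chat, max_tokens=25, count_tokens=count_words)
print([m["content"][:6] for m in trimmed_chat])
```

Unlike middle-out, this drops the oldest turns rather than the middle ones. A list trimmed this way would then be sent with transforms set to [] so that no further compression is applied.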

Summary

The main purposes of transforms and middle-out are:

- Simplify handling of over-length prompts: Without having to manage and truncate prompts manually, you can let the model handle longer text and dialog histories.

- Improve the user experience: Especially for models with small context lengths, users can take advantage of the model's capabilities more easily.

- Optimize the quality of model responses: Retain as much important information as possible within a limited context length to minimize model forgetting.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
