Ring-1T-preview - Ant Group's open-source trillion-parameter large model

What is Ring-1T-preview?

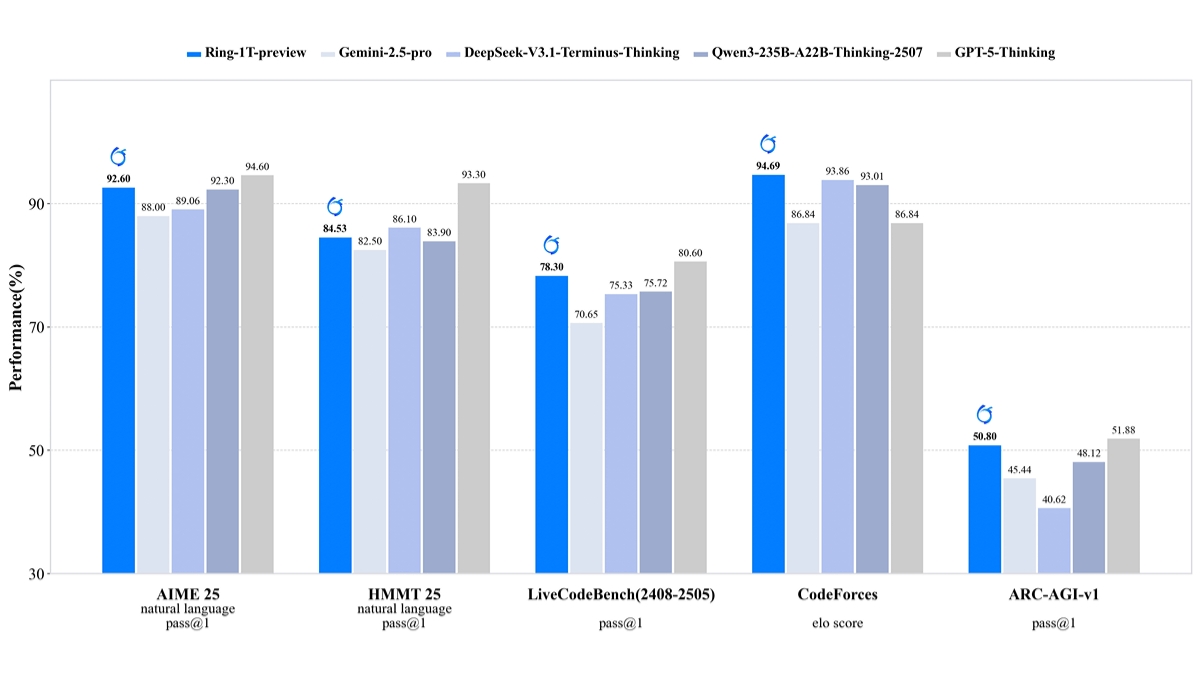

Ring-1T-preview is a trillion-parameter large language model open-sourced by Ant Group. It is built on the Ling 2.0 MoE (mixture-of-experts) architecture, pre-trained on a 20T corpus, and then trained for reasoning on ASystem, Ant Group's self-developed reinforcement learning system. It performs well in natural language reasoning, scoring 92.6 on the AIME 2025 test, close to GPT-5's 94.6. On the IMO 2025 test, it solved Problem 3 in a single attempt and gave partially correct answers to the other problems, demonstrating high-level reasoning ability.

Features of Ring-1T-preview

- Powerful natural language reasoning: Shows reasoning capabilities close to, and in some tests beyond, existing state-of-the-art models. For example, it scores 92.6 on the AIME 2025 test, close to the GPT-5 score of 94.6.

- Efficient code generation and optimization: Performs strongly on programming competition tasks, scoring 94.69 on the CodeForces test, ahead of GPT-5.

- Reasoning for advanced math competitions: Solved Problem 3 of the IMO 2025 test in a single attempt and gave partially correct answers to the other problems.

- Open-source collaboration and community support: The code and weights are fully open-source and published on the Hugging Face platform, which makes it easier for the community to explore the model, give feedback, and speed up its iteration.

- Continuous training and optimization: The model is still being trained. Known issues such as language mixing and repetitive reasoning are being worked on to improve its stability and accuracy.

Core Benefits of Ring-1T-preview

- Powerful reasoning: Performs well on natural language reasoning tasks. Its score of 92.6 on the AIME 2025 test is close to the GPT-5 score of 94.6, which points to strong mathematical reasoning skills.

- Efficient code generation: Scores 94.69 on the CodeForces test, ahead of GPT-5, showing strong code generation and optimization capabilities on programming competition tasks.

- Advanced mathematical reasoning: Solved Problem 3 of the IMO 2025 test in a single attempt and gave partially correct answers to the other problems.

What is Ring-1T-preview's official website?

- Hugging Face model repository: https://huggingface.co/inclusionAI/Ring-1T-preview
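Since the weights are published on Hugging Face, loading them would typically go through the `transformers` library. The following is a minimal sketch, not an official usage guide: it assumes `transformers`, `torch`, and `accelerate` are installed, that the repository ships a chat template, and that `trust_remote_code=True` is required for the Ling 2.0 MoE architecture. The helper names `build_messages` and `generate_answer` are hypothetical. A trillion-parameter model needs a multi-GPU cluster, so treat this as an illustration of the call pattern rather than something to run on a workstation.

```python
MODEL_ID = "inclusionAI/Ring-1T-preview"  # repository name taken from the link above


def build_messages(question: str) -> list[dict]:
    """Wrap a question in the role/content format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Hypothetical helper: load the model and generate one reply."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: the repository needs custom modeling code (trust_remote_code).
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",   # use the dtype stored in the checkpoint
        device_map="auto",    # shard across available devices (needs accelerate)
        trust_remote_code=True,
    )

    # Render the conversation to a prompt string, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    reply_ids = output_ids[0][inputs.input_ids.shape[-1]:]
    return tokenizer.decode(reply_ids, skip_special_tokens=True)
```

Calling `generate_answer("Prove that the sum of two even integers is even.")` would download the full checkpoint on first use, so check the model card for the recommended serving setup before trying it.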

Who is Ring-1T-preview for?

- AI researchers: Can use the open-source code and weights for academic research, exploring the potential of large models and directions for improvement.

- Developers: Can build natural language processing and code generation applications on it to improve development efficiency and application performance.

- Educators: Can use it in teaching and research to help students and researchers better understand and apply large-model techniques.

- Enterprises and innovation teams: Can use it for in-house project development and innovation to drive business growth and technology upgrades.

- Math and programming contest participants: Can use it to assist with problem solving and strategy optimization to improve competition performance.

- Open-source community members: Can take part in improving and optimizing the model and jointly advance large-model technology.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
