RIG (Retrieval Interleaved Generation): a retrieve-while-writing strategy suited to querying real-time data

Technical core: Retrieval Interleaved Generation (RIG)

- What is RIG?

RIG is a generation method designed to address hallucination when large language models handle statistical data. Whereas a conventional model may produce inaccurate numbers or facts out of thin air, RIG aims to keep the data trustworthy by inserting queries to an external data source into the generation process.

- Working principle:

- When the model receives a question that requires statistics, it dynamically calls Data Commons (a public data knowledge base powered by Google) as it generates the answer.

- Each query is embedded inline in the natural-language output together with its value, for example: [DC("What is the population of France?") --> "67 million"].

- This "interleaving of retrieval and generation" allows the model to maintain linguistic fluency while providing validated statistical information.
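As a minimal sketch of how a downstream tool might read this inline format, the snippet below pulls the queries and values out of a generated string with a regular expression. The pattern is inferred only from the example above, and the helper names `extract_dc_calls` and `strip_dc_calls` are hypothetical, not part of any DataGemma or Data Commons library.

```python
import re

# Matches annotations of the form [DC("question") --> "value"].
# Assumption: neither the question nor the value contains a double quote.
DC_PATTERN = re.compile(
    r'\[DC\("(?P<query>[^"]*)"\)\s*-->\s*"(?P<value>[^"]*)"\]'
)


def extract_dc_calls(text):
    """Return (query, llm_value) pairs for every DC annotation in the text."""
    return [(m.group("query"), m.group("value")) for m in DC_PATTERN.finditer(text)]


def strip_dc_calls(text):
    """Replace each annotation with the model's own value to recover plain prose."""
    return DC_PATTERN.sub(lambda m: m.group("value"), text)


sample = 'France has about [DC("What is the population of France?") --> "67 million"] people.'
print(extract_dc_calls(sample))
print(strip_dc_calls(sample))
```

Keeping extraction and stripping separate lets the same parsed pairs drive both the retrieval step and the plain-text fallback.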

Model Details

- Base model: Gemma 2 (27B-parameter version), an efficient open-source language model designed for research and experimentation.

- Fine-tuning goal: Specialized training teaches the model to recognize when it needs to query Data Commons and to integrate that data seamlessly during generation.

- Inputs and outputs:

- Input: Any text prompt (such as a question or statement).

- Output: English text that may contain embedded Data Commons query results.

- Language support: Primarily English. (The "it" suffix in the model name most likely stands for "instruction-tuned"; the model page does not specify the range of supported languages.)

Application Scenarios

- Target users: Academic researchers and data scientists.

- Use cases: Scenarios that require accurate statistics, such as "the population of a certain country" or "global CO2 emissions in a certain year".

- Limitations: This is an early release intended for trusted testers only; it is not recommended for production or commercial use.

Model: https://huggingface.co/google/datagemma-rig-27b-it

Paper: https://arxiv.org/abs/2409.13741

RIG Implementation Process

RIG is an approach that interleaves retrieval and generation, aiming to improve the accuracy of generated results by having the LLM generate natural language queries that retrieve data from Data Commons. The detailed implementation steps are as follows:

1. Model Fine-tuning

Goal: Teach the LLM to generate natural language queries that can be used to retrieve statistics from Data Commons.

Steps:

- Initial query and generation: When the LLM receives a statistical query, it usually generates text containing a numerical answer. We refer to this numerical answer as the LLM-generated statistical value (LLM-SV). For example, for the query "What is the total population of California?", the LLM might generate "The total population of California is about 39 million."

- Identify relevant data: From the text generated by the LLM, we need to identify the most relevant data in the Data Commons database so that it can be offered to users as a fact-checking mechanism. We refer to this retrieved value as the Data Commons statistical value (DC-SV).

- Generate natural language queries: To accomplish this, we fine-tune the LLM so that, alongside each LLM-SV, it generates a natural language query describing that LLM-SV. This query is then used to retrieve data from Data Commons.

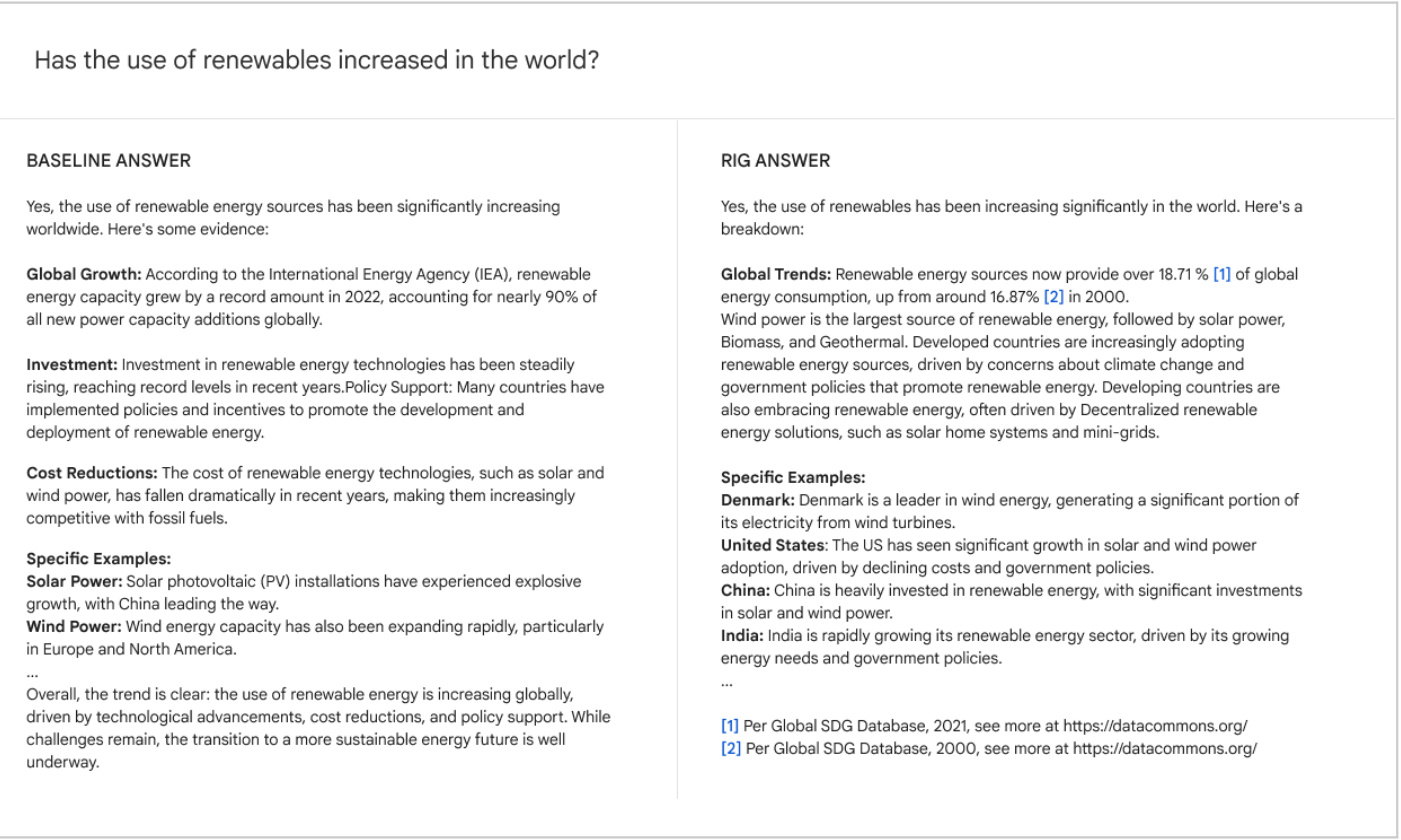

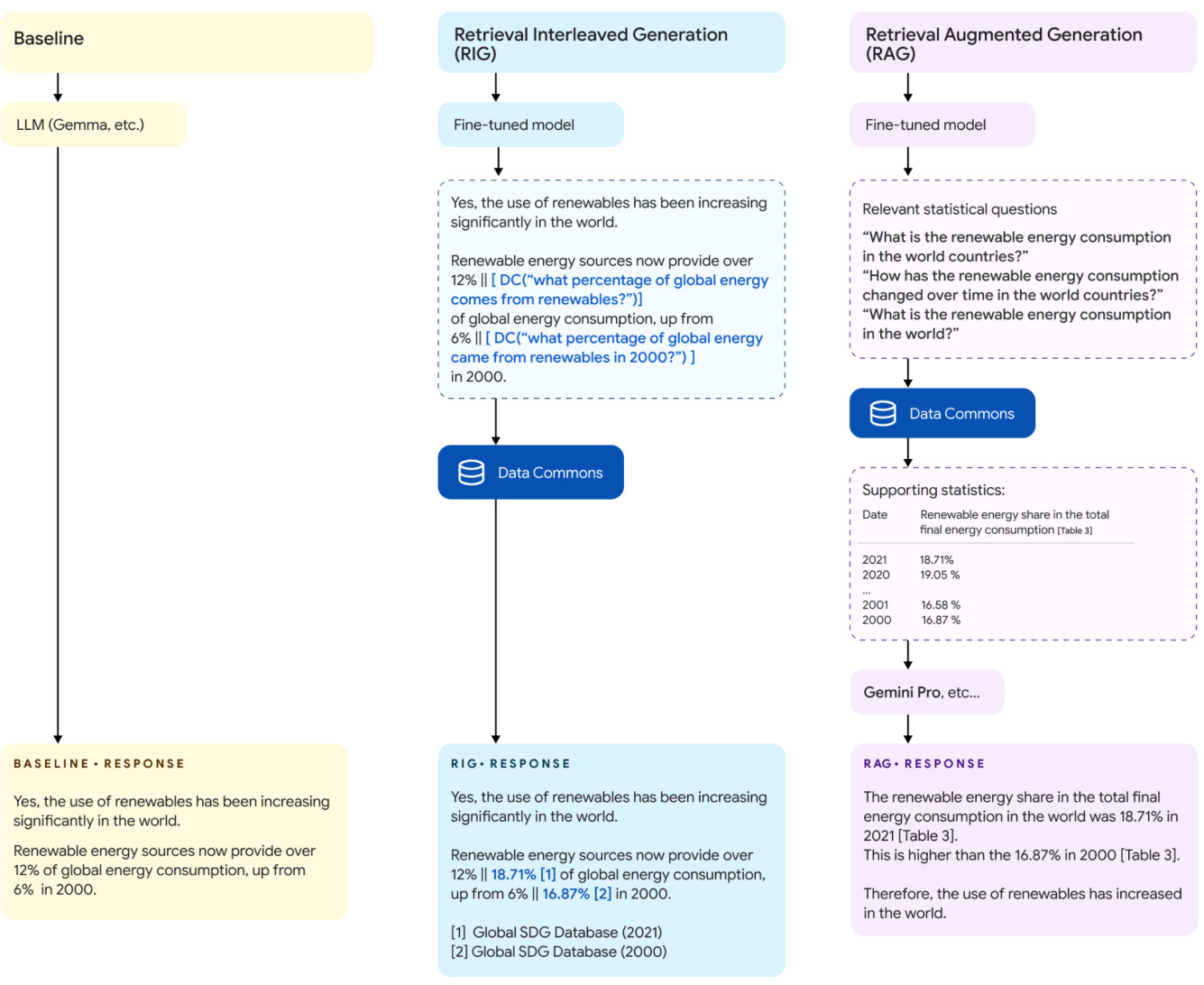

Figure 1: Comparison of answers to a query from the baseline Gemma models (Gemma 7B IT and Gemma 2 27B IT), which are not connected to Data Commons, and from Retrieval Interleaved Generation (RIG). The RIG model generates not only the statistical values but also the queries used for retrieval.

- Training dataset: We fine-tune on an instruction-response dataset containing about 700 user queries. For each query, we select the base-model responses that contain statistics (about 400 examples) and use a more powerful LLM (e.g., Gemini 1.5 Pro) to add natural language Data Commons calls.

Example:

- Query: Tell me one statistic about California, San Francisco, Alabama and the US.

- Original response:

- California is 1st as the nation's most populous state, with about 39 million people in 2020.

- In San Francisco, the diabetes rate is 9.2 cases per 10000 people.

- ...

- Fine-tuned response:

- California is 1st as the nation's most populous state, with about [DC("what was the population of California in 2020?") --> "39 million"] people.

- In San Francisco, the diabetes rate is [DC("what is the prevalence of diabetes in San Francisco?") --> "9.2 cases per 10000 people"].

- ...
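To make the target format concrete, here is a minimal sketch that builds one fine-tuning record by wrapping a statistic in a `DC(...)` call. In the process described above a stronger LLM produces these annotations; this snippet only illustrates the shape of the result. The function `annotate` and the `prompt`/`response` field names are assumptions for illustration.

```python
def annotate(response, annotations):
    """Wrap each LLM-generated value in a DC(...) call.

    annotations: list of (value_text, natural_language_query) pairs.
    Only the first occurrence of each value is wrapped. This simple
    approach assumes a value never appears inside an earlier query.
    """
    for value, query in annotations:
        if value not in response:
            raise ValueError(f"value not found in response: {value!r}")
        wrapped = f'[DC("{query}") --> "{value}"]'
        response = response.replace(value, wrapped, 1)
    return response


raw = "In San Francisco, the diabetes rate is 9.2 cases per 10000 people."
record = {
    # Hypothetical field names for an instruction-response training record.
    "prompt": "Tell me one statistic about California, San Francisco, Alabama and the US.",
    "response": annotate(
        raw,
        [("9.2 cases per 10000 people",
          "what is the prevalence of diabetes in San Francisco?")],
    ),
}
print(record["response"])
```

The printed response reproduces the San Francisco line from the fine-tuned example above.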

2. Query Conversion

Goal: Convert the natural language queries generated by the LLM into structured queries for retrieving data from Data Commons.

Steps:

- Query decomposition: Break the natural language query into the following components:

- Statistical variables or topics: e.g., "unemployment rate", "demographics".

- Places: e.g., "California".

- Attributes: e.g., "ranking", "comparison", "rate of change".

- Mapping and identification: Map these components to their corresponding IDs in Data Commons, for example by using an embedding-based semantic search index to identify statistical variables and string-based named entity recognition to identify places.

- Category and template matching: Classify the query into one of a fixed set of query templates based on the identified components. Examples:

- How many XX in YY

- What is the correlation between XX and YY across ZZ in AA

- Which XX in YY have the highest number of ZZ

- What are the most significant XX in YY

Figure 2: Comparison of the baseline, RIG, and RAG methods for generating responses with statistics. The baseline approach reports statistics directly without providing evidence, while RIG and RAG use Data Commons to provide authoritative data. The RIG method interleaves the generated statistics with natural language questions suitable for retrieval from Data Commons.

- Query execution: Call the Data Commons Structured Data API to retrieve the data, based on the query template and the IDs of the variables and places.
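The conversion steps above can be sketched as a toy pipeline. This is not the Data Commons implementation: token overlap stands in for the embedding-based semantic search, a small dictionary stands in for place recognition, keyword rules stand in for template classification, and no API call is made. The variable and place IDs are illustrative assumptions modeled on Data Commons naming and should be verified against Data Commons before use.

```python
import re

# Toy stand-ins for the Data Commons indexes; IDs are illustrative assumptions.
VARIABLES = {
    "Count_Person": "population number of people",
    "UnemploymentRate_Person": "unemployment rate",
}
PLACES = {"california": "geoId/06", "france": "country/FRA"}


def tokens(text):
    """Lowercase word set used for the overlap score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def match_variable(query):
    """Return the variable ID whose description best overlaps the query.

    Jaccard overlap is a simple stand-in for embedding similarity.
    Returns None when no description shares a word with the query.
    """
    q = tokens(query)
    best_id, best_score = None, 0.0
    for var_id, description in VARIABLES.items():
        d = tokens(description)
        score = len(q & d) / len(q | d)
        if score > best_score:
            best_id, best_score = var_id, score
    return best_id


def match_places(query):
    """String-based place lookup: return IDs of all known names in the query."""
    lowered = query.lower()
    return [place_id for name, place_id in PLACES.items() if name in lowered]


def match_template(query):
    """Assign the query to one of a small fixed set of templates."""
    q = tokens(query)
    if "correlation" in q:
        return "correlation"
    if "highest" in q or "most" in q:
        return "ranking"
    return "simple_value"


def convert(query):
    """Turn a natural language query into a structured query description."""
    return {
        "template": match_template(query),
        "variable": match_variable(query),
        "places": match_places(query),
    }


print(convert("What is the unemployment rate in California?"))
```

The resulting dictionary holds what the execution step needs: a template name plus variable and place IDs, which a real system would pass to the Data Commons API.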

3. Fulfillment

Goal: Present the retrieved data to the user alongside the statistical values generated by the LLM.

Steps:

- Data presentation: Show the answer returned by Data Commons next to the statistic originally generated by the LLM, giving users the opportunity to fact-check the LLM.

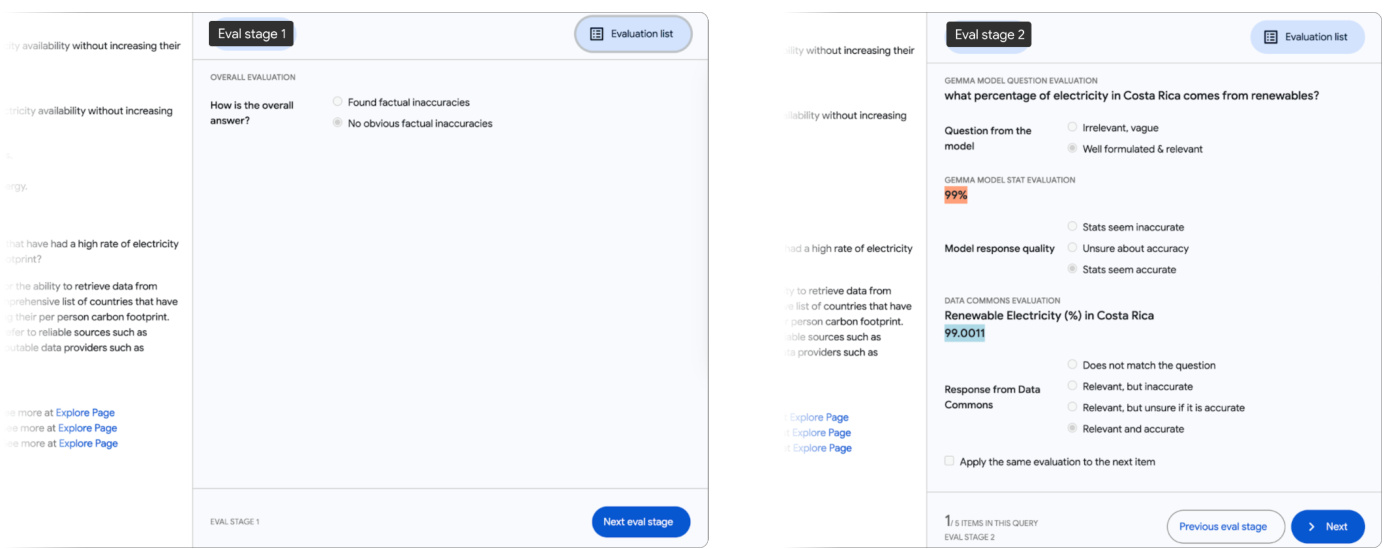

Figure 3: RIG assessment tool. This figure shows screenshots of the two assessment stages, displayed side by side. Each stage has two panels. On the left, the user is shown the full response being assessed (excluded from the image above to save space). On the right is the assessment task. In stage 1, the evaluator performs a quick check for any obvious errors. In stage 2, the evaluator assesses each statistic present in the response.

- User experience: This result can be presented in several ways, such as side-by-side display, highlighted differences, footnotes, or hover actions; exploring them is left as future work.
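As one possible illustration of the footnote option, the sketch below replaces each inline annotation with the model's value and a numbered marker, then lists the retrieved value in a footnote. The `lookup` callable is a hypothetical stand-in for the retrieval step, and the stub used here returns a placeholder string rather than real Data Commons data.

```python
import re

# Matches annotations of the form [DC("question") --> "value"].
DC_PATTERN = re.compile(r'\[DC\("([^"]*)"\)\s*-->\s*"([^"]*)"\]')


def render_with_footnotes(text, lookup):
    """Replace each annotation with the model's value plus a footnote marker.

    lookup: callable mapping a natural language query to the retrieved
    value as a string, or None when nothing was retrieved.
    """
    notes = []

    def replace(match):
        query, llm_value = match.group(1), match.group(2)
        dc_value = lookup(query)
        n = len(notes) + 1
        if dc_value is None:
            notes.append(f"[{n}] No Data Commons result for: {query}")
        else:
            notes.append(f"[{n}] Data Commons: {dc_value} (model generated: {llm_value})")
        return f"{llm_value} [{n}]"

    body = DC_PATTERN.sub(replace, text)
    return "\n".join([body, *notes])


def stub_lookup(query):
    # Placeholder only; a real lookup would run the query conversion
    # and execution steps against Data Commons.
    return "<retrieved value>"


text = 'France has about [DC("What is the population of France?") --> "67 million"] people.'
print(render_with_footnotes(text, stub_lookup))
```

Showing both values side by side in the footnote keeps the fact-checking role visible instead of silently overwriting the model's number.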

Summary

The RIG implementation process consists of the following key steps:

- Model fine-tuning: Teach the LLM to generate natural language queries that describe the statistical values it produces.

- Query conversion: Convert the natural language queries into structured queries for retrieving data from Data Commons.

- Data retrieval and presentation: Retrieve data from Data Commons and present it to the user alongside the statistics generated by the LLM.

Through these steps, RIG combines the generative capabilities of the LLM with the data resources of Data Commons, with the aim of improving the LLM's accuracy on statistical queries.
