Research Rabbit: Web research and report writing with a local LLM, automatically drilling down into user-specified topics and generating summaries.

General Introduction

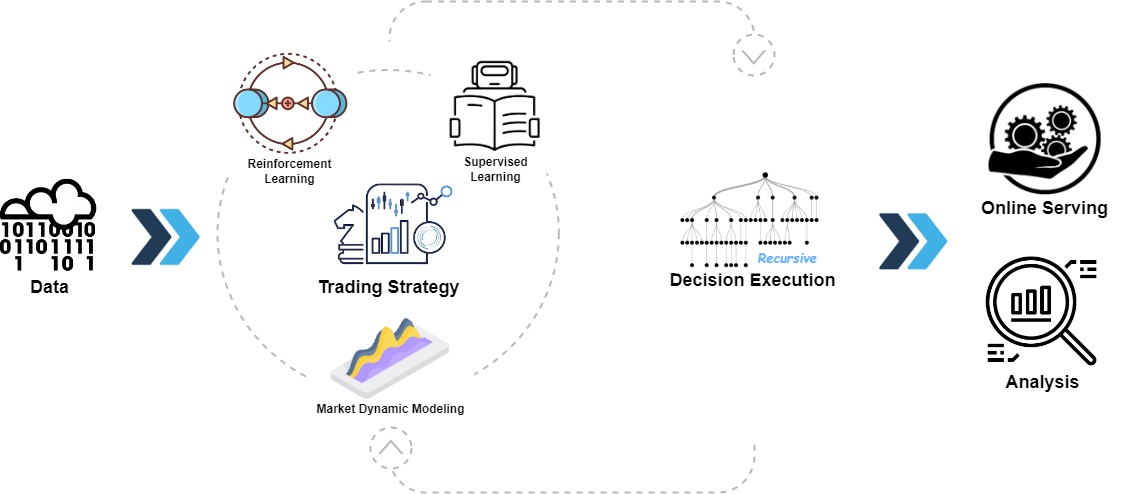

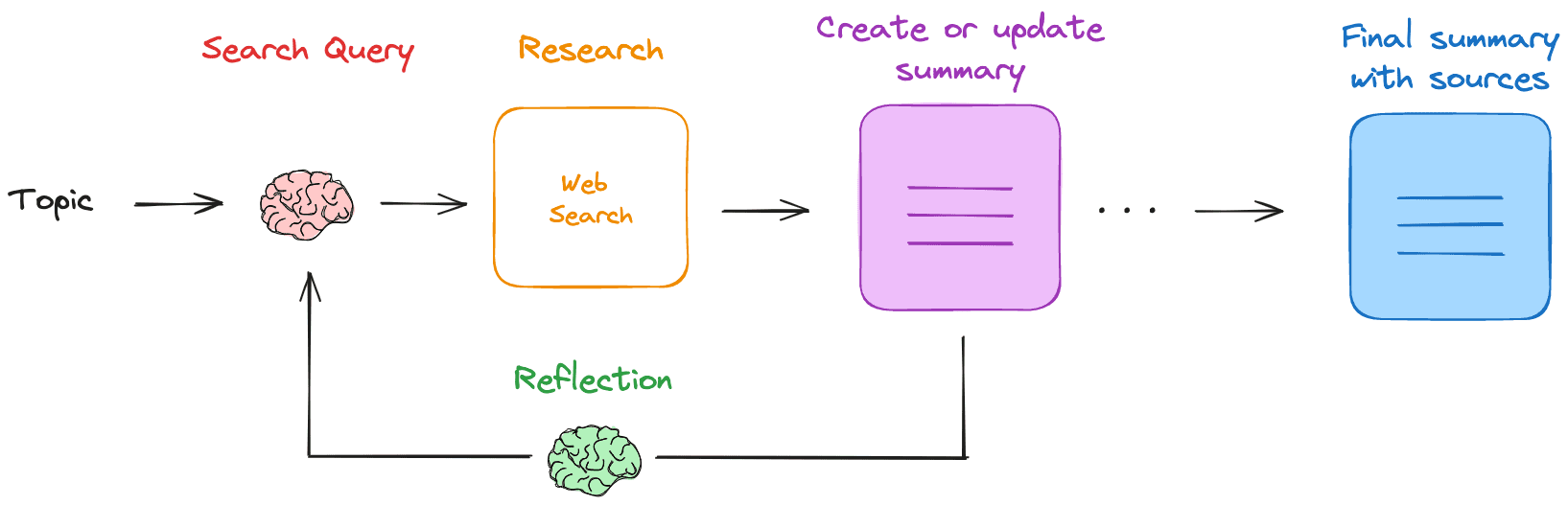

Research Rabbit is a web research and summarization assistant built on a local LLM (Large Language Model). After the user provides a research topic, Research Rabbit generates a search query, retrieves relevant web results, and summarizes them. It then repeats this process to fill in knowledge gaps and finally produces a Markdown summary that lists all the sources it used. The LLM runs entirely locally, which helps keep data private; web searches still go through the configured search engine.

Function List

- Generate Search Queries: Generate search queries based on user-supplied topics.

- Web Search: Use a configured search engine (e.g. Tavily) to find relevant resources.

- Summarize Results: Summarize the web search results using the local LLM.

- Reflect on the Summary: Identify knowledge gaps and generate new search queries.

- Iterative Updating: Repeat the search and summarization steps to gradually refine the research.

- Local Operation: The LLM runs locally, which helps protect data privacy.

- Markdown Summary: Generate a final Markdown summary that includes all sources.
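The functions listed above fit together as a loop. The following is a minimal sketch of that loop, not the project's actual implementation: `ResearchState`, `run_research`, and the four callables passed in are hypothetical names, and search results are assumed to be dictionaries with a `url` key.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

SearchResult = Dict[str, str]  # assumed shape: at least a "url" key


@dataclass
class ResearchState:
    """Running state of one research session (hypothetical structure)."""
    topic: str
    summary: str = ""
    sources: List[str] = field(default_factory=list)


def run_research(
    topic: str,
    generate_query: Callable[[str], str],
    web_search: Callable[[str], List[SearchResult]],
    summarize: Callable[[str, List[SearchResult]], str],
    reflect: Callable[[str, str], str],
    max_loops: int = 3,
) -> ResearchState:
    """Search, summarize, and reflect for a fixed number of iterations."""
    state = ResearchState(topic=topic)
    query = generate_query(topic)  # first query comes from the topic alone
    for _ in range(max_loops):
        results = web_search(query)
        # Fold the new results into the running summary.
        state.summary = summarize(state.summary, results)
        # Record each source URL once, in the order it was first seen.
        for result in results:
            if result["url"] not in state.sources:
                state.sources.append(result["url"])
        # Reflection: turn a knowledge gap into the next search query.
        query = reflect(topic, state.summary)
    return state
```

Passing the steps in as callables keeps the sketch independent of any particular LLM or search client; in a real setup they would wrap the local model and the configured search engine.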

Usage Guide

Installation Process

- Pull a local LLM: Pull the required local LLM from Ollama, for example

ollama pull llama3.2

- Get a Tavily API key: Register with Tavily, get an API key, and set it as an environment variable

export TAVILY_API_KEY=<your_tavily_api_key>

- Clone the repository: Run

git clone https://github.com/langchain-ai/research-rabbit.git

- Install the dependencies: Go to the project directory and run

uvx --refresh --from "langgraph-cli[inmem]" --with-editable . --python 3.11 langgraph dev

- Start the assistant: Start the LangGraph server and open the following address to view the API documentation and Web UI

http://127.0.0.1:2024
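Before starting the server, it can help to confirm that the prerequisites from the steps above are in place. The following is a minimal sketch of such a check; `missing_prerequisites` is a hypothetical helper and is not part of the project. It only assumes that the `ollama`, `git`, and `uvx` commands and the `TAVILY_API_KEY` variable mentioned above are what the setup needs.

```python
import os
import shutil
from typing import Callable, List, Mapping, Optional


def missing_prerequisites(
    env: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return a list of human-readable problems; empty means ready to go."""
    problems: List[str] = []
    # The Tavily key must be exported in the shell that starts the server.
    if not env.get("TAVILY_API_KEY"):
        problems.append("TAVILY_API_KEY is not set")
    # Command-line tools used by the installation steps.
    for tool in ("ollama", "git", "uvx"):
        if which(tool) is None:
            problems.append(f"{tool} not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites():
        print("missing:", problem)
```

The `env` and `which` parameters default to the real environment and `shutil.which`, and exist only so the check can be exercised without touching the actual system.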

Usage Process

- Configure the LLM: In the LangGraph Studio Web UI, set the name of the local LLM to use (default: llama3.2).

- Set the research depth: Configure the number of research iterations (default: 3).

- Enter a research topic: Start the research assistant by entering the research topic in the Configuration tab.

- View the process: The assistant generates search queries, performs web searches, summarizes the results using the LLM, and iterates the process.

- Get the summary: Once the research is complete, the assistant generates a Markdown summary with all the sources, which the user can view and edit.
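The two settings described above, the model name and the research depth, can be pictured as a small configuration object. This is only an illustrative sketch: `ResearchConfig`, `local_llm`, and `research_depth` are hypothetical names and may not match the fields shown in the Web UI; only the default values (llama3.2 and 3) come from the steps above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResearchConfig:
    """Hypothetical container for the two user-facing settings."""
    local_llm: str = "llama3.2"   # name of a model already pulled with Ollama
    research_depth: int = 3       # number of search/summarize iterations

    def __post_init__(self) -> None:
        # Reject values that could not produce a meaningful run.
        if not self.local_llm.strip():
            raise ValueError("local_llm must name a model pulled with Ollama")
        if self.research_depth < 1:
            raise ValueError("research_depth must be at least 1")


# Defaults mirror the values quoted in the steps above.
default_config = ResearchConfig()
# A deeper run simply raises the iteration count.
deeper_config = ResearchConfig(research_depth=5)
```

A higher depth means more search and summarization rounds, so a run takes longer but has more chances to fill knowledge gaps.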

Main Functions

- Generate Search Queries: After entering a research topic, the assistant will automatically generate a search query.

- Web Search: The assistant uses the configured search engine to find relevant resources.

- Summarize Results: The assistant uses the local LLM to summarize the search results and generate a preliminary report.

- Reflect on the Summary: The assistant identifies knowledge gaps in the summary and generates new search queries to continue the research.

- Iterative Updating: The assistant repeats the search and summarization steps to gradually refine the research.

- Generating Markdown Summaries: Once the research is complete, the assistant generates a final Markdown summary with all the sources, which the user can view and edit.
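The final step, a Markdown summary that includes all sources, could be assembled roughly as follows. This is a sketch under assumptions: `render_report` is a hypothetical function, the heading layout is invented for illustration, and the actual report format produced by the assistant may differ.

```python
from typing import Iterable, List, Tuple


def render_report(
    topic: str,
    summary: str,
    sources: Iterable[Tuple[str, str]],
) -> str:
    """Build a Markdown report from a summary and (title, url) source pairs."""
    lines: List[str] = [f"## {topic}", "", summary.strip(), "", "### Sources"]
    seen = set()
    for title, url in sources:
        # The same page may turn up in several iterations; list it once.
        if url in seen:
            continue
        seen.add(url)
        lines.append(f"- [{title}]({url})")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        render_report(
            "Example topic",
            "A short summary of what was found.",
            [("Example page", "https://example.com/page")],
        )
    )
```

Deduplicating by URL keeps the source list short when later iterations return pages that were already cited.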

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
