ReAct: Reasoning and Action Working Together in Large Language Models

Original: https://arxiv.org/pdf/2210.03629.pdf


Abstract

While large language models (LLMs) have demonstrated impressive performance across tasks in language understanding and interactive decision making, their abilities for reasoning (e.g., chain-of-thought prompting) and acting (e.g., action plan generation) have primarily been studied as separate topics. This paper explores the use of LLMs to generate both reasoning traces and task-specific actions in an interleaved manner, allowing for greater synergy between the two. Reasoning traces help the model induce, track, and update action plans as well as handle exceptions. Actions allow it to interface with external sources (e.g., knowledge bases or environments) and gather additional information. The proposed method, ReAct, is applied to a diverse set of language and decision-making tasks and demonstrates its effectiveness over state-of-the-art baselines, in addition to improved human interpretability and trustworthiness. Specifically, on question answering (HotpotQA) and fact verification (Fever), ReAct interacts with a simple Wikipedia API. This overcomes the hallucination and error propagation issues prevalent in chain-of-thought reasoning, and it generates human-like task-solving trajectories that are more interpretable than baselines without reasoning traces. Furthermore, on two interactive decision-making benchmarks (ALFWorld and WebShop), ReAct outperforms imitation and reinforcement learning methods by an absolute success rate of 34% and 10%, respectively, while being prompted with only one or two in-context examples.

1 Introduction

A unique feature of human intelligence is the ability to seamlessly combine task-oriented actions with verbal reasoning. This is thought to play an important role in human cognition by enabling self-regulation or strategization and by maintaining a working memory. Take cooking a dish in the kitchen as an example. Between any two specific actions, we may reason in language for several purposes:

- to track progress ("now that everything is cut, I should heat up the pot of water");
- to handle exceptions or adjust the plan according to the situation ("I don't have salt, so let me use soy sauce and pepper instead");
- to realize when external information is needed ("how do I prepare dough? Let me search on the Internet").

We may also act to support the reasoning and to answer questions ("What dish can I make right now?"), for example by opening a cookbook to read the recipe, opening the fridge, or checking ingredients. This tight synergy between "acting" and "reasoning" allows humans to learn new tasks quickly. It also lets them make robust decisions and reason reliably, even in previously unseen circumstances or under information uncertainty.

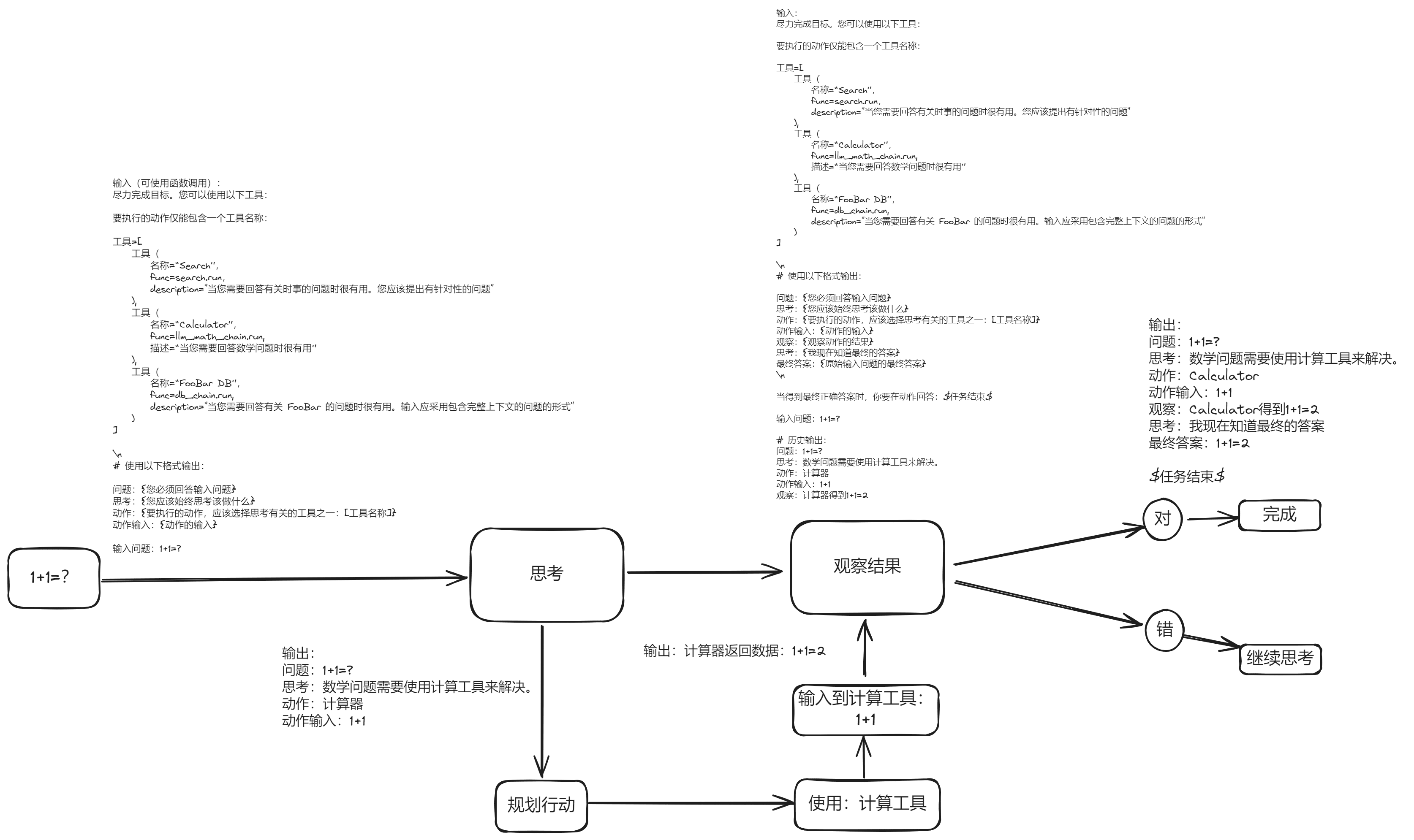

Recent results have hinted at the possibility of combining verbal reasoning with interactive decision making in autonomous systems.

On one hand, properly prompted large language models (LLMs) have demonstrated the ability to carry out several steps of reasoning in arithmetic, commonsense, and symbolic reasoning tasks. However, this "chain-of-thought" reasoning is a static black box. The model uses its own internal representations to generate thoughts and is not grounded in the external world, which limits its ability to reason reactively or update its knowledge. This can lead to issues like fact hallucination and error propagation over the reasoning process (Figure 1 (1b)).

On the other hand, recent work has explored the use of pre-trained language models for planning and acting in interactive environments, with a focus on predicting actions via language priors. These approaches usually convert multimodal observations into text, use a language model to generate domain-specific actions or plans, and then use a controller to choose or execute them. However, they do not employ language models to reason abstractly about high-level goals or to maintain a working memory that supports acting. The one exception is Huang et al. (2022b), who perform a limited form of verbal reasoning to reiterate spatial facts about the current state.

Beyond such simple embodied tasks involving a few blocks, there has been no study of two questions: how reasoning and acting can be combined synergistically for general task solving, and whether such a combination brings systematic benefits compared to reasoning or acting alone.

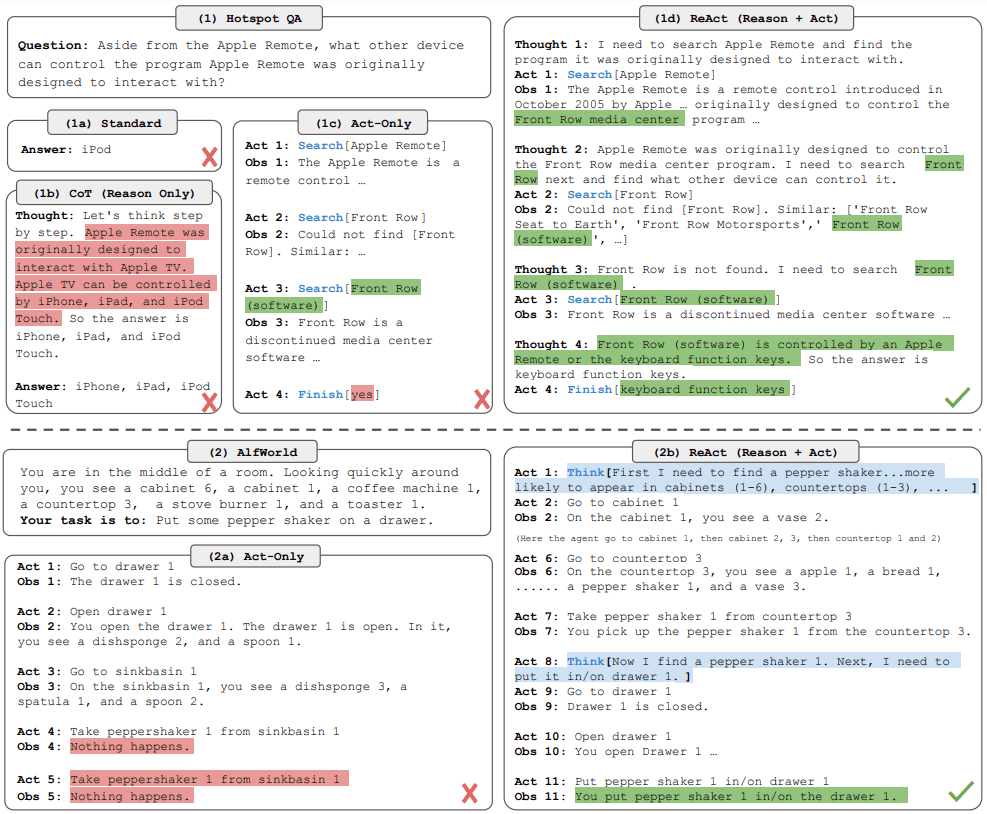

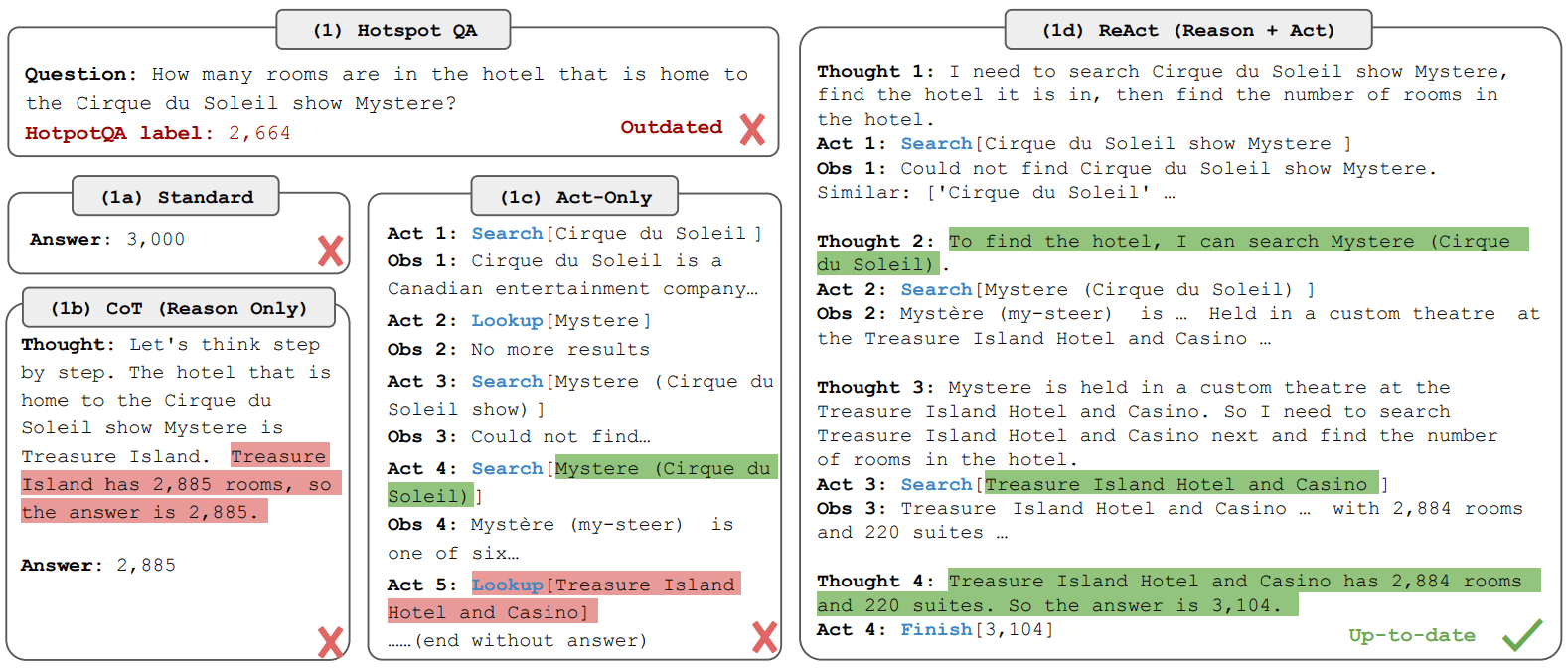

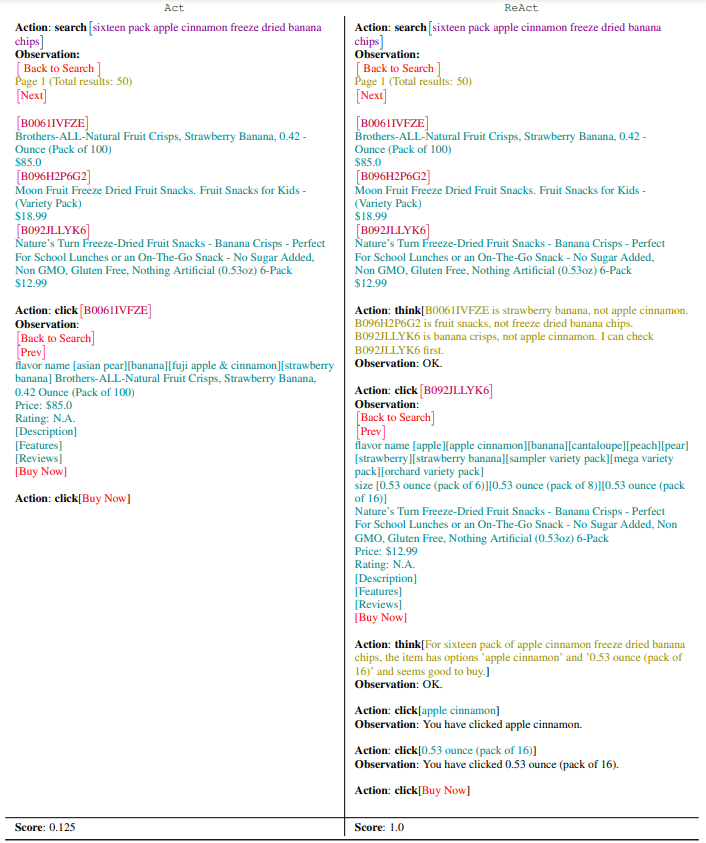

Figure 1: (1) Comparison of four prompting methods for solving a HotpotQA (Yang et al., 2018) question: (a) Standard, (b) Chain-of-Thought (CoT, Reason Only), (c) Act-only, and (d) ReAct (Reason + Act). (2) Comparison of (a) Act-only and (b) ReAct prompting for solving an ALFWorld (Shridhar et al., 2020b) game. In both domains, we omit the in-context examples from the prompt. We show only the task-solving trajectories generated by the model (actions, thoughts) and the environment (observations).

This paper presents ReAct, a general paradigm that combines reasoning and acting with language models for solving diverse language reasoning and decision-making tasks (Figure 1). ReAct prompts LLMs to generate both verbal reasoning traces and task-related actions in an interleaved manner. This lets the model perform dynamic reasoning to create, maintain, and adjust high-level plans for acting (reason to act). It also lets the model interact with external environments (e.g., Wikipedia) to incorporate additional information into reasoning (act to reason).

We empirically evaluate ReAct and state-of-the-art baselines on four diverse benchmarks: question answering, fact verification, text-based games, and web navigation.

For HotpotQA and Fever, ReAct has access to a Wikipedia API that the model can interact with. With it, ReAct outperforms vanilla action generation models while being competitive with chain-of-thought reasoning (CoT). Overall, the best approach is a combination of ReAct and CoT that uses both internal knowledge and externally obtained information during reasoning.

On ALFWorld and WebShop, two-shot or even one-shot ReAct prompting outperforms imitation or reinforcement learning methods trained on $10^3$ to $10^5$ task instances, with absolute success rate gains of 34% and 10%, respectively. We also show consistent advantages over controlled action-only baselines, which demonstrates the importance of sparse, versatile reasoning in decision making.

Besides general applicability and performance gains, combining reasoning and acting improves model interpretability, trustworthiness, and diagnosability across all domains. Humans can readily tell whether information comes from the model's internal knowledge or from the external environment. They can also inspect reasoning traces to understand the basis for the model's actions.

In summary, our main contributions are as follows.

(1) We introduce ReAct, a novel prompt-based paradigm that synergizes reasoning and acting in language models for general task solving;

(2) We perform extensive experiments across diverse benchmarks showing that, in a few-shot learning setting, ReAct outperforms prior approaches that perform either reasoning or action generation in isolation;

(3) We present systematic ablations and analyses to understand the importance of acting in reasoning tasks, and of reasoning in interactive tasks;

(4) We analyze the limitations of ReAct under the prompting setup (i.e., limited support for reasoning and acting behaviors). We also perform initial fine-tuning experiments showing that ReAct can improve with additional training data. Scaling up ReAct to train and operate on more tasks, and combining it with complementary paradigms such as reinforcement learning, could further unlock the potential of large language models.

2 ReAct: Collaborative Reasoning + Action

Consider a general setup in which an agent interacts with an environment to solve a task. At time step $t$, the agent receives an observation $o_t \in \mathcal{O}$ from the environment. It then takes an action $a_t \in \mathcal{A}$ following some policy $\pi(a_t \mid c_t)$, where $c_t = (o_1, a_1, \dots, o_{t-1}, a_{t-1}, o_t)$ is the context available to the agent. Learning a policy is challenging when the mapping $c_t \mapsto a_t$ is highly implicit and requires extensive computation. For example, the agent in Figure 1 (1c) fails to generate the correct final action (Act 4) for the QA task, because this requires complex reasoning over the trajectory context (Question, Act 1-3, Obs 1-3). Similarly, the agent in Figure 1 (2a) fails to infer from the context that sinkbasin 1 does not contain peppershaker 1, and so it keeps producing hallucinated actions.

The idea of ReAct is simple: we augment the agent's action space to $\hat{\mathcal{A}} = \mathcal{A} \cup \mathcal{L}$, where $\mathcal{L}$ is the space of language. An action $\hat{a}_t \in \mathcal{L}$ in the language space is called a thought or a reasoning trace. It does not affect the external environment and therefore produces no observation feedback. Instead, a thought $\hat{a}_t$ composes useful information by reasoning over the current context $c_t$, and it updates the context to $c_{t+1} = (c_t, \hat{a}_t)$ to support future reasoning or acting. As shown in Figure 1, useful thoughts come in various types:

- decomposing task goals and creating action plans (2b, Act 1; 1d, Thought 1);
- injecting commonsense knowledge relevant to task solving (2b, Thought 1);
- extracting important parts from observations (1d, Thoughts 2 and 4);
- tracking progress and transitioning between action plans (2b, Thought 8);
- handling exceptions and adjusting action plans (1d, Thought 3).
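To make the augmented action space concrete, here is a minimal Python sketch of the context update described above. It is not the authors' implementation. It assumes a hypothetical `policy` callable that returns either `("thought", text)` (an element of $\mathcal{L}$) or `("act", text)` (an element of $\mathcal{A}$). It also assumes a hypothetical `env_step` function standing in for the environment. The only point illustrated is the asymmetry: an action appends an observation to the context, while a thought does not.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, str]   # (kind, text) with kind in {"obs", "act", "thought"}
Context = List[Step]

def run_react(policy: Callable[[Context], Step],
              env_step: Callable[[str], Tuple[str, bool]],
              first_obs: str,
              max_steps: int = 10) -> Context:
    """Roll out one episode over the augmented action space A-hat = A ∪ L."""
    context: Context = [("obs", first_obs)]       # c_1 = (o_1)
    for _ in range(max_steps):
        kind, text = policy(context)              # next step from pi(. | c_t)
        context.append((kind, text))
        if kind == "thought":
            # A thought only extends the context: c_{t+1} = (c_t, a_hat_t).
            continue
        obs, done = env_step(text)                # an action in A yields an observation
        context.append(("obs", obs))
        if done:
            break
    return context

# Hypothetical scripted policy and toy environment, for illustration only.
script = iter([
    ("thought", "A peppershaker is likely on a countertop; check there first."),
    ("act", "go to countertop 1"),
    ("act", "take peppershaker 1 from countertop 1"),
])

def scripted_policy(context: Context) -> Step:
    return next(script)

def toy_env(action: str) -> Tuple[str, bool]:
    if action.startswith("take"):
        return "You pick up the peppershaker 1 from the countertop 1.", True
    return "On the countertop 1, you see a peppershaker 1.", False

trace = run_react(scripted_policy, toy_env, "You are in the middle of a room.")
```

In the resulting trace, the thought consumes one step of the budget but is followed directly by an action rather than by an observation.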

However, since the language space $\mathcal{L}$ is unlimited, learning in this augmented action space is difficult and requires strong language priors. In this paper, we mainly focus on one setting: a frozen large language model, PaLM-540B (Chowdhery et al., 2022)[1], is prompted with few-shot in-context examples to generate both domain-specific actions and free-form language thoughts for task solving (Figure 1 (1d), (2b)). Each in-context example is a human trajectory of actions, thoughts, and environment observations that solves a task instance (see Appendix C).

For tasks where reasoning is of primary importance (Figure 1 (1)), we alternate the generation of thoughts and actions. The task-solving trajectory then consists of multiple thought-action-observation steps. In contrast, decision-making tasks can involve a large number of actions (Figure 1 (2)), and there thoughts only need to appear sparsely at the most relevant positions of a trajectory. For these tasks, we let the language model decide for itself when thoughts and actions occur, asynchronously.
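The few-shot prompting setup can be sketched as plain string assembly. In this hypothetical Python sketch, each hand-written exemplar is a list of (thought, action, observation) steps. The "Thought i / Action i / Observation i" labels are an assumed format modeled on Figure 1. An empty thought string marks a step without a thought, which is how a sparse-thought trajectory for a decision-making task would be represented.

```python
from typing import List, Tuple

Step = Tuple[str, str, str]   # (thought, action, observation); thought may be ""

def format_trajectory(question: str, steps: List[Step]) -> str:
    """Render one exemplar as interleaved thought-action-observation lines."""
    lines = [f"Question: {question}"]
    for i, (thought, action, obs) in enumerate(steps, start=1):
        if thought:                      # dense tasks: a thought at every step
            lines.append(f"Thought {i}: {thought}")
        lines.append(f"Action {i}: {action}")
        if obs:                          # a finishing action has no observation
            lines.append(f"Observation {i}: {obs}")
    return "\n".join(lines)

def build_prompt(exemplars: List[Tuple[str, List[Step]]], question: str) -> str:
    """Concatenate few-shot exemplars, then leave the frozen LM to continue."""
    shots = [format_trajectory(q, steps) for q, steps in exemplars]
    return "\n\n".join(shots + [f"Question: {question}\nThought 1:"])

# Hypothetical exemplar, for illustration only.
exemplar = ("In which city is the Eiffel Tower?", [
    ("I need to search Eiffel Tower and find where it is.",
     "Search[Eiffel Tower]",
     "The Eiffel Tower is a wrought-iron lattice tower in Paris, France."),
    ("The tower is in Paris, so the answer is Paris.", "Finish[Paris]", ""),
])
prompt = build_prompt([exemplar], "Which country is the Louvre in?")
```

Ending the prompt with "Thought 1:" lets the model start a dense trajectory. For sparse-thought tasks, one would instead end at the first action slot and let the model choose when to think.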

Because decision-making and reasoning capabilities are integrated into a single large language model, ReAct has several distinctive features:

A) Intuitive and easy to design: Designing ReAct prompts is straightforward, because human annotators simply write down their thoughts in language alongside the actions they take. This paper uses no ad hoc format choice, thought design, or example selection. We detail the prompt design for each task in Sections 3 and 4.

B) General and flexible: Thanks to the flexible thought space and thought-action occurrence format, ReAct works for diverse tasks with distinct action spaces and reasoning needs. These include, but are not limited to, QA, fact verification, text games, and web navigation.

C) Performant and robust: ReAct generalizes strongly while learning from only one to six in-context examples. It consistently outperforms reasoning-only and acting-only baselines across domains. We also show additional benefits when fine-tuning is enabled in Section 3, and the robustness of ReAct to prompt selection in Section 4.

D) Human-aligned and controllable: ReAct provides an interpretable sequential decision-making and reasoning process in which humans can easily check the reasoning and its factual correctness. Moreover, as shown in Figure 5 in Section 4, humans can also control or correct the agent's behavior by editing its thoughts.

3 Knowledge-intensive reasoning tasks

We begin with knowledge-intensive reasoning tasks such as multi-hop question answering and fact verification. As shown in Figure 1 (1d), ReAct interacts with a Wikipedia API to retrieve information that supports reasoning. At the same time, it uses reasoning to decide what to retrieve next, which demonstrates the synergy of reasoning and acting.

3.1 Settings

Domains We consider two datasets that challenge knowledge retrieval and reasoning:

(1) HotpotQA, a multi-hop question answering benchmark that requires reasoning over two or more Wikipedia passages;

(2) FEVER, a fact verification benchmark in which each claim is labeled SUPPORTS, REFUTES, or NOT ENOUGH INFO, depending on whether a Wikipedia passage exists that verifies the claim.

In this work, we use a question-only setup for both tasks. The model receives only the question or claim as input, with no access to supporting paragraphs. It must rely on its internal knowledge, or retrieve knowledge by interacting with an external environment, to support reasoning.

Action Space We design a simple Wikipedia web API with three types of actions to support interactive information retrieval:

(1) search[entity], which returns the first 5 sentences of the corresponding entity's wiki page if it exists, or otherwise suggests the top 5 similar entities from the Wikipedia search engine.

(2) lookup[string], which returns the next sentence in the page that contains the string, simulating the Ctrl+F function of a browser.

(3) finish[answer], which completes the current task with the answer.

This action space can mostly retrieve only a small part of a passage, and only from the exact passage name. That makes it much weaker than state-of-the-art lexical or neural retrievers. The goal is to simulate how humans interact with Wikipedia, and to force the model to retrieve through explicit reasoning in language.
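The three actions can be mimicked offline for experimentation. The following Python sketch replaces the real Wikipedia API with a hypothetical in-memory `pages` dictionary. The naive sentence splitter and the word-overlap "similar entities" fallback are simplifying assumptions, not the behavior of the actual Wikipedia search engine.

```python
import re
from typing import Dict, List, Optional

class ToyWikiEnv:
    """Hypothetical offline stand-in for the Wikipedia API (not the real one)."""

    def __init__(self, pages: Dict[str, str]):
        self.pages = pages                  # title -> page text (assumed corpus)
        self.sentences: List[str] = []      # sentences of the last page found
        self.lookup_key: Optional[str] = None
        self.lookup_hits: List[str] = []
        self.lookup_idx = 0
        self.answer: Optional[str] = None

    @staticmethod
    def _split(text: str) -> List[str]:
        # Naive splitter: break after ., ! or ? followed by whitespace.
        return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

    def search(self, entity: str) -> str:
        """search[entity]: first 5 sentences, or up to 5 similar titles."""
        if entity in self.pages:
            self.sentences = self._split(self.pages[entity])
            self.lookup_key = None          # a new page resets the Ctrl+F state
            return " ".join(self.sentences[:5])
        words = set(entity.lower().split())
        similar = [t for t in self.pages if words & set(t.lower().split())][:5]
        return f"Could not find [{entity}]. Similar: {similar}"

    def lookup(self, keyword: str) -> str:
        """lookup[string]: next sentence on the current page containing it."""
        if keyword != self.lookup_key:      # a new keyword restarts the scan
            self.lookup_key = keyword
            self.lookup_hits = [s for s in self.sentences if keyword.lower() in s.lower()]
            self.lookup_idx = 0
        if self.lookup_idx >= len(self.lookup_hits):
            return "No more results."
        hit = self.lookup_hits[self.lookup_idx]
        self.lookup_idx += 1
        return hit

    def finish(self, answer: str) -> str:
        """finish[answer]: record the answer and end the episode."""
        self.answer = answer
        return "Episode finished."

env = ToyWikiEnv({
    "Milhouse Van Houten": "Milhouse Van Houten is a character in the animated series "
                           "The Simpsons. Milhouse was named after president Richard "
                           "Nixon, whose middle name was Milhous.",
})
first = env.search("Milhouse Van Houten")
hit = env.lookup("named after")
```

Calling `lookup` repeatedly with the same keyword walks through successive matches, which is the Ctrl+F behavior described above.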

3.2 Methodology

ReAct Prompting For HotpotQA and Fever, we randomly select 6 and 3 cases from the training set, respectively. For each, we manually write a ReAct-format trajectory to use as a few-shot exemplar in the prompt. As in Figure 1 (1d), each trajectory consists of multiple thought-action-observation steps (i.e., dense thoughts), with free-form thoughts serving various purposes. Specifically, we combine thoughts that:

- decompose the question ("I need to search x, find y, then find z");
- extract information from Wikipedia observations ("x was started in 1844", "the paragraph does not tell x");
- perform commonsense reasoning ("x is not y, so z must instead be...") or arithmetic reasoning ("1844 < 1989");
- guide search reformulation ("maybe I can search/look up x instead");
- synthesize the final answer ("...so the answer is x").

See Appendix C for more details.
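When the frozen LM continues a ReAct-format prompt, each generated step must be split into two parts. The free-form thought is only appended to the context, and the action is sent to the environment. A minimal Python parser is sketched below. It assumes step labels like "Thought 1:" and "Action 1:" (Figure 1 abbreviates the latter as "Act 1:") and the three action types from Section 3.1. Real model output may need more lenient handling.

```python
import re
from typing import Optional, Tuple

# Assumed surface form: "Action <n>: <Type>[<argument>]" or "Act <n>: ...".
ACTION_RE = re.compile(r"^(?:Action|Act) \d+:\s*(search|lookup|finish)\[(.*)\]\s*$",
                       re.IGNORECASE)

def parse_action(line: str) -> Optional[Tuple[str, str]]:
    """Return (action_type, argument), or None if the line is not a valid action."""
    m = ACTION_RE.match(line.strip())
    if m is None:
        return None
    return m.group(1).lower(), m.group(2)

def parse_step(generation: str) -> Tuple[Optional[str], Optional[Tuple[str, str]]]:
    """Split one generated step into its thought text and its parsed action."""
    thought, action = None, None
    for line in generation.splitlines():
        if line.startswith("Thought") and ":" in line:
            thought = line.split(":", 1)[1].strip()
        elif action is None:
            action = parse_action(line)
    return thought, action
```

Returning `None` for an unparseable action leaves the caller free to decide how to react, for example by feeding back an "invalid action" observation. That policy is a design choice, not something specified here.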

Baselines We systematically ablate the ReAct trajectories to build prompts for several baselines (in the format of Figure 1 (1a-1c)):

(a) Standard prompting (Standard), which removes all thoughts, actions, and observations from the ReAct trajectories.

(b) Chain-of-thought prompting (CoT), which removes actions and observations and serves as a reasoning-only baseline. We also build a self-consistency baseline (CoT-SC). At inference time, it samples 21 CoT trajectories with a decoding temperature of 0.7 and adopts the majority answer; this consistently improves performance over CoT.

(c) Acting-only prompting (Act), which removes thoughts from the ReAct trajectories. It loosely resembles how WebGPT interacts with the Internet to answer questions. WebGPT, however, operates on a different task and action space and uses imitation and reinforcement learning instead of prompting.
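The CoT-SC baseline reduces to a majority vote over sampled answers. In this Python sketch, `sample` is a hypothetical callable that runs one CoT decode at the given temperature and returns its final answer. Comparing answers by exact string equality is a simplifying assumption. The function also returns the vote count, which the CoT-SC → ReAct heuristic in this section depends on.

```python
import itertools
from collections import Counter
from typing import Callable, Tuple

def cot_self_consistency(sample: Callable[[float], str],
                         n: int = 21,
                         temperature: float = 0.7) -> Tuple[str, int]:
    """Sample n CoT answers and return the majority answer and its vote count."""
    answers = [sample(temperature) for _ in range(n)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count

# Hypothetical sampler that cycles through canned answers (3:1 ratio).
canned = itertools.cycle(["Paris", "Paris", "Paris", "Lyon"])

def fake_sampler(temperature: float) -> str:
    return next(canned)

result = cot_self_consistency(fake_sampler)   # ("Paris", 16) for 21 samples
```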

Combining Internal and External Knowledge As detailed in Section 3.3, we observe that ReAct's problem-solving process is more factual and grounded. CoT is more accurate in formulating the reasoning structure, but it easily suffers from hallucinated facts or thoughts. We therefore propose to combine ReAct and CoT-SC, letting the model decide when to switch to the other method using the following heuristics:

a) ReAct → CoT-SC: when ReAct fails to return an answer within a given number of steps, back off to CoT-SC. We set 7 steps for HotpotQA and 5 for FEVER, because we find that more steps do not improve ReAct's performance.

b) CoT-SC → ReAct: when the majority answer among $n$ CoT-SC samples occurs fewer than $n/2$ times (i.e., internal knowledge may not support the task confidently), back off to ReAct.
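The two back-off heuristics can be written as small wrappers. In this Python sketch, `run_react` and `run_cot_sc` are hypothetical callables. `run_react(max_steps)` returns an answer, or `None` if no finish action occurs within the step budget. `run_cot_sc()` returns the majority answer with its vote count. The source does not say what happens if ReAct also fails after a CoT-SC back-off; keeping the CoT-SC answer in that case is our assumption.

```python
from typing import Callable, Optional, Tuple

ReActRunner = Callable[[int], Optional[str]]    # max_steps -> answer or None
CoTSCRunner = Callable[[], Tuple[str, int]]     # () -> (majority answer, votes)

def react_to_cot_sc(run_react: ReActRunner, run_cot_sc: CoTSCRunner,
                    max_steps: int = 7) -> str:
    """ReAct -> CoT-SC: back off when ReAct gives no answer within max_steps."""
    answer = run_react(max_steps)
    if answer is not None:
        return answer
    return run_cot_sc()[0]

def cot_sc_to_react(run_cot_sc: CoTSCRunner, run_react: ReActRunner,
                    n: int = 21, max_steps: int = 5) -> str:
    """CoT-SC -> ReAct: back off when the majority answer gets fewer than n/2 votes."""
    answer, votes = run_cot_sc()
    if votes < n / 2:
        fallback = run_react(max_steps)
        if fallback is not None:      # assumption: keep CoT-SC answer if ReAct fails
            return fallback
    return answer
```

The default step budgets pair each heuristic with the dataset where it performs best in Table 1: 7 steps for HotpotQA and 5 for FEVER.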

Finetuning Manually annotating reasoning traces and actions at scale is challenging. We therefore consider a bootstrapping approach similar to that of Zelikman et al. (2022). We take 3,000 trajectories with correct answers generated by ReAct (and likewise for the other baselines). We use them to fine-tune smaller language models (PaLM-8B/62B) to decode trajectories (all thoughts, actions, and observations) conditioned on the input questions or claims. See Appendix B.1 for more details.
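The bootstrapping step amounts to filtering model-generated trajectories by answer correctness and turning them into input/target pairs. In this Python sketch, the record fields (`question`, `trajectory`, `predicted`, `gold`) are hypothetical. A case-insensitive string comparison stands in for whatever correctness check is actually used.

```python
from typing import Dict, List

def build_finetune_set(records: List[Dict[str, str]],
                       limit: int = 3000) -> List[Dict[str, str]]:
    """Keep trajectories whose final answer is correct, up to `limit` examples."""
    examples: List[Dict[str, str]] = []
    for r in records:
        if len(examples) >= limit:
            break
        if r["predicted"].strip().lower() == r["gold"].strip().lower():
            # Target is the whole trajectory (thoughts, actions, observations),
            # which the smaller model learns to decode given the question/claim.
            examples.append({"input": r["question"], "target": r["trajectory"]})
    return examples

records = [
    {"question": "q1", "trajectory": "Thought 1: ...\nAction 1: Finish[Paris]",
     "predicted": "Paris", "gold": "paris"},
    {"question": "q2", "trajectory": "Thought 1: ...\nAction 1: Finish[Lyon]",
     "predicted": "Lyon", "gold": "Marseille"},
]
train = build_finetune_set(records)
```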

3.3 Results and Observations

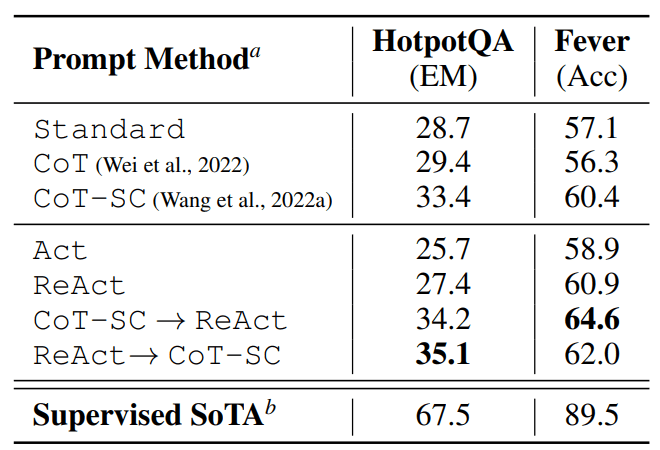

ReAct outperforms Act consistently Table 1 shows HotpotQA and Fever results with PaLM-540B as the base model under different prompting methods. ReAct outperforms Act on both tasks. This demonstrates the value of reasoning for guiding actions, especially for synthesizing the final answer, as shown in Figure 1 (1c-d). The fine-tuning results (Figure 3) also confirm that reasoning traces lead to better-informed actions.

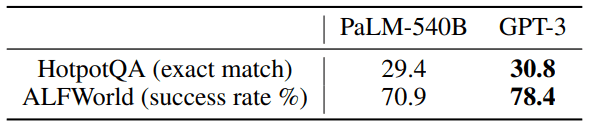

Table 1: PaLM-540B prompting results on HotpotQA and Fever.

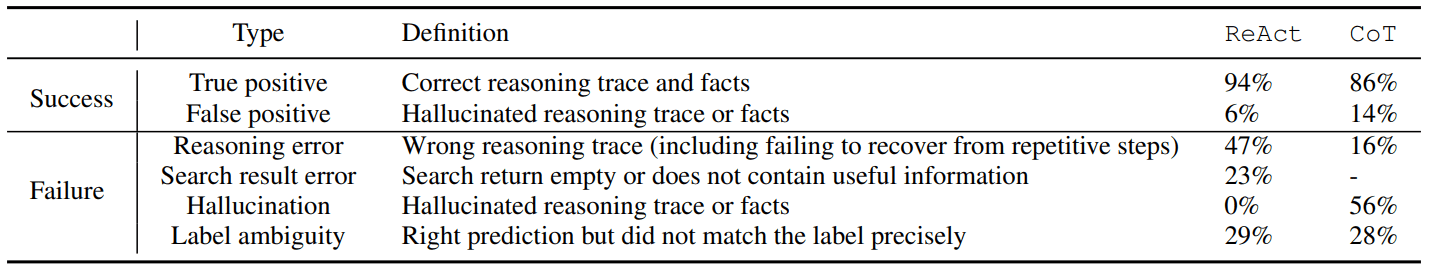

ReAct vs. CoT On the other hand, ReAct outperforms CoT on Fever (60.9 vs. 56.3) and slightly lags behind CoT on HotpotQA (27.4 vs. 29.4). Fever claims labeled SUPPORTS and REFUTES may differ only slightly (see Appendix D.1), so acting to retrieve accurate and up-to-date knowledge is critical.

To better understand the behavioral differences between ReAct and CoT on HotpotQA, we ran a manual study. From each of ReAct and CoT, we randomly sampled 50 trajectories with correct answers and 50 with incorrect answers, as judged by exact match (EM), for 200 examples in total. We then manually labeled their success and failure modes (Table 2). Key observations follow.

Table 2: Types of success and failure modes for ReAct and CoT on HotpotQA, and their percentages in randomly selected examples from human studies.

A) Hallucination is a serious problem for CoT. It produces a much higher false positive rate than ReAct in success mode (14% vs. 6%) and is CoT's main failure mode (56%). In contrast, ReAct's problem-solving trajectories are more grounded, fact-driven, and trustworthy, thanks to access to an external knowledge base.

B) Interleaving reasoning, action, and observation steps improves ReAct's groundedness and trustworthiness. However, this structural constraint also reduces its flexibility in formulating reasoning steps. We note a frequent error pattern specific to ReAct: the model repeatedly regenerates its previous thoughts and actions. We count this as a "reasoning error", because the model fails to reason about the proper next action and cannot break out of the loop.

C) For ReAct, retrieving informative knowledge through search is critical. Non-informative searches account for 23% of the error cases. They derail the model's reasoning and make it hard to recover and reformulate its thoughts. This is perhaps an expected trade-off between factuality and flexibility, and it motivated our strategies for combining the two methods.

We provide examples for each success and failure mode in Appendix E.1. We also found that some HotpotQA questions may contain outdated answer labels, see Figure 4 for an example.
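The exact-match (EM) judgment used to label the sampled trajectories is commonly computed after light answer normalization. The Python sketch below uses SQuAD-style normalization: lowercasing, stripping punctuation and the articles a/an/the, and collapsing whitespace. We assume, rather than confirm from the source, that the labeling here followed this convention.

```python
import re
import string

PUNCT = set(string.punctuation)

def normalize_answer(s: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    s = s.lower()
    s = "".join(ch for ch in s if ch not in PUNCT)
    s = re.sub(r"\b(a|an|the)\b", " ", s)
    return " ".join(s.split())

def exact_match(prediction: str, gold: str) -> bool:
    """EM is True only if the normalized strings are identical."""
    return normalize_answer(prediction) == normalize_answer(gold)
```

Under this convention "The Beatles!" matches "beatles", while a partially correct answer such as "Nixon" against "Richard Nixon" counts as wrong.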

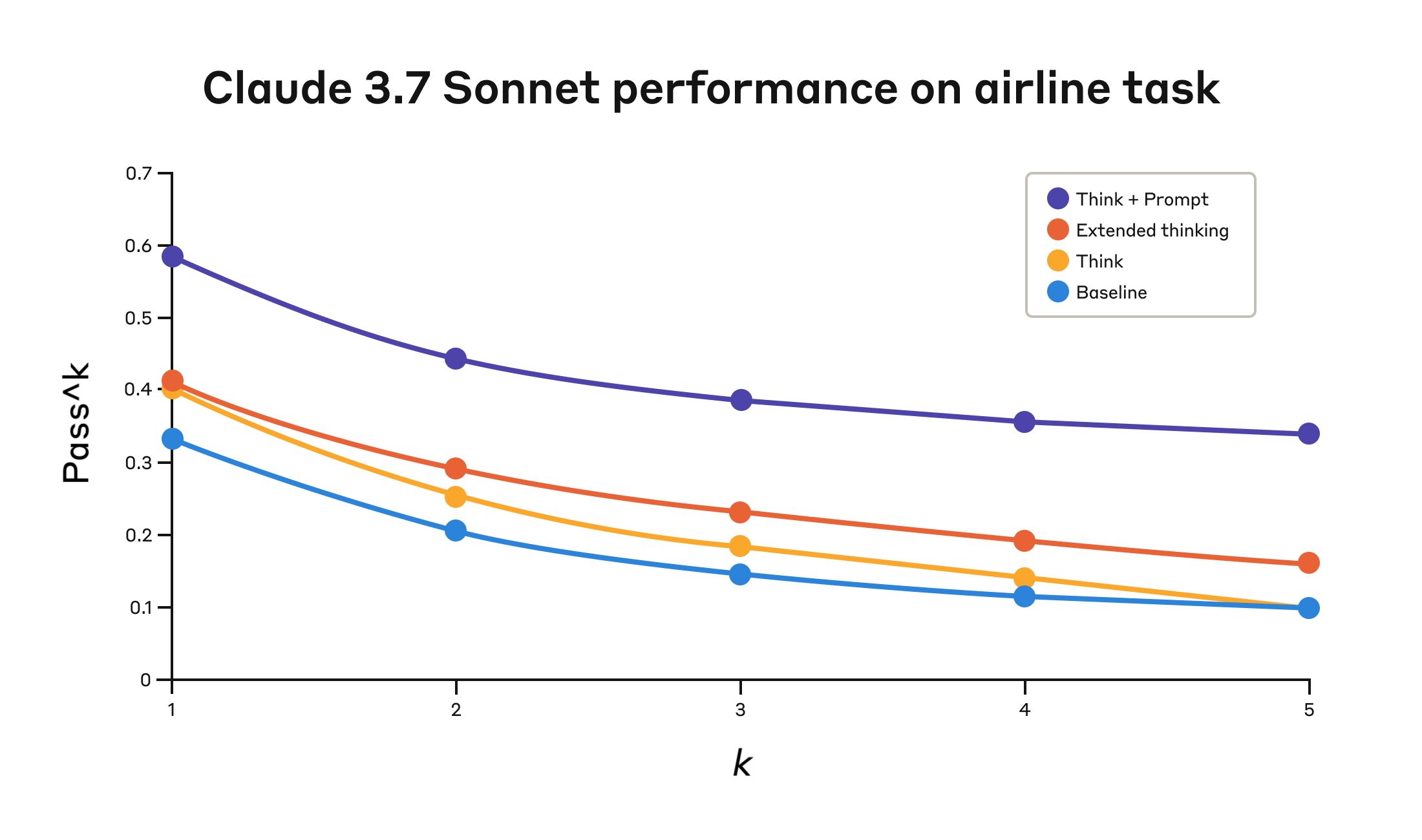

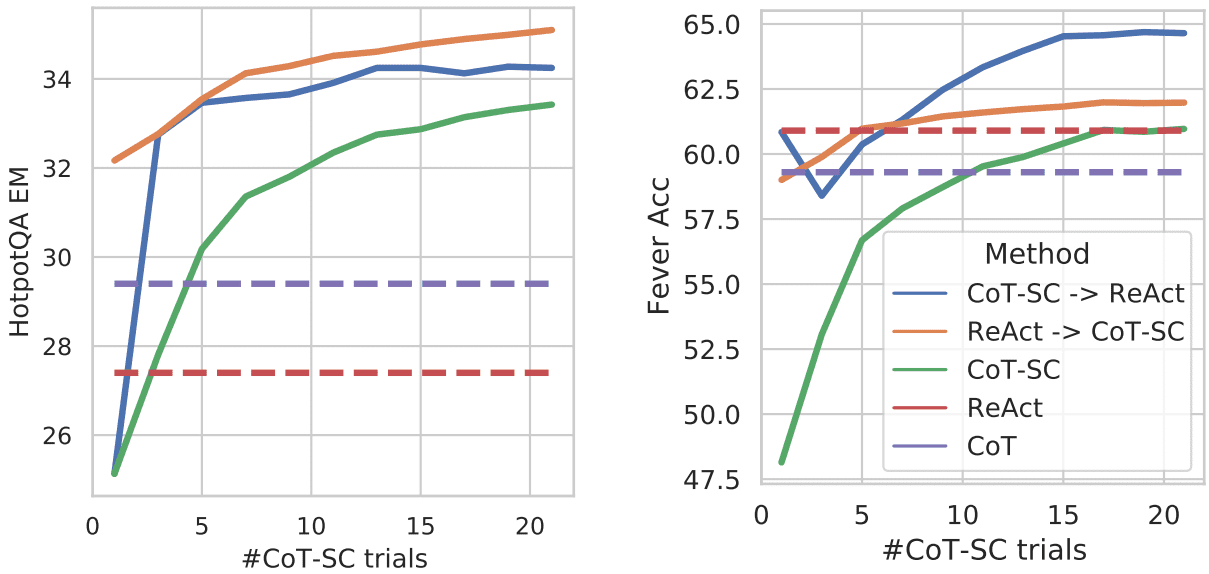

ReAct + CoT-SC perform best for prompting LLMs. As shown in Table 1, the best prompting methods are ReAct → CoT-SC on HotpotQA and CoT-SC → ReAct on Fever. Figure 2 further shows how each method performs as the number of CoT-SC samples varies. Each of the two ReAct + CoT-SC methods has the advantage on one task. Both significantly and consistently outperform CoT-SC across different numbers of samples, matching CoT-SC's 21-sample performance with only 3 to 5 samples. This shows the value of properly combining model-internal knowledge with external knowledge for reasoning tasks.

Figure 2: PaLM-540B prompting results as a function of the number of CoT-SC samples used.

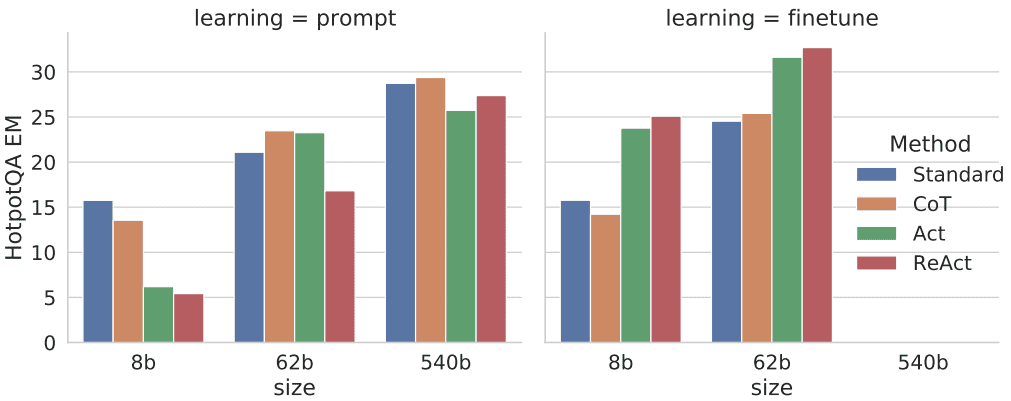

ReAct performs best with fine-tuning. Figure 3 shows how prompting and fine-tuning scale for the four methods (Standard, CoT, Act, ReAct) on HotpotQA. With PaLM-8B/62B, prompted ReAct performs worst of the four methods, because learning both reasoning and acting from in-context examples is difficult. However, after fine-tuning on just 3,000 examples, ReAct becomes the best of the four. Fine-tuned PaLM-8B ReAct outperforms all PaLM-62B prompting methods, and fine-tuned PaLM-62B ReAct outperforms all 540B prompting methods.

In contrast, fine-tuning Standard or CoT is significantly worse than fine-tuning ReAct or Act for both PaLM-8B and PaLM-62B. The former essentially teaches the model to memorize (potentially hallucinated) knowledge facts. The latter teaches the model how to (reason and) act to obtain information from Wikipedia, a more generalizable skill for knowledge reasoning. All prompting methods still fall well short of domain-specific state-of-the-art approaches (Table 1). We therefore believe that fine-tuning on more human-written data may be a better way to unlock ReAct's full capabilities.

Figure 3: Scaling results for prompting and fine-tuning on HotpotQA with ReAct (our approach) and baselines.

4 Decision-making tasks

We also test ReAct on two language-based interactive decision-making tasks, ALFWorld and WebShop. Both have complex environments in which agents must act over long horizons with sparse rewards, so effective reasoning is needed for acting and exploration.

ALFWorld ALFWorld (Figure 1 (2)) is a synthetic text-based game designed to align with the embodied ALFRED benchmark. It contains 6 types of tasks. In each, an agent must achieve a high-level goal (e.g., examine paper under desklamp) by navigating and interacting with a simulated household through text actions (e.g., go to coffeetable 1, take paper 2, use desklamp 1). A task instance can have more than 50 locations and can take an expert policy more than 50 steps to solve. This challenges the agent to plan and track subgoals and to explore systematically (e.g., checking all desks one by one for the desk lamp). In particular, one challenge built into ALFWorld is determining the likely locations of common household items (e.g., a desk lamp is likely to be on a desk, shelf, or dresser). This makes the environment a good fit for LLMs to exploit their pretrained commonsense knowledge.

To prompt ReAct, we randomly annotate three trajectories from the training set for each task type. Each trajectory contains sparse thoughts that (1) decompose the goal, (2) track subgoal completion, (3) determine the next subgoal, and (4) use commonsense reasoning to work out where to find an object and what to do with it. The prompts used for ALFWorld are shown in Appendix C.4. Following Shridhar et al. (2020b), we evaluate on 134 unseen evaluation games in a task-specific setup. For robustness, we construct 6 prompts per task type, one for each permutation of 2 of the 3 annotated trajectories. Act prompts use the same trajectories with the thoughts removed. Because the task instances were drawn at random from the training set, they favor neither ReAct nor Act, which gives a fair, controlled test of the importance of sparse thoughts. As a baseline, we use BUTLER, an imitation learning agent trained on $10^5$ expert trajectories for each task type.
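Six prompts per task type is exactly the number of ordered pairs that can be drawn from 3 annotated trajectories ($3 \times 2 = 6$). The construction can therefore be sketched with `itertools.permutations`. In this Python sketch, trajectories are lists of lines. Representing a thought as a `> think: ...` line acknowledged by an `OK.` observation is an assumed format. The Act variant simply drops those lines from the same trajectories.

```python
from itertools import permutations
from typing import List

Trajectory = List[str]

def strip_thoughts(traj: Trajectory) -> Trajectory:
    """Remove '> think:' steps and the 'OK.' line that acknowledges each one."""
    out: Trajectory = []
    after_think = False
    for line in traj:
        if line.startswith("> think:"):
            after_think = True
            continue
        if after_think and line == "OK.":
            after_think = False
            continue
        after_think = False
        out.append(line)
    return out

def build_prompts(annotated: List[Trajectory], react: bool = True) -> List[str]:
    """One prompt per ordered pair of exemplars: 3 trajectories -> 6 prompts."""
    prompts = []
    for first, second in permutations(annotated, 2):
        pair = [first, second] if react else [strip_thoughts(first), strip_thoughts(second)]
        prompts.append("\n\n".join("\n".join(t) for t in pair))
    return prompts

# Hypothetical annotated trajectories, for illustration only.
annotated = [
    ["> think: First I need to find a desklamp.", "OK.", "> go to desk 1"],
    ["> go to shelf 1", "On the shelf 1, you see a vase 1."],
    ["> go to dresser 1", "On the dresser 1, you see a keychain 1."],
]
react_prompts = build_prompts(annotated)
act_prompts = build_prompts(annotated, react=False)
```

Deriving the Act prompts from the very same trajectories is what keeps the comparison controlled: the two prompt sets differ only in the presence of thoughts.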

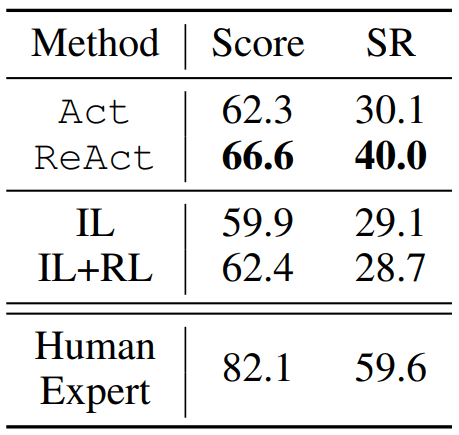

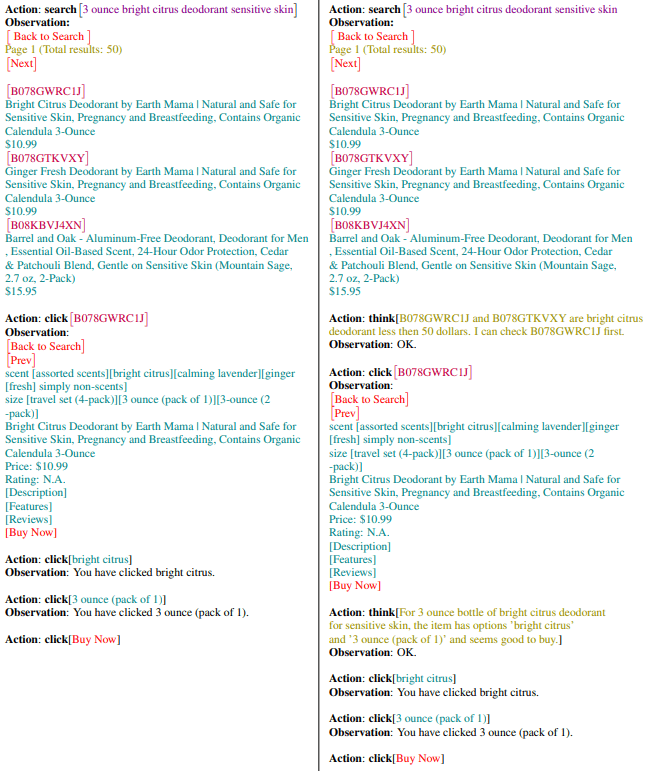

WebShop Can ReAct also interact with noisy real-world language environments in practical applications? We investigate WebShop, a recently proposed online shopping website environment with 1.18 million real-world products and 12k human instructions. Unlike ALFWorld, WebShop contains a wide variety of structured and unstructured text (e.g., product titles, descriptions, and options crawled from Amazon). It requires an agent to purchase a product according to a user instruction (e.g., "I am looking for a nightstand with drawers. It should have a nickel finish and cost less than $140"). The agent does so through web interactions, such as searching for "nightstand drawers" and selecting buttons like "Color: Modern Nickel White" or "Return to Search".

The task is evaluated on 500 test instructions with two metrics:

- average score: the percentage of desired attributes covered by the selected product, averaged across all episodes;
- success rate: the percentage of episodes in which the selected product meets all requirements.

Our Act prompts contain actions for searching, selecting products, choosing options, and buying. ReAct prompts additionally reason about what to explore, when to buy, and which product options are relevant to the instruction. See Table 6 for an example prompt, and Table 10 in the Appendix for model predictions. We compare against an imitation learning (IL) method trained on 1,012 human-annotated trajectories, and an imitation plus reinforcement learning (IL + RL) method additionally trained on 10,587 training instructions.
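The two WebShop metrics, as defined above, can be sketched in Python. Modeling requirements and product attributes as sets of strings is a simplification we assume here. The benchmark's own scoring is more detailed than plain attribute overlap.

```python
from typing import List, Set, Tuple

Episode = Tuple[Set[str], Set[str]]   # (required attributes, attributes of chosen product)

def episode_score(required: Set[str], chosen: Set[str]) -> float:
    """Percentage of required attributes covered by the chosen product."""
    return 100.0 * len(required & chosen) / len(required)

def webshop_metrics(episodes: List[Episode]) -> Tuple[float, float]:
    """Return (average score, success rate), both as percentages."""
    scores = [episode_score(req, chosen) for req, chosen in episodes]
    avg_score = sum(scores) / len(scores)
    success = sum(1 for req, chosen in episodes if req <= chosen)
    return avg_score, 100.0 * success / len(episodes)

required = {"nightstand", "drawers", "nickel finish", "price < 140"}
episodes = [
    (required, required | {"modern"}),          # every requirement met
    (required, {"nightstand", "drawers"}),      # half of the requirements met
]
avg_score, success_rate = webshop_metrics(episodes)   # (75.0, 50.0)
```

A partially matching product raises the average score but never counts toward the success rate, which is why the two metrics can diverge.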

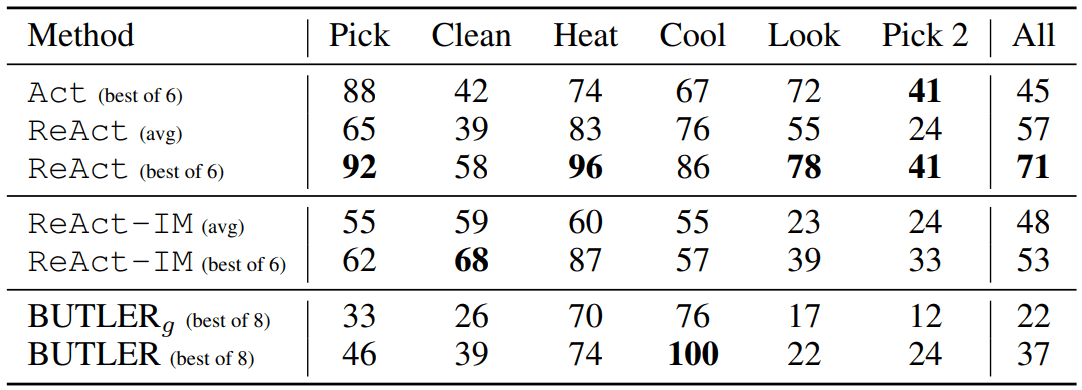

Results ReAct outperforms Act on both ALFWorld (Table 3) and WebShop (Table 4). On ALFWorld, the best ReAct trial achieves an average success rate of 71%, well above the best Act (45%) and BUTLER (37%) trials. In fact, even the worst ReAct trial (48%) beats the best trial of both other methods. Moreover, ReAct's advantage over Act holds across all six controlled trials, with relative performance gains ranging from 33% to 90% and averaging 62%. Qualitatively, we find that Act, lacking any thoughts, fails to correctly decompose goals into smaller subgoals or loses track of the current state of the environment. Example trajectories comparing ReAct and Act are in Appendix D.2.1 and Appendix D.2.2.

Table 3: ALFWorld task-specific success rates (%). BUTLER and BUTLERg results are from Table 4 of Shridhar et al. (2020b). All methods use greedy decoding, except BUTLER, which uses beam search.

Table 4: Scores and success rates (SR) on WebShop. IL and IL+RL results are taken from Yao et al. (2022).

On WebShop, one-shot Act prompting already performs on par with the IL and IL+RL methods. With additional sparse reasoning, ReAct performs significantly better, improving the previous best success rate by an absolute 10%. Inspecting examples, we find that ReAct more often identifies instruction-relevant products and options, because it reasons to bridge the gap between noisy observations and actions. For example: "For 'space-saving ottoman bench for living room', the item has options '39x18x18inch' and 'blue' and seems good to buy." However, existing methods still fall far short of expert humans (Table 4). Humans perform significantly more product exploration and query reformulation, which remains a challenge for prompting-based methods.

On the value of internal reasoning versus external feedback To the best of our knowledge, ReAct is the first demonstration of an LLM that combines reasoning and acting within a closed-loop system in an interactive environment. Perhaps the closest prior work is Inner Monologue (IM) by Huang et al. (2022b), in which an embodied agent's actions are driven by an eponymous "inner monologue". However, IM's "inner monologue" is limited to observations of the environment state and to what the agent still needs to complete to satisfy the goal. In contrast, ReAct's reasoning traces for decision making are flexible and sparse, so different tasks can elicit diverse types of reasoning (see Section 2).

To show how ReAct differs from IM, and to highlight the value of internal reasoning over simple reactions to external feedback, we ran an ablation. It uses a thought pattern made up of IM-style dense external feedback. As shown in Table 3, ReAct substantially outperforms IM-style prompting (ReAct-IM), with an overall success rate of 71% vs. 53%, and it leads on five of the six tasks. Qualitatively, ReAct-IM often erred in judging when a subgoal was complete or what the next subgoal should be, because it lacks high-level goal decomposition. In addition, many ReAct-IM trajectories struggled to determine where an item was likely to be in the ALFWorld environment, because they lack commonsense reasoning.

The ReAct paradigm addresses both of these shortcomings. More details about ReAct-IM are in Appendix B.2. Example prompts for ReAct-IM are in Appendix C.4, and example trajectories are in Appendix D.2.3.

5 Related work

Language models for reasoning Perhaps the best-known work on reasoning with large language models (LLMs) is Chain-of-Thought (CoT), which revealed that LLMs can formulate their own "thinking procedure" for problem solving. Several follow-up works have appeared since, including least-to-most prompting for complex tasks, zero-shot CoT, and reasoning with self-consistency. Recently, Madaan & Yazdanbakhsh (2022) systematically studied how CoT prompts are constructed and structured. They observed that the presence of symbols, patterns, and text is crucial to CoT's effectiveness.

Other work extends to more sophisticated reasoning architectures beyond simple prompting. For example, Selection-Inference divides the reasoning process into two steps, "selection" and "inference". STaR bootstraps the reasoning process by fine-tuning the model on correct rationales that the model itself generated. Faithful reasoning decomposes multi-step reasoning into three steps, each performed by a dedicated LM. Similar approaches, such as Scratchpad, fine-tune an LM on intermediate computation steps and also show improvements on multi-step computation problems.

In contrast to these methods, ReAct does more than isolated, fixed reasoning. It integrates model actions and their corresponding observations into a single coherent input stream. This lets the model reason more accurately and tackle tasks beyond reasoning (e.g., interactive decision making).

Language models for decision making The strong capabilities of LLMs have enabled them to perform tasks beyond language generation. Using LLMs as policy models for decision making is becoming increasingly popular, especially in interactive environments. WebGPT uses an LM to interact with web browsers, navigate web pages, and infer answers to complex questions from ELI5. Unlike ReAct, WebGPT does not explicitly model the thinking and reasoning procedure, and instead relies on expensive human feedback for reinforcement learning.

In dialogue modeling, chatbots such as BlenderBot and Sparrow, and task-oriented dialogue systems such as SimpleTOD, also train LMs to make decisions about API calls. Unlike ReAct, they do not explicitly consider the reasoning procedure either. They also rely on expensive datasets and collections of human feedback for policy learning. ReAct, by contrast, learns a policy far more cheaply, since the decision-making process only requires a language description of the reasoning procedure.

LLMs are also increasingly used for planning and decision making in interactive and embodied environments. Perhaps most relevant to ReAct in this respect are SayCan and Inner Monologue, which use LLMs for robotic action planning and decision making. In SayCan, the LLM is prompted to directly predict the actions a robot could take, which are then reranked by an affordance model grounded in the visual environment to produce the final prediction. Inner Monologue adds further improvements through its eponymous "inner monologue", implemented as feedback injected from the environment. To our knowledge, Inner Monologue is the first work to demonstrate such a closed-loop system, and ReAct builds on it. However, we argue that Inner Monologue does not truly contain inner thoughts; this is elaborated in Section 4. We also note that using language as a semantically rich input in interactive decision making has proven successful in other settings. It is becoming increasingly clear that, with the help of LLMs, language as a fundamental cognitive mechanism will play a key role in interaction and decision making. Moreover, progress in LLMs has inspired the development of versatile, generalist agents such as that of Reed et al.
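As a rough illustration of the SayCan-style reranking described above, the sketch below combines a language-model score for each candidate skill with an affordance score and picks the best. Multiplying the two scores is an assumption made for this sketch, and the skill names and numbers are invented for illustration; none of them come from SayCan or from this paper.

```python
from typing import Dict


def select_skill(lm_scores: Dict[str, float],
                 affordance: Dict[str, float]) -> str:
    """Pick the skill with the highest combined score.

    lm_scores:  assumed probability that a skill is a useful next step
    affordance: assumed probability that the skill can succeed in the
                current state; skills without an estimate get 0.0
    The product rule is an assumption made for this sketch.
    """
    combined = {skill: p * affordance.get(skill, 0.0)
                for skill, p in lm_scores.items()}
    return max(combined, key=lambda skill: combined[skill])


# Illustrative numbers only: the LM prefers the sponge, but it is not
# reachable in the current scene, so reranking selects another skill.
lm = {"pick up sponge": 0.6, "go to counter": 0.3, "pick up apple": 0.1}
can = {"pick up sponge": 0.05, "go to counter": 0.9, "pick up apple": 0.8}
print(select_skill(lm, can))  # go to counter
```

The point of the example is the division of labor: the language model proposes, the grounded model filters. Neither score alone contains reasoning traces, which is the gap ReAct targets.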

6 Conclusion

We present ReAct, a simple yet effective approach for synergizing reasoning and acting in large language models. Through a diverse set of experiments on multi-hop question answering, fact checking, and interactive decision-making tasks, we show that ReAct leads to superior performance with interpretable decision traces. Despite the simplicity of our approach, complex tasks with large action spaces require more demonstrations to learn well, which can easily exceed the input length limit of in-context learning. We explored fine-tuning on HotpotQA with encouraging initial results, but learning from more high-quality human annotations will be needed to further improve performance. Scaling up ReAct with multi-task training and combining it with complementary paradigms such as reinforcement learning could yield stronger agents that further unlock the potential of LLMs for more applications.

A Additional results

A.1 GPT-3 experiments

Table 5: ReAct prompting results using PaLM-540B vs. GPT-3 (text-davinci-002, greedy decoding). On HotpotQA, we randomly sampled a subset of 500 validation questions. On ALFWorld, we used all 134 unseen validation task instances, with the best prompt set selected according to PaLM-540B.

We ran additional GPT-3 (Brown et al., 2020) experiments to confirm that ReAct prompting performance generalizes across different large language models. As shown in Table 5, GPT-3 (text-davinci-002, greedy decoding) consistently outperforms PaLM-540B on HotpotQA and ALFWorld, possibly because it has been fine-tuned to follow human instructions. This indicates that ReAct prompting is effective across different large language models on different tasks. The code for these experiments is available at https://react-lm.github.io/.

A.2 ReAct obtains up-to-date knowledge on HotpotQA

Figure 4: Another example HotpotQA question where the original label is outdated. Only ReAct is able to obtain the up-to-date answer, thanks to real-world web interaction combined with reasoning.

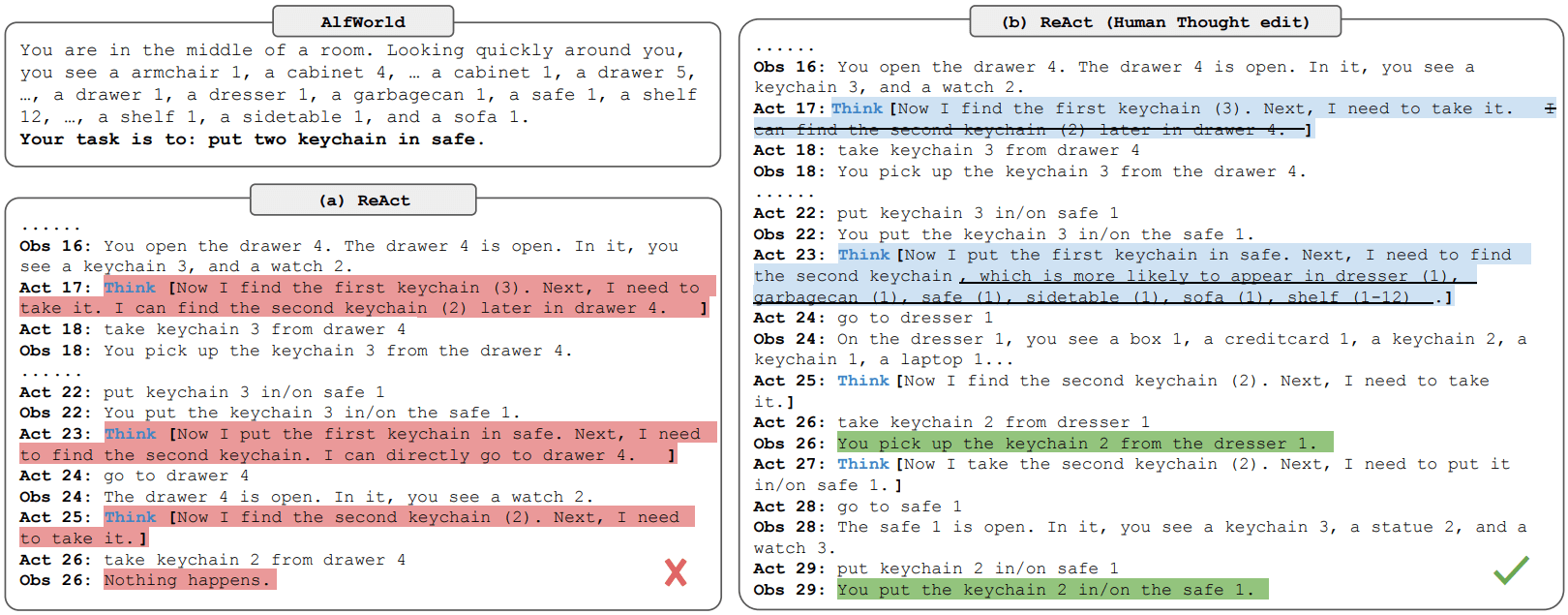

A.3 Human-in-the-loop behavior correction on ALFWorld

We also explored human-in-the-loop interaction with ReAct, allowing a human to inspect and edit ReAct's reasoning traces. Figure 5 shows that by simply removing a hallucinated sentence in Act 17 and adding a few hints in Act 23, ReAct can be made to change its behavior drastically, align with these human thought edits, and succeed at the task. From a human perspective, solving such a task becomes much easier: instead of typing dozens of actions, one only edits a couple of thoughts, which enables a new form of human-machine collaboration. We note that such on-the-go policy editing is difficult for Act and previous RL methods, because a human cannot change the model parameters, and changing a few actions may not change the rest of the model's behavior. This paradigm also goes beyond human dialogue that updates the goal or subgoal, as in Huang et al. (2022b): while editing ReAct thoughts can do this, it can also modify the model's internal beliefs, its reasoning style, or anything else the flexible thought space supports, for better task solving. We believe this is an exciting direction for human alignment and leave more systematic study to future work.

Figure 5: Examples of human-in-the-loop behavior correction with ReAct on ALFWorld. (a) The ReAct trajectory fails due to a hallucinated thought (Act 17). (b) After a human simply edits two thoughts (Act 17, 23), the ReAct trajectory produces the desired reasoning traces and actions and succeeds.
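One way to picture the edit in Figure 5: treat the trajectory as a list of steps in which thoughts are themselves `think:` actions (an ALFWorld-style convention), let a person rewrite one step, and discard every later step so that the agent regenerates them from the corrected prefix. The helpers `edit_step` and `remove_sentence`, the truncation policy, and the example trajectory are all hypothetical; the text above does not specify how an edited trajectory is resumed.

```python
from typing import List


def edit_step(trajectory: List[str], step: int, new_text: str) -> List[str]:
    """Replace 1-based `step` with `new_text` and drop all later steps.

    Later steps were generated from the old text, so (under this sketch's
    assumption) they are discarded and the agent regenerates them from the
    edited prefix. The input list is not modified.
    """
    if not 1 <= step <= len(trajectory):
        raise IndexError(f"step {step} is outside 1..{len(trajectory)}")
    return trajectory[:step - 1] + [new_text]


def remove_sentence(thought: str, sentence: str) -> str:
    """Delete one sentence (e.g. a hallucinated claim) from a thought."""
    return " ".join(thought.replace(sentence, "").split())


# Hypothetical ALFWorld-style trajectory; thoughts are "think:" actions.
trajectory = [
    "think: To solve the task, I need to find a knife.",
    "go to countertop 1",
    "think: The knife is in drawer 3. Next, I need to take it.",
    "open drawer 3",
]
fixed_thought = remove_sentence(trajectory[2], "The knife is in drawer 3.")
edited = edit_step(trajectory, 3, fixed_thought)
print(edited[-1])  # think: Next, I need to take it.
```

A second edit (as at Act 23) would simply be another `edit_step` call on the regenerated trajectory; the human touches only text, never model parameters.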

B Experimental details

B.1 HotpotQA fine-tuning details

For all fine-tuning we use a batch size of 64. On PaLM-8B, we fine-tune the ReAct and Act methods for 4,000 steps and the Standard and CoT methods for 2,000 steps. On PaLM-62B, we fine-tune the ReAct and Act methods for 4,000 steps and the Standard and CoT methods for 1,000 steps. We find that the ReAct and Act methods generally benefit from more training steps (and more training data), while the Standard and CoT methods begin to degrade soon after fine-tuning.

B.2 ALFWorld IM-style details

For the IM-style ablation, the same expert trajectories used in ReAct were re-annotated with dense external-feedback thoughts, which limit ReAct-IM to thinking only about (1) decomposing the current goal and (2) the current subgoal that needs to be completed. In particular, ReAct-IM lacks thoughts that (1) determine when a subgoal is completed, (2) determine what the next subgoal should be, and (3) induce the LLM to draw on its internal pretrained knowledge to identify where items might be located in the environment.

C Prompts

C.1 HotpotQA

HotpotQA Prompts

| Original | |

| Question | Answer |

| What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into? | 1,800 to 7,000 ft |

| Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who? | Richard Nixon |

| Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture? | The Saimaa Gesture |

| What profession do Nicholas Ray and Elia Kazan have in common? | Director, screenwriter, actor |

| Which magazine was started first, Arthur's Magazine or First for Women? | Arthur's Magazine |

| Are Pavel Urysohn and Leonid Levin known for the same type of work? | Yes |

| Original | |

| Question | Answer |

| What is the elevation range of the area into which the eastern sector of the Colorado orogeny extends? | 1,800 to 7,000 ft |

| Musician and satirist Allie Goertz wrote a song about the Simpsons character Milhouse; after whom did Matt Groening name him? | Richard Nixon |

| Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture? | The Saimaa Gesture |

| What careers do Nicholas Ray and Elia Kazan have in common? | Director, screenwriter, actor |

| Which magazine was founded earlier, Arthur's Magazine or First for Women? | Arthur's Magazine |

| Are Pavel Urysohn and Leonid Levin known for the same type of work? | Yes |

| Act | ||

| Question | Action | Observation |

| What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into? | Search[Colorado orogeny] | The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas. |

| | Lookup[eastern sector] | (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny. |

| | Search[High Plains] | High Plains refers to one of two distinct land regions. |

| | Search[High Plains (United States)] | The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3] |

| | Finish[1,800 to 7,000 ft] | |

| Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who? | Search[Milhouse] | Milhouse Mussolini Van Houten is a recurring character in the Fox animated television series The Simpsons voiced by Pamela Hayden and created by Matt Groening. |

| | Lookup[named after] | (Result 1 / 1) Milhouse was named after U.S. president Richard Nixon, whose middle name was Milhous. |

| | Finish[Richard Nixon] | |

| Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture? | Search[Adam Clayton Powell] | Could not find [Adam Clayton Powell]. Similar: ['Adam Clayton Powell III', 'Seventh Avenue (Manhattan)', 'Adam Clayton Powell Jr. State Office Building', 'Isabel Washington Powell', 'Adam Powell', 'Adam Clayton Powell (film)', 'Giancarlo Esposito']. |

| | Search[Adam Clayton Powell (film)] | Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film is about the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It was later aired as part of the PBS series The American Experience. |

| | Finish[The Saimaa Gesture] | |

| What profession does Nicholas Ray and Elia Kazan have in common? | Search[Nicholas Ray] | Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 - June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause. |

| | Search[Elia Kazan] | Elia Kazan was an American film and theater director, producer, screenwriter and actor. |

| | Finish[director, screenwriter, actor] | |

| Which magazine was started first Arthur's Magazine or First for Women? | Search[Arthur's Magazine] | Arthur's Magazine (1844-1846) was an American literary periodical published in Philadelphia in the 19th century. |

| | Search[First for Women] | First for Women is a woman's magazine published by Bauer Media Group in the USA.[1] The magazine was started in 1989. |

| | Finish[Arthur's Magazine] | |

| Were Pavel Urysohn and Leonid Levin known for the same type of work? | Search[Pavel Urysohn] | Pavel Samuilovich Urysohn (February 3, 1898 - August 17, 1924) was a Soviet mathematician who is best known for his contributions in dimension theory. |

| | Search[Leonid Levin] | Leonid Anatolievich Levin is a Soviet-American mathematician and computer scientist. |

| | Finish[yes] | |

| Act | ||

| Question | Action | Observation |

| What is the elevation range of the area into which the eastern sector of the Colorado orogeny extends? | Search[Colorado orogeny] | The Colorado orogeny was an episode of mountain building (an orogeny) that occurred in and around Colorado. |

| | Lookup[eastern sector] | (Result 1 / 1) The eastern sector extends into the High Plains and is known as the Central Plains orogeny. |

| | Search[High Plains] | High Plains refers to one of two distinct land regions. |

| | Search[High Plains (United States)] | The High Plains is a subregion of the Great Plains. From east to west, the elevation of the High Plains rises from about 1,800 feet (550 m) to 7,000 feet (2,130 m).[3] |

| | Finish[1,800 to 7,000 feet] | |

| Musician and satirist Allie Goertz wrote a song about the Simpsons character Milhouse; after whom did Matt Groening name the character? | Search[Milhouse] | Milhouse Mussolini Van Houten is a recurring character on the Fox animated television series The Simpsons, voiced by Pamela Hayden and created by Matt Groening. |

| | Lookup[named after] | (Result 1 / 1) Milhouse was named after Richard Nixon, President of the United States, whose middle name was Milhous. |

| | Finish[Richard Nixon] | |

| Which documentary is about a Finnish rock band, Adam Clayton Powell or The Saimaa Gesture? | Search[Adam Clayton Powell] | Could not find [Adam Clayton Powell]. Similar: ['Adam Clayton Powell III', 'Seventh Avenue (Manhattan)', 'Adam Clayton Powell Jr. State Office Building', 'Isabel Washington Powell', 'Adam Powell', 'Adam Clayton Powell (film)', 'Giancarlo Esposito']. |

| | Search[Adam Clayton Powell (film)] | Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film follows the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It later aired as part of the PBS series The American Experience. |

| | Finish[The Saimaa Gesture] | |

| What careers do Nicholas Ray and Elia Kazan have in common? | Search[Nicholas Ray] | Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 - June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause. |

| | Search[Elia Kazan] | Elia Kazan was an American film and theater director, producer, screenwriter and actor. |

| | Finish[director, screenwriter, actor] | |

| Which magazine was started first, Arthur's Magazine or First for Women? | Search[Arthur's Magazine] | Arthur's Magazine (1844-1846) was an American literary journal published in Philadelphia, USA, in the 19th century. |

| | Search[First for Women] | First for Women is a women's magazine published in the United States by Bauer Media Group.[1] It was founded in 1989. |

| | Finish[Arthur's Magazine] | |

| Are Pavel Urysohn and Leonid Levin known for the same type of work? | Search[Pavel Urysohn] | Pavel Samuilovich Urysohn (February 3, 1898 - August 17, 1924) was a Soviet mathematician best known for his contributions to dimension theory. |

| | Search[Leonid Levin] | Leonid Anatolievich Levin is a Soviet-American mathematician and computer scientist. |

| | Finish[yes] | |
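The `Lookup[...]` observations above carry a `(Result i / n)` prefix: the i-th sentence of the current page that contains the keyword, out of n matching sentences. Below is a minimal sketch of such a Ctrl+F-style tool. It assumes naive sentence splitting and case-insensitive matching, the "No more results." message is our own wording, and the page text in the usage example is a toy; this is not the authors' Wikipedia wrapper.

```python
import re
from typing import List, Optional


class PageLookup:
    """Ctrl+F-style lookup over the sentences of one retrieved page."""

    def __init__(self, page_text: str) -> None:
        # Naive sentence splitter (assumption): break after . ! or ?
        parts = re.split(r"(?<=[.!?])\s+", page_text)
        self.sentences: List[str] = [p.strip() for p in parts if p.strip()]
        self.keyword: Optional[str] = None
        self.matches: List[str] = []
        self.cursor = 0

    def lookup(self, keyword: str) -> str:
        key = keyword.lower()
        if key != self.keyword:
            # New keyword: collect matching sentences and restart.
            self.keyword = key
            self.matches = [s for s in self.sentences if key in s.lower()]
            self.cursor = 0
        if self.cursor >= len(self.matches):
            return "No more results."  # wording is an assumption
        self.cursor += 1
        sentence = self.matches[self.cursor - 1]
        return f"(Result {self.cursor} / {len(self.matches)}) {sentence}"


page = PageLookup(
    "The Colorado orogeny was an episode of mountain building. "
    "The eastern sector extends into the High Plains and is called "
    "the Central Plains orogeny. This toy sentence mentions a second sector."
)
print(page.lookup("eastern sector"))
```

Repeating the same keyword advances to the next match, which is why a trajectory can call `Lookup` again instead of re-reading the whole page.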

| CoT | ||

| Question | Thought | Answer |

| What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into? | Let's think step by step. The eastern sector of Colorado orogeny extends into the High Plains. High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft. | 1,800 to 7,000 ft |

| Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who? | Let's think step by step. Milhouse was named after U.S. president Richard Nixon, so the answer is Richard Nixon. | Richard Nixon |

| Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture? | Let's think step by step. Adam Clayton Powell (film) is a documentary about an African-American politician, not Finnish rock groups. So the documentary about Finnish rock groups must instead be The Saimaa Gesture. | The Saimaa Gesture |

| What profession does Nicholas Ray and Elia Kazan have in common? | Let's think step by step. Professions of Nicholas Ray are director, screenwriter, and actor. Professions of Elia Kazan are director, producer, screenwriter, and actor. So profession Nicholas Ray and Elia Kazan have in common is director, screenwriter, and actor. | director, screenwriter, actor |

| Which magazine was started first Arthur's Magazine or First for Women? | Let's think step by step. Arthur's Magazine was started in 1844. First for Women was started in 1989. 1844 (Arthur's Magazine) < 1989 (First for Women), so Arthur's Magazine was started first. | Arthur's Magazine |

| Were Pavel Urysohn and Leonid Levin known for the same type of work? | Let's think step by step. Pavel Urysohn is a mathematician. Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work. | Yes |

| CoT | ||

| Question | Thought | Answer |

| What is the elevation range of the area into which the eastern sector of the Colorado orogeny extends? | Let's consider this step by step. The eastern sector of the Colorado orogeny extends into the High Plains. The elevation of the High Plains rises from about 1,800 feet to 7,000 feet, so the answer is 1,800 to 7,000 feet. | 1,800 to 7,000 feet |

| Musician and satirist Allie Goertz wrote a song about the Simpsons character Milhouse; after whom did Matt Groening name the character? | Let's consider this step by step. Milhouse is named after Richard Nixon, President of the United States, so the answer is Richard Nixon. | Richard Nixon |

| Which documentary is about a Finnish rock band, Adam Clayton Powell or The Saimaa Gesture? | Let's consider it step by step. Adam Clayton Powell (the film) is a documentary about an African-American politician, not about a Finnish rock band. So the documentary about a Finnish rock band must be The Saimaa Gesture. | The Saimaa Gesture |

| What careers do Nicholas Ray and Elia Kazan have in common? | Let's consider it step by step. Nicholas Ray's occupations are director, screenwriter and actor. Elia Kazan's occupations are director, producer, screenwriter and actor. So Nicholas Ray and Elia Kazan share the professions of director, screenwriter and actor. | Director, screenwriter, actor |

| Which magazine was started first, Arthur's Magazine or First for Women? | Let's consider this step by step. Arthur's Magazine was founded in 1844. First for Women was founded in 1989. 1844 (Arthur's Magazine) < 1989 (First for Women), so Arthur's Magazine was founded first. | Arthur's Magazine |

| Are Pavel Urysohn and Leonid Levin known for the same type of work? | Let's consider this step by step. Pavel Urysohn is a mathematician. Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work. | Yes |
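Several CoT exemplars above close their thought with "so the answer is X", while others state the conclusion in their own words and rely on the separate Answer field. If a model trained on such prompts emits rationale and answer in one string, a small parser is needed. The sketch below assumes the "so the answer is" convention and returns `None` when it is absent, leaving the fallback (for example, reading a separate answer line) to the caller; the function name and behavior are our assumptions.

```python
import re
from typing import Optional

# Matches the closing convention used by several exemplars above.
ANSWER_RE = re.compile(r"so the answer is\s+(.+?)\.?\s*$", re.IGNORECASE)


def extract_answer(thought: str) -> Optional[str]:
    """Return the text after "so the answer is", or None if absent."""
    match = ANSWER_RE.search(thought.strip())
    return match.group(1).strip() if match else None


print(extract_answer(
    "Let's think step by step. Milhouse was named after U.S. president "
    "Richard Nixon, so the answer is Richard Nixon."))  # Richard Nixon
```

The non-greedy group plus the optional trailing period keeps answers such as "1,800 to 7,000 ft" intact while dropping the sentence-final full stop.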

| ReAct | |||

| Question | Thought | Action | Observation |

| What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into? | I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area. | Search[Colorado orogeny] | The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas. |

| | It does not mention the eastern sector. So I need to look up eastern sector. | Lookup[eastern sector] | (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny. |

| | The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range. | Search[High Plains] | High Plains refers to one of two distinct land regions. |

| | I need to instead search High Plains (United States). | Search[High Plains (United States)] | The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3] |

| | High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft. | Finish[1,800 to 7,000 ft] | |

| Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who? | The question simplifies to "The Simpsons" character Milhouse is named after who. I only need to search Milhouse and find who it is named after. | Search[Milhouse] | Milhouse Mussolini Van Houten is a recurring character in the Fox animated television series The Simpsons voiced by Pamela Hayden and created by Matt Groening. |

| | The paragraph does not tell who Milhouse is named after, maybe I can look up "named after". | Lookup[named after] | (Result 1 / 1) Milhouse was named after U.S. president Richard Nixon, whose middle name was Milhous. |

| | Milhouse was named after U.S. president Richard Nixon, so the answer is Richard Nixon. | Finish[Richard Nixon] | |

| Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture? | I need to search Adam Clayton Powell and The Saimaa Gesture, and find which documentary is about Finnish rock groups. | Search[Adam Clayton Powell] | Could not find [Adam Clayton Powell]. Similar: ['Adam Clayton Powell III', 'Seventh Avenue (Manhattan)', 'Adam Clayton Powell Jr. State Office Building', 'Isabel Washington Powell', 'Adam Powell', 'Adam Clayton Powell (film)', 'Giancarlo Esposito']. |

| | To find the documentary, I can search Adam Clayton Powell (film). | Search[Adam Clayton Powell (film)] | Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film is about the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It was later aired as part of the PBS series The American Experience. |

| | Adam Clayton Powell (film) is a documentary about an African-American politician, not Finnish rock groups. So the documentary about Finnish rock groups must instead be The Saimaa Gesture. | Finish[The Saimaa Gesture] | |

| What profession does Nicholas Ray and Elia Kazan have in common? | I need to search Nicholas Ray and Elia Kazan, find their professions, then find the profession they have in common. | Search[Nicholas Ray] | Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 - June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause. |

| | Professions of Nicholas Ray are director, screenwriter, and actor. I need to search Elia Kazan next and find his professions. | Search[Elia Kazan] | Elia Kazan was an American film and theater director, producer, screenwriter and actor. |

| | Professions of Elia Kazan are director, producer, screenwriter, and actor. So profession Nicholas Ray and Elia Kazan have in common is director, screenwriter, and actor. | Finish[director, screenwriter, actor] | |

| Which magazine was started first Arthur's Magazine or First for Women? | I need to search Arthur's Magazine and First for Women, and find which was started first. | Search[Arthur's Magazine] | Arthur's Magazine (1844-1846) was an American literary periodical published in Philadelphia in the 19th century. |

| | Arthur's Magazine was started in 1844. I need to search First for Women next. | Search[First for Women] | First for Women is a woman's magazine published by Bauer Media Group in the USA.[1] The magazine was started in 1989. |

| | First for Women was started in 1989. 1844 (Arthur's Magazine) < 1989 (First for Women), so Arthur's Magazine was started first. | Finish[Arthur's Magazine] | |

| Were Pavel Urysohn and Leonid Levin known for the same type of work? | I need to search Pavel Urysohn and Leonid Levin, find their types of work, then find if they are the same. | Search[Pavel Urysohn] | Pavel Samuilovich Urysohn (February 3, 1898 - August 17, 1924) was a Soviet mathematician who is best known for his contributions in dimension theory. |

| | Pavel Urysohn is a mathematician. I need to search Leonid Levin next and find its type of work. | Search[Leonid Levin] | Leonid Anatolievich Levin is a Soviet-American mathematician and computer scientist. |

| | Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work. | Finish[yes] | |

| ReAct | | |

| Question | Thought | Action |

| What is the elevation range of the region into which the eastern sector of the Colorado orogeny extends? | I need to search the Colorado orogeny, find the area that the eastern sector extends into, and then find the elevation range of that area. | Search[Colorado orogeny] |

| | There is no mention of the eastern sector. So I need to look up the eastern sector. | Lookup[eastern sector] |

| | The eastern sector of the Colorado orogeny extends into the High Plains. So I need to search the High Plains and find its elevation range. | Search[High Plains] |

| | The elevation of the High Plains rises from about 1,800 feet to 7,000 feet, so the answer is 1,800 to 7,000 feet. | Finish[1,800 to 7,000 feet] |

| Musician and satirist Allie Goertz wrote a song about the character Milhouse in "The Simpsons"; after whom did Matt Groening name Milhouse? | The question simplifies to who the "The Simpsons" character Milhouse is named after. I just need to search for Milhouse and find out who it is named after. | Search[Milhouse] |

| | The paragraph doesn't say who Milhouse is named after, so maybe I can look up "named after". | Lookup[named after] |

| | Milhouse is named after Richard Nixon, the President of the United States, so the answer is Richard Nixon. | Finish[Richard Nixon] |

| Which documentary is about a Finnish rock band, Adam Clayton Powell or The Saimaa Gesture? | I need to search for Adam Clayton Powell and The Saimaa Gesture and find out which documentary is about a Finnish rock band. | Search[Adam Clayton Powell] |

| | To find the documentary, I can search for Adam Clayton Powell (film). | Search[Adam Clayton Powell (film)] |

| | Adam Clayton Powell (film) is a documentary about an African-American politician, not about a Finnish rock band. So the documentary about a Finnish rock band must be The Saimaa Gesture. | Finish[The Saimaa Gesture] |

| What careers do Nicholas Ray and Elia Kazan have in common? | I need to search for Nicholas Ray and Elia Kazan, find their occupations, and then find out what occupations they have in common. | Search[Nicholas Ray] |

| | Nicholas Ray's occupations were director, screenwriter and actor. I next need to search for Elia Kazan and find his occupations. | Search[Elia Kazan] |

| | Elia Kazan's occupations are director, producer, screenwriter and actor. So Nicholas Ray and Elia Kazan share the professions of director, screenwriter and actor. | Finish[director, screenwriter, actor] |

| Which magazine was started first, Arthur's Magazine or First for Women? | I need to search for Arthur's Magazine and First for Women and find out which one started first. | Search[Arthur's Magazine] |

| | Arthur's Magazine was founded in 1844. I need to search First for Women next. | Search[First for Women] |

| | First for Women was founded in 1989. 1844 (Arthur's Magazine) < 1989 (First for Women), so Arthur's Magazine was founded first. | Finish[Arthur's Magazine] |

| Are Pavel Urysohn and Leonid Levin known for the same type of work? | I need to search for Pavel Urysohn and Leonid Levin to find out what type of work they do and then find out if they are the same. | Search[Pavel Urysohn] |

| | Pavel Urysohn is a mathematician. I next need to search for Leonid Levin and find out what type of work he does. | Search[Leonid Levin] |

| | Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work. | Finish[yes] |
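The ReAct exemplars above are laid out in columns; to serve as few-shot context they have to be serialized into prompt text. The sketch below assumes a numbered `Thought i` / `Action i` / `Observation i` layout (the exact wording of the authors' prompt files may differ) and shows that the final `Finish[...]` step carries no observation. The `Step` alias and `format_exemplar` helper are hypothetical names.

```python
from typing import List, Optional, Tuple

# One ReAct step: (thought, action, observation); Finish has none.
Step = Tuple[str, str, Optional[str]]


def format_exemplar(question: str, steps: List[Step]) -> str:
    """Serialize a tabular ReAct exemplar into numbered prompt text."""
    lines = [f"Question: {question}"]
    for i, (thought, action, observation) in enumerate(steps, start=1):
        lines.append(f"Thought {i}: {thought}")
        lines.append(f"Action {i}: {action}")
        if observation is not None:
            lines.append(f"Observation {i}: {observation}")
    return "\n".join(lines)


exemplar = format_exemplar(
    'Musician and satirist Allie Goertz wrote a song about the "The Simpsons" '
    "character Milhouse, who Matt Groening named after who?",
    [
        ('The question simplifies to "The Simpsons" character Milhouse is '
         "named after who. I only need to search Milhouse and find who it "
         "is named after.",
         "Search[Milhouse]",
         "Milhouse Mussolini Van Houten is a recurring character in the Fox "
         "animated television series The Simpsons voiced by Pamela Hayden "
         "and created by Matt Groening."),
        ("The paragraph does not tell who Milhouse is named after, maybe I "
         'can look up "named after".',
         "Lookup[named after]",
         "(Result 1 / 1) Milhouse was named after U.S. president Richard "
         "Nixon, whose middle name was Milhous."),
        ("Milhouse was named after U.S. president Richard Nixon, so the "
         "answer is Richard Nixon.",
         "Finish[Richard Nixon]",
         None),
    ],
)
print(exemplar)
```

Dropping the Observation column from a row in this layout would yield an Act-style or CoT-style exemplar instead, which is one convenient way to build the ablation prompts from a single source table.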

C.2 FEVER (Fact Extraction and VERification)

FEVER Prompts

| Original | |

| Question: Determine if there is Observation that SUPPORTS or REFUTES a Claim, or if there is NOT ENOUGH INFORMATION. | |

| Claim | Answer |

| Nikolaj Coster-Waldau worked with the Fox Broadcasting Company. | SUPPORTS |

| Stranger Things is set in Bloomington, Indiana. | REFUTES |

| Beautiful reached number two on the Billboard Hot 100 in 2003. | NOT ENOUGH INFO |

| Original | |

| Question: Determine whether there are observations that support or refute the claim, or whether there is insufficient information. | |

| Claim | Answer |

| Nikolaj Coster-Waldau has worked with the Fox Broadcasting Company. | SUPPORTS |

| Stranger Things is set in Bloomington, Indiana. | REFUTES |

| The song "Beautiful" reached number two on the Billboard Hot 100 in 2003. | NOT ENOUGH INFO |

| Act | ||

| Question: Determine if there is Observation that SUPPORTS or REFUTES a Claim, or if there is NOT ENOUGH INFORMATION. | ||

| Claim | Action | Observation |

| Nikolaj Coster-Waldau worked with the Fox Broadcasting Company. | Search[Nikolaj Coster-Waldau] | Nikolaj William Coster-Waldau (born 27 July 1970) is a Danish actor and producer. He graduated from the Danish National School of Performing Arts in Copenhagen in 1993,[1] and had his breakthrough role in Denmark with the film Nightwatch (1994). He played Jaime Lannister in the HBO fantasy drama series Game of Thrones, for which he received two Primetime Emmy Award nominations for Outstanding Supporting Actor in a Drama Series. Coster-Waldau has appeared in numerous films in his native Denmark and Scandinavia, including Headhunters (2011) and A Thousand Times Good Night (2013). In the U.S., his debut film role was in the war film Black Hawk Down (2001), playing Medal of Honor recipient Gary Gordon.[2] He then played a detective in the short-lived Fox television series New Amsterdam (2008), and appeared in the 2009 Fox television film Virtuality, originally intended as a pilot. |

| | Finish[SUPPORTS] | |

| Stranger Things is set in Bloomington, Indiana. | Search[Stranger Things] | Stranger Things is an American science fiction horror drama television series created by the Duffer Brothers. Set in the 1980s, primarily in the fictional town of Hawkins, Indiana, the series centers on a number of mysteries and supernatural events occurring around the town and their impact on an ensemble of child and adult characters. |

| | Finish[REFUTES] | |

| Beautiful reached number two on the Billboard Hot 100 in 2003. | Search[Beautiful] | Could not find [Beautiful]. Similar: ['Beautiful', 'Beautiful, Beautiful', 'A Beautiful Mind (film)', 'Beautiful (Christina Aguilera song)', 'Life Is Beautiful']. |

| | Search[Beautiful (Christina Aguilera song)] | "Beautiful" is a song recorded by American singer Christina Aguilera for her fourth studio album, Stripped (2002). |

| | Lookup[Billboard Hot 100] | (Result 1 / 3) The song peaked at number two on the Billboard Hot 100 in the United States, where it was certified Gold for 500,000 units shipped. |

| | Finish[NOT ENOUGH INFO] | |

| Act | ||

| Question: Determine whether an observation supports or refutes the claim, or whether there is insufficient information. | ||

| Claim | Action | Observation |

| Nikolaj Coster-Waldau has worked with the Fox Broadcasting Company. | Search[Nikolaj Coster-Waldau] | Nikolaj William Coster-Waldau (born July 27, 1970) is a Danish actor and producer. He graduated from the Danish National School of Performing Arts in Copenhagen in 1993,[1] and landed his breakthrough role in Denmark with the film Nightwatch (1994). He received two Primetime Emmy Award nominations for Outstanding Supporting Actor in a Drama Series for his portrayal of Jaime Lannister in the HBO fantasy series Game of Thrones. Coster-Waldau has appeared in numerous films in his native Denmark and Scandinavia, including Headhunters (2011) and A Thousand Times Good Night (2013). In the United States, his first film role was as Medal of Honor recipient Gary Gordon in the war film Black Hawk Down (2001).[2] He also played a detective in the short-lived Fox television series New Amsterdam (2008) and appeared in the 2009 Fox television movie Virtuality, which was originally intended as a pilot episode. |

| | Finish[SUPPORTS] | |

| Stranger Things is set in Bloomington, Indiana. | Search[Stranger Things] | Stranger Things is an American science fiction horror drama series created by the Duffer Brothers. Set in the 1980s, the episodes take place primarily in the fictional town of Hawkins, Indiana, and center on a series of mysterious and supernatural events that occur in the town and their effects on a group of child and adult characters. |

| | Finish[REFUTES] | |

| "Beautiful" reached number two on the Billboard Hot 100 in 2003. | Search[Beautiful] | Could not find [Beautiful]. Similar: ['Beautiful', 'Beautiful, Beautiful', 'A Beautiful Mind (film)', 'Beautiful (Christina Aguilera song)', 'Life Is Beautiful']. |

| | Search[Beautiful (Christina Aguilera song)] | "Beautiful" is a song recorded by American singer Christina Aguilera for her fourth studio album, Stripped, released in 2002. |

| | Lookup[Billboard Hot 100] | (Result 1 / 3) The song reached number two on the Billboard Hot 100 in the United States and was certified Gold for 500,000 units shipped. |

| | Finish[NOT ENOUGH INFO] | |

CoT

Question: Determine if there is Observation that SUPPORTS or REFUTES a Claim, or if there is NOT ENOUGH INFORMATION.

| Claim | Thought | Answer |
|---|---|---|
| Nikolaj Coster-Waldau worked with the Fox Broadcasting Company. | Nikolaj William Coster-Waldau appeared in the 2009 Fox television film Virtuality, so he has worked with the Fox Broadcasting Company. | SUPPORTS |
| Stranger Things is set in Bloomington, Indiana. | Stranger Things is in the fictional town of Hawkins, Indiana, not in Bloomington, Indiana. | REFUTES |
| Beautiful reached number two on the Billboard Hot 100 in 2003. | The song peaked at number two on the Billboard Hot 100 in the United States, but not sure if it was in 2003. | NOT ENOUGH INFO |
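In the CoT prompt the model writes one thought and then commits to a label, with no tool calls in between, so scoring a completion reduces to reading off that final label. A minimal sketch, assuming the completion follows the `Thought: ... Answer: LABEL` layout of the rows above; `parse_cot_answer` is a hypothetical helper, not part of the paper's released code.

```python
import re
from typing import Optional

# The three FEVER labels used in the prompts above.
LABELS = ("SUPPORTS", "REFUTES", "NOT ENOUGH INFO")


def parse_cot_answer(completion: str) -> Optional[str]:
    """Return the label after the last 'Answer:' in a CoT completion, or None."""
    answers = re.findall(r"Answer:\s*(.+)", completion)  # '.' stops at end of line
    if not answers:
        return None
    label = answers[-1].strip().rstrip(".").upper()
    # Off-format answers are rejected rather than guessed.
    return label if label in LABELS else None


completion = (
    "Thought: Stranger Things is in the fictional town of Hawkins, Indiana, "
    "not in Bloomington, Indiana.\nAnswer: REFUTES"
)
print(parse_cot_answer(completion))  # REFUTES
```

Returning `None` for anything outside the three labels lets an evaluation harness count malformed answers as wrong instead of silently mapping them to the closest label.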


ReAct

Question: Determine if there is Observation that SUPPORTS or REFUTES a Claim, or if there is NOT ENOUGH INFORMATION.

| Claim | Thought | Action | Observation |
|---|---|---|---|
| Nikolaj Coster-Waldau worked with the Fox Broadcasting Company. | I need to search Nikolaj Coster-Waldau and find if he has worked with the Fox Broadcasting Company. | Search [Nikolaj Coster-Waldau] | Nikolaj William Coster-Waldau (born 27 July 1970) is a Danish actor and producer. He graduated from the Danish National School of Performing Arts in Copenhagen in 1993,[1] and had his breakthrough role in Denmark with the film Nightwatch (1994). He played Jaime Lannister in the HBO fantasy drama series Game of Thrones, for which he received two Primetime Emmy Award nominations for Outstanding Supporting Actor in a Drama Series. Coster-Waldau has appeared in numerous films in his native Denmark and Scandinavia, including Headhunters (2011) and A Thousand Times Good Night (2013). In the U.S., his debut film role was in the war film Black Hawk Down (2001), playing Medal of Honor recipient Gary Gordon.[2] He then played a detective in the short-lived Fox television series New Amsterdam (2008), and appeared in the 2009 Fox television film Virtuality, originally intended as a pilot. |
| | Because he "appeared in the 2009 Fox television film Virtuality", he should have worked with the Fox Broadcasting Company. | Finish [SUPPORTS] | |
| Stranger Things is set in Bloomington, Indiana. | I should search for Stranger Things, and see if it is set in Bloomington, Indiana. | Search [Stranger Things] | Stranger Things is an American science fiction horror drama television series created by the Duffer Brothers. Set in the 1980s, primarily in the fictional town of Hawkins, Indiana, the series centers on a number of mysteries and supernatural events occurring around the town and their impact on an ensemble of child and adult characters. |
| | The observation says that it is set in a "fictional town of Hawkins, Indiana", so it is not set in Bloomington. | Finish [REFUTES] | |
| Beautiful reached number two on the Billboard Hot 100 in 2003. | I need to search the song Beautiful and find if it reached number two on the Billboard Hot 100 in 2003. | Search [Beautiful] | Could not find [Beautiful]. Similar: ['Beautiful', 'Beautiful, Beautiful', 'A Beautiful Mind (film) ', 'Beautiful (Christina Aguilera song)', 'Life Is Beautiful']. |
| | From suggestions, I should search "Beautiful (Christina Aguilera song)" to find the song. | Search [Beautiful (Christina Aguilera song)] | "Beautiful" is a song recorded by American singer Christina Aguilera for her fourth studio album, Stripped (2002). |
| | It does not mention Billboard, so I need to look up "Billboard Hot 100" to find if it reached number two on it in 2003. | Lookup [Billboard Hot 100] | (Result 1 / 3) The song peaked at number two on the Billboard Hot 100 in the United States, where it was certified Gold for 500,000 units shipped. |
| | It only says the song peaked at number two on the Billboard Hot 100, but not if it was in 2003. I am not sure if this claim is true or not. | Finish [NOT ENOUGH INFO] | |
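The ReAct rows differ from the Act rows only by the Thought column: each step emits a thought and then an action, and every action except Finish produces an observation that is appended before the next step. The loop below sketches that control flow. The scripted `model` (which replays the Stranger Things row instead of calling a language model), the `stub_tool`, the `react_loop` name, and the `max_steps` cap are assumptions made for illustration.

```python
import re
from typing import Callable, List, Optional, Tuple

# Accepts "Search[x]", "Search [x]", "Lookup [x]", "Finish [x]".
ACTION_RE = re.compile(r"^(Search|Lookup|Finish)\s*\[(.*)\]$")


def parse_action(text: str) -> Tuple[str, str]:
    match = ACTION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized action: {text!r}")
    return match.group(1), match.group(2)


def react_loop(
    claim: str,
    model: Callable[[str], Tuple[str, str]],
    tool: Callable[[str, str], str],
    max_steps: int = 8,
) -> Tuple[Optional[str], List[str]]:
    """Interleave Thought -> Action -> Observation until a Finish action."""
    trajectory = [f"Claim: {claim}"]
    for step in range(1, max_steps + 1):
        # The model sees the whole trajectory so far and proposes the next step.
        thought, action = model("\n".join(trajectory))
        trajectory.append(f"Thought {step}: {thought}")
        trajectory.append(f"Action {step}: {action}")
        kind, argument = parse_action(action)
        if kind == "Finish":
            return argument, trajectory
        trajectory.append(f"Observation {step}: {tool(kind, argument)}")
    return None, trajectory  # step budget exhausted without a Finish


def scripted_model(steps: List[Tuple[str, str]]) -> Callable[[str], Tuple[str, str]]:
    """Hypothetical stand-in for an LLM: replays fixed (thought, action) pairs."""
    remaining = iter(steps)
    return lambda prompt: next(remaining)


def stub_tool(kind: str, argument: str) -> str:
    """Hypothetical tool that only knows one page."""
    if kind == "Search" and argument == "Stranger Things":
        return "Stranger Things is set in the 1980s, primarily in the fictional town of Hawkins, Indiana."
    return f"Could not find [{argument}]."


steps = [
    ("I should search for Stranger Things, and see if it is set in Bloomington, Indiana.",
     "Search [Stranger Things]"),
    ('The observation says that it is set in a "fictional town of Hawkins, Indiana", '
     "so it is not set in Bloomington.", "Finish [REFUTES]"),
]
label, trajectory = react_loop(
    "Stranger Things is set in Bloomington, Indiana.", scripted_model(steps), stub_tool
)
print(label)  # REFUTES
print("\n".join(trajectory))
```

Feeding the full trajectory back to the model at every step is what lets a thought refer to an earlier observation, as the second Stranger Things thought does when it quotes "fictional town of Hawkins, Indiana".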


C.3 WebShop

Table 6: Prompts used for the WebShop experiments in Section 4. We use the prompt on the left for Act and the prompt on the right (with added reasoning) for ReAct.

Instruction: I would like a 3 oz bottle of bright citrus deodorant for sensitive skin, priced under $50.00.

| Act: Action | Act: Observation | ReAct: Action | ReAct: Observation |
|---|---|---|---|
| Search [3 oz Bright Citrus Scented Deodorant for Sensitive Skin] | [Back to Search] Page 1 (Total Results: 50) [Next] [B078GWRC1J] Earth Mama's Bright Citrus Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz $10.99 [B078GTKVXY] Earth Mama's Ginger Fresh Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz $10.99 [B08KBVJ4XN] Barrel and Oak - Aluminum-Free Deodorant for Men, Essential Oil-Based Scent, 24-Hour Odor Protection, Cedarwood & Patchouli Blend, Gentle on Sensitive Skin (Mountain Sage, 2.7 oz, 2 Bottles) $15.95 | Search [3 oz Bright Citrus Scented Deodorant for Sensitive Skin] | [Back to Search] Page 1 (Total Results: 50) [Next] [B078GWRC1J] Earth Mama's Bright Citrus Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz $10.99 [B078GTKVXY] Earth Mama's Ginger Fresh Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz $10.99 [B08KBVJ4XN] Barrel and Oak - Aluminum-Free Deodorant for Men, Essential Oil-Based Scent, 24-Hour Odor Protection, Cedarwood & Patchouli Blend, Gentle on Sensitive Skin (Mountain Sage, 2.7 oz, 2 Bottles) $15.95 |
| | | Think [B078GWRC1J and B078GTKVXY are bright citrus deodorants under $50. I can check B078GWRC1J first.] | OK. |
| Click [B078GWRC1J] | [Back to Search] [Previous] Scent [Various Scents] [Bright Citrus] [Soothing Lavender] [Ginger Fresh] [Simply Unscented] Size [Travel Pack (4 Bottles)] [3 oz (1 Bottle)] Earth Mama's Bright Citrus Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz Price: $10.99 Rating: N.A. [Description] [Features] [Reviews] [Buy Now] | Click [B078GWRC1J] | [Back to Search] [Previous] Scent [Various Scents] [Bright Citrus] [Soothing Lavender] [Ginger Fresh] [Simply Unscented] Size [Travel Pack (4 Bottles)] [3 oz (1 Bottle)] Earth Mama's Bright Citrus Deodorant \| Natural and Suitable for Sensitive Skin, Pregnancy and Breastfeeding, with Organic Calendula, 3 oz Price: $10.99 Rating: N.A. [Description] [Features] [Reviews] [Buy Now] |
| | | Think [For a 3 oz bottle of bright citrus deodorant for sensitive skin, the item has the options 'Bright Citrus' and '3 oz (1 Bottle)' and seems good to buy.] | OK. |
| Click [Bright Citrus] | You have clicked Bright Citrus. | Click [Bright Citrus] | You have clicked Bright Citrus. |
| Click [3 oz (1 Bottle)] | You have clicked 3 oz (1 Bottle). | Click [3 oz (1 Bottle)] | You have clicked 3 oz (1 Bottle). |
| Click [Buy Now] | | Click [Buy Now] | |
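The first ReAct thought in this prompt narrows the search results by scent and budget before anything is clicked. The sketch below makes that filtering step explicit by pulling `[id] title $price` entries out of a results page. The regular expression, the assumption that product ids are ten-character codes beginning with `B0`, and the `parse_results` / `shortlist` helpers are hypothetical; they are not part of WebShop's own interface, and a plain substring match is a crude stand-in for the model's judgment.

```python
import re
from typing import List, Tuple

# Assumed layout of one result: "[B078GWRC1J] <title> $10.99".
RESULT_RE = re.compile(r"\[(B0[0-9A-Z]{8})\]\s*(.*?)\s*\$(\d+\.\d{2})")


def parse_results(observation: str) -> List[Tuple[str, str, float]]:
    """Return (product id, title, price) for each product on a results page."""
    return [(pid, title, float(price)) for pid, title, price in RESULT_RE.findall(observation)]


def shortlist(observation: str, keyword: str, budget: float) -> List[str]:
    """Ids whose title contains `keyword` (case-insensitive) and whose price is under `budget`."""
    return [
        pid
        for pid, title, price in parse_results(observation)
        if keyword.lower() in title.lower() and price < budget
    ]


page = (
    "[Back to Search] Page 1 (Total Results: 50) [Next] "
    "[B078GWRC1J] Earth Mama's Bright Citrus Deodorant | Natural and Suitable for Sensitive Skin 3 oz $10.99 "
    "[B078GTKVXY] Earth Mama's Ginger Fresh Deodorant | Natural and Suitable for Sensitive Skin 3 oz $10.99 "
    "[B08KBVJ4XN] Barrel and Oak - Aluminum-Free Deodorant for Men (Mountain Sage, 2.7 oz, 2 Bottles) $15.95"
)
print(shortlist(page, "bright citrus", 50.0))  # ['B078GWRC1J']
```

Navigation links such as `[Back to Search]` and `[Next]` do not match the id pattern, so only product entries reach the filter.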

C.4 ALFWorld

ALFWorld is a simulated environment containing over 25,000 unique, procedurally generated tasks set in realistic household spaces such as kitchens, living rooms, and bedrooms.

You are in the middle of a room. Looking quickly around you, you see a cabinet 13, a cabinet 12, a cabinet 11, a cabinet 10, a cabinet 9, a cabinet 8, a cabinet 7, a cabinet 6, a cabinet 5, a cabinet 4, a cabinet 3, a cabinet 2, a cabinet 1, a coffeemachine 1, a countertop 1, a diningtable 1, a drawer 1, a fridge 1, a garbagecan 1, a microwave 1, a shelf 3, a shelf 2, a shelf 1, a sinkbasin 1, a stoveburner 4, a stoveburner 3, a stoveburner 2, a stoveburner 1, and a toaster 1.
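Every object in the room description is named by a type plus an instance number (`cabinet 13`, `sinkbasin 1`), and the commands that follow refer to objects by exactly those names. A small sketch of splitting the opening description into that list; `parse_receptacles` is a hypothetical helper that assumes the `you see a X 1, a Y 2, and a Z 3.` phrasing shown above.

```python
import re
from collections import Counter
from typing import List


def parse_receptacles(observation: str) -> List[str]:
    """Extract names such as 'cabinet 13' from an ALFWorld-style room description."""
    listing = observation.split("you see ", 1)[1].strip().rstrip(".")
    names = []
    for chunk in listing.split(", "):
        # Drop the "and" before the last item and the article "a"/"an".
        names.append(re.sub(r"^(?:and\s+)?(?:an?)\s+", "", chunk.strip()))
    return names


room = (
    "You are in the middle of a room. Looking quickly around you, you see a cabinet 2, "
    "a cabinet 1, a coffeemachine 1, a fridge 1, a sinkbasin 1, and a toaster 1."
)
names = parse_receptacles(room)
print(names)  # ['cabinet 2', 'cabinet 1', 'coffeemachine 1', 'fridge 1', 'sinkbasin 1', 'toaster 1']
# Count instances per object type, e.g. how many cabinets a search may need to visit.
print(Counter(name.rsplit(" ", 1)[0] for name in names))
```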

Your task is to: put a clean lettuce in diningtable.

> go to fridge 1

The fridge 1 is closed.

> open fridge 1

You open the fridge 1. The fridge 1 is open. In it, you see a cup 3, a egg 2, a potato 3, and a potato 2.

> go to diningtable 1

On the diningtable 1, you see an apple 1, a bread 1, a butterknife 2, a cup 2, a fork 2, a knife 2, a knife 1, a ladle 1, a lettuce 1, a mug 2, a mug 1, a pan 2, a peppershaker 1, a spatula 3, a tomato 2, and a tomato 1.

> take lettuce 1 from diningtable 1

You pick up the lettuce 1 from the diningtable 1.

> go to sinkbasin 1

On the sinkbasin 1, you see an apple 2, a ladle 2, a spoon 1, and a tomato 3.

> clean lettuce 1 with sinkbasin 1

You clean the lettuce 1 using the sinkbasin 1.

> think: Now I clean a lettuce (1). Next, I need to put it in/on diningtable 1.

OK.
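Lines that begin with `think:` carry the model's reasoning rather than a command for the simulator: they leave the room unchanged, and the transcript simply answers them with `OK.`. The sketch below renders a transcript in the same `> command` / observation layout, routing only non-thought commands to the environment. `render_transcript`, the `CANNED` dictionary, and the `env_step` stub are hypothetical; a real setup would call the ALFWorld simulator instead.

```python
from typing import Callable, List


def render_transcript(commands: List[str], env_step: Callable[[str], str]) -> str:
    """Render '> command' lines followed by observations; thoughts get 'OK.'."""
    lines = []
    for command in commands:
        lines.append(f"> {command}")
        if command.startswith("think:"):
            # Thoughts never reach the simulator, so its state is unchanged.
            lines.append("OK.")
        else:
            lines.append(env_step(command))
    return "\n".join(lines)


# Hypothetical stub: canned observations copied from the transcript above.
CANNED = {
    "go to sinkbasin 1": "On the sinkbasin 1, you see an apple 2, a ladle 2, a spoon 1, and a tomato 3.",
    "clean lettuce 1 with sinkbasin 1": "You clean the lettuce 1 using the sinkbasin 1.",
}
calls = []  # records which commands actually reached the stub environment


def env_step(command: str) -> str:
    calls.append(command)
    return CANNED.get(command, "Nothing happens.")


print(render_transcript(
    [
        "go to sinkbasin 1",
        "clean lettuce 1 with sinkbasin 1",
        "think: Now I clean a lettuce (1). Next, I need to put it in/on diningtable 1.",
    ],
    env_step,
))
```

After this run, `calls` holds only the two real commands, which shows that the thought step costs no environment interaction.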