Raycast-G4F: Free access to GPT-4, Llama-3, and many other AI models via Raycast!

General Introduction

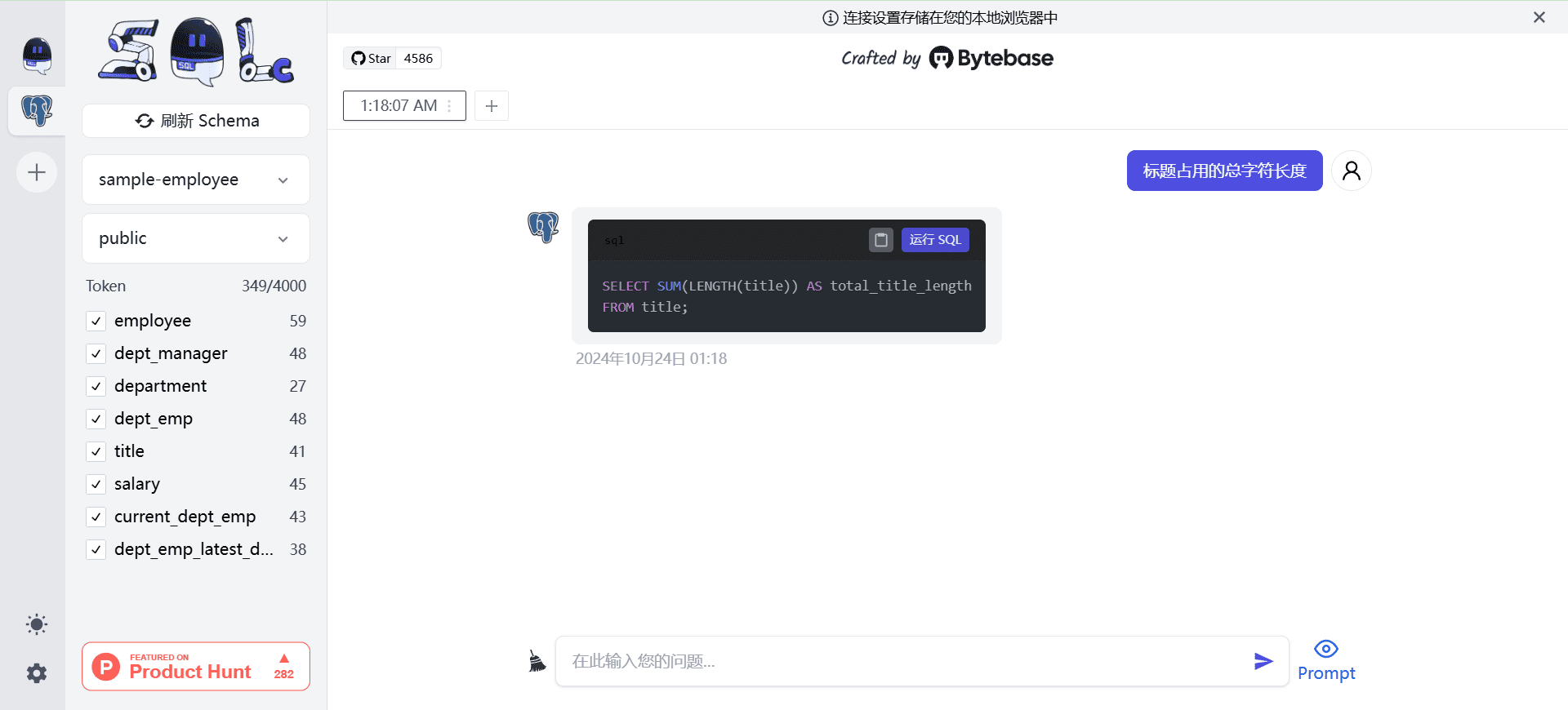

Raycast-G4F (GPT4Free) is a Raycast extension that gives users free access to a wide range of advanced AI models, including GPT-4 and Llama-3. It provides real-time response streaming and also supports web search, file upload, image generation, and more. Most notably, it supports more than 40 AI providers and models, so users can switch between them as needed. The extension is fully open source and focuses on user privacy, with all data stored locally on the device. Although it is not currently available in the official Raycast Store, it can be installed from source in a few steps and can update itself automatically to stay on the latest version.

Raycast-G4F is currently available only for macOS; a Windows version is in development.

Feature List

- Real-time dialog streaming functionality with instant message loading support

- 18 different commands for various usage scenarios

- Supports access to over 40 AI providers and models

- Intelligent chat log saving and session naming features

- Built-in web search capability for up-to-date information

- Supports image, video, audio and text file uploads

- AI image generation function

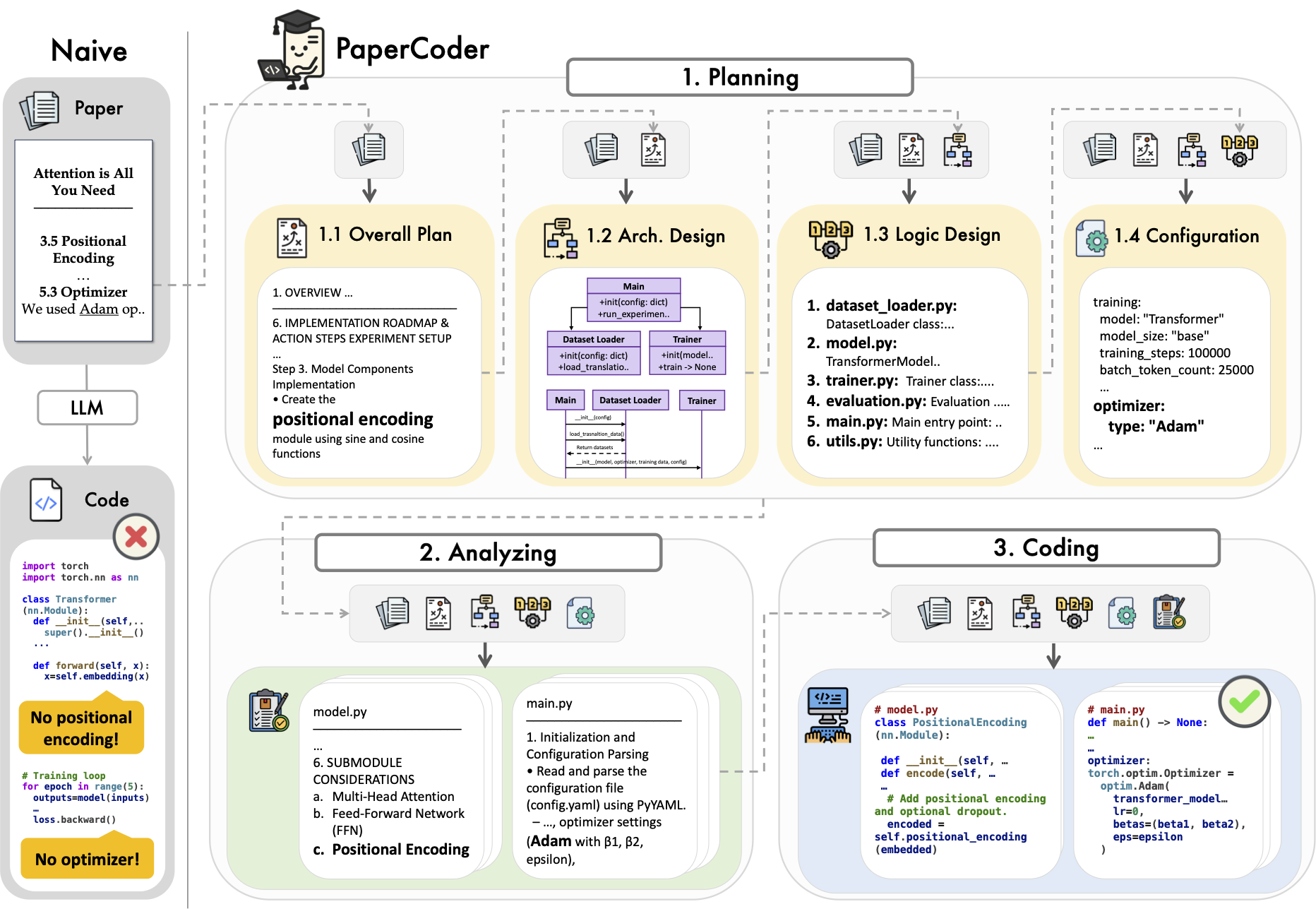

- Support for creating custom AI commands

- Code Interpreter Functions (Beta)

- Persistent storage function ensures safe data retention

Usage Guide

1. Pre-installation preparation

- Make sure you have the Raycast app installed (currently only supported on macOS)

- Install Node.js (recommended version v20.18.1 or higher)
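Before installing, it can help to confirm that the local Node.js meets the recommended version. The following is a minimal Python sketch, assuming `node` is on the PATH; the function names are illustrative and are not part of the extension.

```python
import re
import subprocess

# Recommended minimum taken from the preparation step above.
RECOMMENDED_NODE = (20, 18, 1)


def parse_node_version(text: str) -> tuple:
    """Turn a string such as 'v20.18.1' into the tuple (20, 18, 1)."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized Node.js version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_recommendation(version: tuple, minimum: tuple = RECOMMENDED_NODE) -> bool:
    """Tuples compare element by element, so (22, 0, 0) >= (20, 18, 1)."""
    return version >= minimum


def installed_node_version() -> tuple:
    """Ask the local `node` binary for its version (assumes node is on PATH)."""
    result = subprocess.run(
        ["node", "--version"], capture_output=True, text=True, check=True
    )
    return parse_node_version(result.stdout)
```

Calling `meets_recommendation(installed_node_version())` returns `False` when the installed Node.js is older than the recommendation.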

2. Installation steps

- Download the latest version of the source code from GitHub or clone the repository

- Open a terminal and change to the downloaded folder

- Run `npm ci --production` to install dependencies

- (Optional) Run `pip3 install -r requirements.txt` to install Python dependencies

- Run `npm run dev` to build and import the extension

3. Update methods

Automatic update:

- Use the extension's built-in "Check for Updates" command.

- Enable "Automatically check for updates" in Preferences (on by default)

Manual update:

Execute the following commands in order:

- `git pull`

- `npm ci --production`

- `npm run dev`
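The manual update sequence can also be scripted so that a failed step stops the rest. This is a rough Python sketch, assuming the repository has already been cloned; `run_update` and its `runner` parameter are hypothetical helpers introduced here for illustration.

```python
import subprocess
from pathlib import Path

# The three manual update commands, in the order given above.
UPDATE_COMMANDS = [
    ["git", "pull"],
    ["npm", "ci", "--production"],
    ["npm", "run", "dev"],
]


def run_update(repo_dir, runner=subprocess.run):
    """Run each update command inside repo_dir, stopping at the first failure.

    With the default runner, check=True raises CalledProcessError as soon
    as a command exits with a non-zero status, so later steps are skipped.
    """
    for command in UPDATE_COMMANDS:
        runner(command, cwd=Path(repo_dir), check=True)
```

In Raycast extension projects `npm run dev` typically starts a development session that keeps running until interrupted, so the last call may block until it is stopped.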

4. Using the main features

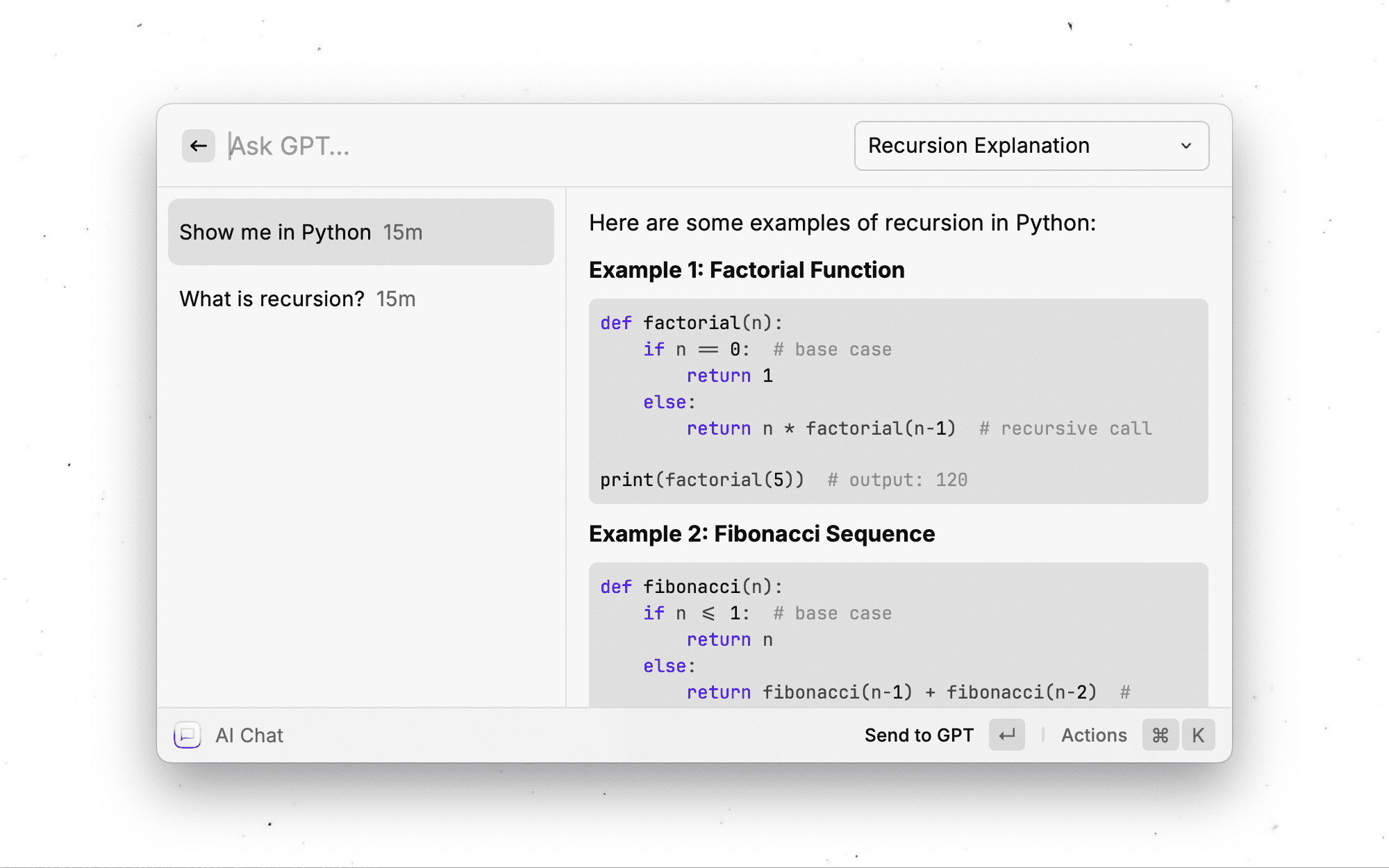

AI Chat feature

- Support for real-time dialog streaming

- Automatically save chat history

- Smart Session Naming

- Web search can be enabled for an individual session

Web search function

Four modes are offered:

- Disabled (default)

- Auto mode: automatically enabled in AI chat only

- Balanced mode: enabled in both AI commands and chat

- Always enabled: all queries use web search
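One way to read the four modes is as a small decision function. The sketch below follows only the descriptions in the list above; it is not taken from the extension's code, and the names `WebSearchMode`, `QueryContext`, and `web_search_enabled` are hypothetical.

```python
from enum import Enum


class WebSearchMode(Enum):
    DISABLED = "disabled"   # default: never search
    AUTO = "auto"           # search in AI chat only
    BALANCED = "balanced"   # search in AI commands and chat
    ALWAYS = "always"       # search for every query


class QueryContext(Enum):
    CHAT = "chat"
    COMMAND = "command"
    OTHER = "other"         # assumed catch-all for anything else


def web_search_enabled(mode: WebSearchMode, context: QueryContext) -> bool:
    """Decide whether a query should use web search under the given mode."""
    if mode is WebSearchMode.DISABLED:
        return False
    if mode is WebSearchMode.AUTO:
        return context is QueryContext.CHAT
    if mode is WebSearchMode.BALANCED:
        return context in (QueryContext.CHAT, QueryContext.COMMAND)
    return True  # ALWAYS
```

Under this reading, Auto and Balanced differ only in whether AI commands trigger a search.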

File handling

- Supports uploading text files (.txt, .md, etc.)

- Some providers also support image, video, and audio files

- Supports the "Ask Screen Content" command.

Customization

- Create personalized AI commands

- Set AI presets

- Configure persistent storage

- Use a custom OpenAI-compatible API
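For context on the last item, an "OpenAI-compatible" API usually means a server that accepts the chat completions request format. The following Python sketch shows what such a request looks like, using only the standard library; the base URL, key, and model name are placeholders to replace with your own values, and this is not the extension's own client code.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str):
    """Build a POST request for the /chat/completions route of the server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of a non-streaming completion response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(base_url: str, api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the reply (performs a network call)."""
    request = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(response.read())
```

If `ask` succeeds against your server with a base URL that ends in `/v1`, the same base URL and key are a reasonable starting point for the extension's custom API preference.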

5. Advanced feature configuration

Google Gemini API Settings

- Visit https://aistudio.google.com/app/apikey

- Sign in to your Google account

- Create an API key

- Enter the key in the extension's Preferences

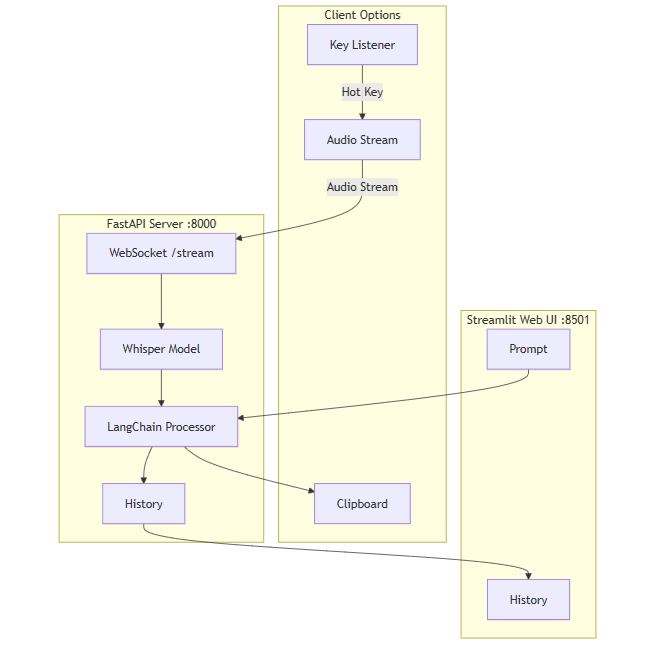
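To check a newly created key before entering it in Preferences, a single request to the Gemini REST API is enough. This is a minimal Python sketch under stated assumptions: the model name `gemini-1.5-flash` is taken from the provider table and may no longer be served, and the helper names are illustrative.

```python
import json
import urllib.error
import urllib.request

GEMINI_ENDPOINT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_gemini_request(api_key: str, prompt: str, model: str = "gemini-1.5-flash"):
    """Build a generateContent request that carries the key in a header."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{GEMINI_ENDPOINT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def key_works(api_key: str, timeout: float = 30.0) -> bool:
    """Return True if the API answers with HTTP 200 (performs a network call).

    Any HTTP error returns False. That covers a rejected key, but also
    other causes such as a model name that is no longer available.
    """
    request = build_gemini_request(api_key, "Say hello.")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        return False
```

Sending the key in a header rather than in the URL keeps it out of shell history and proxy logs.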

Code interpreter (beta)

- Supports local execution of Python code

- Only models with function-calling capability are supported

- Security restrictions are configured to keep code execution safe
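The source does not describe how the extension restricts execution. As a generic illustration only, one common baseline is to run model-generated code in a separate Python process with a time limit. The helper `run_snippet` below is hypothetical and is not a sandbox: the child process can still read and write files.

```python
import subprocess
import sys


def run_snippet(code: str, timeout: float = 5.0) -> str:
    """Run code in a separate Python process and return what it printed."""
    result = subprocess.run(
        # -I starts Python in isolated mode: it ignores PYTHON* environment
        # variables and the user site-packages directory.
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,  # raises subprocess.TimeoutExpired if exceeded
    )
    if result.returncode != 0:
        # Surface the child's error output instead of returning partial output.
        raise RuntimeError(result.stderr.strip())
    return result.stdout
```

The timeout stops runaway loops, and the separate process means a crash in the snippet does not take down the caller.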

Available free models and providers

| Provider | Model | Features | Status | Speed | Rating and notes by the extension author |
|---|---|---|---|---|---|
| Nexra | gpt-4o (default) | ▶️ | Active | very fast | 8.5/10, the best performing model. |
| Nexra | gpt-4-32k | | Active | moderate | 6.5/10, no streaming support, but otherwise a great model. |
| Nexra | chatgpt | ▶️ | Unknown | very fast | 7.5/10 |
| Nexra | Bing | ▶️ | Active | moderate | 8/10, based on GPT-4 and with web search capability. |
| Nexra | llama-3.1 | ▶️ | Active | fast | 7/10 |
| Nexra | gemini-1.0-pro | ▶️ | Active | fast | 6.5/10 |
| DeepInfra | meta-llama-3.3-70b | ▶️ | Active | fast | 8.5/10, the latest model with large context size. |
| DeepInfra | meta-llama-3.2-90b-vision | ▶️ 📄¹ | Active | fast | 8/10, the latest model with vision capabilities. |
| DeepInfra | meta-llama-3.2-11b-vision | ▶️ 📄¹ | Active | very fast | 7.5/10 |
| DeepInfra | meta-llama-3.1-405b | ▶️ | Inactive | moderate | 8.5/10, state-of-the-art open model for complex tasks. |
| DeepInfra | meta-llama-3.1-70b | ▶️ | Active | fast | 8/10 |
| DeepInfra | meta-llama-3.1-8b | ▶️ | Active | very fast | 7.5/10 |
| DeepInfra | llama-3.1-nemotron-70b | ▶️ | Active | fast | 8/10 |
| DeepInfra | WizardLM-2-8x22B | ▶️ | Active | moderate | 7/10 |
| DeepInfra | DeepSeek-V2.5 | ▶️ | Active | fast | 7.5/10 |
| DeepInfra | Qwen2.5-72B | ▶️ | Active | moderate | 7.5/10 |
| DeepInfra | Qwen2.5-Coder-32B | ▶️ | Active | fast | 7/10 |
| DeepInfra | QwQ-32B-Preview | ▶️ | Active | very fast | 7.5/10 |
| Blackbox | custom model | ▶️ | Active | fast | 7.5/10, very fast generation with built-in web search capability, but optimized for coding. |
| Blackbox | llama-3.1-405b | ▶️ | Active | fast | 8.5/10 |
| Blackbox | llama-3.1-70b | ▶️ | Active | very fast | 8/10 |
| Blackbox | gemini-1.5-flash | ▶️ | Active | lightning fast | 7.5/10 |
| Blackbox | qwq-32b-preview | ▶️ | Active | lightning fast | 6.5/10 |
| Blackbox | gpt-4o | ▶️ | Active | very fast | 7.5/10 |
| Blackbox | claude-3.5-sonnet | ▶️ | Active | fast | 8.5/10 |
| Blackbox | gemini-pro | ▶️ | Active | fast | 8/10 |
| DuckDuckGo | gpt-4o-mini | ▶️ | Active | lightning fast | 8/10, authentic GPT-4o-mini model with strong privacy protection. |
| DuckDuckGo | claude-3-haiku | ▶️ | Active | lightning fast | 7/10 |
| DuckDuckGo | meta-llama-3.1-70b | ▶️ | Active | very fast | 7.5/10 |
| DuckDuckGo | mixtral-8x7b | ▶️ | Active | lightning fast | 7.5/10 |
| BestIM | gpt-4o-mini | ▶️ | Inactive | lightning fast | 8.5/10 |
| Rocks | claude-3.5-sonnet | ▶️ | Active | fast | 8.5/10 |
| Rocks | claude-3-opus | ▶️ | Active | fast | 8/10 |
| Rocks | gpt-4o | ▶️ | Active | fast | 7.5/10 |
| Rocks | gpt-4 | ▶️ | Active | fast | 7.5/10 |
| Rocks | llama-3.1-405b | ▶️ | Active | fast | 7.5/10 |
| Rocks | llama-3.1-70b | ▶️ | Active | very fast | 7/10 |
| ChatgptFree | gpt-4o-mini | ▶️ | Active | lightning fast | 8.5/10 |
| AI4Chat | gpt-4 | | Active | very fast | 7.5/10 |
| DarkAI | gpt-4o | ▶️ | Active | very fast | 8/10 |
| Mhystical | gpt-4-32k | | Active | very fast | 6.5/10 |
| PizzaGPT | gpt-4o-mini | | Active | lightning fast | 7.5/10 |
| Meta AI | meta-llama-3.1 | ▶️ | Active | moderate | 7/10, the latest model with Internet access. |
| Replicate | mixtral-8x7b | ▶️ | Active | moderate | ?/10 |
| Replicate | meta-llama-3.1-405b | ▶️ | Active | moderate | ?/10 |
| Replicate | meta-llama-3-70b | ▶️ | Active | moderate | ?/10 |
| Replicate | meta-llama-3-8b | ▶️ | Active | fast | ?/10 |
| Phind | Phind Instant | ▶️ | Active | lightning fast | 8/10 |
| Google Gemini | auto (gemini-1.5-pro, gemini-1.5-flash) | ▶️ 📄 | Active | very fast | 9/10, very good general-purpose model, but requires an API key. (It is free; see the Google Gemini API Settings section.) |
| Google Gemini (Experimental) | auto (changes frequently) | ▶️ 📄 | Active | very fast | - |
| Google Gemini (Thinking) | auto (changes frequently) | ▶️ 📄 | Active | very fast | - |
| Custom OpenAI-compatible API | - | ▶️ | Active | - | Allows you to use any custom OpenAI-compatible API. |

▶️ - Streaming support.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
