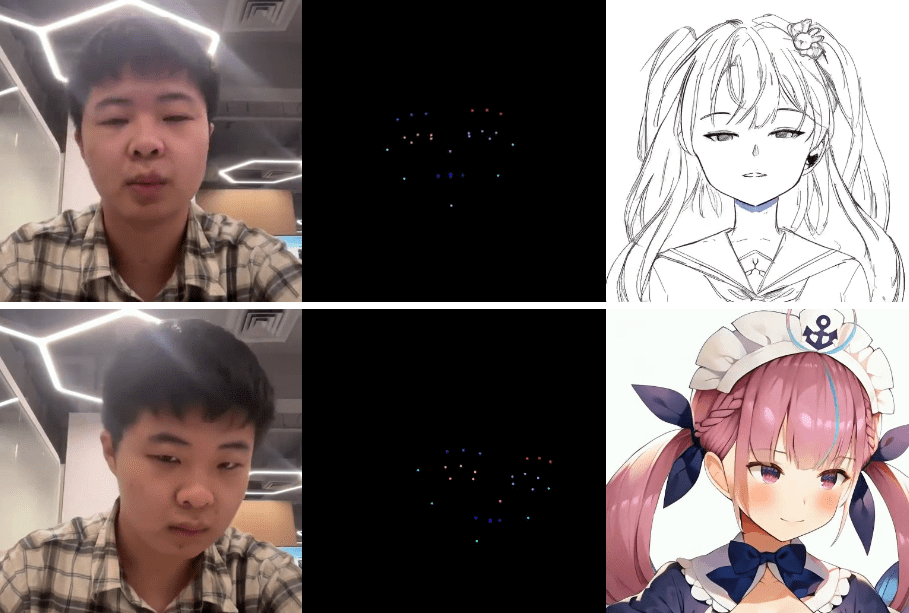

RAIN: Real-time Capture of Real People's Expressions to Generate Video Streams of Anime Images

General Introduction

RAIN (Real-time Animation Of Infinite Video Stream) is an open source project for generating animation in real time as an unbounded video stream. Developed by Pscgylotti, it targets video generation on common consumer devices. Using recent deep learning techniques, RAIN generates a continuous video stream from a user-uploaded image, and is applicable to animation production, video effects, and similar fields.

RAIN produces real-time animation on consumer-grade devices.

Function List

- Generate animated videos in real time

- Support for video generation on user devices

- Provides a variety of pre-trained models and weights

- Support for TensorRT acceleration

- Provides a Gradio application interface

- Supports multiple video generation parameter adjustments

Usage Guide

Installation process

- Make sure Python >= 3.10 is installed.

- Install PyTorch (recommended version >= 2.3.0), which can be downloaded from the official PyTorch website.

- Clone the RAIN project repository:

git clone https://github.com/Pscgylotti/RAIN.git

cd RAIN

- Install the dependencies required for inference:

pip install -r requirements_inference.txt
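Before launching anything, it can help to confirm that the interpreter and PyTorch meet the minimums listed above. The following is a minimal sketch, not part of the RAIN repository: the helper names (`version_tuple`, `meets_minimum`, `check_environment`) are illustrative, and the thresholds are simply the ones stated in this section.

```python
import re
import sys

# Minimums taken from the installation steps above.
MIN_PYTHON = (3, 10)
MIN_TORCH = (2, 3, 0)


def version_tuple(version: str) -> tuple:
    """Turn a string such as '2.3.0+cu121' into (2, 3, 0).

    The local build suffix after '+' is ignored, and short versions
    such as '2.3' are padded with zeros so tuples compare correctly.
    """
    numbers = [int(n) for n in re.findall(r"\d+", version.split("+")[0])]
    return tuple((numbers + [0, 0, 0])[:3])


def meets_minimum(version: str, minimum: tuple) -> bool:
    """Return True if the version string is at least the minimum tuple."""
    return version_tuple(version) >= minimum


def check_environment() -> list:
    """Collect human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append("Python >= 3.10 is required")
    try:
        import torch  # imported lazily so the check also works without PyTorch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch.__version__, MIN_TORCH):
            problems.append("PyTorch >= 2.3.0 is recommended")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Run it with the same interpreter that will run the demo, so the check reflects the environment actually in use.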

Usage Process

- Download pre-trained models and weights:

- Download the original RAIN weights from Google Drive or Hugging Face Hub and put them into the weights/torch/ directory.

- Download the other required model files and place them in the appropriate directories (e.g. weights/onnx/).
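A quick way to catch a misplaced download is to check that the weight directories exist and are not empty. The sketch below assumes only the two directory names mentioned in this step (weights/torch and weights/onnx); it does not know the individual file names, and `missing_weight_dirs` is a hypothetical helper rather than something shipped with RAIN.

```python
from pathlib import Path

# Directory names come from the text above; the files inside them are
# not checked by name because this article does not list them.
EXPECTED_DIRS = ("weights/torch", "weights/onnx")


def missing_weight_dirs(repo_root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that are absent or contain no files."""
    root = Path(repo_root)
    missing = []
    for rel in expected:
        directory = root / rel
        has_files = directory.is_dir() and any(
            p.is_file() for p in directory.rglob("*")
        )
        if not has_files:
            missing.append(rel)
    return missing


if __name__ == "__main__":
    for rel in missing_weight_dirs():
        print(f"No files found in {rel}/")
```

Run it from the root of the cloned repository; no output means both directories contain at least one file.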

- Start the Gradio application:

python gradio_app.py

Open your browser and visit http://localhost:7860/. Upload an upper-body portrait of any anime character, adjust the parameters, and start the face morphing.
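The application may need some time to load its models before the page responds. As a rough sketch, the standard-library helper below polls the local address until it answers. The function name `wait_for_server` and the timeout values are assumptions for illustration; only the URL comes from the step above.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url="http://localhost:7860/", timeout=60.0, interval=1.0):
    """Poll the URL until it answers with HTTP 200 or the timeout expires.

    Returns True once the server responds, False if it never does.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            # Server not up yet (connection refused, timeout, etc.); retry.
            pass
        time.sleep(interval)
    return False
```

Calling `wait_for_server()` in a second terminal after starting `python gradio_app.py` returns `True` as soon as the interface is reachable.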

- Adjust the video generation parameters:

- In the Gradio interface, various parameters can be adjusted as needed to get the best animation effect.

- Pay special attention to the adjustment of eye-related parameters to ensure the best facial compositing effect.

Detailed Functions

- Generate video streams in real time: RAIN is capable of generating continuous video streams in real time based on user uploaded images, suitable for animation production and video effects.

- Support for user uploaded images: Users can upload any image and RAIN will generate a corresponding video stream based on the image.

- Adjustable video generation parameters: Users can tune the generation parameters to get the best animation effect.

- Support for TensorRT acceleration: By enabling TensorRT acceleration, RAIN can complete model compilation in a shorter period of time, improving inference speed.

- Provide pre-trained models for download: Users can download pre-trained models from multiple platforms to facilitate a quick start.

- Compatible with multiple deep learning frameworks: RAIN supports a variety of deep learning frameworks such as PyTorch, which facilitates secondary development.

Hardware Requirements

- Running an entire inference demo typically requires about 12 GiB of device RAM.

- The synthesized model requires about 8 GiB of device RAM when run alone.
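The two figures above can be turned into a simple pre-flight check. This sketch assumes that "device RAM" refers to GPU memory and that CUDA device 0 is the one used; both are assumptions, as are the helper names. The thresholds are the approximate values quoted above, so treat a borderline result as a hint rather than a guarantee.

```python
GIB = 1024 ** 3

# Approximate requirements quoted in this section.
FULL_DEMO_GIB = 12
SYNTHESIS_ONLY_GIB = 8


def classify_memory(total_bytes: int) -> str:
    """Map a total memory size in bytes to what it is likely enough for."""
    gib = total_bytes / GIB
    if gib >= FULL_DEMO_GIB:
        return "full demo"
    if gib >= SYNTHESIS_ONLY_GIB:
        return "synthesis model only"
    return "insufficient"


def detect_gpu_memory():
    """Return total memory of CUDA device 0 in bytes, or None if unavailable."""
    try:
        import torch  # optional here; the check degrades gracefully without it
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory


if __name__ == "__main__":
    total = detect_gpu_memory()
    if total is None:
        print("No CUDA device detected")
    else:
        print(f"{total / GIB:.1f} GiB available: {classify_memory(total)}")
```

Note that a card marketed as 12 GB may report slightly less than 12 GiB of total memory, in which case this check errs on the cautious side.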

Reference projects

The RAIN project builds on several open source projects, such as AnimateAnyone, Moore-AnimateAnyone, and Open-AnimateAnyone, combining their strengths to achieve efficient real-time animation generation.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
