RAG: Retrieval-Augmented Generation

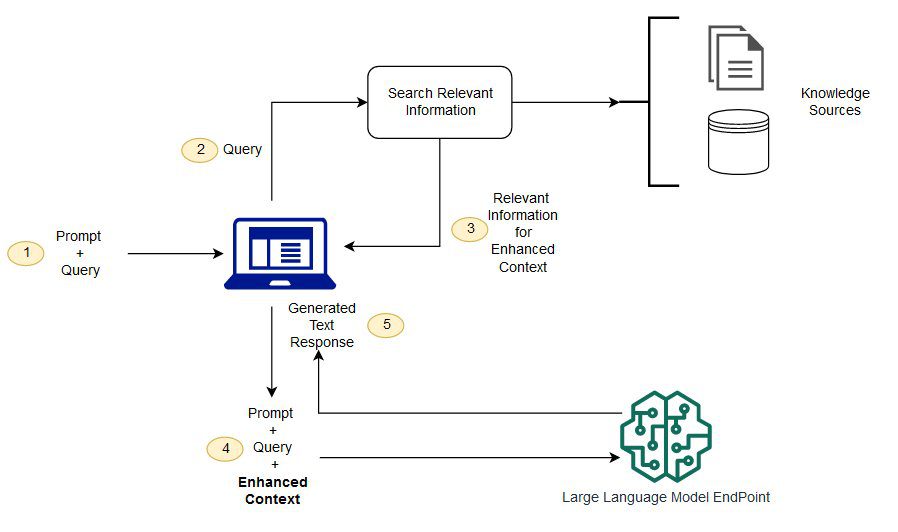

RAG (Retrieval-Augmented Generation) is a technique for optimizing the output of Large Language Models (LLMs) using information from authoritative knowledge bases. It extends LLMs so that they can consult the internal knowledge base of a particular domain or organization when generating responses, which improves the quality of the output and helps keep it relevant, accurate, and useful across a variety of contexts.

Optimizing Large Language Models (LLMs)

Large Language Models (LLMs) have billions of parameters and are typically trained on large-scale datasets to generate original output for tasks such as answering questions, translating languages, and completing sentences. However, because LLMs are trained on static data and have a knowledge cut-off date, they may present incorrect information when they lack an answer, or give outdated or ambiguous responses to queries that require specific, up-to-date information. In addition, their answers may draw on non-authoritative sources, or be wrong because of terminological confusion, i.e., the same term may refer to different content in different data sources.

RAG's solution

RAG addresses these problems by directing the LLM to retrieve relevant information from predefined, authoritative knowledge sources. The base model is usually trained offline and has no access to any data generated after training ends. LLMs are also usually trained on very general domain corpora, so they are less effective at domain-specific tasks. A RAG model can retrieve information from outside the base model's training data and augment the prompt by adding the relevant retrieved data to the context.
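The "augment the prompt" step can be illustrated with a minimal sketch. The function name `build_augmented_prompt`, the prompt wording, and the sample passages are assumptions made for illustration; they are not taken from any particular RAG library, and the retrieval step itself is assumed to have already happened.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Prepend retrieved passages to the user question as numbered context."""
    if not passages:
        # Nothing was retrieved: fall back to the bare question.
        return question
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical passages that a retriever might have returned.
retrieved = [
    "The return window for online orders is 30 days.",
    "Refunds are issued to the original payment method.",
]
prompt = build_augmented_prompt("How long do I have to return an order?", retrieved)
print(prompt)
```

The resulting string is what would be sent to the base model in place of the bare question.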

For more information on the RAG model architecture, see Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.

Advantages of Retrieval Augmented Generation (RAG)

With RAG, data from a range of external sources (e.g., document repositories, databases, or APIs) can be used to augment prompts. The process starts by transforming documents and user queries into a compatible format for relevance search. Format compatibility here means using an embedding language model to convert both the document collection (which can be regarded as the knowledge base) and the user-submitted query into numerical representations that can be compared and searched. The main task of the RAG model is then to compare the embedding of the user query with the vectors in the knowledge base.
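The comparison of a query embedding against knowledge-base vectors can be sketched as follows. This is an illustration under stated assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, and `retrieve`, `cosine_similarity`, and the sample documents are hypothetical names and data chosen for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a sparse word-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents most similar to the query, best first."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share nothing with the query.
    return [doc for score, doc in scored[:k] if score > 0]


knowledge_base = [
    "Employees accrue 20 days of paid vacation per year.",
    "The VPN client must be updated every quarter.",
    "Vacation requests need manager approval two weeks in advance.",
]
top = retrieve("How many vacation days do employees get?", knowledge_base, k=2)
print(top)
```

A production system would replace `embed` with a learned embedding model and would normally precompute the document vectors instead of embedding them on every query.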

The augmented prompt, which combines the original user prompt with relevant context from similar documents in the knowledge base, is then sent to the base model. You can also update the knowledge base and its associated embeddings asynchronously to further improve the efficiency and accuracy of the RAG model.
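One way to picture keeping a knowledge base and its embeddings in sync is the sketch below. The `KnowledgeBase` class, its `upsert` and `remove` methods, and `toy_embed` are hypothetical names introduced for illustration; a background job could call `upsert` whenever a source document changes, so that only the changed document is re-embedded.

```python
from typing import Callable


class KnowledgeBase:
    """Stores document texts alongside their embedding vectors."""

    def __init__(self, embed_fn: Callable[[str], list[float]]):
        self._embed_fn = embed_fn
        self.texts: dict[str, str] = {}
        self.vectors: dict[str, list[float]] = {}

    def upsert(self, doc_id: str, text: str) -> bool:
        """Add or replace a document. Returns True if it was (re-)embedded."""
        if self.texts.get(doc_id) == text:
            return False  # Unchanged text: keep the existing vector.
        self.texts[doc_id] = text
        self.vectors[doc_id] = self._embed_fn(text)
        return True

    def remove(self, doc_id: str) -> None:
        """Delete a document and its vector together so they never drift apart."""
        self.texts.pop(doc_id, None)
        self.vectors.pop(doc_id, None)


def toy_embed(text: str) -> list[float]:
    # Placeholder for a real embedding model: [character count, word count].
    return [float(len(text)), float(len(text.split()))]


kb = KnowledgeBase(toy_embed)
kb.upsert("policy", "Returns accepted within 14 days.")
changed = kb.upsert("policy", "Returns are accepted within 30 days.")
unchanged = kb.upsert("policy", "Returns are accepted within 30 days.")
print(changed, unchanged)
```

Skipping unchanged documents matters in practice because embedding is usually the expensive part of an update.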

Challenges for RAG

Although RAG offers a way to optimize large language models for information retrieval and question answering in practice, it still faces a number of challenges.

1. Accuracy and reliability of data sources

In retrieval-augmented generation, the accuracy and freshness of the data source are critical. If the data source contains incorrect, outdated, or unvalidated information, RAG may generate incorrect or low-quality answers. Ensuring the quality of data sources is therefore a challenge that has to be addressed continuously.
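A simple guard against stale or unvalidated sources is to filter documents before they are indexed. The sketch below assumes each document carries a review date and a validation flag; the `SourceDocument` fields, the `select_indexable` function, and the one-year threshold are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SourceDocument:
    doc_id: str
    text: str
    last_reviewed: date
    validated: bool


def select_indexable(
    docs: list[SourceDocument], today: date, max_age_days: int = 365
) -> list[SourceDocument]:
    """Keep only validated documents reviewed within the allowed age window."""
    cutoff = today - timedelta(days=max_age_days)
    return [d for d in docs if d.validated and d.last_reviewed >= cutoff]


docs = [
    SourceDocument("a", "Current travel policy.", date(2024, 3, 1), True),
    SourceDocument("b", "Old travel policy.", date(2021, 1, 1), True),
    SourceDocument("c", "Draft travel policy.", date(2024, 5, 1), False),
]
indexable = select_indexable(docs, today=date(2024, 6, 1))
print([d.doc_id for d in indexable])
```

Only document "a" passes both checks here: "b" is too old and "c" has not been validated.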

2. Retrieval efficiency

Although RAG can be performed effectively on large data sets, it may face efficiency problems when the knowledge base becomes large or the query becomes complex. Because finding relevant information in a large amount of data is a time- and computation-intensive task, optimizing the efficiency of the retrieval algorithm is also a challenge for RAG.
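One common way to cut retrieval cost is to shortlist candidates cheaply before running the more expensive similarity comparison. The sketch below uses a keyword inverted index as that pre-filter; it is an assumption chosen for illustration (real deployments often use approximate nearest-neighbor indexes over the embeddings instead), and `build_inverted_index` and `candidate_ids` are hypothetical helper names.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_inverted_index(documents: list[str]) -> dict[str, set[int]]:
    """Map each token to the ids of the documents that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in enumerate(documents):
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def candidate_ids(query: str, index: dict[str, set[int]]) -> set[int]:
    """Return ids of documents sharing at least one token with the query."""
    ids: set[int] = set()
    for token in tokenize(query):
        ids |= index.get(token, set())
    return ids


documents = [
    "Employees accrue 20 days of paid vacation per year.",
    "The VPN client must be updated every quarter.",
    "Expense reports are due at the end of each quarter.",
]
index = build_inverted_index(documents)
shortlist = candidate_ids("vpn quarter", index)
print(sorted(shortlist))
```

Only the shortlisted documents then need to be scored against the query embedding, so the cost of the expensive step grows with the shortlist rather than with the whole knowledge base.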

3. Generalization capacity

RAG models can be particularly sensitive to specific data and queries, and for some questions it is hard to find a sensible and effective strategy, so the model needs good generalization capability. Training models to cope with a wide variety of queries and data sources is a complex problem in its own right.

4. Heterogeneous data processing

In practice, data does not always exist in the form of documents, but may also exist in other forms such as images, audio and video. It is also a big challenge to make RAG handle these forms of data for retrieval and cross-referencing between a wider range of knowledge sources.

Addressing these challenges means balancing the strengths of RAG against its potential problems so that it performs reliably across a variety of real-world applications.

For details, see the following sample notebook:

Important reference: https://docs.aws.amazon.com/pdfs/sagemaker/latest/dg/sagemaker-dg.pdf#jumpstart-foundation-models-customize-rag

Retrieval-enhanced generation: Q&A based on customized datasets

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
