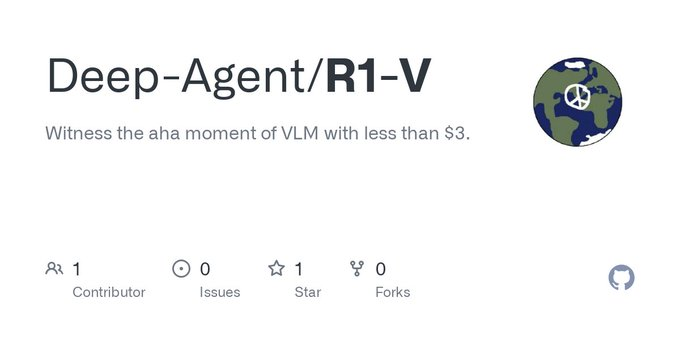

R1-V: Low-cost reinforcement learning for generalization in vision-language models

General Introduction

R1-V is an open-source project that uses low-cost reinforcement learning (RL) to improve how well vision-language models (VLMs) generalize. It uses verifiable rewards to incentivize a VLM to learn general counting skills. The project reports that its 2B model outperforms a 72B model after only 100 training steps, and that the entire training run took 30 minutes on 8 A100 GPUs at a total cost of $2.62. R1-V is fully open source: users can get the code on GitHub, experiment with and build on R1-V models, and contribute changes back.
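The reported figures imply a rough hourly GPU rate. A quick check of the arithmetic, using only the numbers stated above (8 GPUs, 30 minutes, $2.62):

```python
# Figures reported for the R1-V training run
num_gpus = 8
minutes = 30
total_cost_usd = 2.62

gpu_hours = num_gpus * minutes / 60  # 8 GPUs x 0.5 h = 4.0 GPU-hours
cost_per_gpu_hour = total_cost_usd / gpu_hours

print(f"{gpu_hours} GPU-hours, ${cost_per_gpu_hour:.3f} per GPU-hour")
# prints "4.0 GPU-hours, $0.655 per GPU-hour"
```

In other words, the run corresponds to roughly $0.66 per A100-hour.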

Features

- Vision-language modeling: Processes and analyzes image and text data together.

- Reinforcement learning: Improves the model's generalization through verifiable rewards.

- Low-cost training: Trains quickly and cheaply (30 minutes and $2.62 in the reported run).

- Deep learning: Supports complex deep learning tasks, with the aim of improving model accuracy and efficiency.

- Natural language processing (NLP): Processes and understands natural-language text, with multilingual support.

- Computer vision: Analyzes and understands image content for tasks such as image classification and object detection.

- Open source: The full source code is available to download, modify, and contribute to.

- Community support: An active developer community offers technical support and a place to exchange ideas.
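A verifiable reward is one that a program can check against a known answer, with no learned reward model involved. For counting, that can be as simple as comparing the number in the model's response with the ground-truth count. The sketch below assumes completions follow a `<think>...</think><answer>...</answer>` template and scores 1.0 for a correct count plus 1.0 for a well-formed response; the template, function names, and scoring values are illustrative assumptions, not R1-V's confirmed code.

```python
import re

# Assumed response template: <think>reasoning</think><answer>final answer</answer>
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
FORMAT_RE = re.compile(r"\s*<think>.*?</think>\s*<answer>.*?</answer>\s*", re.DOTALL)


def accuracy_reward(completion: str, true_count: int) -> float:
    """1.0 if the answer holds exactly one integer equal to true_count, else 0.0."""
    match = ANSWER_RE.search(completion)
    if match is None:
        return 0.0
    numbers = re.findall(r"-?\d+", match.group(1))
    if len(numbers) != 1:
        return 0.0  # missing or ambiguous answers earn nothing
    return 1.0 if int(numbers[0]) == true_count else 0.0


def format_reward(completion: str) -> float:
    """1.0 if the whole completion follows the think/answer template, else 0.0."""
    return 1.0 if FORMAT_RE.fullmatch(completion) else 0.0


def counting_reward(completion: str, true_count: int) -> float:
    """Total reward: correctness plus format compliance."""
    return accuracy_reward(completion, true_count) + format_reward(completion)


print(counting_reward("<think>3 cubes and 2 spheres.</think><answer>5</answer>", 5))  # prints 2.0
print(counting_reward("<answer>4</answer>", 5))  # prints 0.0
```

Because the check is a deterministic rule, the reward cannot be "fooled" the way a learned reward model sometimes can; the trade-off is that it only works for tasks with checkable answers, such as counting.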

How to Use

Installation

- Clone the repository: Run the following command in a terminal:

```shell
git clone https://github.com/Deep-Agent/R1-V.git
```

- Install dependencies: Enter the project directory and install the required packages:

```shell
cd R1-V
pip install -r requirements.txt
```

- Configure the environment: Set the environment variables and paths that the project requires.
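After installing, it can help to confirm that every package listed in `requirements.txt` is present in the active environment. The helper below is a minimal sketch using only the standard library (`importlib.metadata`); the function names are hypothetical, and the parser handles plain `name[extras]==version` lines while skipping comments, pip options such as `-e`, and URL requirements.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path

# Leading project name of a requirement line, e.g. "torch" in "torch>=2.0"
NAME_RE = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*")


def requirement_names(lines):
    """Extract package names from requirements.txt-style lines."""
    names = []
    for raw in lines:
        if "://" in raw:
            continue  # skip URL/VCS requirements such as git+https://...
        line = raw.split("#", 1)[0].strip()  # drop inline comments
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options (-r, -e, ...)
        match = NAME_RE.match(line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that have no installed distribution."""
    missing = []
    for name in names:
        try:
            version(name)
        except PackageNotFoundError:
            missing.append(name)
    return missing


def check_requirements(path="requirements.txt"):
    """Read a requirements file and list packages that are not installed."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return missing_packages(requirement_names(lines))
```

Run from the `R1-V` directory, `print(check_requirements())` prints an empty list when everything is installed.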

Usage

- Load the model: Load the R1-V model in code:

```python
from r1v import R1VModel

model = R1VModel()
```

- Process images and text: Pass image and text data to the model:

```python
image_path = 'path/to/image.jpg'
text = 'Text describing the image'

result = model.process(image_path, text)
print(result)
```

- Train the model: Train the model for a specific task:

```python
# data_loader must be defined beforehand with your task's training data
model.train(data_loader)
```
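The article does not say which RL algorithm runs behind `model.train`. One common choice for R1-style training with verifiable rewards is GRPO-style group-relative advantages: sample several responses to the same prompt, score each one, and normalize every reward against the group's mean and standard deviation, so that above-average responses are reinforced and below-average ones are discouraged. A minimal sketch of that normalization step, assuming the rewards for one prompt are already computed (the function name and `eps` default are illustrative):

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-4):
    """Normalize one prompt's group of rewards: (r - mean) / (std + eps).

    Uses the population standard deviation; implementations differ on this detail.
    eps avoids division by zero when every response gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one counting question, scored by a verifiable reward
rewards = [2.0, 0.0, 1.0, 1.0]
print([round(a, 3) for a in group_relative_advantages(rewards)])
# prints [1.414, -1.414, 0.0, 0.0]
```

Normalizing within the group means no separate value network is needed to estimate a baseline: the other samples for the same prompt serve as the baseline. If all responses score the same, every advantage is zero and that prompt contributes no learning signal.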

Feature walkthroughs

- Image classification: Load an image and classify it with the model:

```python
from PIL import Image

image = Image.open('path/to/image.jpg')

classification = model.classify(image)
print(classification)
```

- Object detection: Detect objects in the image with the model:

```python
detections = model.detect_objects(image)

# Print each detected object on its own line
for detection in detections:
    print(detection)
```

- Text generation: Generate a description of the image:

```python
description = model.generate_text(image)
print(description)
```
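Since R1-V's training target is counting, a natural follow-up to object detection is turning detections into per-class counts. The article does not specify what `detect_objects` returns, so this sketch assumes each detection is a dict with hypothetical `"label"` and `"score"` keys, and counts only detections at or above a confidence threshold:

```python
from collections import Counter


def count_objects(detections, min_score=0.5):
    """Count detections per label, ignoring those below min_score.

    Assumes each detection looks like {"label": str, "score": float};
    this format is hypothetical, not a documented R1-V output.
    """
    return Counter(d["label"] for d in detections if d["score"] >= min_score)


sample = [
    {"label": "cube", "score": 0.92},
    {"label": "cube", "score": 0.71},
    {"label": "sphere", "score": 0.40},
    {"label": "sphere", "score": 0.88},
]
print(count_objects(sample))  # prints Counter({'cube': 2, 'sphere': 1})
```

Raising `min_score` trades missed objects for fewer false positives, so the threshold directly affects the resulting counts.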

© Copyright: this article belongs to AI Sharing Circle; please do not reproduce it without permission.
