r1-reasoning-rag: a new approach to RAG based on recursive reasoning over retrieved information

I recently came across an open source project with a nice idea for RAG: it combines the reasoning ability of DeepSeek-R1 with an agentic workflow and applies the combination to RAG retrieval.

Project Address

https://github.com/deansaco/r1-reasoning-rag.git

The project combines DeepSeek-R1, Tavily, and LangGraph to implement an AI-led dynamic retrieval and answering mechanism. It uses DeepSeek-R1's reasoning to proactively retrieve, discard, and synthesize information from a knowledge base in order to answer a complex question completely.

Old vs. New RAG

Traditional RAG (Retrieval Augmented Generation) is a bit rigid. Usually, after the query is processed, we find candidate content through similarity search, rerank it by degree of match, select the pieces that look reliable, and hand them to a large language model (LLM) to generate the answer. This depends heavily on the quality of the reranking model: if that model is not strong, it is easy to miss important information or pass the wrong material to the LLM, and the resulting answer will not be reliable.
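To make the weakness concrete, here is a minimal sketch of the single-pass "score, sort, keep top k" pipeline described above. It is not code from the project: the word-count similarity stands in for a real embedding and reranking model, and the documents and the `retrieve_top_k` helper are made up for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use a vector model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_top_k(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Score every chunk once, sort by score, keep the top k. There is no second look."""
    q = bag_of_words(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)
    return ranked[:k]


# Hypothetical knowledge base for a two-hop question.
chunks = [
    "Alice's mentor is Bob.",
    "Bob studied under Carol.",
    "Alice lives in Paris.",
]
context = retrieve_top_k("Who is the mentor of Alice's mentor?", chunks, k=1)
print(context)
```

With `k=1` only the first hop survives. The chunk that actually completes the answer shares no words with the question, so a fixed one-shot ranking never passes it to the LLM.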

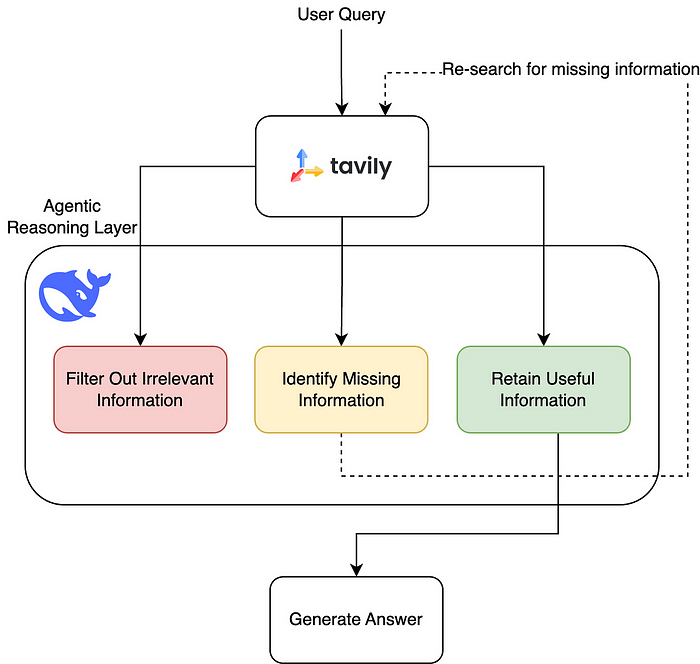

Now the LangGraph team has made a big upgrade to this process. Using DeepSeek-R1's strong reasoning ability, they turned the previous fixed filtering step into a more flexible, dynamic process that adapts to the situation. They call this "agentic retrieval": the AI not only actively looks for missing information, but also keeps refining its own strategy while searching, forming a feedback loop so that the content handed to the LLM is more accurate.

In effect, this improvement takes the idea of test-time scaling (spending more reasoning at inference time) from inside the model and applies it to RAG retrieval, which can improve both the accuracy and the efficiency of retrieval. If you work on RAG retrieval, this approach is worth looking into.

Core Technology and Highlights

DeepSeek-R1 reasoning ability

DeepSeek-R1 is a powerful reasoning model. In this project it is used to:

- Analyze the retrieved content in depth

- Evaluate the content collected so far

- Identify missing content through multiple rounds of reasoning, improving the accuracy of the retrieval results

Tavily real-time information search

Tavily provides real-time information search, which gives the large model access to the latest information and extends the scope of its knowledge.

- Dynamic retrieval fills in missing information instead of relying solely on static data.

LangGraph Recursive Retrieval (RR)

An agentic AI mechanism lets the large model form a closed loop over multiple rounds of retrieval and reasoning. The general process is:

- Step 1: Search for information about the question

- Step 2: Analyze whether the information is sufficient to answer the question

- Step 3: If the information is insufficient, run further queries

- Step 4: Filter out irrelevant content and keep only valid information

This recursive retrieval mechanism lets the large model keep refining the query results, so the filtered information becomes more complete and accurate.
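The four steps above can be sketched as a plain Python loop. This is an illustrative sketch rather than the project's code: `recursive_retrieve`, `Verdict`, and the fake search and validation functions are hypothetical stand-ins for the Tavily search and the DeepSeek-R1 validation call.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    useful: list[str]    # snippets worth keeping (step 4)
    missing: list[str]   # follow-up queries still needed (step 3)


def recursive_retrieve(question, search, validate, max_rounds=3):
    """Search, validate, and re-query until nothing is missing or rounds run out."""
    kept: list[str] = []
    queries = [question]
    for _ in range(max_rounds):
        results = [hit for q in queries for hit in search(q)]   # step 1
        verdict = validate(question, kept + results)            # step 2
        kept = verdict.useful                                   # step 4
        if not verdict.missing:
            break
        queries = verdict.missing                               # step 3
    return kept


# Hypothetical stand-ins so the loop can run without any network access.
FAKE_INDEX = {
    "Who is the mentor of Alice's mentor?": ["Alice's mentor is Bob.", "Alice lives in Paris."],
    "Who is Bob's mentor?": ["Bob's mentor is Carol."],
}


def fake_search(query):
    return FAKE_INDEX.get(query, [])


def fake_validate(question, snippets):
    useful = [s for s in snippets if "mentor" in s]
    found = any("Bob's mentor" in s for s in useful)
    return Verdict(useful=useful, missing=[] if found else ["Who is Bob's mentor?"])


facts = recursive_retrieve("Who is the mentor of Alice's mentor?", fake_search, fake_validate)
print(facts)
```

The `max_rounds` cap is a design choice in this sketch: without it, a validator that keeps reporting missing information would loop forever.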

Source code analysis

The source code consists of just three files: agent, llm, and prompts.

Agent

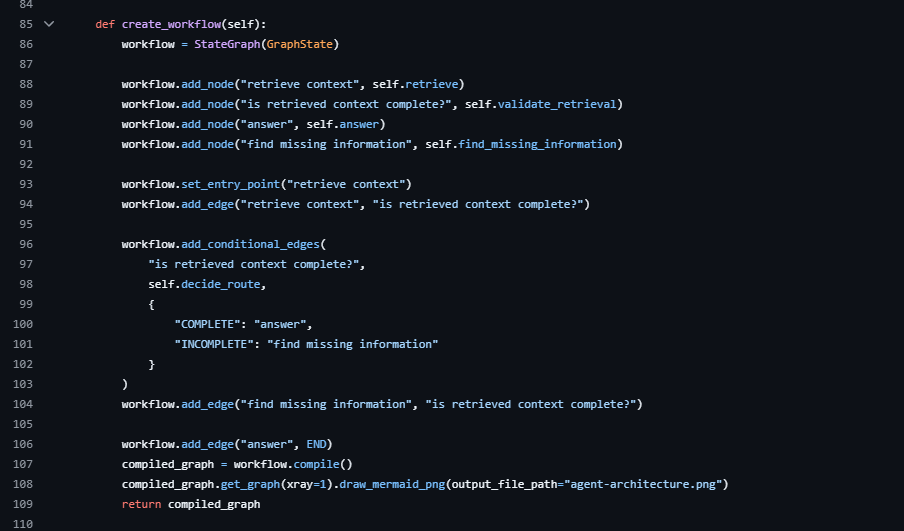

The core of this file is the create_workflow function.

It defines the nodes of the workflow, and the add_conditional_edges part defines the conditional edges. The overall processing logic is the recursive graph shown at the beginning.

If you are not familiar with LangGraph, it is worth reading up on it. LangGraph builds a graph data structure with nodes and edges, and its edges can be conditional.
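For readers new to the idea, the following is a small standard-library sketch of a graph whose edges can be conditional. It is deliberately not LangGraph's API: `MiniGraph`, its methods, and the node names are hypothetical and only mimic the concept (the method name `add_conditional_edges` is borrowed from the text above for readability).

```python
END = "__end__"  # sentinel node name used by this sketch


class MiniGraph:
    """Nodes transform a state dict; each node's edge decides which node runs next."""

    def __init__(self):
        self.nodes = {}
        self.routers = {}

    def add_node(self, name, fn):
        self.nodes[name] = fn

    def add_edge(self, src, dst):
        # A fixed edge is a router that always returns the same target.
        self.routers[src] = lambda state: dst

    def add_conditional_edges(self, src, router):
        # A conditional edge inspects the state to pick the next node.
        self.routers[src] = router

    def run(self, entry, state, max_steps=20):
        current = entry
        for _ in range(max_steps):
            if current == END:
                return state
            state = self.nodes[current](state)
            current = self.routers[current](state)
        raise RuntimeError("step limit reached without hitting END")


graph = MiniGraph()
graph.add_node("retrieve", lambda s: {**s, "round": s["round"] + 1})
graph.add_node("validate", lambda s: {**s, "enough": s["round"] >= 2})
graph.add_node("answer", lambda s: {**s, "answer": f"answered after {s['round']} round(s)"})
graph.add_edge("retrieve", "validate")
# Loop back to retrieval until the validator is satisfied.
graph.add_conditional_edges("validate", lambda s: "answer" if s["enough"] else "retrieve")
graph.add_edge("answer", END)

final = graph.run("retrieve", {"round": 0, "enough": False})
print(final)
```

The conditional edge out of the validation node is what makes the graph recursive: the same retrieve and validate pair runs again until the condition holds.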

After each retrieval, the results are screened by the large model, which filters out irrelevant information (Filter Out Irrelevant Information), retains useful information (Retain Useful Information), and identifies what is still missing (Identify Missing Information). For anything missing, it searches again. The process repeats until the desired answer is found.

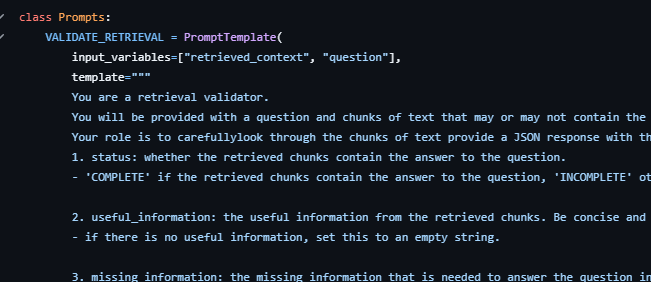

prompts

Two main prompts are defined here.

VALIDATE_RETRIEVAL: Used to verify whether the retrieved information can answer the given question. The template has two input variables: retrieved_context and question. Its main purpose is to produce a JSON-formatted response that states, based on the provided text chunks, whether they contain information that can answer the question.

ANSWER_QUESTION: Used to instruct a question-answering agent to answer a question based on the provided text chunks. The template also has two input variables: retrieved_context and question. Its main purpose is to give a direct and concise answer based on the given context.
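As a rough illustration of how such a validation prompt might be filled in and its JSON reply consumed, here is a sketch. The template wording and the JSON keys (`can_answer`, `useful_information`, `missing_information`) are assumptions made for this example, not the project's actual prompt text; only the two input variables, retrieved_context and question, come from the description above.

```python
import json

# Hypothetical template text; only the two placeholders match the description above.
VALIDATE_RETRIEVAL_SKETCH = (
    "Decide whether the text chunks below can answer the question.\n"
    "Reply with a single JSON object with the keys can_answer, "
    "useful_information and missing_information.\n\n"
    "Chunks:\n{retrieved_context}\n\n"
    "Question: {question}\n"
)


def parse_validation(raw: str) -> dict:
    """Extract the JSON object from a reply that may have extra text around it."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(raw[start:end + 1])
    return {
        "can_answer": bool(data.get("can_answer", False)),
        "useful_information": data.get("useful_information", ""),
        "missing_information": data.get("missing_information", ""),
    }


prompt = VALIDATE_RETRIEVAL_SKETCH.format(
    retrieved_context="The mentor of Alice is Bob.",
    question="Who is the mentor of the mentor of Alice?",
)

# A made-up model reply, used only to exercise the parser.
reply = (
    'Verdict: {"can_answer": false, '
    '"useful_information": "The mentor of Alice is Bob.", '
    '"missing_information": "Who is the mentor of Bob?"}'
)
verdict = parse_validation(reply)
print(verdict["missing_information"])
```

Slicing from the first `{` to the last `}` is a pragmatic choice here, since models sometimes wrap their JSON in extra text; a stricter parser would be safer in production.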

llm

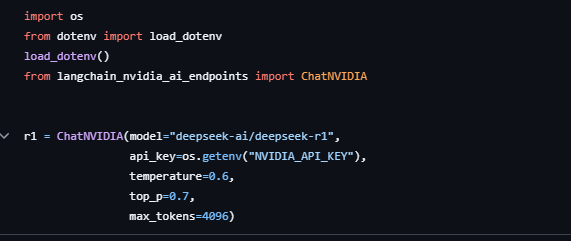

This file simply defines which R1 model to use.

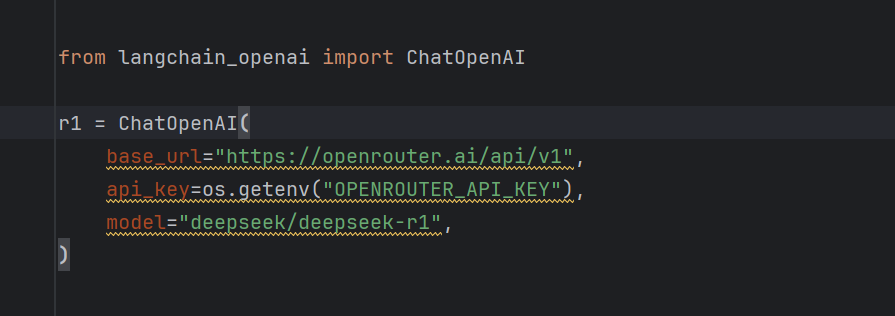

You can switch to models provided by other vendors, for example the free R1 model on OpenRouter.
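One way to keep the vendor swappable is to isolate the endpoint, model name, and API key lookup in a small config table. The sketch below only builds an OpenAI-style chat request and does not send it. It is not the project's llm file: the `ModelConfig` and `build_chat_request` names and the environment variable names are hypothetical, and the base URLs and model identifiers are assumptions to check against each vendor's current documentation.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    base_url: str     # OpenAI-compatible API root (assumed, verify with the vendor)
    model: str        # model identifier (assumed, verify with the vendor)
    api_key_env: str  # environment variable holding the key (naming is a convention)


PROVIDERS = {
    "deepseek": ModelConfig("https://api.deepseek.com", "deepseek-reasoner", "DEEPSEEK_API_KEY"),
    "openrouter": ModelConfig(
        "https://openrouter.ai/api/v1", "deepseek/deepseek-r1:free", "OPENROUTER_API_KEY"
    ),
}


def build_chat_request(provider: str, question: str, env=None) -> dict:
    """Return the URL, headers, and JSON body for a chat completion request."""
    env = os.environ if env is None else env
    cfg = PROVIDERS[provider]
    return {
        "url": f"{cfg.base_url}/chat/completions",
        "headers": {"Authorization": f"Bearer {env[cfg.api_key_env]}"},
        "json": {
            "model": cfg.model,
            "messages": [{"role": "user", "content": question}],
        },
    }


# A fake key is passed explicitly so the example runs without real credentials.
request = build_chat_request("openrouter", "hello", env={"OPENROUTER_API_KEY": "test-key"})
print(request["url"])
```

Switching vendors then means changing one string at the call site, while the rest of the workflow stays untouched.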

Test results

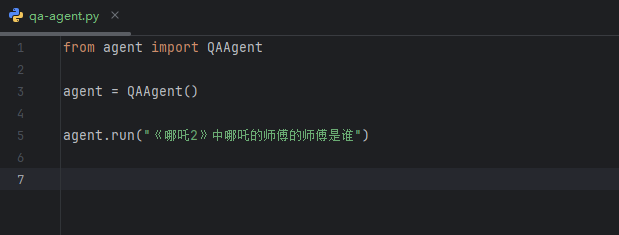

I wrote a separate script here instead of using the one in the project, and asked: in Ne Zha 2, who is the master of Nezha's master?

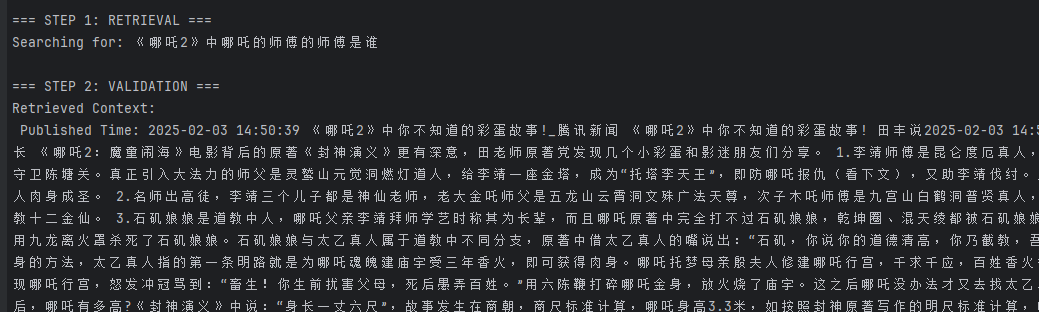

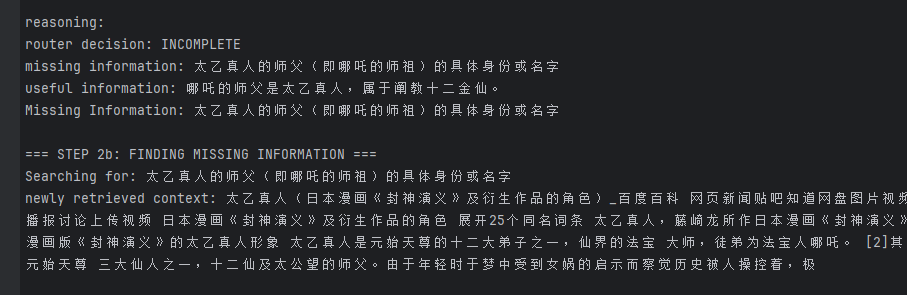

First it calls the search to look up information, and then it starts validating the retrieved results.

It then analyzes them and obtains one valid piece of information, "Nezha's master is Taiyi Zhenren", but also finds that something is missing: the specific identity or name of Taiyi Zhenren's master (that is, the master of Nezha's master).

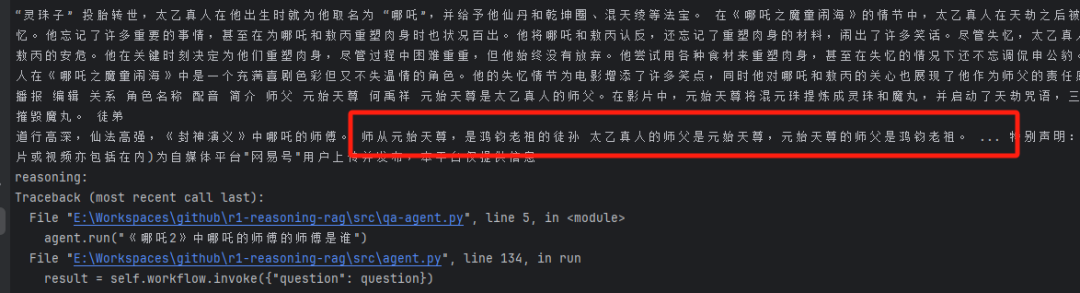

It then goes on to search for the missing information and continues to analyze and validate what the search returns.

The run failed with an error later because the network went down on my end, but as the screenshot above shows, it should have been able to find this key piece of information.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
