The World's First Multilingual ColBERT: Jina ColBERT v2 and its Matryoshka ("Russian nesting doll") technology

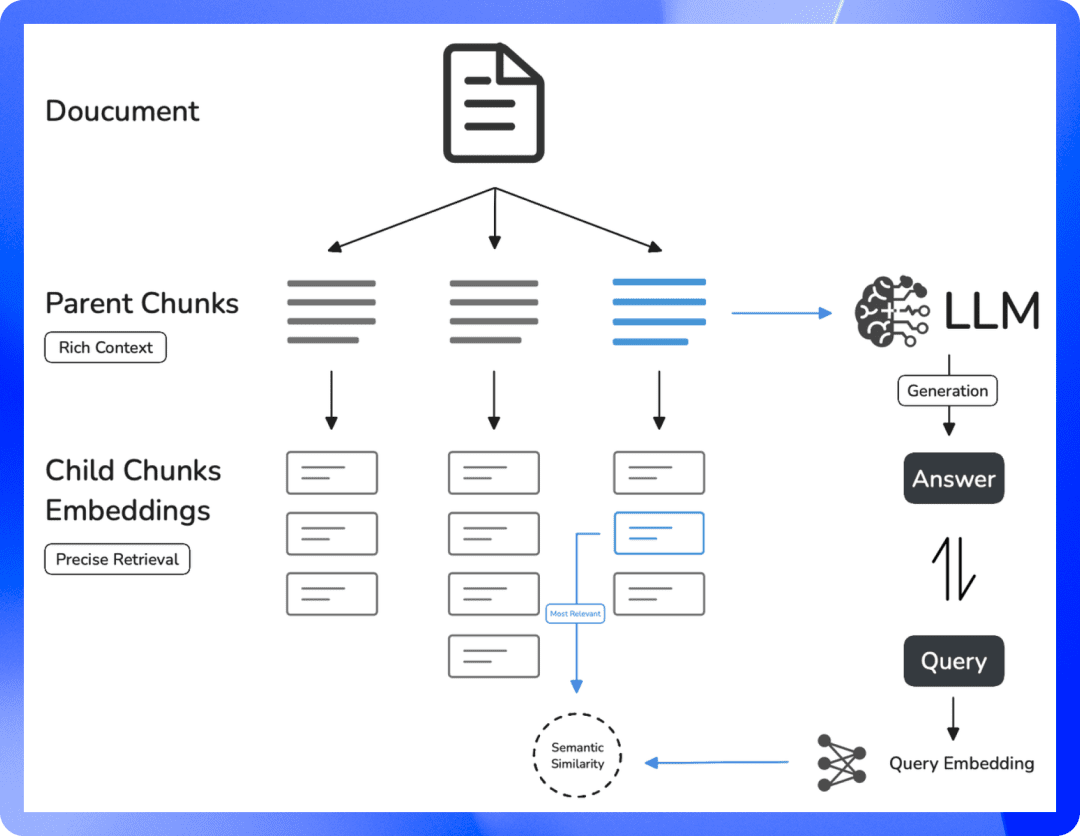

In the RAG domain, the multi-vector model ColBERT represents a document by assigning a separate vector to each of its tokens. Generating these independent vectors improves retrieval accuracy, but it also sharply increases storage requirements, and the original ColBERT supports only English, which limits where it can be applied.
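To make the per-token idea concrete, the NumPy sketch below shows ColBERT-style late interaction (often called MaxSim): each query token vector is matched to its most similar document token vector, and those maxima are summed into a single relevance score. The random vectors, token counts, and 128-dimension size are illustrative assumptions; in practice the vectors come from the model.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row (one token vector) to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def maxsim_score(query_vecs: np.ndarray, doc_vecs: np.ndarray) -> float:
    """Late interaction: for every query token, take its best-matching
    document token (maximum cosine similarity), then sum over query tokens."""
    sim = l2_normalize(query_vecs) @ l2_normalize(doc_vecs).T  # (query_tokens, doc_tokens)
    return float(sim.max(axis=1).sum())


rng = np.random.default_rng(0)
query = rng.normal(size=(32, 128))   # 32 query tokens, 128 dimensions each
doc_a = rng.normal(size=(300, 128))  # an unrelated document: one vector per token
doc_b = np.vstack([query, rng.normal(size=(100, 128))])  # contains the query's tokens

scores = {"doc_a": maxsim_score(query, doc_a), "doc_b": maxsim_score(query, doc_b)}
print(max(scores, key=scores.get))  # doc_b
```

Note how storage scales in this sketch: the 300-token document needs 300 vectors rather than one, which is the storage cost described above.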

To address these issues, we have improved ColBERT's architecture and training process, with particular breakthroughs in multilingual processing. The latest Jina-ColBERT-v2 supports 89 languages and offers customizable output dimensions, which significantly reduces storage requirements and improves the efficiency and accuracy of multilingual retrieval.

Core highlights of the new release

- Performance enhancement: English retrieval performance improves by 6.5% over the original ColBERT-v2 and by 5.4% over its predecessor, jina-colbert-v1-en.
- Multilingual support: the new version supports up to 89 languages, including Arabic, Chinese, English, Japanese, and Russian, as well as programming languages.
- Customizable output dimensions: the new version uses Matryoshka Representation Learning (MRL) and offers 128-, 96-, and 64-dimensional output vectors, so users can choose the dimensionality that fits their needs.

The full technical report can be found on arXiv: https://arxiv.org/abs/2408.16672

jina-colbert-v2 performance at a glance

In retrieval performance, jina-colbert-v2 shows a clear advantage over its predecessor, both on English retrieval tasks and in multilingual support. With the 8192-token input length inherited from Jina AI's earlier models, the advantages of the multi-vector approach can be fully exploited on long documents. The comparison below shows the core improvements:

| | jina-colbert-v2 | jina-colbert-v1-en | Original ColBERTv2 |
|---|---|---|---|
| Average of English BEIR tasks | 0.521 | 0.494 | 0.489 |
| Multilingual support | 89 languages | English only | English only |
| Output dimension | 128, 96 or 64 | Fixed 128 | Fixed 128 |
| Maximum query length | 32 tokens | 32 tokens | 32 tokens |
| Maximum document length | 8192 tokens | 8192 tokens | 512 tokens |
| Number of parameters | 560 million | 137 million | 110 million |
| Model size | 1.1 GB | 550 MB | 438 MB |

1. Performance enhancements

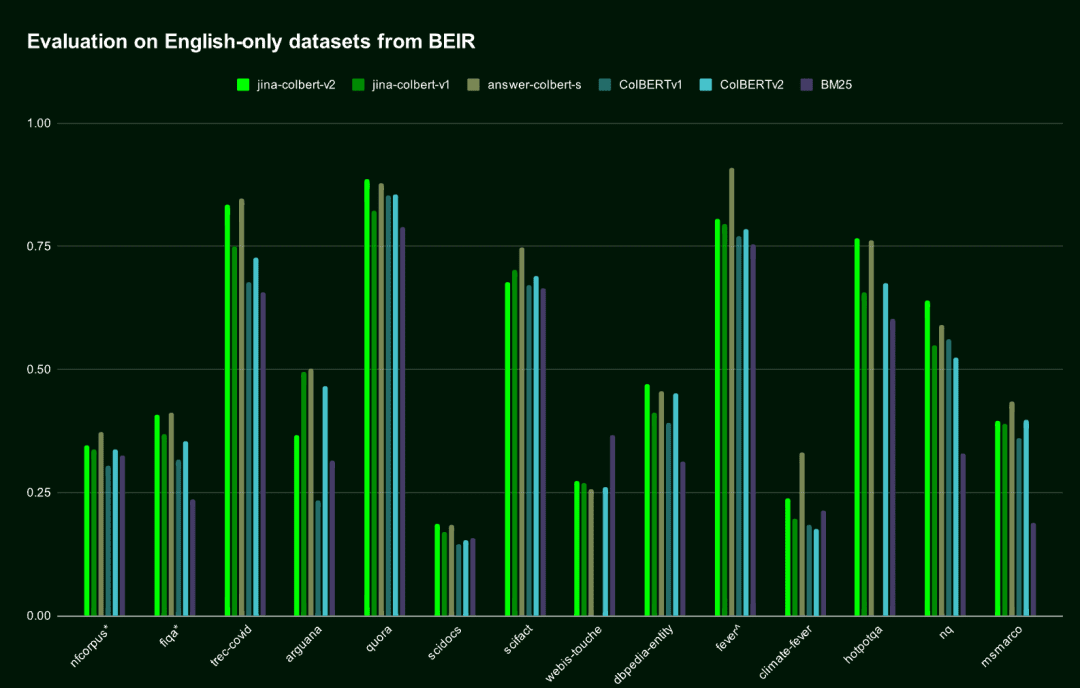

In the English retrieval task, jina-colbert-v2 outperforms the previous generation of jina-colbert-v1-en and the original ColBERT v2, approaching the level of the AnswerAI-ColBERT-small model, which was designed specifically for English.

| Model | Average score on English BEIR | Multilingual support |
|---|---|---|
| jina-colbert-v2 | 0.521 | Multilingual |
| jina-colbert-v1-en | 0.494 | English only |
| ColBERT v2.0 | 0.489 | English only |
| AnswerAI-ColBERT-small | 0.549 | English only |

Performance of Jina ColBERT v2 on the English-based BEIR dataset

2. Multilingual support

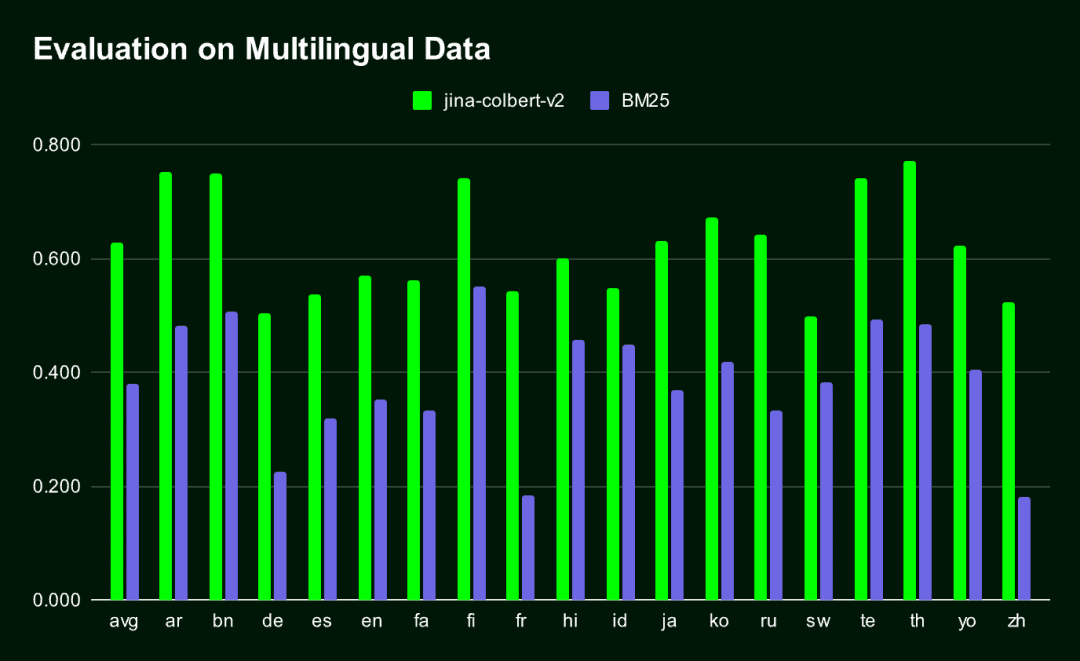

Jina-ColBERT-v2 is the only ColBERT-style model that supports multiple languages. It produces very compact embedding vectors and significantly outperforms traditional BM25 retrieval in every language benchmarked by MIRACL.

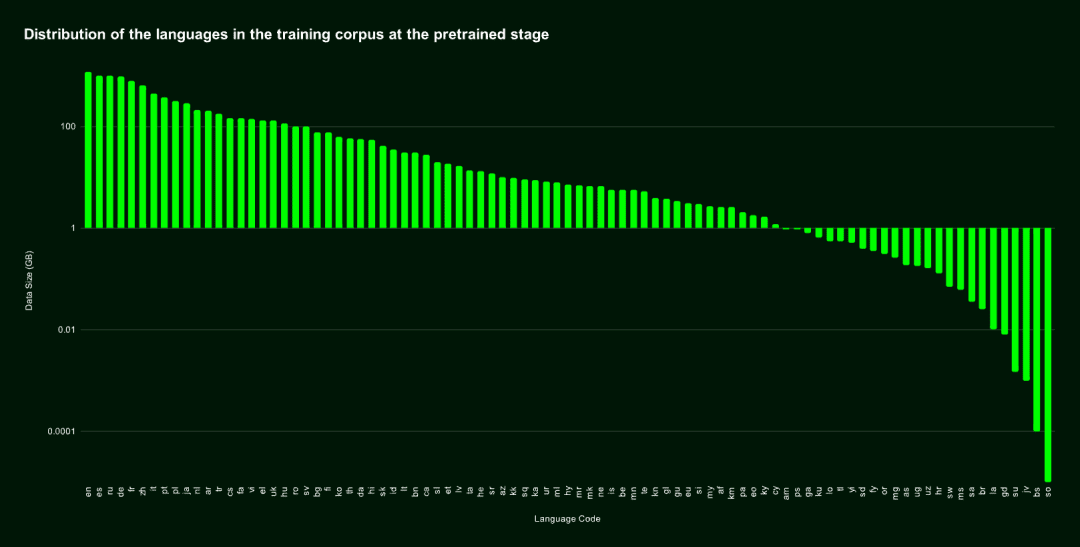

The jina-colbert-v2 training corpus covers 89 languages and includes 450 million weakly supervised, semantically related sentence pairs, question-answer pairs, and query-document pairs. Half of them are in English; the rest span 29 different non-English languages, plus 3.0% programming-language data and 4.3% cross-lingual data.

We also performed additional training on major languages such as Arabic, Chinese, French, German, Japanese, Russian, and Spanish, as well as on programming languages. By introducing an aligned bilingual text corpus, this training lets the model perform well on cross-lingual tasks.

Language distribution of the pre-training dataset

The following figure compares the performance of Jina-ColBERT-v2 and BM25 on 16 languages of the MIRACL benchmark.

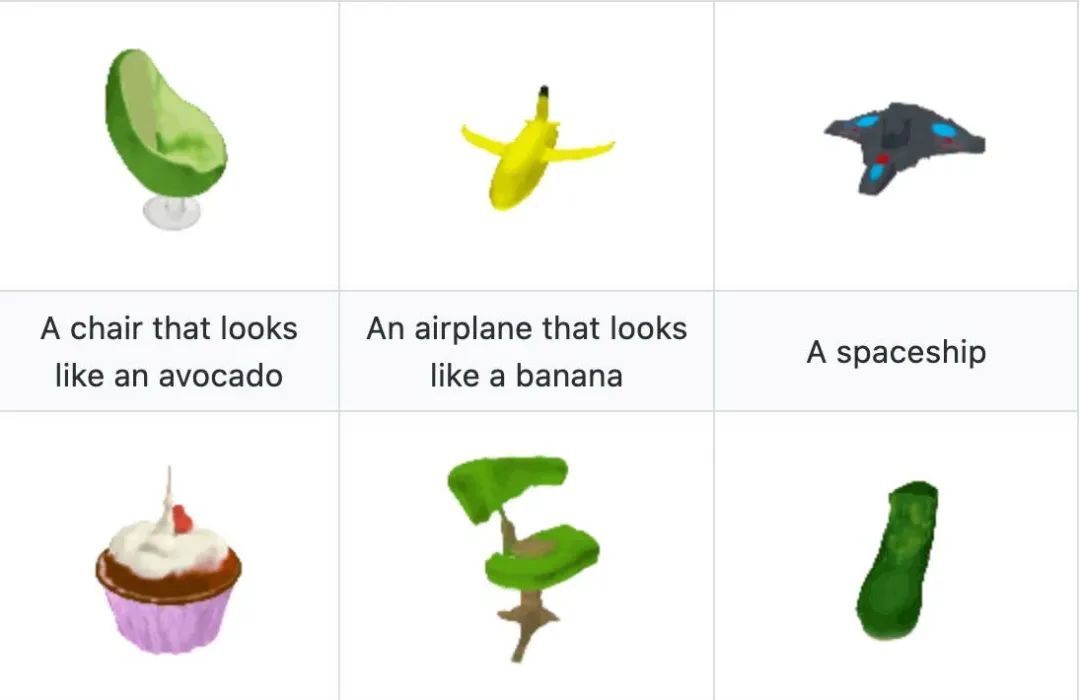

3. Matryoshka ("Russian nesting doll") representation learning

Matryoshka Representation Learning (MRL) is a flexible and efficient training method that supports several output vector dimensions while minimizing the loss of accuracy. It is implemented by attaching multiple linear layers to the model's hidden layer, each producing a different output dimension. For more details on this technique, see: https://arxiv.org/abs/2205.13147
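The core idea of MRL from the cited paper can be sketched as a loss summed over several nested prefixes of one embedding, so that each truncated prefix is trained to work on its own. This is a simplified sketch under stated assumptions, not Jina's training code: it uses one pooled vector per text and prefix truncation as in the original MRL paper, whereas jina-colbert-v2 produces per-token vectors and uses separate linear layers for each dimension. The in-batch contrastive loss and the temperature of 0.05 are also assumptions.

```python
import numpy as np

DIMS = (128, 96, 64)  # the output sizes jina-colbert-v2 offers


def normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def info_nce(q: np.ndarray, d: np.ndarray, temperature: float = 0.05) -> float:
    """In-batch contrastive loss (assumed): row i of q should match row i of d."""
    logits = (q @ d.T) / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


def matryoshka_loss(q_full: np.ndarray, d_full: np.ndarray, dims=DIMS) -> float:
    """Sum the loss over nested prefixes (the first m dimensions), so each
    truncated embedding is trained to be useful on its own."""
    return sum(info_nce(normalize(q_full[:, :m]), normalize(d_full[:, :m])) for m in dims)


rng = np.random.default_rng(0)
queries = rng.normal(size=(8, 128))
positives = queries + 0.1 * rng.normal(size=(8, 128))  # matching pairs (noisy copies)
mismatched = np.roll(positives, 1, axis=0)             # every query paired with a wrong text

print(matryoshka_loss(queries, positives) < matryoshka_loss(queries, mismatched))  # True
```

Because every prefix contributes to the loss, cutting a trained embedding down to 96 or 64 values still leaves a usable representation, which is the property MRL is designed to provide.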

By default, Jina-ColBERT-v2 produces 128-dimensional embeddings, but you can choose shorter vectors, such as 96 or 64 dimensions. Shortening the vectors by 25% or 50% has an almost negligible impact on performance (a drop of less than 1.5 percentage points in nDCG@10). In other words, whatever vector length you need, the model stays efficient and accurate.

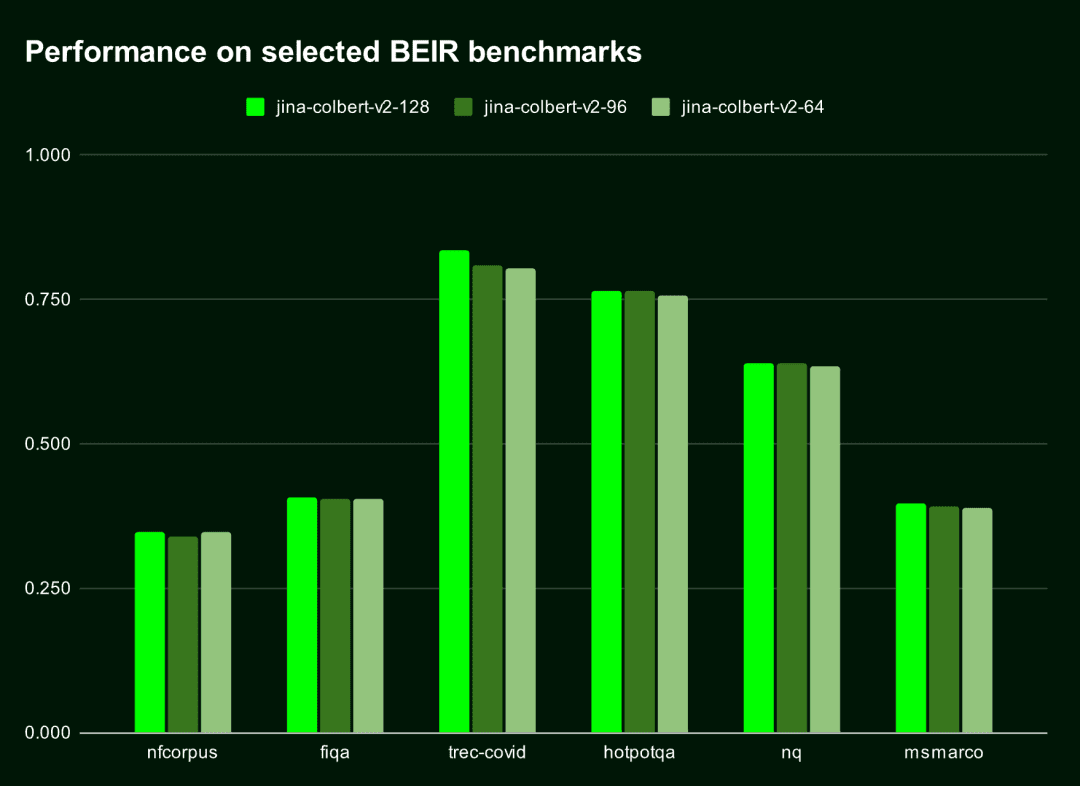

The table below shows the average nDCG@10 (the quality of the top ten results) of Jina-ColBERT-v2 across six BEIR benchmarks. The gap between 128 and 96 dimensions is under 1 percentage point (0.565 vs. 0.558), and the gap between 128 and 64 dimensions is under 1.5 percentage points (0.565 vs. 0.556).

| Output dimension | Average nDCG@10 (6 BEIR benchmarks) |
|---|---|
| 128 | 0.565 |
| 96 | 0.558 |
| 64 | 0.556 |
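The drops quoted above can be checked directly against the table. The short calculation below shows that they hold as absolute differences in nDCG@10 points; measured relative to the 128-dimension score, the drops are about 1.2% and 1.6%.

```python
# Average nDCG@10 over six BEIR benchmarks, copied from the table above
scores = {128: 0.565, 96: 0.558, 64: 0.556}

baseline = scores[128]
drops = {}
for dim in (96, 64):
    diff = baseline - scores[dim]
    absolute = diff * 100             # drop in nDCG@10 percentage points
    relative = diff / baseline * 100  # drop as a percentage of the 128-dim score
    drops[dim] = (absolute, relative)
    print(f"{dim}: -{absolute:.1f} points, -{relative:.1f}% relative")
# 96: -0.7 points, -1.2% relative
# 64: -0.9 points, -1.6% relative
```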

The following figure visualizes the performance of Jina-ColBERT-v2 under different output dimensions.

Choosing smaller output vectors not only saves storage space, but also improves computational speed, especially in scenarios where vectors need to be compared or distances need to be computed, such as vector retrieval systems.


There is also a very noticeable drop in cost. According to Qdrant's Cloud Cost Estimator, storing 100 million documents on AWS with 128-dimensional vectors costs $1,319.24 per month, whereas with 64-dimensional vectors, the cost can be cut right in half, down to $659.62 per month. That's not only a speed boost, but also a significant cost reduction.
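Why halving the dimension halves the bill: raw storage for multi-vector embeddings grows linearly with both the number of stored token vectors and their dimension. The back-of-the-envelope sketch below assumes float32 values and a hypothetical average of 300 stored token vectors per document. It ignores index and metadata overhead, so it will not reproduce the exact prices quoted above; it only shows the proportionality.

```python
BYTES_PER_FLOAT32 = 4


def multivector_storage_bytes(num_docs: int, tokens_per_doc: int, dim: int) -> int:
    """Raw size of all token vectors: one float32 vector of `dim` values per token."""
    return num_docs * tokens_per_doc * dim * BYTES_PER_FLOAT32


NUM_DOCS = 100_000_000  # 100 million documents, as in the Qdrant example above
TOKENS_PER_DOC = 300    # hypothetical average number of stored token vectors

for dim in (128, 96, 64):
    tib = multivector_storage_bytes(NUM_DOCS, TOKENS_PER_DOC, dim) / 2**40
    print(f"{dim} dims: {tib:.1f} TiB")
```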

How to get started with Jina ColBERT v2

Jina ColBERT v2 is now live on the Jina Search Foundation API, AWS Marketplace, and Azure. It is also open-sourced on Hugging Face under the CC BY-NC-4.0 license for non-commercial use.

- AWS Marketplace: https://aws.amazon.com/marketplace/seller-profile?id=seller-stch2ludm6vgy
- Azure: https://azuremarketplace.microsoft.com/en-gb/marketplace/apps?search=Jina
- Hugging Face: https://huggingface.co/jinaai/jina-colbert-v2

Via the Jina Search Foundation API

For embeddings

The easiest and most direct way to get jina-colbert-v2 embeddings is through the Jina Embedding API.

Two key parameters:

- dimensions: the output dimension of the embeddings. The default is 128; you can also choose 64.
- input_type: whether the input is a query or a document. Queries are limited to 32 tokens and are truncated automatically. Documents can be encoded offline, so at query time only the query itself needs to be encoded, which significantly speeds up processing.

You can get a Jina API key at jina.ai/embeddings.

```bash
# "dimensions": 128 (default) or 64.
# "input_type": use "document" when encoding documents and "query" when encoding queries.
curl https://api.jina.ai/v1/multi-vector \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <YOUR JINA API KEY>" \
  -d '{
    "model": "jina-colbert-v2",
    "dimensions": 128,
    "input_type": "document",
    "embedding_type": "float",
    "input": [
      "Enter your document text here",
      "You can send multiple texts",
      "Each text can be up to 8192 tokens long"
    ]
  }'
```
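The same request can be sent from Python. This sketch uses only the standard library (`urllib`) and the endpoint, fields, and `data[i]["embeddings"]` response shape used elsewhere in this article. The helper names `build_payload` and `embed` and the `JINA_API_KEY` environment variable are hypothetical, and the `input_type` check simply mirrors the query/document rule described above.

```python
import json
import os
import urllib.request

API_URL = "https://api.jina.ai/v1/multi-vector"


def build_payload(texts, input_type="document", dimensions=128):
    """Build the JSON body for a jina-colbert-v2 multi-vector request (hypothetical helper)."""
    if input_type not in ("query", "document"):
        raise ValueError("input_type must be 'query' or 'document'")
    return {
        "model": "jina-colbert-v2",
        "dimensions": dimensions,
        "input_type": input_type,
        "embedding_type": "float",
        "input": list(texts),
    }


def embed(texts, input_type="document", dimensions=128):
    """POST the request and return one list of token vectors per input text."""
    body = json.dumps(build_payload(texts, input_type, dimensions)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['JINA_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        rows = json.load(response)["data"]
    return [row["embeddings"] for row in rows]
```

Encode documents once with `embed(docs, "document")` and store the results; at query time only `embed([query], "query")` needs to run.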

For reranking

To use jina-colbert-v2 through the Jina Reranker API, pass in a query and a list of documents to get back match scores for reranking. Construct the request as follows:

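```bash
curl https://api.jina.ai/v1/rerank \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <YOUR JINA API KEY>" \
  -d '{
    "model": "jina-colbert-v2",
    "query": "What is the population of Berlin?",
    "top_n": 3,
    "documents": [
      "In 2023, the population of Berlin grew by 0.7% compared with the previous year. As a result, by the end of last year about 27,300 more people lived in Berlin than in 2022. People aged 30 to 40 form the largest age group. Berlin has about 881,000 foreign residents from about 170 countries, and the average age is 42.5 years.",
      "Mount Berlin is a glacier-covered volcano in Marie Byrd Land, Antarctica, about 100 kilometers (62 miles) from the Amundsen Sea. It is a peak roughly 20 kilometers (12 miles) wide with parasitic vents, formed by two coalesced volcanoes: Berlin volcano, with a crater 2 kilometers (1.2 miles) wide, and Merrem Peak, about 3.5 kilometers (2.2 miles) from Berlin volcano, with a crater 2.5 by 1 kilometers (1.55 by 0.62 miles) wide.",
      "Population data by nationality and federal state, as of December 31, 2023",
      "The Berlin metropolitan area has more than 4.5 million inhabitants, the most populous metropolitan area in Germany. The Berlin-Brandenburg capital region has about 6.2 million inhabitants and is the second-largest metropolitan region in Germany after the Rhine-Ruhr region, as well as the sixth-largest metropolitan region in the European Union by GDP.",
      "Irving Berlin (born Israel Beilin) was an American composer and songwriter. His music is part of the Great American Songbook. Berlin received many honors, including an Academy Award, a Grammy Award, and a Tony Award.",
      "Berlin is a town in the Capitol Planning Region of Connecticut, United States. Its population was 20,175 at the 2020 census.",
      "Berlin is the capital and largest city of Germany, by both area and population. Its more than 3.85 million inhabitants make it the most populous city in the European Union, as measured by population within city limits.",
      "Berlin, Berlin is a television series produced for ARD that aired in the evening schedule of Das Erste from 2002 to 2005. Its directors included Franziska Meyer Price, Christoph Schnee, Sven Unterwaldt, and Titus Selge."
    ]
  }'
```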

Note the top_n parameter, which specifies how many documents are returned. If you only need the best match, set top_n to 1.
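Conceptually, top_n keeps only the highest-scoring documents after reranking. The local sketch below illustrates that behavior with made-up scores; the `top_n` function is a hypothetical helper, not part of the Jina API, and real scores come from the API response.

```python
def top_n(documents, scores, n):
    """Return the n (index, score, document) triples with the highest scores."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return [(i, s, documents[i]) for i, s in ranked[:n]]


docs = ["Berlin population in 2023", "Mount Berlin volcano", "Irving Berlin biography"]
scores = [0.91, 0.12, 0.05]  # illustrative values only
print(top_n(docs, scores, 1))  # [(0, 0.91, 'Berlin population in 2023')]
```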

For more sample code in Python and other languages, visit https://jina.ai/embeddings, or select jina-colbert-v2 from the drop-down menu at https://jina.ai/reranker/.

Via Stanford ColBERT

If you already use the Stanford ColBERT library, you can switch to Jina ColBERT v2 seamlessly: simply specify jinaai/jina-colbert-v2 as the model source.

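```python
from colbert.infra import ColBERTConfig
from colbert.modeling.checkpoint import Checkpoint

ckpt = Checkpoint("jinaai/jina-colbert-v2", colbert_config=ColBERTConfig())

docs = ["Your list of texts"]
queries = ["Your query"]

doc_vectors = ckpt.docFromText(docs)         # per-token document embeddings
query_vectors = ckpt.queryFromText(queries)  # per-token query embeddings
```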

Via RAGatouille

Jina ColBERT v2 is also integrated into RAGatouille and can be downloaded and used through the RAGPretrainedModel.from_pretrained() method.

```python
from ragatouille import RAGPretrainedModel

RAG = RAGPretrainedModel.from_pretrained("jinaai/jina-colbert-v2")

docs = ["Your list of texts"]
RAG.index(docs, index_name="your_index_name")

query = "Your query"
results = RAG.search(query)
```

Via Qdrant

Starting with version 1.10, Qdrant supports multivectors and late-interaction models, so you can use jina-colbert-v2 directly. Whether you deploy Qdrant locally or use the cloud-hosted version, you can insert documents into a multivector collection as long as you configure the multivector_config parameter correctly in the client.

Creating a new collection using the MAX_SIM operation

```python
from qdrant_client import QdrantClient, models

qdrant_client = QdrantClient(
    url="<YOUR_ENDPOINT>",
    api_key="<YOUR_API_KEY>",
)

qdrant_client.create_collection(
    collection_name="{collection_name}",
    vectors_config={
        # Named multivector field; size must match the embedding dimension requested from the API
        "colbert": models.VectorParams(
            size=128,
            distance=models.Distance.COSINE,
            multivector_config=models.MultiVectorConfig(
                comparator=models.MultiVectorComparator.MAX_SIM
            ),
        )
    },
)
```

⚠️ Setting the multivector_config parameter correctly is the key to using ColBERT models in Qdrant.

Inserting documents into a multivector collection

```python
import requests
from qdrant_client import QdrantClient, models

url = "https://api.jina.ai/v1/multi-vector"
headers = {
    "Content-Type": "application/json",
    "Authorization": "Bearer <YOUR BEARER>",
}
data = {
    "model": "jina-colbert-v2",
    "input_type": "document",  # documents must be encoded as "document", not "query"
    "embedding_type": "float",
    "input": [
        "Enter your text here",
        "You can send multiple texts",
        "Each text can be up to 8192 tokens long",
    ],
}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()
rows = response.json()["data"]

qdrant_client = QdrantClient(
    url="<YOUR_ENDPOINT>",
    api_key="<YOUR API_KEY>",
)

# Upsert all documents in one call; each point stores its token vectors
# under the named vector "colbert" defined when the collection was created.
qdrant_client.upsert(
    collection_name="{collection_name}",
    points=[
        models.PointStruct(
            id=i,
            vector={"colbert": row["embeddings"]},
            payload={"text": data["input"][i]},
        )
        for i, row in enumerate(rows)
    ],
)
```

Querying the collection

```python
import requests
from qdrant_client import QdrantClient

url = "https://api.jina.ai/v1/multi-vector"
headers = {
    "Content-Type": "application/json",
    "Authorization": "Bearer <YOUR BEARER>",
}
data = {
    "model": "jina-colbert-v2",
    "input_type": "query",
    "embedding_type": "float",
    "input": [
        "How many input tokens do Jina AI's embedding models support?"
    ],
}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()
vector = response.json()["data"][0]["embeddings"]  # the query's token vectors

qdrant_client = QdrantClient(
    url="<YOUR_ENDPOINT>",
    api_key="<YOUR API_KEY>",
)

results = qdrant_client.query_points(
    collection_name="{collection_name}",
    query=vector,
    using="colbert",  # search the named multivector field
)

print(results)
```

Summary

Building on its predecessor, jina-colbert-v2 covers 89 languages and offers several embedding output dimensions, letting users flexibly balance accuracy against efficiency and save on compute and time. Visit https://jina.ai to try it now and get 1 million free tokens.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
