Proposition Retrieval

Original: Dense X Retrieval: What Retrieval Granularity Should We Use?

Note: This method is suitable for a small number of models, such as the OpenAI series, the Claude series, Mixtral, Yi, Qwen, and so on.

Abstract

In open-domain natural language processing (NLP) tasks, dense retrieval has become an important method for acquiring relevant contextual or world knowledge. When we use a learned dense retriever to retrieve from a corpus at inference time, an often overlooked design choice is the retrieval unit, i.e., the granularity at which the corpus is indexed, e.g., documents, paragraphs, or sentences. We find that the choice of retrieval unit significantly affects both retrieval performance and the performance of downstream tasks. In contrast to the traditional approach of using paragraphs or sentences, we introduce a new retrieval unit for dense retrieval: the proposition. Propositions are defined as atomic expressions in text, each of which encapsulates a unique fact and is presented in a concise, self-contained natural language format. We empirically compare different retrieval granularities. Our results show that proposition-based retrieval significantly outperforms traditional paragraph- or sentence-based approaches in dense retrieval. In addition, retrieval by propositions enhances the performance of downstream question-answering (QA) tasks because the retrieved text contains question-relevant information more compactly, reducing the need for long input contexts and minimizing the inclusion of irrelevant information.

1 Introduction

Dense retrievers are a class of techniques used to access external sources of information for knowledge-intensive tasks. Before we can use a learned dense retriever to retrieve from a corpus, an important design decision we must make is the retrieval unit, that is, the level of granularity at which we segment and index the retrieval corpus for inference. In practice, the choice of retrieval unit, e.g., documents, fixed-length paragraph chunks, or sentences, is usually predetermined by how the dense retrieval model was instantiated or trained.

In this paper, we explore a research question that has been neglected in dense retrieval inference: at what retrieval granularity should we segment and index the retrieval corpus? We find that choosing the appropriate retrieval granularity at inference time can be a simple but effective strategy to improve the performance of dense retrievers on both retrieval and downstream tasks.

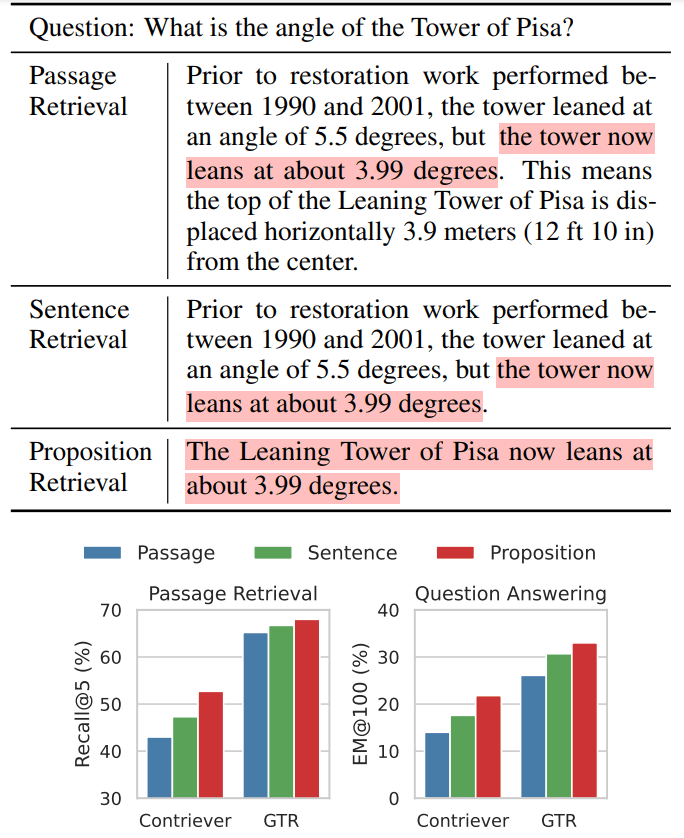

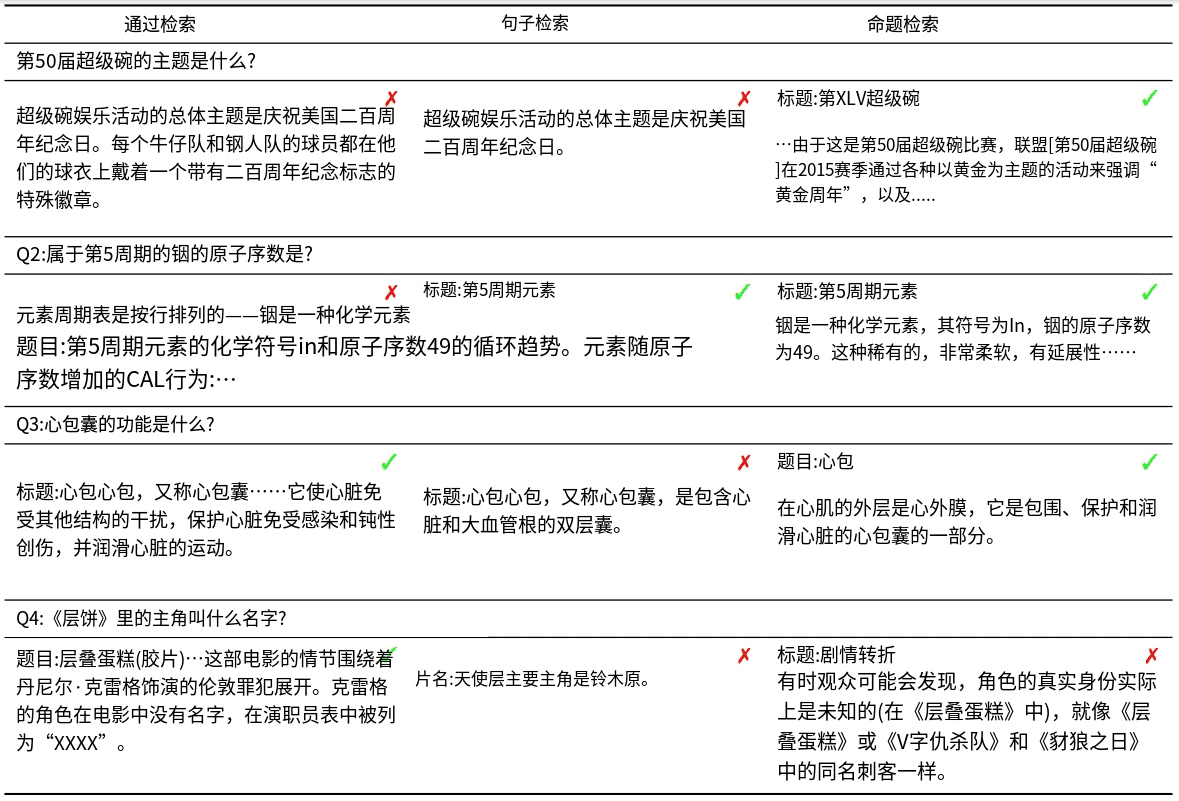

We illustrate our intuition with an example of open-domain question answering (QA) (Table 1).

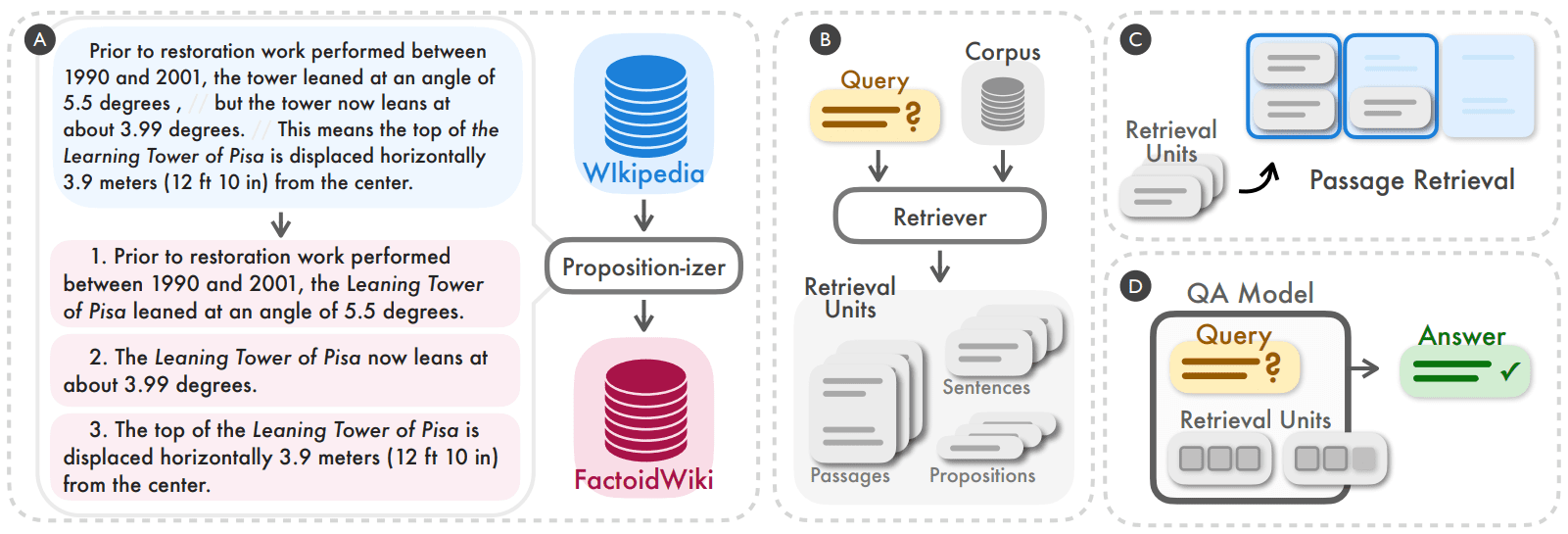

Figure 1: Examples of three retrieval granularities for Wikipedia text when using dense retrieval

Top: An example of the three retrieval units into which Wikipedia text can be divided when using dense retrieval.

Bottom: We observe that retrieval by propositions yields the best retrieval performance in both paragraph retrieval tasks and downstream open-domain question-answering (QA) tasks, e.g., with Contriever (Izacard et al., 2022) or GTR (Ni et al., 2022) as the backbone retriever. Highlighted text indicates the part that contains the answer to the question.

This example shows the same model retrieving text at three different levels of granularity. In principle, paragraphs, as the coarser-grained unit, can provide more relevant information for the question. However, paragraphs often contain additional details that may distract both the retriever and the language model in the downstream task (e.g., the restoration period and horizontal displacement in the example in Table 1). Sentence-level indexing, on the other hand, provides a finer-grained approach but does not fully address this issue, because sentences can still be complex and compound, and they are often not self-contained, lacking the contextual information needed to judge query-document relevance.

To address these shortcomings of traditional retrieval units (e.g., paragraphs or sentences), we propose the use of propositions as a novel retrieval unit for dense retrieval. Propositions are defined as atomic expressions in a text, each of which encapsulates a unique fact and is presented in a concise, self-contained natural language format. We show an example proposition in Table 1. The proposition describes the current tilt angle of the Leaning Tower of Pisa in a self-contained manner and answers exactly what the question asks. We provide a more detailed definition and description in Section 2.

To validate the effectiveness of using propositions as retrieval units for dense retriever inference, we first processed and indexed an English Wikipedia dump with all documents split into propositions, which we call FACTOIDWIKI. We then conducted experiments on five different open-domain QA datasets and empirically compared the performance of six dual-encoder retrievers when Wikipedia is indexed by paragraphs, sentences, and propositions. Our evaluation is twofold: we examine both retrieval performance and the impact on downstream QA tasks. Notably, our findings suggest that proposition-based retrieval outperforms sentence- and paragraph-based approaches in terms of generalization, as discussed in Section 5. This suggests that propositions act as compact and content-rich contexts that enable dense retrievers to access precise information while maintaining proper context. The average improvement in Recall@20 over paragraph-based retrieval is +10.1 for the unsupervised dense retrievers and +2.2 for the supervised retrievers. In addition, we observe a clear advantage in downstream QA performance when using proposition-based retrieval, as detailed in Section 6. Given the typically limited input length of a language model, propositions inherently provide a higher density of question-relevant information.

The main contributions of our paper are:

- We propose to use propositions as retrieval units when indexing a retrieval corpus to improve dense retrieval performance.

- We introduce FACTOIDWIKI, a processed English Wikipedia dump in which each page is split into multiple granularities: 100-word paragraphs, sentences, and propositions.

Figure 2: We found that segmenting and indexing the retrieval corpus at the proposition level can be a simple and effective strategy to improve the generalization performance of dense retrievers at inference time (A, B). We empirically compare the performance of dense retrievers on retrieval and downstream open-domain question-answering (QA) tasks when working at the 100-word paragraph, sentence, or proposition level (C, D).

2 Propositions as retrieval units

The goal of our study is to empirically understand how the granularity of a retrieval corpus affects the performance of a dense retrieval model. In addition to commonly used retrieval units, such as 100-word paragraphs or sentences, we propose the use of propositions as an alternative choice of retrieval unit. Here, propositions represent atomic expressions of meaning in a text and are defined by the following three principles.

- Each proposition should correspond to a unique fragment of meaning in the text, and the combination of all propositions will represent the semantics of the entire text.

- The proposition should be minimal, i.e., it cannot be further divided into separate propositions.

- Propositions should be contextualized and self-contained. Propositions should contain all the necessary context from the text (e.g., co-reference) to explain their meaning.

The use of propositions as retrieval units was inspired by a series of recent works that have succeeded in representing and evaluating the semantics of texts at the proposition level. We demonstrate the notion of propositions, and how a paragraph can be split into its set of propositions, through the example on the left side of Figure 2. The paragraph contains three propositions, each corresponding to a unique fact about the Leaning Tower of Pisa: the angle before restoration, the current angle, and the horizontal displacement. Within each proposition, the necessary context from the passage is included so that the meaning of the proposition can be interpreted independently of the original text, e.g., the reference to the tower in the first proposition is resolved to its full name, the Leaning Tower of Pisa. We expect each proposition to describe exactly one contextualized atomic fact, so our intuition is that propositions are well suited as retrieval units for information retrieval problems.

3 FACTOIDWIKI: Proposition-level indexing and retrieval of Wikipedia

We empirically compared the use of 100-word paragraphs, sentences, and propositions as retrieval units on Wikipedia, a commonly used retrieval source in knowledge-intensive NLP tasks. To allow fair comparisons across granularities, we processed the 2021-10-13 English Wikipedia dump, as used by Bohnet et al. (2022). We split each document's text into three different granularities: 100-word paragraphs, sentences, and propositions. Appendix A contains detailed information about the paragraph- and sentence-level segmentation of the corpus.

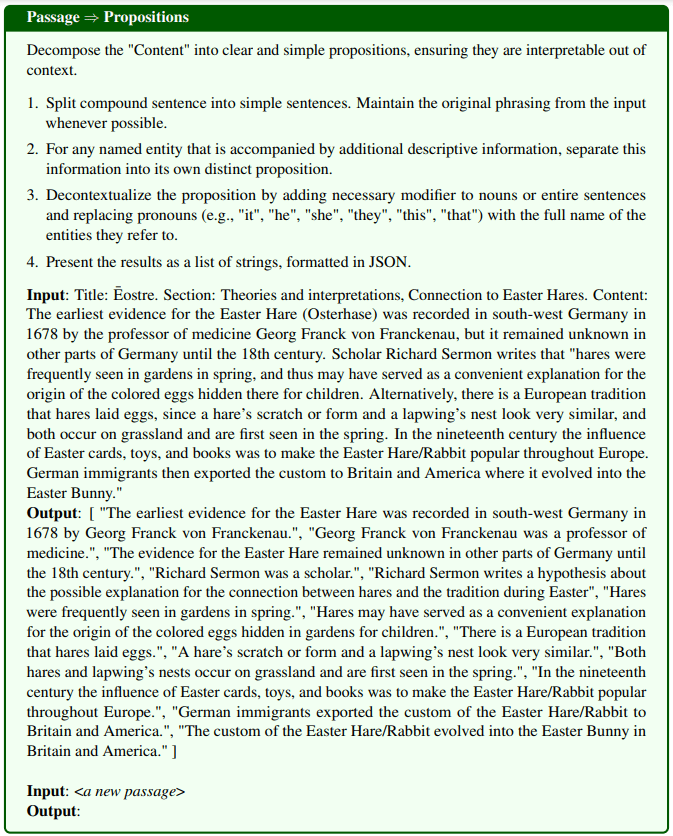

Parsing passages into propositions: To segment Wikipedia pages into propositions, we fine-tuned a text generation model that we call the Propositionizer. The Propositionizer takes a paragraph as input and generates the list of propositions within the paragraph. Following the approach of Chen et al. (2023b), we train the Propositionizer using a two-step distillation process. First, we prompt GPT-4 with an instruction containing the proposition definition and a one-shot demonstration. We include details of the prompt in Figure 8. We start with a set of 42k passages and use GPT-4 to generate a seed set of passage-to-proposition pairs. Next, we use the seed set to fine-tune a Flan-T5-large model.

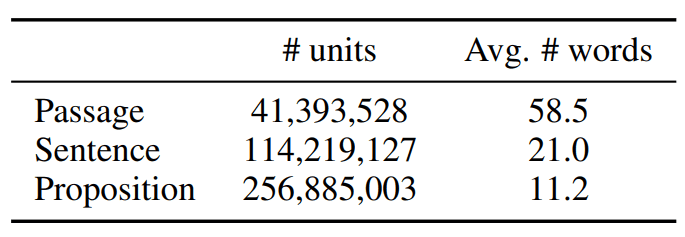

We refer to the processed corpus as FACTOIDWIKI. The statistics of FACTOIDWIKI are shown in Table 1.

Table 1: Statistics for text units in the English Wikipedia data dump for October 13, 2021

4 Experimental setup

To assess the impact of the three choices of retrieval units, we conducted experiments on five different open-domain QA datasets on FACTOIDWIKI. For each dataset, we evaluated paragraph retrieval and downstream QA performance when the dense retriever uses Wikipedia indexed at different granularities.

4.1 Open domain QA datasets

We conduct our evaluation on five different open-domain QA datasets that use Wikipedia as a retrieval source: Natural Questions (NQ) (Kwiatkowski et al., 2019), TriviaQA (TQA) (Joshi et al., 2017), Web Questions (WebQ) (Berant et al., 2013), SQuAD (Rajpurkar et al., 2016), and Entity Questions (EQ) (Sciavolino et al., 2021).

4.2 Dense retrieval models

We compare the performance of the following six supervised or unsupervised dense retriever models. In this context, supervised models are those trained with human-labeled query-paragraph pairs as supervision, and unsupervised models are those trained without such labels.

- SimCSE is a BERT-base encoder trained on randomly sampled unlabeled sentences from Wikipedia.

- Contriever is an unsupervised retriever instantiated as a BERT-base encoder. It is trained with contrastive learning on pairs constructed from unlabeled Wikipedia and web-crawl documents.

- DPR is a dual-encoder BERT-base model fine-tuned on five open-domain QA datasets, including four of the datasets in our evaluation (NQ, TQA, WebQ, and SQuAD).

- ANCE uses the same setup as DPR and is trained with Approximate nearest neighbor Negative Contrastive Estimation (ANCE), a training method based on hard negatives.

- TAS-B is a dual-encoder BERT-base model distilled from a cross-attention teacher model and fine-tuned on MS MARCO.

- GTR is a T5-base encoder pre-trained on unlabeled QA pairs from online forums and fine-tuned on MS MARCO and Natural Questions.

4.3 Paragraph retrieval evaluation

We evaluate retrieval performance at the paragraph level when the corpus is indexed at the paragraph, sentence, or proposition level. For sentence- and proposition-level retrieval, we follow the setup introduced by Lee et al. (2021b), where the score of a paragraph is the maximum similarity score between the query and any sentence or proposition in that paragraph. In practice, we first retrieve a slightly larger number of text units, map each unit to its source paragraph, and return the top k unique paragraphs. We use Recall@k as our evaluation metric, defined as the percentage of questions for which the correct answer is found within the top k retrieved paragraphs.
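The unit-to-paragraph mapping and the Recall@k metric described above can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: the function names, the input layout (a ranked list of `(unit_id, score)` hits and a `unit_to_paragraph` dictionary), and the use of case-insensitive substring matching to decide whether a paragraph "contains the answer" are all assumptions made for this example.

```python
from typing import Dict, List, Sequence, Tuple


def top_k_paragraphs(
    unit_hits: Sequence[Tuple[str, float]],
    unit_to_paragraph: Dict[str, str],
    k: int,
) -> List[str]:
    """Score each paragraph by the maximum score of its retrieved units,
    then return the k best unique paragraph ids."""
    best: Dict[str, float] = {}
    for unit_id, score in unit_hits:
        pid = unit_to_paragraph[unit_id]
        if pid not in best or score > best[pid]:
            best[pid] = score
    ranked = sorted(best, key=lambda pid: best[pid], reverse=True)
    return ranked[:k]


def recall_at_k(
    retrieved: Sequence[Sequence[str]],
    paragraph_text: Dict[str, str],
    answers: Sequence[Sequence[str]],
    k: int,
) -> float:
    """Percentage of questions for which some gold answer string occurs in
    one of the top-k paragraphs (substring matching is an assumption)."""
    hits = 0
    for pids, golds in zip(retrieved, answers):
        texts = [paragraph_text[p].lower() for p in pids[:k]]
        if any(g.lower() in t for g in golds for t in texts):
            hits += 1
    return 100.0 * hits / len(retrieved)
```

Because several sentences or propositions can map to the same paragraph, more than k units generally have to be retrieved before k unique paragraphs are available, which is why the text retrieves "slightly more" units first.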

4.4 Downstream QA evaluation

To understand the impact of using different retrieval units on downstream open-domain QA tasks, we evaluate the use of the retrieval model in a retrieve-then-read setting. In the retrieve-then-read setting, the retrieval model first retrieves k text units for a given query. These k retrieved text units are then used as inputs to the reader model along with the query to arrive at the final answer. Usually, the choice of k is limited by the maximum input length constraint or computational budget of the reader model, which scales with the number of input tokens.

Therefore, we follow an evaluation setup where the maximum number of retrieved words is limited to l = 100 or 500, i.e., only the first l words from a paragraph, sentence, or proposition level retrieval are entered into the reader model. We evaluate the percentage of questions for which the predicted answer exactly matches (EM) the true answer. We refer to our metric as EM@l. For our evaluation, we use T5-large size UnifiedQA-v2 as the reader model.
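A rough sketch of the EM@l protocol follows. It is illustrative only: `reader` is a hypothetical callable standing in for the UnifiedQA-v2 reader, words are obtained by whitespace splitting, and answers are normalized in the common SQuAD style (lowercasing, removing punctuation and articles). None of these details are specified in the text above.

```python
import re
import string
from typing import Callable, List, Sequence


def first_l_words(units: Sequence[str], l: int) -> str:
    """Concatenate retrieved units in rank order and keep the first l words."""
    words: List[str] = []
    for unit in units:
        words.extend(unit.split())
        if len(words) >= l:
            break
    return " ".join(words[:l])


def normalize(text: str) -> str:
    """Lowercase, strip punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def em_at_l(
    questions: Sequence[str],
    retrieved_units: Sequence[Sequence[str]],
    gold_answers: Sequence[Sequence[str]],
    reader: Callable[[str, str], str],  # hypothetical: (question, context) -> answer
    l: int,
) -> float:
    """Percentage of questions whose predicted answer exactly matches a gold answer."""
    correct = 0
    for question, units, golds in zip(questions, retrieved_units, gold_answers):
        context = first_l_words(units, l)
        prediction = normalize(reader(question, context))
        if any(prediction == normalize(g) for g in golds):
            correct += 1
    return 100.0 * correct / len(questions)
```

With a fixed word budget, finer-grained units let more distinct retrieved items fit into the reader input, which is the effect the EM@l metric is designed to expose.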

5 Results: paragraph retrieval

In this section, we report and discuss the performance of the retrieval task. Our results show that although none of the models were trained on proposition-level data, all retrieval models exhibit comparable or superior performance to paragraph- or sentence-based retrieval when the corpus is indexed at the proposition level.

5.1 Paragraph retrieval performance

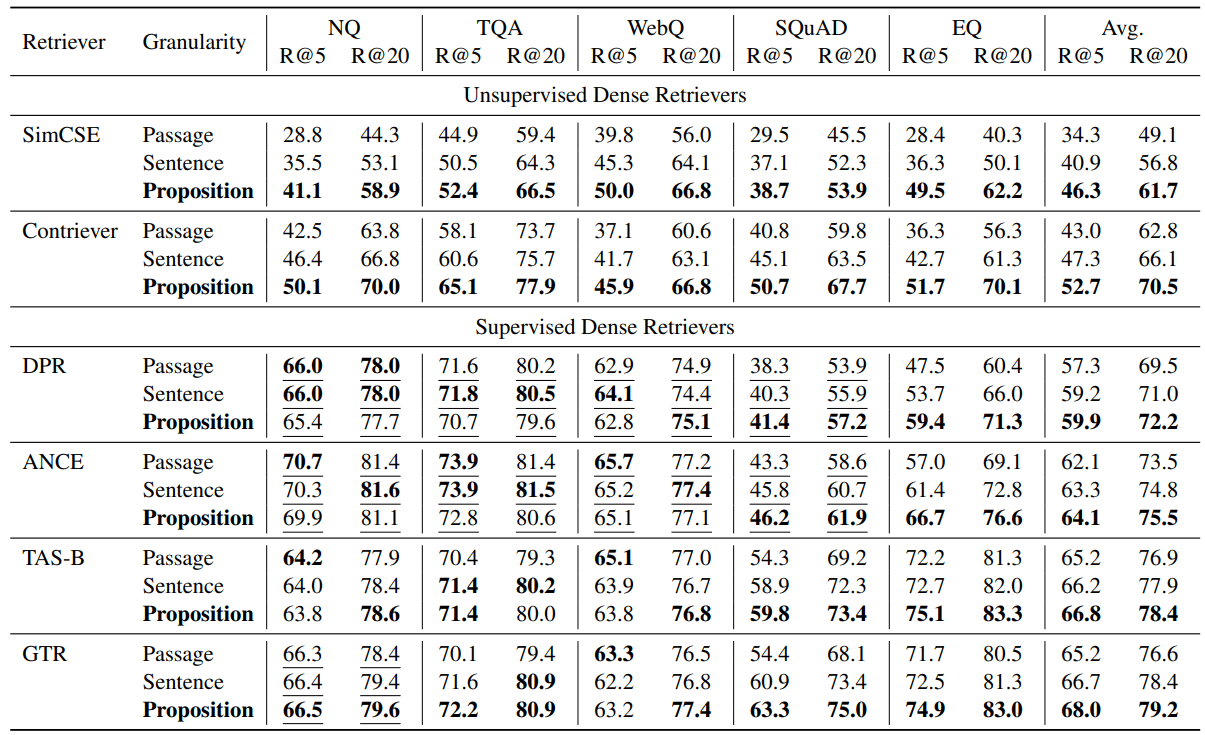

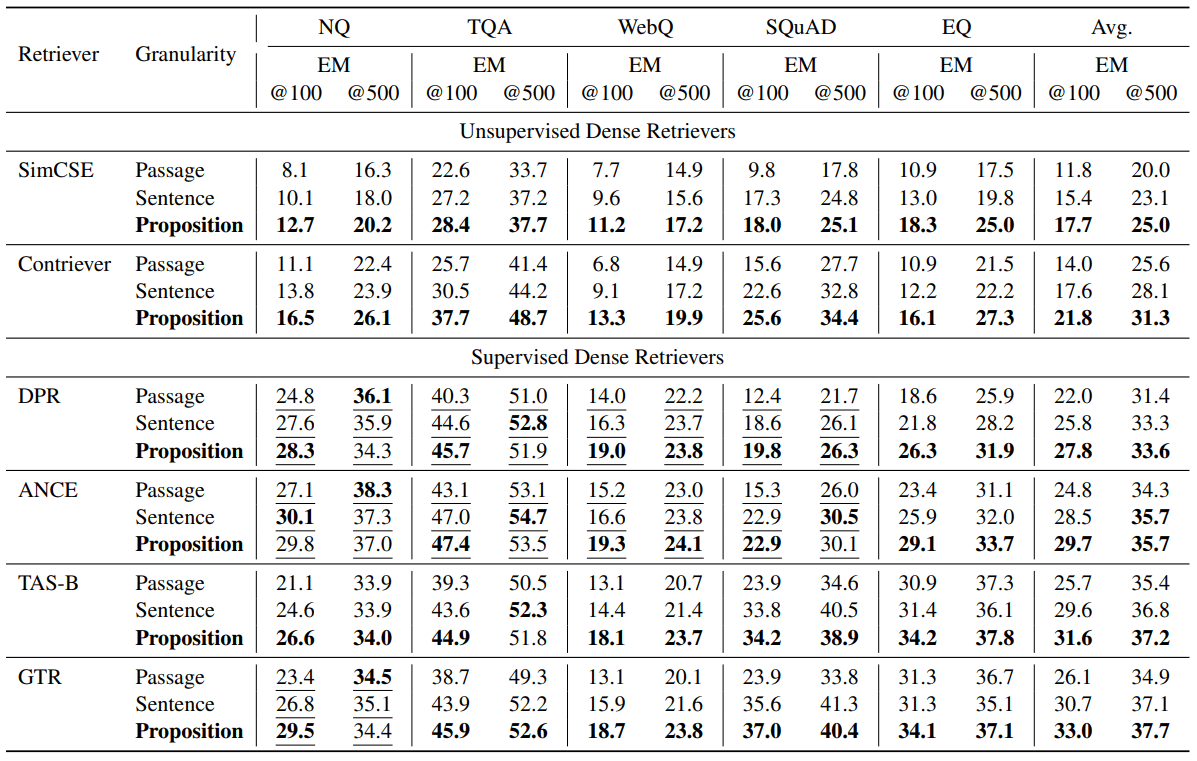

We report our evaluation results in Table 2. We observe that proposition-based retrieval outperforms sentence- or paragraph-based retrieval on most tasks for both unsupervised and supervised retrievers.

Across all the dense retrievers tested, proposition-based retrieval consistently outperformed sentence- and paragraph-based retrieval on average across the five datasets. For the unsupervised retrievers, i.e., SimCSE and Contriever, we see an average Recall@5 improvement of +12.0 and +9.3 over the five datasets (a relative improvement of 35.0% and 22.5%).

For supervised retrievers, proposition-level retrieval still shows an average advantage, but the improvement is smaller. We hypothesize that this is because these retrievers are trained on query-paragraph pairs. For example, for DPR and ANCE, which are trained on NQ, TQA, WebQ, and SQuAD, we observe that proposition-based and sentence-based retrieval are slightly worse than paragraph-based retrieval on three of these four datasets, the exception being SQuAD. As shown in Table 2, on NQ, TQA, and WebQ, the performance of all supervised retrievers is comparable across the three retrieval granularities.

However, on datasets that the retriever model has not seen during training, we observe a clear advantage of proposition-based retrieval. For example, on SQuAD or EntityQuestions, proposition-based retrieval significantly outperforms the other two granularities. On EntityQuestions, we see a relative Recall@5 improvement of 17-25% with relatively weak retrievers such as DPR and ANCE. In addition, Recall@5 for proposition-based retrieval on SQuAD improves the most with TAS-B and GTR, with a relative improvement of 10-16%.

Table 2: Paragraph retrieval performance (Recall@k, k = 5, 20) on five different open-domain QA datasets when pre-trained dense retrievers work with three different granularities of the retrieval corpus. Underlining indicates that the training split of the target dataset is included in the training data of the dense retriever.

5.2 Proposition-based retrieval ⇒ better cross-task generalization

Our results show that the benefits of proposition-based retrieval are most evident in the cross-task generalization setting. We observe that proposition-based retrieval brings more performance gains in cross-task generalization on SQuAD and EntityQuestions.

To better understand the sources of improvement, we performed additional analysis on EntityQuestions. Since EntityQuestions features questions that target long-tail entity attributes, we investigated how retrieval performance at the three granularities is affected by the popularity of the target entity in the question, i.e., how often the entity appears in Wikipedia. We estimate the popularity of each entity as follows. Given the surface form of an entity, we use BM25 to retrieve the top 1000 relevant paragraphs from Wikipedia. We use the number of times the entity appears in these top 1000 paragraphs as an estimate of its popularity. Out of the 20,000 test queries in EntityQuestions, about 25% of the target entities have a popularity value less than or equal to 3.
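The counting step of this popularity estimate can be sketched as below. The sketch assumes the BM25 retrieval has already been run and its ranked paragraphs are passed in as plain strings; the function name, the case-insensitive matching, and the choice to count every occurrence (rather than the number of paragraphs containing the entity) are assumptions, since the text does not pin these details down.

```python
import re
from typing import Sequence


def estimate_popularity(entity: str, bm25_paragraphs: Sequence[str], top_n: int = 1000) -> int:
    """Count occurrences of the entity's surface form in the top_n paragraphs
    returned by a BM25 search for that surface form."""
    pattern = re.compile(re.escape(entity), flags=re.IGNORECASE)
    return sum(len(pattern.findall(p)) for p in bm25_paragraphs[:top_n])
```

Under this estimate, an entity with a value of 3 or less is mentioned at most three times across its top-ranked paragraphs, which is the long-tail bucket referred to above.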

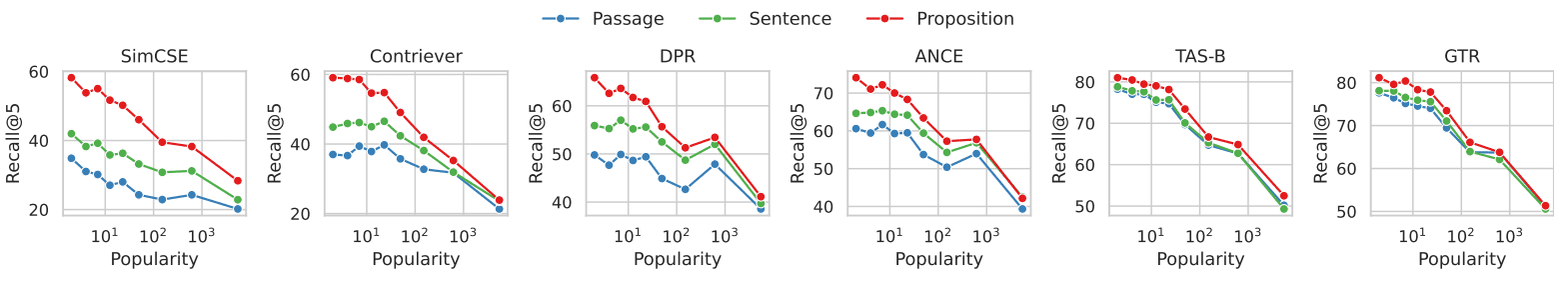

Figure 3 shows the relationship between paragraph retrieval performance and the popularity of the target entity in each question. Across all six dense retrievers, we observe that proposition-based retrieval shows a greater advantage over paragraph-based retrieval for questions targeting less common entities. The performance gap decreases as the popularity of entities increases. Our findings suggest that the performance improvement of proposition-based retrieval is mainly attributable to queries targeting long-tail information. This echoes our observation that proposition-based retrieval improves the cross-task generalization performance of dense retrievers.

Figure 3: Document retrieval recall vs. popularity of each question's target entity in the EntityQuestions dataset

In the EntityQuestions dataset, the popularity of each question's target entity (a smaller value indicates a less common entity, and vice versa) was estimated by the frequency of occurrence of the entity in the top 1,000 paragraphs retrieved by BM25.

For queries containing less common entities, we observe that retrieval by propositions shows a greater advantage over retrieval by paragraphs.

6 Results: Open-domain QA

In this section, we investigate how the choice of retrieval granularity affects downstream open-domain QA tasks. We show the strong impact of proposition-based retrieval on downstream QA performance in a retrieve-then-read setup, where the number of retrieved words passed to the reader QA model is limited to l = 100 or 500. We observe that average EM@l is higher across retrievers when propositions are used as retrieval units.

6.1 QA Performance

Table 3 shows the evaluation results. Across the different retrievers, we observe higher average EM@l when using propositions as retrieval units. The unsupervised retrievers SimCSE and Contriever improve their EM@100 scores by +5.9 and +7.8, respectively (relative improvements of 50% and 55%). The supervised retrievers DPR, ANCE, TAS-B, and GTR improve by +5.8, +4.9, +5.9, and +6.9 on EM@100, respectively (relative improvements of 26%, 19%, 22%, and 26%). Similar to our observations in the paragraph retrieval evaluation, we found proposition-based retrieval to be more beneficial for downstream QA performance when the retriever has not been trained on the target dataset. In other cases, proposition-based retrieval is still advantageous, but with smaller average margins.

Table 3: Open-domain QA performance (EM = exact match) in the retrieve-then-read setting, where the number of retrieved words passed to the reader QA model is limited to l = 100 or 500. We use UnifiedQA-v2 (Khashabi et al., 2022) as the reader model. The first l words of the concatenated top retrieved text units are passed as input to the reader model. Underlining indicates that the training split of the target dataset is included in the training data of the dense retriever. In most cases, we see that using smaller retrieval units leads to better QA performance.

6.2 Proposition ⇒ higher density of question-relevant information

Intuitively, the advantage of propositions over sentences or paragraphs as retrieval units is that the retrieved propositions have a higher density of information relevant to the query. With finer-grained retrieval units, the correct answer to a query is more likely to appear within the top-l words retrieved by a dense retriever.

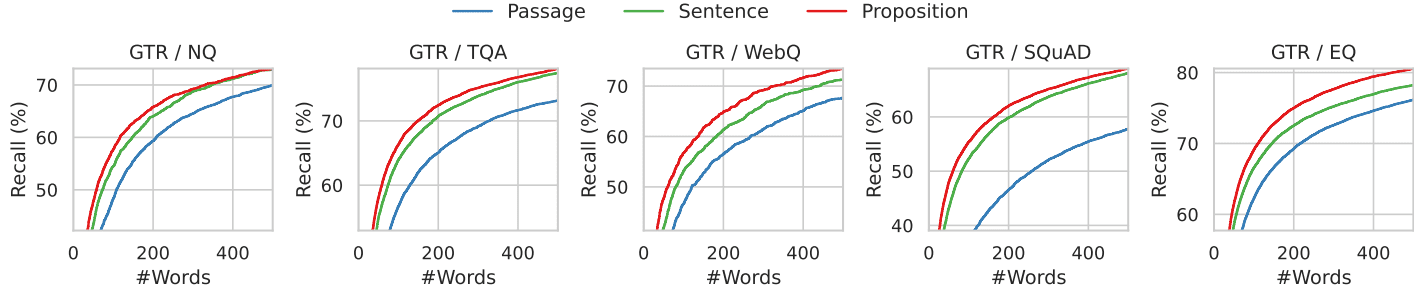

We illustrate this phenomenon with the analysis shown in Figure 4. Here, we examine the position of the correct answer within the first l retrieved words. Specifically, we calculate the recall of the gold answer in the first l words retrieved by the GTR retriever when using Wikipedia indexed at three different granularities.

We show the results in Figures 4 and 7, where l ranges from 0 to 500, covering all five datasets. For a fixed budget of retrieved words, propositional retrieval has a higher success rate than sentence and paragraph retrieval methods in the range of the first 100-200 words, which corresponds to approximately 10 propositions, 5 sentences or 2 paragraphs. As the word count increases further, the recall of the three granularities converges, since all the information relevant to the question is contained in the retrieved text.

Figure 4: Recall of the gold standard answer when the retrieved text is restricted to the first k words for the GTR retriever. More fine-grained retrievals have higher recall across all word count ranges.

6.3 Error Case Studies

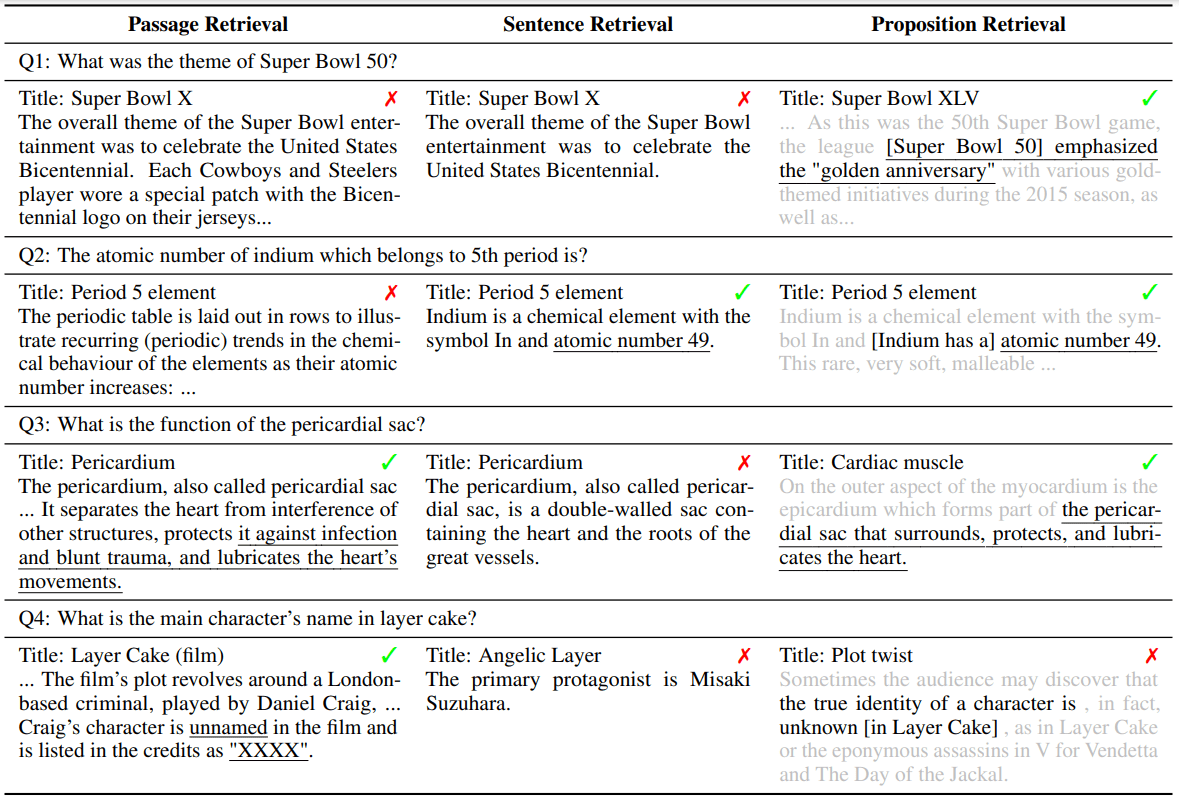

To understand the sources of errors at each retrieval granularity, we present and discuss four typical error examples in Table 4. For each example, we show the question and the corresponding top-1 text unit retrieved by the GTR retriever at each of the three granularities.

In paragraph-level retrieval, the ambiguity of entities or their references poses a challenge to dense retrievers, echoing the findings of Min et al. (2020). For example, in example Q1, the question asks about "Super Bowl 50", but the retrieved paragraph and sentence refer to "Super Bowl 5". In example Q2, paragraph retrieval fails to identify the part that refers to the correct "atomic number". Instead, the top-1 retrieved paragraph mentions "atomic number" in a different context that is unrelated to the question. Sentence-level retrieval can suffer from the same problems as paragraph-level retrieval, as in example Q1. Another challenge for sentence retrieval is the lack of context. In example Q3, sentence-based retrieval fails because the correct sentence in the retrieved paragraph uses "it" to refer to the pericardial sac.

Proposition-based retrieval addresses the above problems by ensuring that each retrieval unit contains exactly one fact, together with the necessary context. However, proposition-based retrieval struggles with questions that require multi-hop reasoning over long-range textual context. In example Q4, the retrieved paragraph describes the actor's name and the character they play separately, so no single proposition covers both the question and the answer.

Table 4: Example cases in which the top-1 text unit retrieved at each retrieval granularity fails to provide the correct answer. The underlined text is the correct answer. The gray text is the context of the proposition; it is shown for illustrative purposes only and is not provided to the retriever or the downstream QA model.

7 Related work

Recent work on dense retrievers typically uses a dual-encoder architecture. With dual encoders, each query and document is encoded separately into a low-dimensional feature vector, and the relevance between them is measured by a non-parametric similarity function between the embedding vectors. Due to the limited expressive power of the similarity function, dual-encoder models usually generalize poorly to new tasks, especially when training data is scarce. For this reason, previous studies have used techniques such as data augmentation, continual pre-training, task-aware training, hybrid sparse-dense retrieval, or mixed-strategy retrieval to improve the cross-task generalization performance of dense retrievers.

Our work is partly motivated by, and echoes, multi-vector retrieval, e.g., ColBERT, DensePhrase (Lee et al., 2021a,b), ME-BERT, and MVR, where the retrieval model learns to encode a candidate retrieval unit into multiple vectors in order to increase model expressivity and improve retrieval granularity. Our work focuses on settings that do not update the dense retriever model or its parameters. We show that partitioning the retrieval corpus into finer-grained propositions can be a simple and orthogonal strategy to improve the generalization ability of dual-encoder dense retrievers at inference time.

The idea of using propositions as units of text representation can be traced back to the Pyramid method for summarization evaluation (Nenkova and Passonneau, 2004), where model-generated summaries are evaluated proposition by proposition. Extracting propositions from text has been a long-standing task in NLP, with early formulations focusing on structured representations of propositions, e.g., open information extraction and semantic role labeling. More recent studies have successfully extracted propositions in natural language form by few-shot prompting of large language models or by fine-tuning smaller models.

Retrieve-then-read, or more broadly retrieval-augmented generation, has recently become a popular paradigm for open-domain QA. While early work provided downstream readers with up to 100 retrieved passages, the amount of allowed context is significantly reduced (e.g., to the top 10) when using recent large language models because of their limited context window length and difficulty in handling long contexts. Recent efforts have attempted to improve the quality of reader contexts by filtering or compressing retrieved documents. Our work addresses this problem by using a new retrieval unit, the proposition, which not only reduces the context length but also provides higher information density.

8 Conclusion

We propose to use propositions as retrieval units to index corpora in order to improve the performance of dense retrieval at inference time. Through our experiments on five open-domain QA datasets using six different dense retrievers, we find that proposition-based retrieval outperforms paragraph- or sentence-based retrieval in terms of both paragraph retrieval accuracy and downstream QA performance under a fixed budget of retrieved words. We introduce FACTOIDWIKI, an indexed version of the English Wikipedia dump in which text from 6 million pages is partitioned into 250 million propositions. We hope that FACTOIDWIKI and our findings in the paper will facilitate future research on information retrieval.

Limitations

Our current study on retrieval corpus granularity has the following limitations. (1) Retrieval corpus - Our study focuses only on Wikipedia as a retrieval corpus, since most open-domain QA datasets use Wikipedia as their retrieval corpus. (2) Types of dense retrievers evaluated - In the current version of this paper, we evaluate only six popular dense retrievers, most of which follow a bi-encoder or dual-encoder architecture. In future versions, we will include and discuss results for a wider range of dense retrievers. (3) Language - Our current study is limited to the English Wikipedia. We leave the exploration of other languages for future work.

A Retrieval corpus processing

The English Wikipedia dump used in this study was released by Bohnet et al. (2022). We chose it because it has been filtered to remove figures, tables, and lists, and is organized into paragraphs. The dump dates back to October 13, 2021. We segmented Wikipedia into three retrieval units for this study: 100-word paragraph blocks, sentences, and propositions. We used a greedy method to partition paragraphs into 100-word paragraph blocks. We segment only at sentence boundaries to ensure that each paragraph block contains complete sentences. As we process a paragraph, we add sentences one by one. If including the next sentence would cause the paragraph block to exceed 100 words, we start a new paragraph block from that sentence. However, if the final paragraph block is shorter than 50 words, we merge it with the previous one to avoid overly small fragments. We further use the widely used Python spaCy en_core_web_lg model to split each paragraph into sentences. In addition, our Propositionizer model decomposes each paragraph into propositions. In total, we decomposed 6 million pages into 41.39 million paragraphs, 114.2 million sentences, and 257 million propositions. On average, a paragraph contains 6.3 propositions and a sentence contains 2.3 propositions.
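The greedy blocking procedure described in this appendix can be sketched as follows. The sketch assumes the paragraph has already been split into sentences (for instance with spaCy) and counts words by whitespace splitting; the function name and the handling of a single sentence longer than 100 words (it becomes its own block) are assumptions, not details given in the text.

```python
from typing import List, Sequence


def chunk_sentences(
    sentences: Sequence[str], max_words: int = 100, min_last: int = 50
) -> List[str]:
    """Greedily pack whole sentences into blocks of at most max_words words,
    then merge a final block shorter than min_last words into its predecessor."""
    blocks: List[List[str]] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        # Start a new block if adding this sentence would exceed the limit.
        if current and count + n > max_words:
            blocks.append(current)
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        blocks.append(current)
    # Avoid a tiny trailing fragment by merging it into the previous block.
    if len(blocks) > 1 and sum(len(s.split()) for s in blocks[-1]) < min_last:
        tail = blocks.pop()
        blocks[-1].extend(tail)
    return [" ".join(block) for block in blocks]
```

Note that the merge step means a final block can exceed 100 words, so "100-word" is a target size rather than a hard upper bound.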

B Training the Propositionizer

We used GPT-4 to generate a list of propositions from a given passage by prompting, as shown in Figure 8. After filtering, a total of 42,857 pairs were used to fine-tune a Flan-T5-Large model, which we named the Propositionizer. We use the AdamW optimizer with a batch size of 64, a learning rate of 1e-4, and a weight decay of 1e-4, and train for 3 epochs. To compare the quality of propositions generated by different models, we set up a development set and an evaluation metric. The development set contains an additional 1,000 pairs collected with GPT-4 using the same methodology as the training set. We assess the quality of the predicted propositions by an F1 score over the two sets of propositions, inspired by the F1 score over two sets of tokens in BertScore. Let P = {p1, ... , pn} denote the set of labeled propositions and ˆP = {ˆp1, ... , ˆpm} the set of predicted propositions. We use sim(pi, ˆpj) to denote the similarity between two propositions. In principle, any text similarity metric can be used. We choose BertScore as the sim function because we want our metric to reflect the semantic differences between propositions. We define:

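$$\text{Recall} = \frac{1}{|P|} \sum_{p_i \in P} \max_{\hat{p}_j \in \hat{P}} \text{sim}(p_i, \hat{p}_j)$$

$$\text{Precision} = \frac{1}{|\hat{P}|} \sum_{\hat{p}_j \in \hat{P}} \max_{p_i \in P} \text{sim}(p_i, \hat{p}_j)$$

$$\text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$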

Intuitively, recall represents the share of propositions in the labeled set that are similar to some proposition in the generated set, precision represents the share of propositions in the generated set that are similar to some proposition in the labeled set, and F1 is the harmonic mean of recall and precision. F1 is 1 if the two sets are identical and 0 if no proposition in one set is semantically similar to any proposition in the other.
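The set-level precision, recall, and F1 defined above can be computed as in the sketch below. To keep the example dependency-free, a toy token-overlap (Jaccard) similarity stands in for BertScore; that substitution, like the function names, is an assumption of this example and not what the paper uses.

```python
from typing import Callable, Sequence


def jaccard(a: str, b: str) -> float:
    """Toy token-overlap similarity in [0, 1]; a stand-in for BertScore."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 1.0


def proposition_f1(
    labeled: Sequence[str],
    predicted: Sequence[str],
    sim: Callable[[str, str], float] = jaccard,
) -> float:
    """F1 between two sets of propositions using best-match similarity."""
    if not labeled or not predicted:
        return 0.0
    # Recall: each labeled proposition is matched to its most similar prediction.
    recall = sum(max(sim(p, q) for q in predicted) for p in labeled) / len(labeled)
    # Precision: each predicted proposition is matched to its most similar label.
    precision = sum(max(sim(p, q) for p in labeled) for q in predicted) / len(predicted)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Any similarity function returning values in [0, 1] can be passed as `sim`, which matches the remark that the metric is agnostic to the choice of text similarity.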

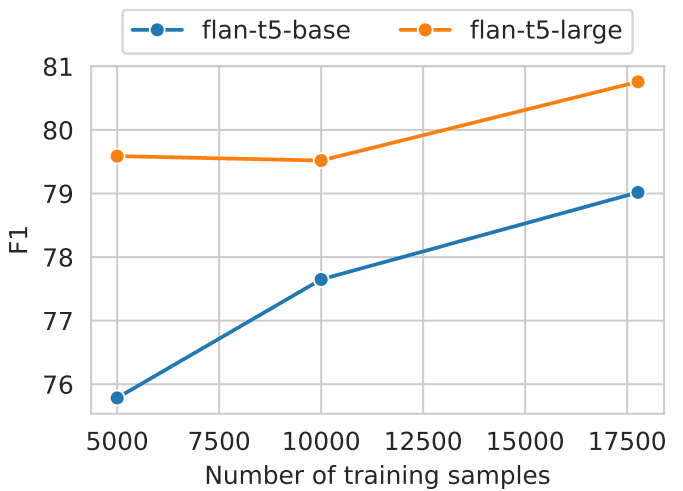

We conducted a comparative analysis of basic and large size Flan-T5 models that were trained using different amounts of data (as shown in Fig. 5). The results of the study show that larger models with a large amount of training data produce better results. The propositional analyzer achieved an F1 value of 0.822. after manually reviewing the generated propositions, we found them to be satisfactory.

Fig. 5: Proposition-level decomposition performance for models of different sizes and amounts of training data.

C Offline Index

We encoded the retrieval units as embeddings using the pyserini and faiss packages. Using multiple GPUs, we encoded the text units in groups of 1 million with a batch size of 64. After preprocessing the embeddings, we performed all experiments using exact search with inner product (faiss.IndexFlatIP). The plain index of FACTOIDWIKI is approximately 768 GB in size. To reduce memory pressure, the embeddings are split into 8 shards. An approximate nearest neighbor search is run on each shard before all results are aggregated. Although the number of propositions is six times the number of passages, efficient indexing techniques can achieve search times that are sub-linear in the total number of vectors. Furthermore, GPU parallelism and distributed indexing significantly reduce the online search time. Thus, with a proper implementation, proposition-based search can be a practical and efficient option.
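The shard-then-aggregate pattern described above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' implementation: it uses exact inner-product scoring inside each shard instead of faiss or an approximate search, and the names `search_shard` and `sharded_search` are hypothetical.

```python
import heapq
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def inner_product(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def search_shard(
    query: Vector, shard: Sequence[Vector], offset: int, k: int
) -> List[Tuple[float, int]]:
    """Top-k (score, global_id) pairs within one shard, by inner product."""
    scored = ((inner_product(query, v), offset + i) for i, v in enumerate(shard))
    return heapq.nlargest(k, scored)


def sharded_search(
    query: Vector, shards: Sequence[Sequence[Vector]], k: int
) -> List[Tuple[float, int]]:
    """Search every shard separately, then merge the per-shard candidates."""
    candidates: List[Tuple[float, int]] = []
    offset = 0
    for shard in shards:
        candidates.extend(search_shard(query, shard, offset, k))
        offset += len(shard)  # keep ids global across shards
    return heapq.nlargest(k, candidates)
```

With exact per-shard search, the merged result matches a search over the unsplit collection, because every global top-k item is also in the top-k of its own shard. With an approximate per-shard search, as in the text, the merge step is the same but the result is only approximate.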

D Retriever model

We used the transformers and sentence-transformers packages for model implementation. We used the following checkpoints published on HuggingFace: SimCSE (princeton-nlp/unsup-simcse-bert-base-uncased), Contriever (facebook/contriever), DPR (facebook/dpr-ctx_encoder-multiset-base, facebook/dpr-question_encoder-multiset-base), ANCE (castorini/ance-dpr-context-multi, castorini/ance-dpr-question-multi), TAS-B (sentence-transformers/msmarco-distilbert-base-tas-b) and GTR (sentence-transformers/gtr-t5-base).

E Additional results

In Section 5.2, we show the advantages of proposition-based retrieval over sentence-based retrieval, especially on EQ as entity popularity decreases. We use the number of occurrences in the top 1,000 passages retrieved by BM25 as a proxy for popularity, instead of the number of hyperlinks to entities used in Sciavolino et al. (2021). As a result, the trend of the performance vs. popularity graph (Figure 6) shows some differences between our results and those of Sciavolino et al. (2021). For example, some entities are ambiguous (e.g., 1992, a TV series). In this case, the number of occurrences of the surface form of the entity is large. At the same time, questions about ambiguous entities are difficult to answer, resulting in low recall.

In Section 6.2, we discuss the recall of answers in retrieved text as a function of context length. We further show the performance trend of the six dense retrievers in Fig. 7. The results show that the recall of propositions is consistently better than that of sentences and passages. We conclude that propositions have a greater density of question-relevant information than sentences and passages.

Figure 8: Prompt for generating propositions from passages using GPT-4.

Decompose the "Content" into clear and simple propositions, ensuring they are interpretable out of context.

1. Split compound sentence into simple sentences. Maintain the original phrasing from the input whenever possible.

2. For any named entity that is accompanied by additional descriptive information, separate this information into its own distinct proposition.

3. Decontextualize the proposition by adding necessary modifier to nouns or entire sentences and replacing pronouns (e.g., "it", "he", "she", "they", "this", "that") with the full name of the entities they refer to.

4. Present the results as a list of strings, formatted in JSON.

Input. Title: Eostre. Section: Theories and interpretations, Connection to Easter Hares. Content: The earliest evidence for the Easter Hare (Osterhase) was recorded in south-west Germany in 1678 by the professor of medicine Georg Franck von Franckenau, but it remained unknown in other parts of Germany until the 18th century. Scholar Richard Sermon writes that "hares were frequently seen in gardens in spring, and thus may have served as a convenient explanation for the origin of the colored eggs hidden there for children. Alternatively, there is a European tradition that hares laid eggs, since a hare's scratch or form and a lapwing's nest look very similar, and both occur on grassland and are first seen in the spring. In the nineteenth century the influence of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular throughout Europe. German immigrants then exported the custom to Britain and America where it evolved into the Easter Bunny."

Output. ["The earliest evidence for the Easter Hare was recorded in south-west Germany in 1678 by Georg Franck von Franckenau.", "Georg Franck von Franckenau was a professor of medicine.", "The evidence for the Easter Hare remained unknown in other parts of Germany until the 18th century.", "Richard Sermon was a scholar.", "Richard Sermon writes a hypothesis about the possible explanation for the connection between hares and the tradition during Easter", "Hares were frequently seen in gardens in spring.", "Hares may have served as a convenient explanation for the origin of the colored eggs hidden in gardens for children.", "There is a European tradition that hares laid eggs.", "A hare's scratch or form and a lapwing's nest look very similar.", "Both hares and lapwing's nests occur on grassland and are first seen in the spring.", "In the nineteenth century the influence of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular throughout Europe.", "German immigrants exported the custom of the Easter Hare/Rabbit to Britain and America.", "The custom of the Easter Hare/Rabbit evolved into the Easter Bunny in Britain and America."]

Input. <a new passage>

Output.

Break down the "Content" into clear and simple propositions, making sure they still make sense when taken out of their original context.

1. Break up compound sentences into simple ones and, where possible, retain the original wording.

2. For any named entity that comes with additional descriptive information, separate this information into its own proposition.

3. Decontextualize each proposition by adding necessary modifiers to nouns or entire sentences, and by replacing pronouns (e.g., "it", "he", "she", "they", "this", "that") with the full name of the entity to which they refer.

4. Present the results as a list of strings in JSON format.

Input: Title: Eostre. Section: Theories and interpretations, Connection to Easter Hares. Content: The earliest evidence of the Easter Hare (Osterhase) was recorded by the professor of medicine Georg Franck von Franckenau in 1678 in southwestern Germany, but it was unknown in the rest of Germany until the 18th century. Scholar Richard Sermon writes that "Hares were often seen in gardens in the spring, so they may have been used as a convenient explanation for the origin of the colored eggs that children found in their gardens. In addition, there is a tradition in Europe that hares lay eggs, because a hare's scrape or form closely resembles a lapwing's nest, and both are found in meadows and are first seen in the spring. In the 19th century, the influence of Easter cards, toys, and books led to the popularization of the Easter Hare/Rabbit throughout Europe. The custom was then spread by German immigrants to England and the United States, where it evolved into the Easter Bunny."

Output: ["The earliest evidence relating to the Easter Hare was recorded by Georg Franck von Franckenau in southwestern Germany in 1678.", "Georg Franck von Franckenau was a professor of medicine.", "The evidence for the Easter Hare remained unknown in other parts of Germany until the 18th century.", "Richard Sermon was a scholar.", "Richard Sermon offers a hypothesis on possible explanations for the hare's traditional association with Easter.", "Hares can often be found in gardens in the springtime.", "Hares may be a simple explanation for why children find colored eggs in their gardens.", "There is a traditional view in Europe that hares lay eggs.", "A hare's scrape or form is extremely similar to a lapwing's nest.", "Both hares and lapwing's nests are found in meadows and are first seen in the spring.", "In the 19th century, the Easter Hare/Rabbit became well known in Europe due to the popularity of Easter cards, toys, and books.", "German immigrants brought the custom of the Easter Hare/Rabbit to England and the United States.", "In England and the United States, the custom of the Easter Hare/Rabbit gradually evolved into the Easter Bunny."]

Input: <a new paragraph>

Output:
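To use the prompt above programmatically, a new passage has to be rendered in the same "Title / Section / Content" layout and the reply parsed as a JSON list. The sketch below shows one way this could be done; the helper names `format_input` and `parse_propositions` are hypothetical, the step that actually calls a model is deliberately left out, and dropping duplicate propositions is an added assumption rather than part of the original prompt.

```python
import json
from typing import List


def format_input(title: str, section: str, content: str) -> str:
    """Render a new passage in the layout used by the few-shot example."""
    return f"Input. Title: {title}. Section: {section}. Content: {content}\n\nOutput."


def parse_propositions(raw: str) -> List[str]:
    """Extract the JSON list of strings from a model reply.

    Tolerates text around the list (e.g. a leading "Output.") and drops
    empty or repeated propositions while preserving order.
    """
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON list found in model output")
    items = json.loads(raw[start:end + 1])
    if not isinstance(items, list) or not all(isinstance(s, str) for s in items):
        raise ValueError("expected a JSON list of strings")
    seen = set()
    propositions: List[str] = []
    for item in items:
        text = item.strip()
        if text and text not in seen:
            seen.add(text)
            propositions.append(text)
    return propositions
```

The string returned by `format_input` would be appended after the instructions and the worked example, and the model's reply passed to `parse_propositions`.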

