PromptWizard: an open-source framework for optimizing prompts to improve task performance

General Introduction

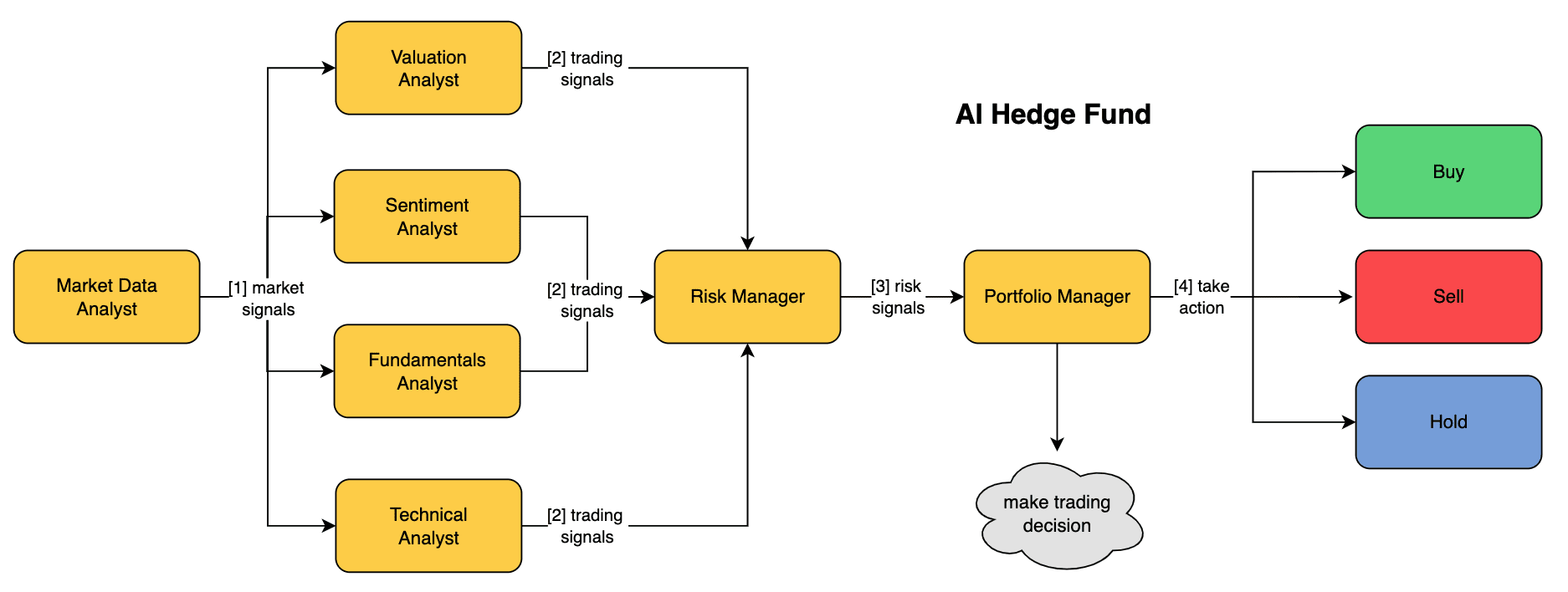

PromptWizard is an open-source framework developed by Microsoft. It uses a self-evolving mechanism in which the model generates, critiques, and refines its own prompts and examples, improving output quality through continuous feedback. It optimizes prompts autonomously, generates and selects suitable examples, and adds reasoning and validation, producing high-quality prompts and reducing the manual workload of prompt engineering. Key features include incorporating task intent and an expert persona, using chain-of-thought reasoning, and combining positive, negative, and synthetic examples to improve performance. It adapts to tasks in different domains, performs consistently, and works with LLMs of various sizes.
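The ingredients named above (an expert persona, task intent, and chain-of-thought examples) can be pictured as parts of a single prompt. The following is a minimal sketch that assumes a simple plain-text layout. `Example` and `build_prompt` are hypothetical names, not part of PromptWizard's API. The real framework generates these pieces with the LLM rather than taking them as fixed inputs.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """Hypothetical in-context example carrying an explicit reasoning chain."""
    question: str
    reasoning: str
    answer: str


def build_prompt(expert_role, instruction, intent_keywords, examples, question):
    """Assemble a chain-of-thought prompt (hypothetical layout, not PromptWizard's format)."""
    lines = [f"You are {expert_role}.", instruction]
    if intent_keywords:
        # Task intent expressed as a short list of keywords
        lines.append("Task intent: " + ", ".join(intent_keywords))
    for ex in examples:
        # Each example shows the reasoning steps before the final answer
        lines += [f"Question: {ex.question}",
                  f"Reasoning: {ex.reasoning}",
                  f"Answer: {ex.answer}"]
    # End with an open "Reasoning:" slot so the model reasons step by step
    lines += [f"Question: {question}", "Reasoning:"]
    return "\n".join(lines)


prompt = build_prompt(
    expert_role="a meticulous math tutor",
    instruction="Solve the problem step by step and give the final number.",
    intent_keywords=["arithmetic", "word problem"],
    examples=[Example("What is 3 + 4 * 2?",
                      "Multiply first: 4 * 2 = 8, then 3 + 8 = 11.", "11")],
    question="What is (5 - 2) * 6?",
)
print(prompt)
```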

Features

- Feedback-driven optimization: continuously improves task performance by generating, critiquing, and refining prompts and examples.

- Diverse example generation: produces robust, task-aware synthetic examples and optimizes prompts and examples together.

- Adaptive optimization: refines both the instructions and the in-context learning examples through a self-evolving mechanism.

- Multi-task support: applies to many tasks and large language models, improving model accuracy and efficiency.

- Cost-effective: significantly reduces computational cost, making prompt engineering more efficient.
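The generate, critique, and refine cycle in this list can be illustrated with a toy that needs no API access. This is a minimal sketch built on stated assumptions:

- A stub function stands in for a real LLM.
- The critique is a hard-coded rule, not a model-generated one.
- The failing items play the role of negative examples.
- `stub_model`, `score`, `critique`, and `optimize` are hypothetical names, not PromptWizard functions.

```python
from __future__ import annotations

# Toy dataset: the stub model answers an item correctly only if the prompt
# mentions the item's "skill" (a stand-in for a real LLM call).
DATASET = [
    {"question": "12 apples minus 5", "skill": "subtract", "answer": 7},
    {"question": "3 boxes of 4 pens", "skill": "multiply", "answer": 12},
    {"question": "8 plus 9 marbles", "skill": "add", "answer": 17},
]


def stub_model(prompt: str, item: dict) -> int | None:
    """Hypothetical LLM stand-in: correct only when the needed skill is in the prompt."""
    return item["answer"] if item["skill"] in prompt else None


def score(prompt: str) -> tuple[float, list[dict]]:
    """Accuracy on the dataset plus the failing items (the negative examples)."""
    failures = [it for it in DATASET if stub_model(prompt, it) != it["answer"]]
    return 1 - len(failures) / len(DATASET), failures


def critique(failures: list[dict]) -> str:
    """Hypothetical critique: turn the first failure into a corrective hint."""
    return f"Remember to {failures[0]['skill']} when the problem requires it."


def optimize(seed_prompt: str, rounds: int = 5) -> tuple[str, float]:
    """Score -> critique -> refine, keeping the best-scoring prompt seen so far."""
    current = seed_prompt
    acc, failures = score(current)
    best, best_acc = current, acc
    for _ in range(rounds):
        if not failures:
            break  # nothing left to fix
        current = current + " " + critique(failures)  # refine using feedback
        acc, failures = score(current)
        if acc > best_acc:
            best, best_acc = current, acc
    return best, best_acc


best, acc = optimize("Solve the math word problem.")
print(acc)  # 1.0
print(best)
```

Keeping the best prompt rather than the last one matters in practice: with a real model, a refinement can lower accuracy.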

Usage guide

Installation

- Clone the repository:

```bash
git clone https://github.com/microsoft/PromptWizard
cd PromptWizard
```

- Create and activate a virtual environment:

- Windows:

```bat
python -m venv venv
venv\Scripts\activate
```

- macOS/Linux:

```bash
python -m venv venv
source venv/bin/activate
```

- Install the package in editable mode from the repository root:

```bash
pip install -e .
```
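After installation, a quick check can confirm two things: the virtual environment is active, and the package can be found. This is a minimal sketch that relies on `python -m venv` setting `sys.prefix` to the venv directory while `sys.base_prefix` still points at the base interpreter. It assumes the package is importable as `promptwizard`, as the article's example implies. The helper names are hypothetical.

```python
import importlib.util
import sys


def in_virtualenv() -> bool:
    """Inside a venv, sys.prefix differs from sys.base_prefix."""
    return sys.prefix != sys.base_prefix


def is_installed(module_name: str) -> bool:
    """True if a top-level module can be found on the current import path."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("promptwizard importable:", is_installed("promptwizard"))
```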

Usage guidelines

PromptWizard supports three main usage scenarios:

- Optimizing prompts without examples: suited to cases where you need to optimize a prompt but have no training data.

- Generating synthetic examples and optimizing prompts: for tasks that benefit from examples, synthetic examples are generated and used to optimize the prompt.

- Optimizing prompts with training data: for tasks with plenty of data, prompt optimization draws on the existing training examples.
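The three scenarios can be read as the answers to two questions. Do you have labeled data, and does the task benefit from in-context examples? Below is a minimal sketch of that decision rule. `Scenario` and `choose_scenario` are hypothetical and not part of PromptWizard's API.

```python
from enum import Enum


class Scenario(Enum):
    """Hypothetical labels for the three usage scenarios."""
    NO_EXAMPLES = "optimize the prompt without examples"
    SYNTHETIC_EXAMPLES = "generate synthetic examples, then optimize"
    TRAINING_DATA = "optimize using existing training data"


def choose_scenario(num_training_examples: int, wants_examples: bool) -> Scenario:
    """Pick a scenario: real data first, then synthetic examples, else none."""
    if num_training_examples > 0:
        return Scenario.TRAINING_DATA
    if wants_examples:
        return Scenario.SYNTHETIC_EXAMPLES
    return Scenario.NO_EXAMPLES


print(choose_scenario(0, True).value)  # generate synthetic examples, then optimize
```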

Configuration and environment variables

- Configuration file: use the promptopt_config.yaml file to set the configuration. For the GSM8k task, for example, the task settings go in this file.

- Environment variables: use a .env file to set environment variables. For the GSM8k task, for example, you can set the following variables:

```plaintext
AZURE_OPENAI_ENDPOINT="XXXXX" # Replace with your Azure OpenAI endpoint
OPENAI_API_VERSION="XXXX" # Replace with the API version
AZURE_OPENAI_CHAT_DEPLOYMENT_NAME="XXXXX" # Create a model deployment and enter its name here
```
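Before a run, check that these three variables are present and no longer hold the XXXX placeholders. Below is a minimal sketch, assuming the simple KEY="value" # comment layout shown above. `parse_env` and `missing_vars` are hypothetical helpers that handle only a small subset of .env syntax. In practice a library such as python-dotenv would do the parsing.

```python
from __future__ import annotations

REQUIRED_VARS = (
    "AZURE_OPENAI_ENDPOINT",
    "OPENAI_API_VERSION",
    "AZURE_OPENAI_CHAT_DEPLOYMENT_NAME",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY="value" # comment lines (a tiny subset of .env syntax)."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, full-line comments, and junk
        key, _, rest = line.partition("=")
        rest = rest.strip()
        if rest.startswith('"'):
            # Quoted value: keep text up to the closing quote, drop any trailing comment
            end = rest.find('"', 1)
            value = rest[1:end] if end != -1 else rest[1:]
        else:
            value = rest.split("#", 1)[0].strip()
        values[key.strip()] = value
    return values


def missing_vars(values: dict[str, str]) -> list[str]:
    """Required names that are absent, empty, or still an all-X placeholder."""
    return [name for name in REQUIRED_VARS
            if not values.get(name) or set(values[name]) == {"X"}]


sample = '''
AZURE_OPENAI_ENDPOINT="https://example.openai.azure.com/"  # your endpoint
OPENAI_API_VERSION="XXXX"
AZURE_OPENAI_CHAT_DEPLOYMENT_NAME="XXXXX"
'''
print(missing_vars(parse_env(sample)))
# ['OPENAI_API_VERSION', 'AZURE_OPENAI_CHAT_DEPLOYMENT_NAME']
```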


Workflow

- Choose a usage scenario: select the appropriate scenario (optimization without examples, with synthetic examples, or with training data) based on the task requirements.

- Configure the environment: set the configuration file and the environment variables used for API calls.

- Run the optimization: run PromptWizard to optimize the prompt for the selected scenario.

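Example

The following simplified example shows how to optimize a prompt with PromptWizard:

```python
# Simplified illustration: the class and method names follow this article;
# check the repository README for the exact entry points in your version.
from promptwizard import PromptWizard

# Initialize PromptWizard with the prompt-optimization configuration file
pw = PromptWizard(config_file="promptopt_config.yaml")

# Choose a scenario and run the optimization (scenario 1: no examples)
pw.optimize_scenario_1()
```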

With these steps, you can install PromptWizard and use it to optimize prompts, improving task performance with large language models.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
