Promptfoo: A Safe and Reliable LLM Application Testing Tool

General Introduction

promptfoo is an open source command-line tool and library for evaluating and red-teaming large language model (LLM) applications. It gives developers a complete set of tools for building reliable prompts, models, and retrieval-augmented generation (RAG) pipelines, and for securing applications through automated red teaming and penetration testing. promptfoo supports a wide range of LLM API providers, including OpenAI, Anthropic, Azure, Google, and HuggingFace, and can also integrate custom APIs. The tool is designed to help developers iterate quickly and improve the performance of their language models through a test-driven development approach.

Feature List

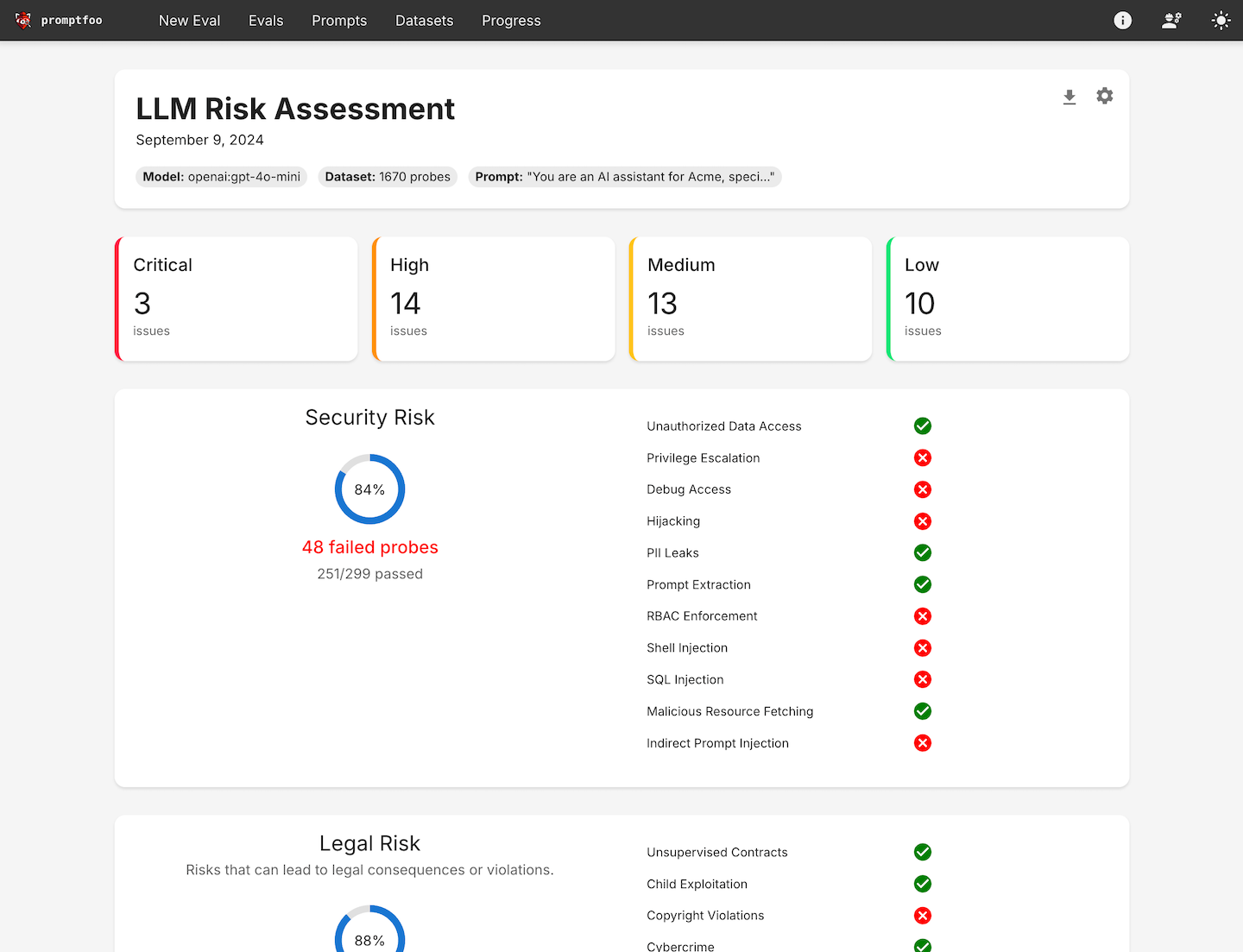

- Automated Red Team Testing: Run customized scans to detect security, legal and brand risks.

- Quality assessment: Build reliable prompts and models through use-case-specific benchmarking.

- Concurrency and caching: Speed up evaluations, with support for live reloading.

- Command-line interface: Get started quickly with no SDKs, cloud dependencies, or logins required.

- Open source community support: Backed by an active open source community and used by applications serving millions of users in production environments.

- High-level vulnerability and risk reporting: Generate detailed vulnerability and risk reports to help developers identify and fix problems.

- Multi-language support: Works with Python, JavaScript, and other programming languages.

- Private operation: All evaluations run on your local machine, so your data stays private.

Usage Guide

Installation process

- Install the command-line tool:

npm install -g promptfoo

- Initialize a project:

npx promptfoo@latest init

- Configure test cases: Open the promptfooconfig.yaml file and add the prompts and variables you want to test. Example:

    targets:
      - id: 'example'
        config:
          method: 'POST'
          headers:
            'Content-Type': 'application/json'
          body:
            userInput: '{{prompt}}'

Guidelines for use

- Define test cases: Identify the core use cases and failure modes, and prepare a set of prompts and test cases that represent these scenarios.

- Configure the evaluation: Set up the evaluation by specifying prompts, test cases, and API providers.

- Run the evaluation: Run the evaluation using the command-line tool or the library, and record the model output for each prompt.

promptfoo eval

- Analyze the results: Set up automated requirements, or review the results in a structured format or the web UI. Use these results to select the model and prompts that best fit your use case.

- Feedback loop: Keep expanding your test cases as you gather more examples and user feedback.
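
The workflow above can be sketched as a small configuration file. This is a minimal illustration rather than a recommended setup: the prompt text, the `openai:gpt-4o-mini` provider ID, the variable values, and the choice of assertions are all assumptions made for the example, and the OpenAI provider is assumed to read its key from the `OPENAI_API_KEY` environment variable.

```yaml
# promptfooconfig.yaml (illustrative sketch; all values below are assumptions)
description: 'Translation quality check'

# Prompt templates; {{language}} and {{input}} are filled in from each test's vars
prompts:
  - 'Translate the following text to {{language}}: {{input}}'

# API providers to evaluate; add more entries to compare models side by side
providers:
  - openai:gpt-4o-mini

# Test cases: variable values plus automated requirements on the output
tests:
  - vars:
      language: French
      input: Hello world
    assert:
      # Case-insensitive substring check
      - type: icontains
        value: bonjour
  - vars:
      language: Spanish
      input: Good morning
    assert:
      # Model-graded check against a plain-language rubric
      - type: llm-rubric
        value: The output is a natural Spanish translation of the input
```

Each entry under `tests` supplies the variables for one run of the prompt, and the `assert` entries turn the expected behavior into automated pass/fail checks. New failure cases found through user feedback can be appended to `tests` over time.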

Detailed Operation Procedure

- Red team testing:

  - Run customized scans to detect common security vulnerabilities such as PII leaks, insecure tool usage, cross-session data leaks, direct and indirect prompt injections, and more.

  - Use the following command to start the red team test:

npx promptfoo@latest redteam init

- Quality assessment:

  - Build reliable prompts and models through use-case-specific benchmarking.

  - Use the following command to run the quality assessment:

promptfoo eval --config promptfooconfig.yaml
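
As a rough sketch of what a configuration for the red team scans described above might look like, the fragment below places a `redteam` section next to a target. The endpoint URL, the `purpose` text, the `numTests` value, and the specific plugin and strategy names (`pii`, `cross-session-leak`, `prompt-injection`) are assumptions chosen to mirror the vulnerability types listed earlier; check them against the plugin and strategy lists for the promptfoo version you have installed.

```yaml
# Illustrative red team sketch; the endpoint and all values are hypothetical
targets:
  - id: https
    config:
      url: 'https://example.com/generate'   # hypothetical application endpoint
      method: 'POST'
      headers:
        'Content-Type': 'application/json'
      body:
        userInput: '{{prompt}}'             # generated attack text is injected here

redteam:
  # A short description of the application helps tailor the generated attacks
  purpose: 'Customer support assistant for an online store'
  # Number of test cases to generate per plugin (assumed value)
  numTests: 5
  # Vulnerability categories to probe
  plugins:
    - pii
    - cross-session-leak
  # Attack delivery techniques layered on top of the plugins
  strategies:
    - prompt-injection
```

With a file like this in place, the scan would typically be run with `npx promptfoo@latest redteam run`, though the exact workflow may differ between versions.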

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
