Prompt Optimizer: an open source tool for optimizing prompts for mainstream AI models

General Introduction

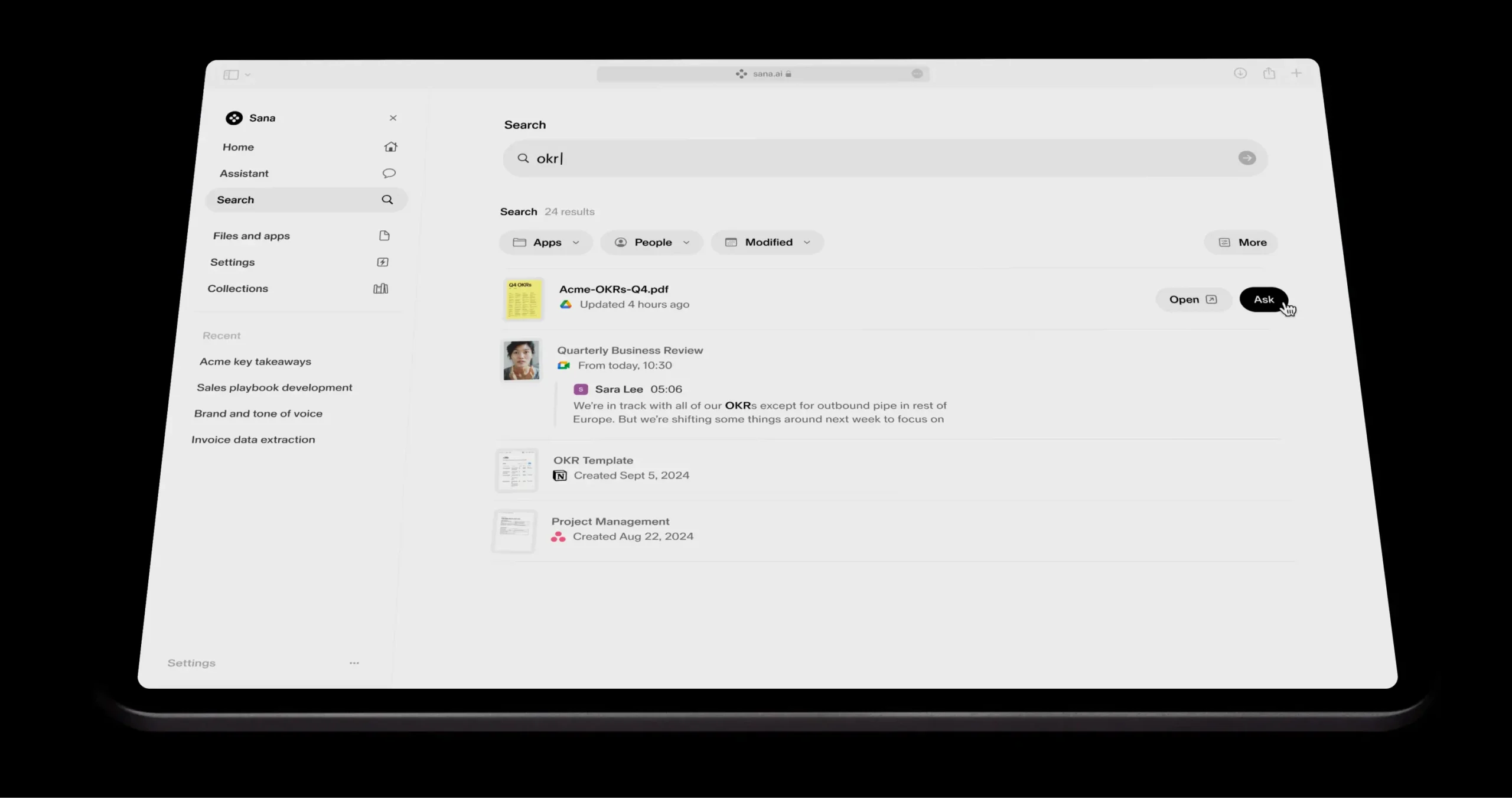

Prompt Optimizer is an open source tool for prompt optimization, developed by linshenkx on GitHub. It rewrites the prompts that users give to AI models in order to improve the quality and accuracy of the generated content. The tool supports one-click deployment to Vercel, is easy to operate, and is suitable for developers and AI enthusiasts. The project integrates several mainstream AI models (e.g. OpenAI, Gemini, DeepSeek) and provides real-time testing so that users can see the optimized results immediately. It also emphasizes privacy protection: all data processing is done on the client side, and API keys and history are stored locally in encrypted form. It has already gained a lot of attention on the X platform, where users have commented that it is "a great tool in the toolbox".

Function List

- Smart prompt optimization: Algorithms automatically optimize user-entered prompts to improve the quality of AI-generated results.

- Real-time effect test: Supports instant testing of optimized prompts and directly shows output comparisons across different models.

- Multi-model integration: Compatible with OpenAI, Gemini, DeepSeek, and other mainstream AI models to meet diverse needs.

- One-Click Deployment to Vercel: Provides an easy deployment process that requires no complex configuration to go live.

- Privacy protection mechanism: All operations are done on the client side, and keys and records are stored locally in encrypted form, which reduces the risk of data leakage.

- Multi-package project structure: Extensible, with core functionality (core), a UI component library (ui), a web application (web), and a Chrome plug-in (extension).

- History management: Saves the optimization history so users can review and reuse previous prompts.

Usage Guide

Installation process

Prompt Optimizer is an open source project hosted on GitHub that supports both local development and one-click deployment to Vercel. The detailed steps for installation and use are as follows:

Approach 1: Local Installation and Operation

- Clone the project

Clone the project locally by entering the following command in the terminal:

git clone https://github.com/linshenkx/prompt-optimizer.git

Then go to the project directory:

cd prompt-optimizer

- Install dependencies

The project uses pnpm as its package manager, so make sure pnpm is installed. If it is not, install it with:

npm install -g pnpm

Then run the following command to install all the necessary dependency packages:

pnpm install

- Configure API keys

The project supports multiple AI models, each of which needs its own API key. In the project root directory, create a .env file and add the following (keep only the entries you actually need):

VITE_OPENAI_API_KEY=your_openai_api_key
VITE_GEMINI_API_KEY=your_gemini_api_key
VITE_DEEPSEEK_API_KEY=your_deepseek_api_key
VITE_CUSTOM_API_KEY=your_custom_api_key
VITE_CUSTOM_API_BASE_URL=your_custom_api_base_url
VITE_CUSTOM_API_MODEL=your_custom_model_name

Each key can be obtained from the corresponding AI service provider (e.g., the OpenAI website).

- Start the development server

Run the following command to start the local development environment:

pnpm dev

Or use the following command to rebuild and run again (recommended for the first startup or after an update):

pnpm dev:fresh

After startup, the browser opens http://localhost:xxxx automatically (the actual port number is shown in the terminal), and you can access the tool interface.
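Before starting the development server, it can help to confirm which providers the .env file actually configures. The following is a minimal Python sketch and not part of the project: the helper names `parse_env` and `configured_providers` are hypothetical, only the variable names come from the API key step above, and the parser handles simple KEY=value lines only.

```python
from typing import Dict, List

# Variable names are taken from the article; the provider labels are illustrative.
PROVIDER_KEYS = {
    "OpenAI": "VITE_OPENAI_API_KEY",
    "Gemini": "VITE_GEMINI_API_KEY",
    "DeepSeek": "VITE_DEEPSEEK_API_KEY",
    "Custom": "VITE_CUSTOM_API_KEY",
}


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def configured_providers(env: Dict[str, str]) -> List[str]:
    """Return providers whose key is set and is not a 'your_...' placeholder."""
    found = []
    for provider, key_name in PROVIDER_KEYS.items():
        value = env.get(key_name, "")
        if value and not value.startswith("your_"):
            found.append(provider)
    return found


sample = """
# only two providers are really configured here
VITE_OPENAI_API_KEY=sk-example
VITE_GEMINI_API_KEY=your_gemini_api_key
VITE_DEEPSEEK_API_KEY="ds-example"
"""
print(configured_providers(parse_env(sample)))  # ['OpenAI', 'DeepSeek']
```

In practice the sample string would be replaced by the contents of the real .env file, read with `open(".env").read()`.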

Approach 2: Vercel One-Click Deployment

- Visit the GitHub project page

Open https://github.com/linshenkx/prompt-optimizer and click the "Fork" button in the upper right corner to copy the project to your GitHub account.

- Import to Vercel

Log in to Vercel, click "New Project", select "Import Git Repository", and enter your project address (e.g. https://github.com/your-username/prompt-optimizer).

- Configure environment variables

In Vercel's project settings, go to the "Environment Variables" page and add the API keys listed above (e.g. VITE_OPENAI_API_KEY), keeping the names consistent with the local configuration.

- Deploy the project

Click the "Deploy" button and Vercel will automatically build and deploy the project. After the deployment is complete, you will be given a public URL (e.g. https://prompt-optimizer-yourname.vercel.app) through which the tool can be accessed.

Main feature workflows

1. Smart prompt optimization

- Procedure:

- Open the main page of the tool and find the "Enter prompt" text box.

- Enter an initial prompt, for example, "Help me write an article about AI."

- Click the "Optimize" button. The system analyzes the input and generates an optimized prompt, such as "Please write a clearly structured and in-depth article about the development of AI technology".

- The optimized result is displayed below and can be copied and used directly.

- Usage Scenarios: Ideal for tasks where the quality of AI output matters, such as writing and code generation.
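One common way to implement this kind of optimization is to wrap the user's prompt in a rewriting instruction and send the result to a model. The sketch below illustrates that general idea in Python. It is an assumption, not the tool's actual method: the article does not describe the project's templates, and `OPTIMIZER_TEMPLATE` and `build_optimization_request` are hypothetical names.

```python
from typing import Dict, List

# Hypothetical rewriting instruction; the project's real templates are not
# described in the article.
OPTIMIZER_TEMPLATE = (
    "You are a prompt engineer. Rewrite the prompt below so that it states "
    "the task, the audience, the expected structure, and the level of detail.\n"
    "Return only the rewritten prompt.\n\n"
    "Original prompt:\n{prompt}"
)


def build_optimization_request(prompt: str) -> List[Dict[str, str]]:
    """Build a chat-style message list asking a model to rewrite the prompt."""
    cleaned = prompt.strip()
    if not cleaned:
        raise ValueError("prompt must not be empty")
    content = OPTIMIZER_TEMPLATE.format(prompt=cleaned)
    return [{"role": "user", "content": content}]


messages = build_optimization_request("Help me write an article about AI.")
print(messages[0]["content"])
```

The message list uses the role/content shape that many chat APIs accept; sending it to a provider is left out because that step depends on the model selected.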

2. Real-time effect testing

- Procedure:

- Below the optimized prompt, click the "Test Effect" button.

- Select the target AI model (e.g., OpenAI or Gemini) and the system will call the corresponding model to generate the output.

- The test results are displayed in the interface, where they can be compared with the output of the original prompt.

- Usage Scenarios: Verify that the optimization results are as expected and quickly adjust the prompt.
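The comparison step amounts to sending both prompts to the same model and showing the outputs side by side. Here is a minimal Python sketch of that idea, with a stub function standing in for a real API call. `compare_prompts` and `stub_model` are hypothetical names and do not reflect the project's internals.

```python
from typing import Callable, Dict


def compare_prompts(
    original: str, optimized: str, model: Callable[[str], str]
) -> Dict[str, Dict[str, str]]:
    """Run both prompts through the same model and pair each with its output."""
    return {
        "original": {"prompt": original, "output": model(original)},
        "optimized": {"prompt": optimized, "output": model(optimized)},
    }


def stub_model(prompt: str) -> str:
    """Stand-in for a real model call; it only reports the prompt's word count."""
    return f"[stub reply to {len(prompt.split())} words]"


result = compare_prompts(
    "Help me write an article about AI.",
    "Please write a clearly structured and in-depth article "
    "about the development of AI technology",
    stub_model,
)
for label, item in result.items():
    print(f"{label}: {item['output']}")
```

Passing the model as a callable keeps the comparison logic independent of any one provider, so the same function can be reused when switching models.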

3. Configuring multi-model support

- Procedure:

- On the Settings page (usually the gear icon in the upper right corner), go to the "Model Selection" option.

- Select a configured model (e.g., OpenAI, Gemini) from the drop-down menu, or manually enter the API information for a custom model.

- After saving the settings, return to the main interface to optimize and test with the selected model.

- Usage Scenarios: Switch models flexibly when you need to compare results across models.
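A custom model needs three pieces of information, matching the VITE_CUSTOM_API_KEY, VITE_CUSTOM_API_BASE_URL, and VITE_CUSTOM_API_MODEL variables from the installation section. The Python sketch below shows one way to check such an entry before saving it. The `CustomModelConfig` class and its validation rules are assumptions for illustration, not the project's own checks.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse


@dataclass
class CustomModelConfig:
    """Hypothetical container for the three custom-model settings."""

    api_key: str
    base_url: str
    model: str

    def problems(self) -> List[str]:
        """Return a list of human-readable issues; empty means it looks usable."""
        issues = []
        if not self.api_key.strip():
            issues.append("api_key is empty")
        parsed = urlparse(self.base_url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            issues.append("base_url must be an http(s) URL")
        if not self.model.strip():
            issues.append("model is empty")
        return issues


good = CustomModelConfig("my-key", "https://api.example.com/v1", "my-model")
bad = CustomModelConfig("", "api.example.com", "")
print(good.problems())
print(bad.problems())
```

Returning a list of issues rather than raising on the first one lets a settings form show every problem at once.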

4. Viewing history

- Procedure:

- Click the "History" button (usually a clock icon) on the screen.

- The system lists previously optimized prompts and their test results.

- Click on a record to view its details or to reuse the prompt directly.

- Usage Scenarios: Review past optimization results to avoid repeating work.
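The history feature can be pictured as a bounded, newest-first list of records. The following Python sketch models only that data structure. The class names and the 50-entry limit are assumptions, and the encrypted local storage that the tool applies is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HistoryEntry:
    original: str
    optimized: str


@dataclass
class PromptHistory:
    """Hypothetical newest-first history with a fixed maximum size."""

    max_entries: int = 50
    entries: List[HistoryEntry] = field(default_factory=list)

    def add(self, original: str, optimized: str) -> None:
        """Insert the newest record first and drop anything past the limit."""
        self.entries.insert(0, HistoryEntry(original, optimized))
        del self.entries[self.max_entries:]

    def reuse(self, index: int) -> str:
        """Return the optimized prompt of a stored record for reuse."""
        return self.entries[index].optimized


history = PromptHistory(max_entries=2)
history.add("first", "first, optimized")
history.add("second", "second, optimized")
history.add("third", "third, optimized")
print(len(history.entries))  # 2
print(history.reuse(0))      # third, optimized
```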

Notes

- Make sure the network connection is stable, because API calls require real-time access to external services.

- Keep API keys secure to avoid leakage.

- If you encounter deployment or operational issues, refer to the "Issues" board on the GitHub page for help.

With the steps above, users can quickly get started with Prompt Optimizer and use it to optimize prompts and improve the output of AI models, whether it runs locally or is deployed online.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
