PrivateGPT: A Document Q&A System with a Fully Local RAG Pipeline

General Introduction

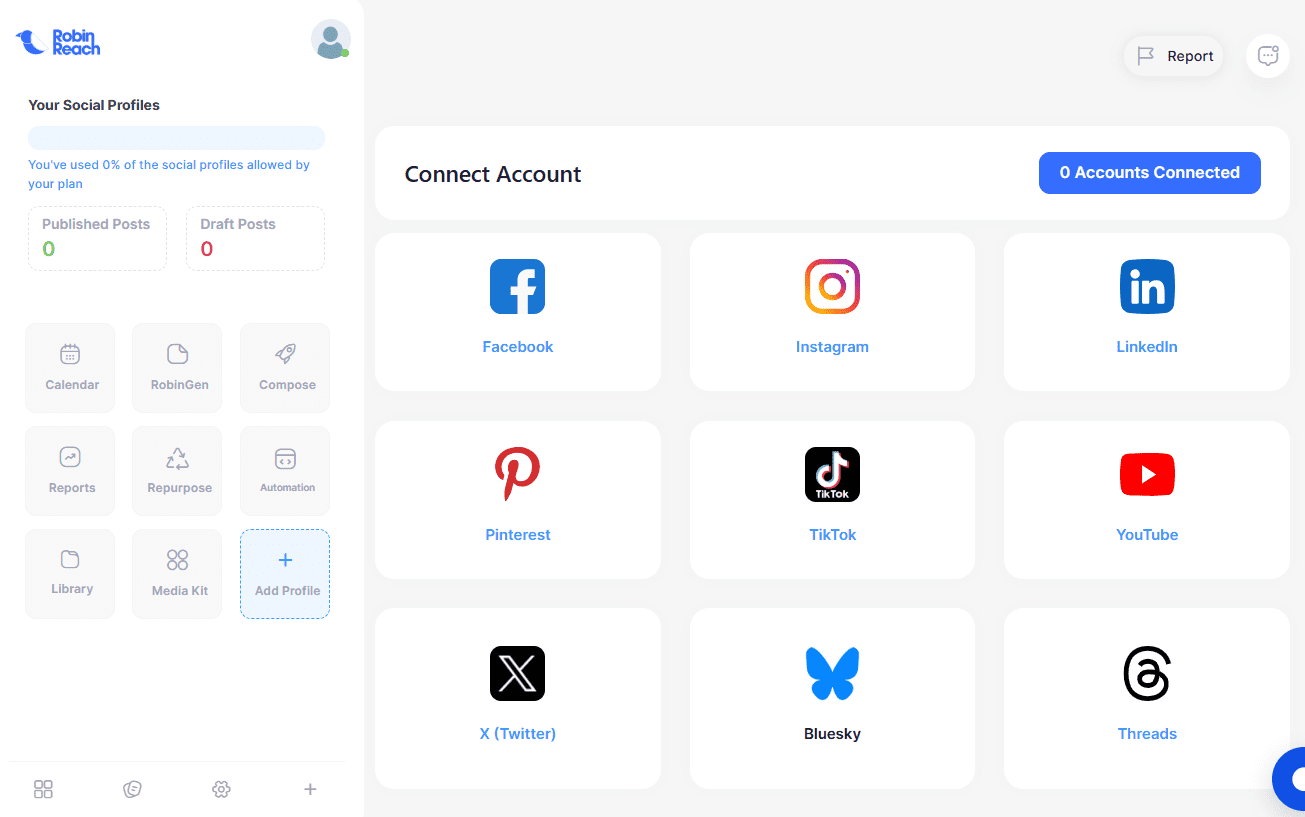

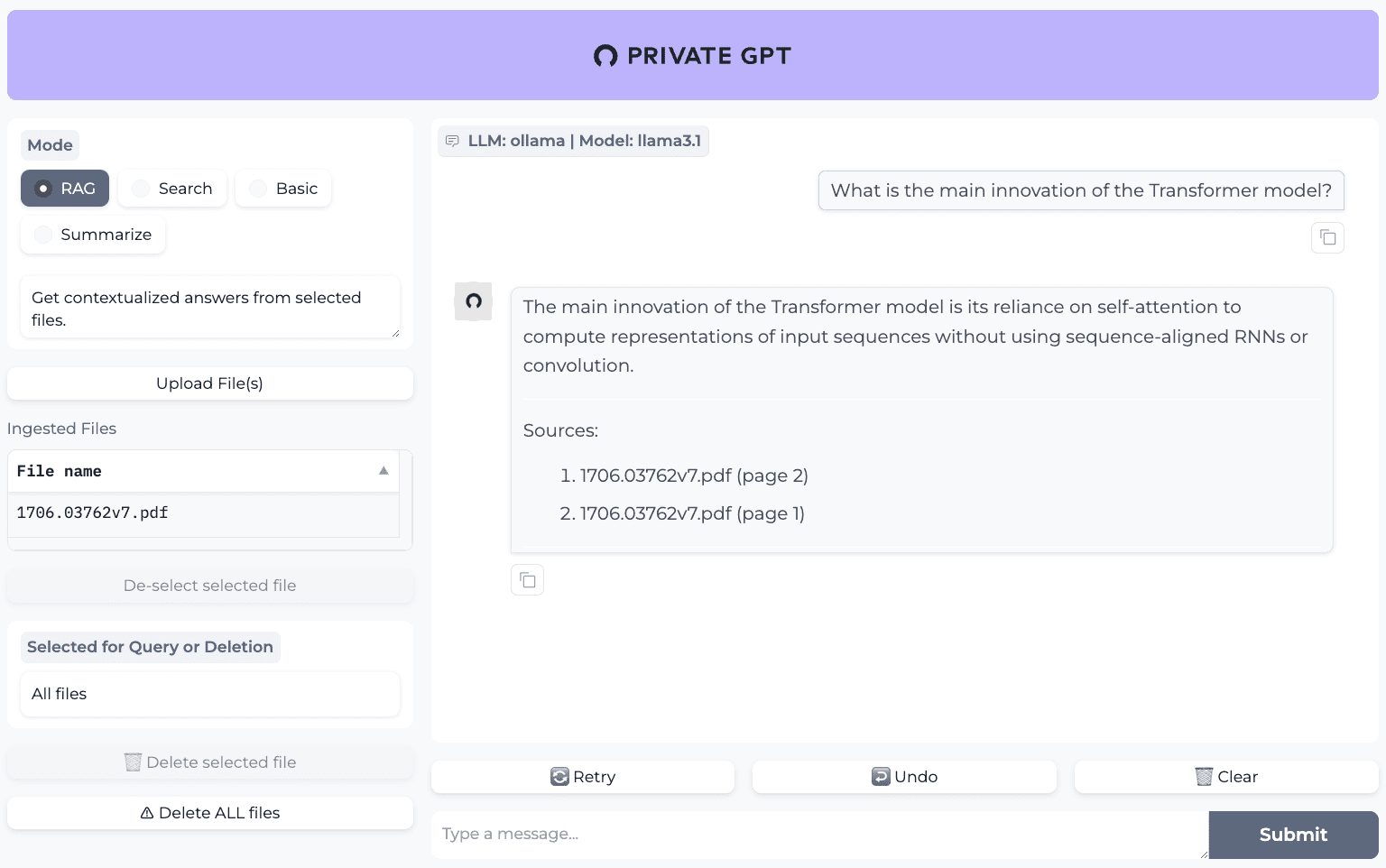

PrivateGPT is a production-ready AI project that lets users ask questions about their documents using Large Language Models (LLMs), even without an internet connection. The project is designed to be 100% private: all data is processed within the user's execution environment and none of it leaves that environment. PrivateGPT was developed by the Zylon team and provides an API for building private, context-aware AI applications. The API follows and extends the OpenAI API standard and supports both normal and streaming responses. PrivateGPT suits domains that require a high degree of data privacy, such as healthcare and law.
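Since the API is described as following the OpenAI standard, a client request and a streamed reply could be handled roughly as follows. This is a minimal sketch and is not taken from the PrivateGPT documentation: the helper names `build_chat_request`, `parse_stream_line`, and `collect_stream` are hypothetical, and the streaming format (`data: {...}` lines ending with `data: [DONE]`) is assumed from the OpenAI streaming convention.

```python
import json

def build_chat_request(question, stream=False):
    """Build an OpenAI-style chat completion payload (assumed shape)."""
    return {
        "messages": [{"role": "user", "content": question}],
        "stream": stream,
    }

def parse_stream_line(line):
    """Extract the text delta from one server-sent-event line.

    Returns None for blank lines, non-data lines, and the final [DONE] marker.
    """
    line = line.strip()
    if not line.startswith("data:"):
        return None
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return None
    chunk = json.loads(data)
    choices = chunk.get("choices") or []
    if not choices:
        return None
    return choices[0].get("delta", {}).get("content")

def collect_stream(lines):
    """Join all text deltas of a streamed response into one string."""
    parts = []
    for line in lines:
        piece = parse_stream_line(line)
        if piece:
            parts.append(piece)
    return "".join(parts)

if __name__ == "__main__":
    # Hand-written sample lines standing in for a real streamed response.
    sample = [
        'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        'data: {"choices": [{"delta": {"content": ", world"}}]}',
        "data: [DONE]",
    ]
    print(json.dumps(build_chat_request("What does the contract say?", stream=True)))
    print(collect_stream(sample))
```

With `stream=False` the server would be expected to return one complete JSON body. With `stream=True` the client reassembles the answer from the incremental deltas.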

Similar projects: Kotaemon, an easy-to-deploy open-source multimodal document Q&A tool

Function List

- Document ingestion: Manage document parsing, splitting, metadata extraction, embedding generation and storage.

- Chat & Completions: Use the context of ingested documents for conversation and task completion.

- Embedding generation: Generate embeddings from text.

- Context chunk retrieval: Given a query, return the most relevant chunks of text from the ingested documents.

- Gradio UI Client: Provides a working client for testing the API.

- Tools: A batch model download script, an ingestion script, document folder monitoring, and more.
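To make the splitting and metadata steps of ingestion concrete, here is a minimal sketch of cutting a document into overlapping chunks, each tagged with metadata. It is illustrative only: the function name `split_into_chunks`, the character-based windows, and the default sizes are assumptions, not PrivateGPT's actual splitter.

```python
def split_into_chunks(text, doc_name, chunk_size=200, overlap=50):
    """Split text into overlapping character windows, each tagged with metadata."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        piece = text[start:start + chunk_size]
        chunks.append({
            "doc_name": doc_name,   # which document the chunk came from
            "index": len(chunks),   # position of the chunk in the document
            "start": start,         # character offset in the original text
            "text": piece,
        })
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which tends to help retrieval later.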

Usage Guide

Installation process

- Clone the repository: First, clone PrivateGPT's GitHub repository.

git clone https://github.com/zylon-ai/private-gpt.git

cd private-gpt

- Install dependencies: Use pip to install the required Python dependencies.

pip install -r requirements.txt

- Configure the environment: Set environment variables and edit the settings file as needed.

cp settings-example.yaml settings.yaml

# Edit settings.yaml and configure the relevant parameters

- Start the service: Start the service using Docker.

docker-compose up -d

Using the Document Q&A Function

- Document ingestion: Place the documents to be processed in the specified folder and run the ingestion script.

python scripts/ingest.py --input-folder path/to/documents

- Q&A Interaction: Use the Gradio UI client for Q&A interactions.

python app.py

# Open http://localhost:7860 in a browser

High-Level API Usage

- Document parsing and embedding generation: Parse documents and generate embeddings with the high-level API.

from private_gpt import HighLevelAPI

api = HighLevelAPI()

api.ingest_documents("path/to/documents")

- Context retrieval and answer generation: Retrieve context and generate answers with the high-level API.

response = api.chat("Your question")

print(response)
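Context-aware answer generation usually means placing the retrieved chunks into the prompt ahead of the question. The following is a minimal sketch under that assumption. `build_rag_prompt`, its character budget, and the prompt wording are hypothetical and are not PrivateGPT's own prompt template.

```python
def build_rag_prompt(question, chunks, max_chars=2000):
    """Assemble a prompt from retrieved chunks, most relevant first.

    Chunks are added in order until the character budget is exhausted.
    """
    selected = []
    used = 0
    for chunk in chunks:
        if used + len(chunk) > max_chars:
            break
        selected.append(chunk)
        used += len(chunk)
    # Number each chunk so the model (and the reader) can refer back to it.
    context = "\n\n".join(
        f"[{i + 1}] {chunk}" for i, chunk in enumerate(selected)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The budget keeps the prompt within a model's context window. A real system would more likely count tokens than characters.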

Low-Level API Usage

- Embedding generation: Generate text embeddings using the low-level API.

from private_gpt import LowLevelAPI

api = LowLevelAPI()

embedding = api.generate_embedding("Your text")

- Context chunk retrieval: Retrieve context chunks using the low-level API.

chunks = api.retrieve_chunks("Your query")

print(chunks)
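Chunk retrieval generally works by ranking stored chunks by the similarity between their embeddings and the query embedding. The sketch below substitutes a toy bag-of-words embedding for a real model, purely to stay self-contained. `toy_embed`, `cosine`, and `top_k_chunks` are hypothetical names and are not part of PrivateGPT.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k_chunks(query, chunks, k=2):
    """Return the k chunks most similar to the query, best first."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]

if __name__ == "__main__":
    docs = [
        "The patient has a fever",
        "The contract ends in May",
        "Fever is treated with rest",
    ]
    print(top_k_chunks("patient fever", docs, k=2))
```

A real embedding model returns dense vectors that capture meaning rather than exact word overlap, but the ranking step stays the same.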

Toolset Usage

- Batch model download: Use the batch model download script to download the required models.

python scripts/download_models.py

- Documents folder monitoring: Automatically ingest new documents using the Document Folder Monitor tool.

python scripts/watch_folder.py --folder path/to/documents
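Folder monitoring can be approximated by polling a directory and picking up files that have not been seen before. This is a minimal sketch under that assumption and does not show how the script above works. `find_new_files` and the extension list are hypothetical.

```python
import tempfile
from pathlib import Path

# Hypothetical set of extensions to pick up; adjust to the formats you ingest.
WATCHED_SUFFIXES = {".txt", ".md", ".pdf"}

def find_new_files(folder, seen):
    """Return files under folder not yet in `seen`, and record them as seen."""
    new_files = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in WATCHED_SUFFIXES:
            key = str(path.resolve())
            if key not in seen:
                seen.add(key)
                new_files.append(path)
    return new_files

if __name__ == "__main__":
    # Demo in a temporary folder: each call reports only files added since the last one.
    with tempfile.TemporaryDirectory() as folder:
        seen = set()
        Path(folder, "a.txt").write_text("first")
        print([p.name for p in find_new_files(folder, seen)])
        Path(folder, "b.md").write_text("second")
        print([p.name for p in find_new_files(folder, seen)])
```

In practice the function would be called in a loop with a short `time.sleep` between polls, and each returned path would be passed to the ingestion step.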

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
