PRAG: A Parametric Retrieval-Augmented Generation Tool for Improving the Performance of Q&A Systems

General Introduction

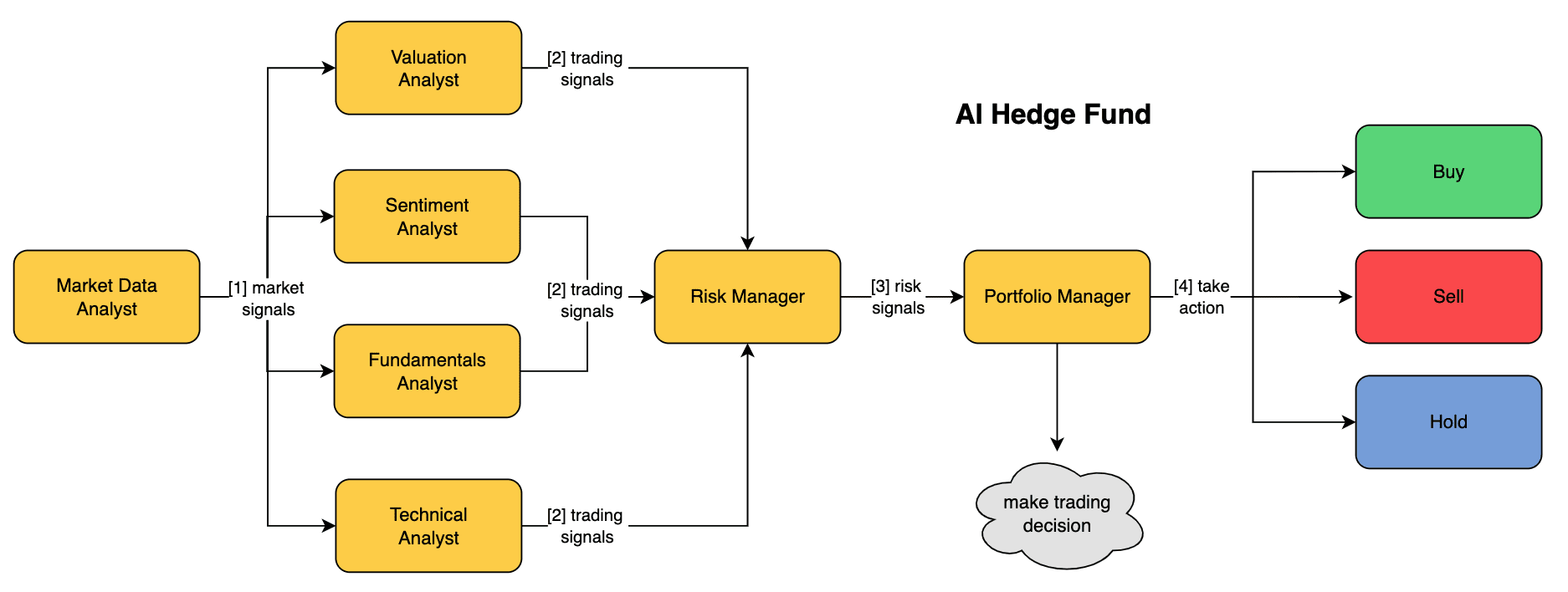

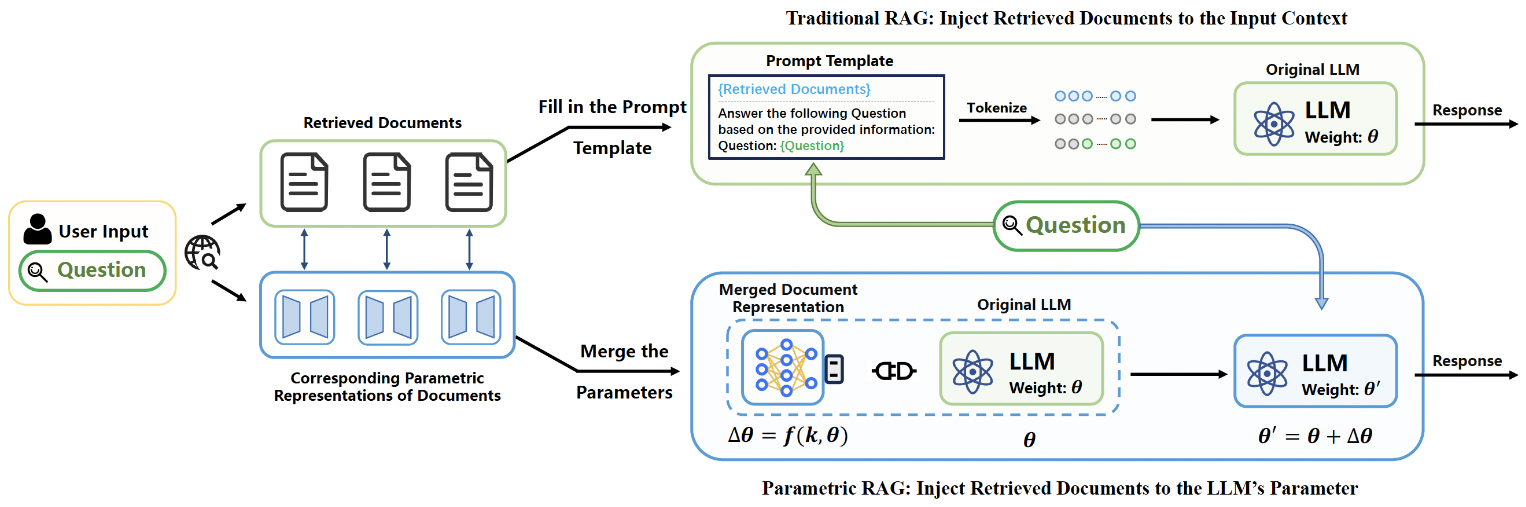

PRAG (Parametric Retrieval-Augmented Generation) is a retrieval-augmented generation tool that embeds external knowledge directly into the parameter space of a Large Language Model (LLM). Compared with traditional in-context retrieval-augmented generation, which appends retrieved documents to the prompt, this approach reduces computational overhead at inference time and integrates external knowledge more deeply, which is intended to improve the model's reasoning and synthesis capabilities. PRAG provides an end-to-end implementation, including a data augmentation module, a parameter training module, and an inference module, for performance testing on various question-answering datasets.

Feature List

- Data Augmentation Module: Converts documents into augmented datasets.

- Parameter Training Module: Trains additional LoRA parameters to produce a parametric representation of each document.

- Inference Module: Merges the parametric representations of the relevant documents and inserts them into the LLM for inference.

- Environment Installation: Provides detailed steps and dependencies for setting up the environment.

- Flexible Data Augmentation: Supports either using the pre-augmented data files directly or running data augmentation yourself.

- Retrieval Preparation: Downloads and prepares the Wikipedia dataset for retrieval.

Usage Guide

Environment Installation

- Create and activate a virtual environment:

conda create -n prag python=3.10.4

conda activate prag

- Install the necessary dependencies:

pip install torch==2.1.0

pip install -r requirements.txt

- Update the project path:

In src/root_dir_path.py, set the ROOT_DIR variable to the path of the folder where PRAG is stored.
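As a rough illustration, the file could look like the sketch below. Only the file name and the `ROOT_DIR` variable come from the text above; the path value and the `resolve` helper are hypothetical and are not part of PRAG.

```python
import os

# Hypothetical location; replace it with the folder where PRAG is stored.
ROOT_DIR = "/home/user/PRAG"


def resolve(*parts: str) -> str:
    """Join path components onto ROOT_DIR (illustrative helper, not part of PRAG)."""
    return os.path.join(ROOT_DIR, *parts)


# Example: build the path of the Wikipedia dump used in the retrieval step.
print(resolve("data", "dpr", "psgs_w100.tsv.gz"))
```

An absolute path is the safer choice here, since a relative path would depend on the directory from which each script is launched.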

Data Augmentation

- Use the pre-augmented data files:

tar -xzvf data_aug.tar.gz

- Run data augmentation yourself:

- Download the Wikipedia dataset:

mkdir -p data/dpr

wget -O data/dpr/psgs_w100.tsv.gz https://dl.fbaipublicfiles.com/dpr/wikipedia_split/psgs_w100.tsv.gz

- Prepare BM25 retrieval:

# For the specific steps, refer to the project documentation
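To make the retrieval step more concrete, here is a minimal sketch of reading the downloaded passages and ranking them with BM25. It is not the project's retrieval setup, which is described in its documentation. The function names `iter_passages`, `tokenize`, and `bm25_rank` are hypothetical, the whitespace tokenizer and the `k1`/`b` values are simplifying assumptions, and the file is assumed to be a tab-separated table with an `id`, `text`, `title` header row.

```python
import csv
import gzip
import math
from collections import Counter


def iter_passages(path, limit=None):
    """Yield (id, title, text) tuples from a gzipped TSV with an id/text/title header."""
    with gzip.open(path, "rt", encoding="utf-8", newline="") as f:
        reader = csv.DictReader(f, delimiter="\t")
        for i, row in enumerate(reader):
            if limit is not None and i >= limit:
                break
            yield row["id"], row["title"], row["text"]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def bm25_rank(query, docs, k1=1.5, b=0.75):
    """Return (doc_index, score) pairs sorted by descending BM25 score."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for idx, toks in enumerate(tokenized):
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append((idx, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy corpus standing in for Wikipedia passages.
docs = [
    "Paris is the capital of France",
    "Berlin is the capital of Germany",
    "The Nile is a river in Africa",
]
ranking = bm25_rank("capital of France", docs)
print(docs[ranking[0][0]])
```

To try it on the real dump, something like `[text for _, _, text in iter_passages("data/dpr/psgs_w100.tsv.gz", limit=1000)]` would load the first thousand passages as the `docs` list. Scoring the full file this way would be slow, which is why a dedicated search index is the usual choice in practice.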

Parameter Training

- Generate a parametric representation of each document:

# For the specific steps, refer to the project documentation
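The idea behind a LoRA-based parametric representation can be sketched in a few lines. The example below is not PRAG's training code. It only shows the standard LoRA formulation, in which a frozen weight matrix `W` is augmented by a low-rank product `B @ A` scaled by `alpha / r`. The helper names and the toy dimensions are assumptions made for illustration.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(W, B, A, alpha, r):
    """Return W + (alpha / r) * B @ A, the effective weight with the adapter applied."""
    delta = matmul(B, A)
    s = alpha / r
    return [[w + s * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


d_out, d_in, r, alpha = 8, 8, 2, 4  # toy sizes chosen for illustration
rng = random.Random(0)

W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen base weight
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable, small random init
B = [[0.0] * r for _ in range(d_out)]                               # trainable, zero init

# With B at zero the adapter contributes nothing, so training starts from the base model.
W_eff = lora_weight(W, B, A, alpha, r)
print("unchanged at initialisation:", W_eff == W)

# Only A and B are trained, so each document costs r * (d_in + d_out) parameters
# per adapted matrix instead of d_out * d_in.
full_params = d_out * d_in
lora_params = r * (d_in + d_out)
print("full:", full_params, "lora:", lora_params)
```

Training on the augmented data for one document would update only `A` and `B`, and the resulting pair then serves as that document's compact representation.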

Inference

- Merge the parametric representations of the relevant documents and insert them into the LLM for inference:

# For the specific steps, refer to the project documentation
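One plausible way to merge several document adapters, assumed here for illustration and not confirmed by the text above, is to concatenate their LoRA matrices along the rank dimension. This is mathematically the same as summing the individual low-rank updates. The sketch uses tiny integer matrices so the result can be checked by hand; `merge_adapters` and the other names are hypothetical, and the `alpha / r` scaling factor is omitted for brevity.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of equal shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def merge_adapters(adapters):
    """Concatenate (B, A) pairs along the rank dimension.

    B matrices are joined column-wise and A matrices row-wise, so that
    B_merged @ A_merged equals the sum of the individual B_i @ A_i.
    """
    d_out = len(adapters[0][0])
    B_merged = [sum((B[i] for B, _ in adapters), []) for i in range(d_out)]
    A_merged = [row for _, A in adapters for row in A]
    return B_merged, A_merged


# Two rank-1 adapters, standing in for two retrieved documents.
B1, A1 = [[1], [2]], [[1, 0, 1]]
B2, A2 = [[0], [1]], [[0, 2, 0]]

B_m, A_m = merge_adapters([(B1, A1), (B2, A2)])
delta = matmul(B_m, A_m)

W = [[1, 0, 0], [0, 1, 0]]  # toy frozen base weight
W_eff = add(W, delta)       # weight used for inference on this query
print(W_eff)
```

Because the merged adapter is built per query from the retrieved documents, the base weights stay untouched and a different set of documents can be plugged in for the next question.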

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
