Portkey: a development tool for connecting multiple AI models and managing applications

General Introduction

Portkey is a platform that helps enterprises and developers rapidly build, manage, and optimize AI applications. It connects over 200 large language models (LLMs) through a unified AI gateway and provides features such as prompt management, load balancing, and real-time monitoring. Its core goal is to make AI applications more reliable, efficient, and secure in production environments. Portkey suits both small teams that need to develop AI features quickly and large organizations with complex compliance and high-performance requirements. It is open source: developers can deploy it for free or opt for paid enterprise services. Its clean interface and rich feature set have made it popular with technical teams.

Feature List

- AI Gateway: Connects 250+ large language models and provides a unified API interface that simplifies model invocation.

- Prompt Management: Lets teams collaboratively create, test, and version-control AI prompts to improve development efficiency.

- Load Balancing and Automatic Switching: Distributes requests across multiple models and switches to a backup model if one fails.

- Real-time Monitoring: Provides 40+ performance metrics for tracking the operational status of AI applications.

- Smart Cache: Speeds up responses and reduces costs for repeated queries.

- Safety Protection: Validates inputs and outputs to help ensure data security and compliance.

- Multimodal Support: Works with multimodal models, such as image and speech models, to broaden application scenarios.

- Open-source Deployment: A local deployment option is available, and developers can use the core features for free.

How to Use

Portkey is easy to use, and both developers and business users can get started quickly. The detailed guide below will help you get the most out of it.

Installation and Configuration

Portkey can be used in two ways: as a cloud-hosted service or as a local deployment. The specific steps for each are below.

Cloud-Hosted Service

- Register an account

Open https://portkey.ai/ and click the "Sign Up" button in the upper-right corner. Enter your email address and password to register. You will then receive a verification email; click the link in it to activate your account.

- Get an API key

After logging in, go to the Dashboard and click "API Keys". Click "Generate" to create an API key, then copy it and store it safely. This key is your main credential for calling the Portkey service.

- Integrate the code

Portkey works with several programming languages, such as Python and JavaScript. In Python, for example, getting started takes only a few lines of code:

```python
from portkey_ai import Portkey

client = Portkey(
    api_key="YOUR_PORTKEY_API_KEY",  # the Portkey API key generated above
    virtual_key="YOUR_VIRTUAL_KEY",  # assumption: the model provider's key as stored in Portkey
)
response = client.chat.completions.create(
    messages=[{"role": "user", "content": "Hello, what's the weather like today?"}],
    model="gpt-4o-mini",
)
print(response.choices[0].message.content)  # print only the model's reply text
```

Replace `YOUR_PORTKEY_API_KEY` with the key you just copied, and `YOUR_VIRTUAL_KEY` with the key Portkey holds for your model provider. Then run the code to call the AI model.

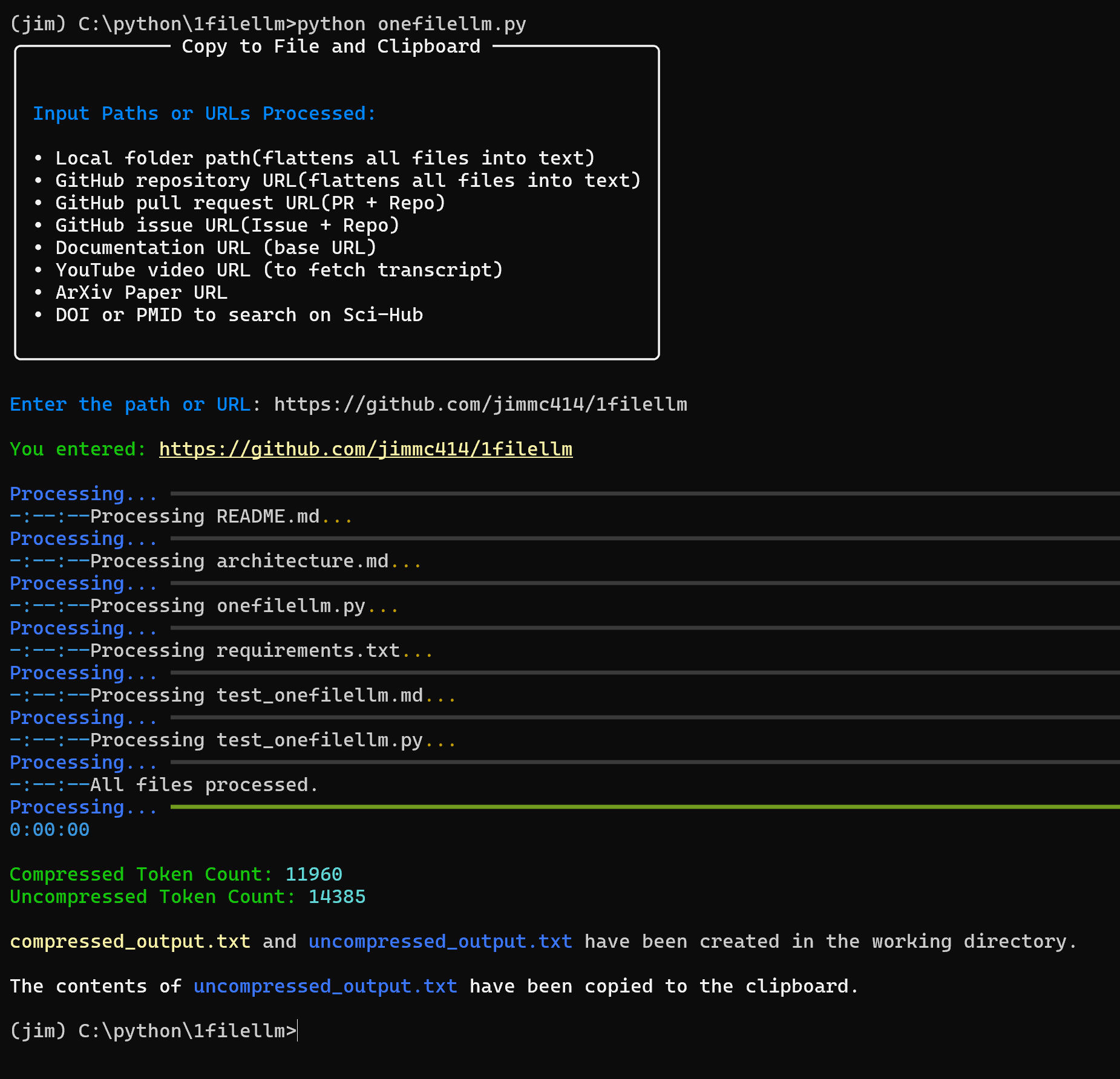

Local deployment (open source version)

- Install Node.js

Make sure Node.js is installed on your computer (version 16 or later is recommended). You can download it from the official Node.js website.

- Run the command

Open a terminal and run the following command to deploy the Portkey Gateway in one step:

```shell
npx @portkey-ai/gateway
```

After a successful start, the gateway listens on http://localhost:8787 by default, and its console is available at http://localhost:8787/public/.

- Configure the model

In the local gateway console, enter your model provider's API key (for example, an OpenAI key such as `sk-xxx`) and save the configuration. You can then call the model through the local gateway.
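To connect the local steps to code, the sketch below builds an OpenAI-style chat request addressed to the local gateway, using only Python's standard library. It builds the request without sending it. It assumes the following, based on our reading of the curl example in the open-source gateway's README:

- The gateway exposes an OpenAI-compatible `/v1/chat/completions` route on port 8787.
- The gateway selects the upstream provider from an `x-portkey-provider` header.
- The provider key is passed as a bearer token.

`sk-xxx` is a placeholder, and `build_gateway_request` is a hypothetical helper name. Confirm the route and header names against the gateway version you installed.

```python
import json
import urllib.request

# Assumed default route of the locally running gateway
GATEWAY_URL = "http://localhost:8787/v1/chat/completions"


def build_gateway_request(provider_key: str, prompt: str,
                          provider: str = "openai",
                          model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat request for the local gateway."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {provider_key}",  # the provider's own key, e.g. sk-xxx
        "x-portkey-provider": provider,             # assumed header naming the upstream provider
    }
    return urllib.request.Request(GATEWAY_URL, data=body, headers=headers, method="POST")


req = build_gateway_request("sk-xxx", "Hello, what's the weather like today?")

# To actually send it (the gateway must be running):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```

Because the payload follows the OpenAI chat format, swapping providers in this sketch only means changing the `provider` argument and key. The message body stays the same.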

Main Functions

AI Gateway Usage

- Connect a model: Log in to the Portkey dashboard and go to the "Gateway" page. Select the model provider you want to use (e.g., OpenAI or Anthropic), enter the corresponding API key, and click "Save". From then on, all requests are routed through Portkey.

- Load balancing: In the "Routing" settings, add multiple model keys and enable "Load Balancing". Portkey then distributes requests across them automatically to keep performance optimal.

- Automatic switching: Enable "Fallbacks" and add a fallback model. If the primary model fails, the system automatically switches to the fallback model.
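The routing options above can also be expressed as gateway configs. The sketch below writes a load-balancing config and a fallback config as Python dictionaries and serializes them to JSON.

The field names (`strategy`, `mode`, `targets`, `virtual_key`, `weight`, `override_params`) follow Portkey's config format as we understand it from its documentation. The virtual key names and the Anthropic model name are placeholders. Verify the schema against the current docs before relying on it.

```python
import json

# Load balancing: split traffic between two provider keys by weight (placeholder keys).
loadbalance_config = {
    "strategy": {"mode": "loadbalance"},
    "targets": [
        {"virtual_key": "openai-key-a", "weight": 0.7},
        {"virtual_key": "openai-key-b", "weight": 0.3},
    ],
}

# Fallback: try targets in order and move to the next one if the current one fails.
fallback_config = {
    "strategy": {"mode": "fallback"},
    "targets": [
        {"virtual_key": "openai-key-a"},  # primary
        {
            "virtual_key": "anthropic-key",  # backup (placeholder)
            # Override the model name, since the primary's model ID would not exist on this provider
            "override_params": {"model": "your-anthropic-model"},
        },
    ],
}

# Serialize to JSON, e.g. to paste into the dashboard or attach to requests.
loadbalance_json = json.dumps(loadbalance_config, indent=2)
fallback_json = json.dumps(fallback_config, indent=2)
print(fallback_json)
```

With the Python SDK, a config like this can reportedly be attached when creating the client, for example `Portkey(api_key=..., config=fallback_config)`. Treat that call as an assumption and confirm it in the SDK reference.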

Prompt Management

- Create a prompt: Go to the "Prompts" page and click "New Prompt". Enter a prompt such as "You are an assistant who helps users with their questions" and save it.

- Test a prompt: Enter a user message in the test area on the right and click "Run" to see the model's output. Adjust the prompt until you are satisfied with the result.

- Version control: After each change to a prompt, click "Save as New Version". You can switch between versions or roll back from the version history.

- Teamwork: Click "Share" to generate a link that invites team members to edit together.
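The "Save as New Version" and rollback workflow can be pictured as an append-only version history. The class below is a hypothetical local illustration of that idea. It is not Portkey's API; Portkey keeps prompt versions on its own side.

In this sketch, each save appends a new version. As a design choice of the sketch, rollback re-saves an earlier version as the newest one, so no history is lost.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Hypothetical append-only version history for a single prompt."""
    versions: List[str] = field(default_factory=list)

    def save_new_version(self, text: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(text)
        return len(self.versions)

    def get(self, version: int) -> str:
        """Return the text of a given 1-based version."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"no such version: {version}")
        return self.versions[version - 1]

    @property
    def current(self) -> str:
        """The most recently saved version."""
        return self.versions[-1]

    def rollback(self, version: int) -> int:
        """Re-save an earlier version as the newest one, keeping the full history."""
        return self.save_new_version(self.get(version))


history = PromptHistory()
history.save_new_version("You are an assistant who helps users with their questions.")
history.save_new_version("You are a concise assistant who answers in three sentences or fewer.")
history.rollback(1)
print(len(history.versions), history.current)
```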

Real-time Monitoring

- View logs: On the "Logs" page, you can see the input, output, and elapsed time of each request in real time. If something goes wrong, the error details are shown directly.

- Performance analysis: Go to "Analytics" to see metrics such as request count, latency, and cost. Data can be filtered by day, week, or month.
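To make the Analytics metrics concrete, the sketch below computes per-day request count, average latency, and total cost from a list of log records. The record fields (`timestamp`, `latency_ms`, `cost_usd`) and the sample values are hypothetical and chosen for illustration. They are not Portkey's log schema, and in practice the dashboard computes these figures for you.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List


def daily_metrics(logs: List[dict]) -> Dict[str, dict]:
    """Group hypothetical log records by calendar day and summarize each day."""
    buckets: Dict[str, List[dict]] = defaultdict(list)
    for record in logs:
        day = datetime.fromisoformat(record["timestamp"]).date().isoformat()
        buckets[day].append(record)

    summary = {}
    for day in sorted(buckets):
        records = buckets[day]
        summary[day] = {
            "requests": len(records),
            "avg_latency_ms": sum(r["latency_ms"] for r in records) / len(records),
            "total_cost_usd": round(sum(r["cost_usd"] for r in records), 6),
        }
    return summary


# Hypothetical sample records
logs = [
    {"timestamp": "2024-05-01T09:15:00", "latency_ms": 820, "cost_usd": 0.0004},
    {"timestamp": "2024-05-01T17:40:00", "latency_ms": 610, "cost_usd": 0.0003},
    {"timestamp": "2024-05-02T11:05:00", "latency_ms": 950, "cost_usd": 0.0006},
]
for day, stats in daily_metrics(logs).items():
    print(day, stats)
```

Weekly or monthly views only need a different grouping key. For example, `record["timestamp"][:7]` groups these ISO timestamps by month.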

Using the Featured Functions

Smart Cache

- Enable "Semantic Caching" in the "Cache" settings. When a query is repeated, the system returns the cached result directly, saving time and cost.

- Example: If users ask "What's the weather like today?" several times, every response after the first is returned faster.
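Semantic caching returns a stored answer when a new query is close enough in meaning to an earlier one, not only when it is identical. The toy sketch below shows that lookup logic. It uses bag-of-words cosine similarity as a stand-in for the embedding similarity a real semantic cache would use. The 0.8 threshold and all names are illustrative assumptions, not Portkey's implementation or settings.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def _vector(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for a text embedding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToySemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def get(self, query: str) -> Optional[str]:
        """Return the response of the most similar past query if it clears the threshold."""
        q = _vector(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = _cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, query: str, response: str) -> None:
        self.entries.append((_vector(query), response))


def answer(cache: ToySemanticCache, query: str, call_model: Callable[[str], str]) -> str:
    """Serve from the cache when possible; otherwise call the model and cache the result."""
    cached = cache.get(query)
    if cached is not None:
        return cached
    response = call_model(query)
    cache.put(query, response)
    return response


calls: List[str] = []


def fake_model(query: str) -> str:
    """Stands in for a real (slow, paid) model call and records each invocation."""
    calls.append(query)
    return f"answer to: {query}"


cache = ToySemanticCache()
r1 = answer(cache, "What's the weather like today?", fake_model)
r2 = answer(cache, "Tell me what's the weather like today", fake_model)  # similar wording: cache hit
r3 = answer(cache, "Recommend a good book", fake_model)                  # unrelated: model is called
print(len(calls))
```

The second query is worded differently but scores above the threshold, so it reuses the first answer without a model call. That reuse is where a semantic cache saves time and cost.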

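Multimodal Support

- To call a multimodal model, specify a model that supports image input and pass the image inside the message. In the OpenAI-compatible message format, the image goes into the `content` list, here as a base64 data URL:

```python
import base64

with open("path/to/image.jpg", "rb") as f:  # read and base64-encode the local image
    image_b64 = base64.b64encode(f.read()).decode("utf-8")
response = client.chat.completions.create(
    messages=[{"role": "user", "content": [
        {"type": "text", "text": "Describe this image"},
        {"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{image_b64}"}}]}],
    model="multimodal-model",  # placeholder: use the name of a vision-capable model
)
```

- The response contains the model's analysis of the image.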

With these steps, you can easily get started with Portkey, whether you're developing a simple AI chat tool or managing a complex enterprise AI application.

Application Scenarios

- Enterprise customer service system

Portkey can connect multiple language models to build an intelligent customer service system. Load balancing and automatic switching keep the service running during peak periods, and prompt management helps improve response quality.

- Content generation tools

Writers and marketing teams can use Portkey to test different prompts for generating articles, ad copy, and more. Smart caching reduces the cost of repeated generation.

- Educational assistant development

Developers can use Portkey's multimodal support to build educational applications that answer questions and analyze images. Local deployment suits small educational organizations.

Q&A

- What programming languages does Portkey support?

Python, JavaScript, and REST APIs. It is also compatible with the OpenAI SDK, LangChain, and other commonly used libraries.

- Do I have to pay for local deployment?

No, the open-source version is completely free. Only the enterprise cloud service requires a subscription.

- How is data kept secure?

Portkey is ISO 27001 and SOC 2 certified, encrypts all data in transit and at rest, and supports private cloud deployments.

© Copyright notes

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
