Ovis: a vision-text alignment model for accurately reverse-engineering prompts from images

General Introduction

Ovis (Open VISion) is an open-source multimodal large language model (MLLM) developed by the AIDC-AI team of Alibaba's International Digital Commerce Group and hosted on GitHub. It uses a structural embedding alignment technique to merge visual and textual data efficiently, accepts multimodal inputs such as images, text, and video, and generates the corresponding output. As of March 2025, the project has released the Ovis2 family (1B to 34B parameters), which delivers strong performance at small model sizes, improved reasoning, and support for high-resolution images and video. The project targets developers and researchers, provides detailed documentation and code, emphasizes open-source collaboration, and has gained traction in the community.

Feature List

- Multimodal input support: Handles multiple input types, including images, text, and video.

- Visual-text alignment: Generates text descriptions that precisely match the image or video content.

- High-resolution image processing: Optimized for high-resolution images, preserving fine detail.

- Video and multi-image analysis: Processes sequences of video frames and multiple images.

- Enhanced reasoning: Stronger logical reasoning through instruction tuning and DPO (Direct Preference Optimization) training.

- Multilingual OCR support: Recognizes and processes text in images across multiple languages.

- Multiple model sizes: Models from 1B to 34B parameters are available to suit different hardware.

- Quantized versions: For example, the GPTQ-Int4 model, which lowers the hardware requirements.

- Gradio interface integration: Provides an intuitive web interface for interaction.

Usage Guide

Installation

Ovis requires a specific Python environment and set of libraries. Install it as follows:

- Environment preparation

- Make sure Git and Anaconda are installed.

- Clone the Ovis repository:

git clone git@github.com:AIDC-AI/Ovis.git

- Create and activate a virtual environment:

conda create -n ovis python=3.10 -y

conda activate ovis

- Dependency installation

- Go to the project directory:

cd Ovis

- Install the dependencies listed in requirements.txt:

pip install -r requirements.txt

- Install the Ovis package:

pip install -e .

- (Optional) Install acceleration libraries such as Flash Attention:

pip install flash-attn==2.7.0.post2 --no-build-isolation


- Environment verification

- Check the PyTorch version (2.4.0 recommended):

python -c "import torch; print(torch.__version__)"
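If you want one check that covers the whole setup, the following minimal Python sketch reports the Python version, the installed PyTorch version compared with the recommended 2.4.0, and whether flash-attn is present. It reads package metadata instead of importing torch, so it still runs when a package is missing. The function names are illustrative and are not part of Ovis.

```python
import importlib.metadata
import importlib.util
import sys

RECOMMENDED_TORCH = "2.4.0"  # recommended version stated in this guide


def package_version(name):
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return importlib.metadata.version(name)
    except importlib.metadata.PackageNotFoundError:
        return None


def check_environment():
    """Collect the facts the installation steps care about, without importing heavy libraries."""
    torch_version = package_version("torch")
    return {
        "python": "%d.%d" % sys.version_info[:2],
        "python_is_3_10": sys.version_info[:2] == (3, 10),
        "torch": torch_version,
        # Compare only the release part, e.g. "2.4.0+cu121" -> "2.4.0"
        "torch_is_recommended": torch_version is not None
        and torch_version.split("+")[0] == RECOMMENDED_TORCH,
        # find_spec returns None when the module cannot be found
        "flash_attn_installed": importlib.util.find_spec("flash_attn") is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```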


How to use Ovis

Ovis supports both command-line inference and a Gradio web interface. A detailed guide follows:

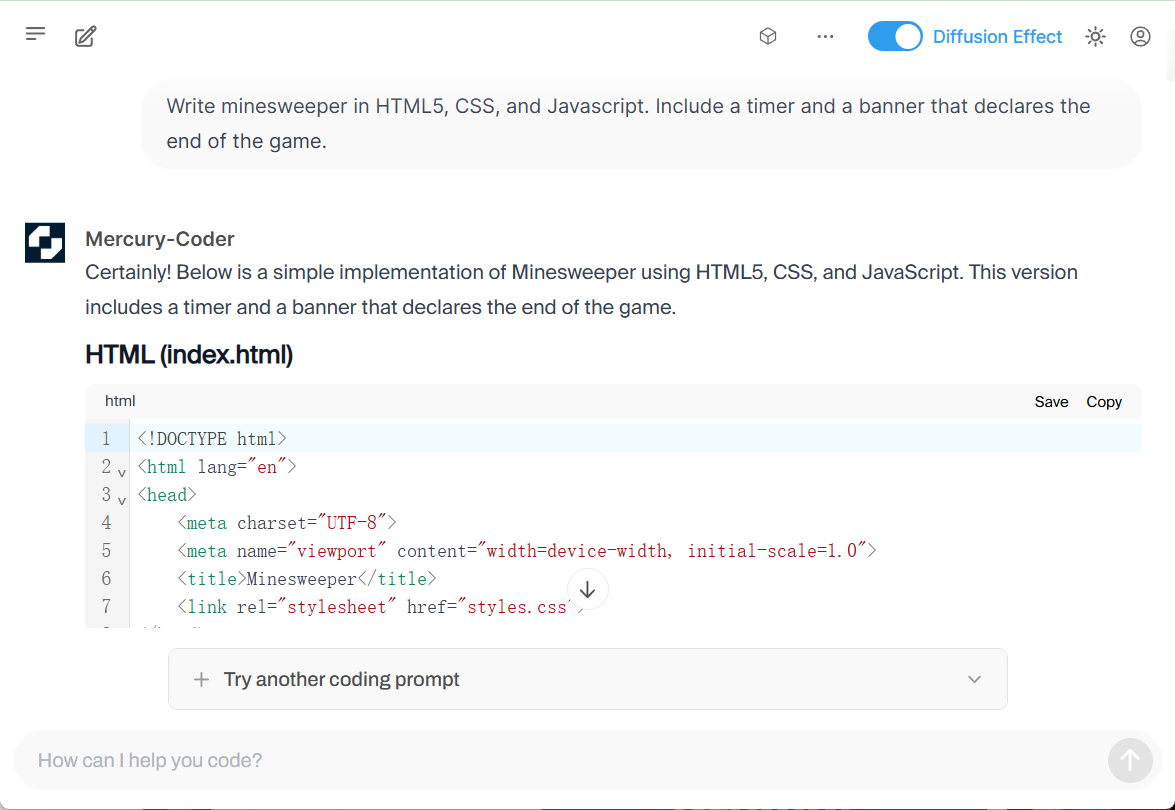

Command-line inference

- Preparing the model and inputs

- Download the model from Hugging Face (e.g. Ovis2-8B):

git clone https://huggingface.co/AIDC-AI/Ovis2-8B

- Prepare the input files, e.g. an image example.jpg and the prompt "Describe this picture".


- Running inference

Create a script named run_ovis.py:

    import torch
    from PIL import Image
    from transformers import AutoModelForCausalLM

    # Load the model (trust_remote_code=True is needed for Ovis's custom model code)
    model = AutoModelForCausalLM.from_pretrained(
        "AIDC-AI/Ovis2-8B",
        torch_dtype=torch.bfloat16,
        multimodal_max_length=32768,
        trust_remote_code=True,
    ).cuda()

    # Get the text and visual tokenizers
    text_tokenizer = model.get_text_tokenizer()
    visual_tokenizer = model.get_visual_tokenizer()

    # Prepare the input: one image plus a text prompt
    image = Image.open("example.jpg")
    text = "Describe this picture"
    query = f"<image>\n{text}"
    prompt, input_ids, pixel_values = model.preprocess_inputs(query, [image])
    attention_mask = torch.ne(input_ids, text_tokenizer.pad_token_id)
    # Cast the pixels to the visual tokenizer's dtype (bfloat16) and device to avoid a dtype mismatch
    pixel_values = pixel_values.to(dtype=visual_tokenizer.dtype, device=visual_tokenizer.device)

    # Generate the output
    with torch.inference_mode():
        output_ids = model.generate(
            input_ids.unsqueeze(0).cuda(),
            pixel_values=[pixel_values],
            attention_mask=attention_mask.unsqueeze(0).cuda(),
            max_new_tokens=1024,
        )
    output = text_tokenizer.decode(output_ids[0], skip_special_tokens=True)
    print("Output:", output)

Execute the script:

python run_ovis.py


- Viewing results

- Example output: "The picture shows a dog standing in a grassy field with a blue sky in the background."
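To caption a whole folder (for example, to reverse-engineer prompts for a batch of images), the script's logic can be wrapped in a function and called in a loop. The sketch below assumes a hypothetical describe_fn(image_path) that you build yourself from the preprocessing and model.generate() steps in run_ovis.py; it is not part of the Ovis package, and the set of image extensions is an assumption.

```python
import json
from pathlib import Path

# Assumed set of image file types to pick up; extend as needed
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def describe_folder(folder, describe_fn, out_file=None):
    """Call describe_fn(image_path) for every image in folder, in sorted file-name order.

    describe_fn is hypothetical: wrap the preprocessing and model.generate()
    steps from run_ovis.py in a function that returns the decoded text.
    Returns a dict mapping file name -> generated text, and optionally saves it as JSON.
    """
    results = {}
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            results[path.name] = describe_fn(path)
    if out_file is not None:
        # ensure_ascii=False keeps Chinese or other non-ASCII output readable in the file
        Path(out_file).write_text(
            json.dumps(results, ensure_ascii=False, indent=2), encoding="utf-8"
        )
    return results
```

For example, describe_folder("images", my_describe, "captions.json") would write one caption per image, keyed by file name. Keeping the model call behind describe_fn also makes the loop easy to test without a GPU.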

Using the Gradio Interface

- Starting the service

- From the Ovis directory, run:

python ovis/serve/server.py --model_path AIDC-AI/Ovis2-8B --port 8000

- Wait for the model to load, then open http://127.0.0.1:8000 in a browser.


- Using the interface

- Upload a picture.

- Enter a prompt such as "What's in this picture?".

- Click Submit to view the generated result.
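Loading a large model can take a while, so it helps to wait until the server actually answers before opening the browser or scripting against it. A minimal sketch using only the Python standard library; it assumes the page answers a plain GET on its root URL with HTTP 200 once it is ready, which is an assumption about the Gradio page rather than documented Ovis behavior.

```python
import http.client
import time
import urllib.request


def wait_for_server(url="http://127.0.0.1:8000", timeout=300.0, interval=2.0):
    """Poll url until it answers with HTTP 200 or timeout seconds have passed.

    Returns True once the page is reachable, False if it never came up.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # URLError, connection refused and socket timeouts are all OSError subclasses:
            # the server is not listening yet or is still loading the model
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```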

Featured Capabilities

High-Resolution Image Processing

- How to use: Upload a high-resolution image; the model automatically splits it into sub-images (up to 9 partitions).

- Use cases: Tasks such as artwork analysis and map interpretation.

- Hardware recommendation: A GPU with 16 GB of memory ensures smooth operation.
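The guide does not spell out how the partitioning works. As an illustration only, here is one plausible way to split an image into at most 9 tiles: choose the rows × cols grid whose shape best matches the image's aspect ratio, then compute the crop boxes. This is a hypothetical sketch, not the algorithm in the Ovis code base; the boxes use the (left, upper, right, lower) order accepted by PIL's Image.crop.

```python
def choose_grid(width, height, max_partition=9):
    """Pick (rows, cols) with rows * cols <= max_partition whose shape best matches the image.

    Ties on aspect-ratio error are broken in favor of more tiles (more detail).
    """
    target = width / height
    best = None
    for rows in range(1, max_partition + 1):
        for cols in range(1, max_partition // rows + 1):
            error = abs(cols / rows - target)
            key = (error, -rows * cols)  # smaller error first, then more tiles
            if best is None or key < best[0]:
                best = (key, (rows, cols))
    return best[1]


def tile_boxes(width, height, rows, cols):
    """Return (left, upper, right, lower) crop boxes covering the image in row-major order."""
    boxes = []
    for r in range(rows):
        for c in range(cols):
            left = c * width // cols
            upper = r * height // rows
            right = (c + 1) * width // cols
            lower = (r + 1) * height // rows
            boxes.append((left, upper, right, lower))
    return boxes
```

Integer division keeps neighbouring tiles edge to edge, so the tiles cover every pixel exactly once even when the size is not divisible by the grid.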

Video and Multi-Image Analysis

- How to use:

- Prepare the video frames or images as a list, e.g. [Image.open("frame1.jpg"), Image.open("frame2.jpg")].

- Modify the inference code to pass this list to model.preprocess_inputs, with one `<image>` placeholder per image in the query; the resulting pixel_values then covers all of the images.

- Use cases: Analyzing video clips or sequences of consecutive images.

- Sample output: "The first frame shows the street; the second shows a pedestrian."
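The two helpers below sketch the preparation work: building a query with one `<image>` placeholder per image, and choosing which frames to keep from a video. The "Image N: <image>" labeling is assumed to follow the pattern in the Ovis2 model card examples; verify it against the card for your model version. Evenly spaced frame sampling is a common choice, not something Ovis prescribes, and decoding the frames themselves needs a separate video library.

```python
def build_multi_image_query(num_images, text, labeled=True):
    """Build a prompt with one <image> placeholder per image, followed by the question."""
    if labeled:
        lines = [f"Image {i + 1}: <image>" for i in range(num_images)]
    else:
        lines = ["<image>"] * num_images
    return "\n".join(lines) + "\n" + text


def sample_frame_indices(total_frames, num_frames):
    """Pick num_frames indices spread evenly over a clip (the centre of each equal segment)."""
    if total_frames <= 0 or num_frames <= 0:
        return []
    num_frames = min(num_frames, total_frames)
    segment = total_frames / num_frames
    return [int(segment * i + segment / 2) for i in range(num_frames)]
```

The resulting query and image list would then go into model.preprocess_inputs(query, images) in place of the single-image inputs in run_ovis.py.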

Multilingual OCR Support

- How to use: Upload an image containing text in multiple languages and enter the prompt "Extract text from image".

- Use cases: Scanning documents and translating text in images.

- Example result: The mixed Chinese and English text is extracted and a description is generated.

Enhanced Reasoning

- How to use: Ask a complex question such as "How many people are in the picture? Please explain step by step".

- Use cases: Education and data analysis tasks.

- Sample output: "There are two people in the picture: first, one person is visible on the left; second, another person is visible on the right."
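The prompts used in this guide can be kept in one place and turned into the single-image query format from run_ovis.py (an image placeholder, a newline, then the text). A small sketch; the task names and the TASK_PROMPTS table are illustrative choices, not part of Ovis.

```python
# Prompts taken from the examples in this guide; adjust the wording as needed
TASK_PROMPTS = {
    "describe": "Describe this picture",
    "ocr": "Extract text from image",
    "reasoning": "{question} Please explain step by step",
}


def build_query(task, **fields):
    """Return a single-image query: an <image> placeholder, a newline, then the filled-in prompt."""
    try:
        template = TASK_PROMPTS[task]
    except KeyError:
        raise ValueError(f"unknown task {task!r}; choose from {sorted(TASK_PROMPTS)}") from None
    return "<image>\n" + template.format(**fields)
```

For example, build_query("reasoning", question="How many people are in the picture?") yields the step-by-step prompt above, ready to pass to model.preprocess_inputs.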

Notes

- Hardware requirements: Ovis2-1B requires about 4 GB of GPU memory; for Ovis2-34B, multiple GPUs (48 GB+) are recommended.

- Model compatibility: Ovis supports mainstream LLMs (e.g. Qwen2.5) and ViTs (e.g. aimv2).

- Community feedback: Issues can be submitted via GitHub Issues.
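The memory figures follow largely from simple arithmetic: weight memory ≈ parameters × bits per parameter ÷ 8 bytes. The sketch below estimates the weights alone, using the nominal sizes from the model names rather than exact parameter counts. Activations, the KV cache, quantization overhead, and framework overhead all add to this, so treat the numbers as lower bounds.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for model weights alone, in GiB.

    Ignores activations, the KV cache and framework overhead, so real usage is higher.
    """
    return num_params * bits_per_param / 8 / 1024**3


if __name__ == "__main__":
    # Nominal sizes taken from the model names, not exact parameter counts
    for name, params in [("1B", 1e9), ("8B", 8e9), ("34B", 34e9)]:
        bf16 = weight_memory_gib(params, 16)
        int4 = weight_memory_gib(params, 4)
        print(f"{name}: bf16 ~{bf16:.1f} GiB, 4-bit ~{int4:.1f} GiB")
```

This is also why the GPTQ-Int4 versions lower the hardware threshold: 4-bit weights take roughly a quarter of the memory of 16-bit ones.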

Ovis2 Image-to-Prompt One-Click Installer

Based on the Ovis2-4B and Ovis2-2B models.

Quark: https://pan.quark.cn/s/23095bb34e7c

Baidu: https://pan.baidu.com/s/12fWAbshwKY8OYcCcv_5Pkg?pwd=2727

Unzip password: jian27

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
