Orion: Xiaomi's Open Source End-to-End Autonomous Driving Reasoning and Planning Framework

General Introduction

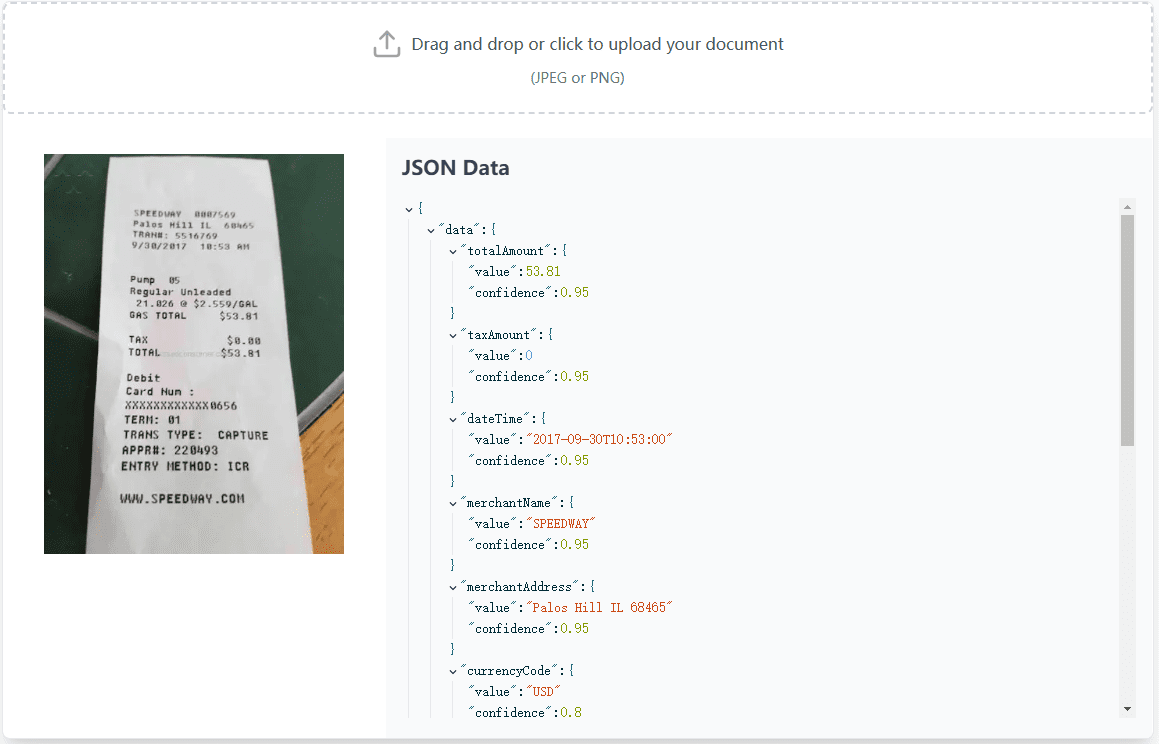

Orion is an open source project developed by Xiaomi Labs, focusing on end-to-end (E2E) autonomous driving technology. It addresses the weak causal reasoning of traditional autonomous driving methods in complex scenarios by combining a vision-language model (VLM) with a generative planner. Orion integrates long-term historical context, driving scenario reasoning, and precise trajectory prediction to improve decision-making accuracy. The project provides inference code, pre-trained models, and configuration files so that developers can run both open-loop and closed-loop evaluations on the Bench2Drive dataset. In closed-loop tests Orion reaches a Driving Score (DS) of 77.74 and a Success Rate (SR) of 54.62%, which the project reports as well ahead of comparable methods. It is suitable for developers, researchers, and enterprises in the autonomous driving field who work on algorithm development and scenario testing.

Feature List

- Long-term historical context: The QT-Former module extracts and integrates long-term data while the vehicle is in motion, providing more comprehensive scenario information.

- Driving scenario reasoning: A Large Language Model (LLM) analyzes the road environment and generates logical driving instructions.

- Accurate trajectory prediction: A generative planner produces multimodal trajectories (e.g., acceleration, steering) conditioned on language instructions to improve path planning accuracy.

- Unified optimization framework: Supports end-to-end optimization of visual question answering (VQA) and planning tasks, bridging the gap between semantic reasoning and action output.

- Open-loop and closed-loop evaluation: Provides open-loop evaluation scripts and closed-loop evaluation configurations for testing on the Bench2Drive dataset.

- Visual Analytics Tools: Generate comparative videos of driving scenarios, labeled with decision points and predicted trajectories for easy analysis of model performance.

- Open Source Resource Support: Inference code, pre-trained models and configuration files are provided to facilitate secondary development and research.

Usage Guide

Orion is an open source framework hosted on GitHub for autonomous driving developers and researchers. The guide below covers deployment, running, and development.

Installation process

Orion requires a Python environment. A Linux system (e.g. Ubuntu 20.04) is recommended for compatibility. The installation steps are as follows:

- Preparing the environment

Ensure that the following tools are installed on your system:

  - Python 3.8 (the officially recommended version). Check the installed version:

        python3 --version

  - Git. Installation commands:

        sudo apt update
        sudo apt install git

  - Conda (for creating virtual environments). If Conda is not available, you can download it from the Anaconda website.

- Creating a virtual environment

Use Conda to create and activate a virtual environment:

    conda create -n orion python=3.8 -y
    conda activate orion

- Cloning the repository

Download the Orion source code using Git:

    git clone https://github.com/xiaomi-mlab/Orion.git
    cd Orion

- Installing PyTorch

The official recommendation is to install PyTorch 2.4.1 (built for CUDA 11.8). Run the following command:

    pip install torch==2.4.1+cu118 torchvision==0.19.1+cu118 torchaudio==2.4.1 --index-url https://download.pytorch.org/whl/cu118

- Installing additional dependencies

Install the other Python libraries needed for the project:

    pip install -v -e .
    pip install -r requirements.txt

If some of the libraries in requirements.txt cause problems (e.g. transformers, numpy), install them manually.

- Downloading pre-trained models

Orion uses external pre-trained models, including:

  - 2D language model weights (source: Hugging Face).

  - Visual encoder and projector weights (source: OmniDrive).

Download them to the ckpts directory, then return to the project root:

    mkdir ckpts
    cd ckpts
    wget https://huggingface.co/exiawsh/pretrain_qformer/resolve/main/pretrain_qformer.pth
    wget https://github.com/NVlabs/OmniDrive/releases/download/v1.0/eva02_petr_proj.pth
    cd ..

- Preparing the dataset

Orion uses the Bench2Drive dataset for evaluation. Users need to download and prepare the data themselves; refer to the Bench2Drive data preparation documentation. Extract the dataset into the project directory, for example:

    unzip bench2drive.zip -d ./data

- Verifying the installation

Run the inference script to check the environment configuration:

    ./adzoo/orion/orion_dist_eval.sh adzoo/orion/configs/orion_stage3.py ckpts/Orion.pth 1

If no errors are reported, the installation was successful.
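Before launching the verification run, it can save time to confirm that the weight files the commands above refer to are actually in place. The following is a minimal sketch, not part of the Orion repository: the helper name `missing_checkpoints` is hypothetical, and the file list is simply taken from the download and verification commands in this guide.

```python
from pathlib import Path

# File names taken from the download and verification commands in this guide.
EXPECTED_CHECKPOINTS = (
    "ckpts/pretrain_qformer.pth",
    "ckpts/eva02_petr_proj.pth",
    "ckpts/Orion.pth",
)


def missing_checkpoints(repo_root, expected=EXPECTED_CHECKPOINTS):
    """Return the expected checkpoint paths that are absent or empty under repo_root."""
    root = Path(repo_root)
    missing = []
    for rel in expected:
        path = root / rel
        # An empty file usually means an interrupted download.
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(rel)
    return missing


if __name__ == "__main__":
    gaps = missing_checkpoints(".")
    if gaps:
        print("Missing or empty checkpoint files:", ", ".join(gaps))
    else:
        print("All expected checkpoint files are present.")
```

Run it from the project root. It only checks that the files exist and are non-empty; it does not validate their contents.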

Main Functions

Orion's core function is end-to-end autonomous driving reasoning and planning. Here's how to use it:

1. Running open-loop evaluation

Open-loop evaluation tests the performance of a model on a fixed dataset. Steps:

- Make sure the dataset and model weights are ready.

- Run the evaluation script:

    ./adzoo/orion/orion_dist_eval.sh adzoo/orion/configs/orion_stage3.py ckpts/Orion.pth 1

- To enable CoT (Chain-of-Thought) reasoning, run:

    ./adzoo/orion/orion_dist_eval.sh adzoo/orion/configs/orion_stage3_cot.py ckpts/Orion.pth 1

- The output is saved in the output directory, including trajectory coordinates and evaluation metrics (e.g., L2 error).
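To make the L2 error metric concrete: it is the Euclidean distance between each predicted waypoint and the corresponding ground-truth waypoint, usually averaged over the trajectory. The sketch below is illustrative only. The function names are hypothetical, and the waypoint horizon and averaging scheme used by Orion's own evaluation script are not described here, so treat this as the general idea rather than the project's implementation.

```python
import math


def l2_errors(predicted, ground_truth):
    """Per-waypoint Euclidean distance between two equal-length (x, y) trajectories."""
    if len(predicted) != len(ground_truth):
        raise ValueError("trajectories must have the same number of waypoints")
    return [
        math.hypot(px - gx, py - gy)
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]


def average_l2(predicted, ground_truth):
    """Mean of the per-waypoint L2 errors."""
    errors = l2_errors(predicted, ground_truth)
    if not errors:
        raise ValueError("trajectories must not be empty")
    return sum(errors) / len(errors)


# Example: the prediction drifts sideways by 0, 1, and 2 units.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
ground_truth = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
print(average_l2(predicted, ground_truth))  # 1.0
```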

2. Running closed-loop evaluation

Closed-loop evaluation simulates a real driving environment and requires the CARLA simulator. Steps:

- Install CARLA: follow the Bench2Drive evaluation tool documentation to install CARLA and the evaluation tools.

- Configure the evaluation script: edit the evaluation script (e.g. eval.sh) and set the following parameters:

    GPU_RANK=0
    TEAM_AGENT=orion
    TEAM_CONFIG=adzoo/orion/configs/orion_stage3_agent.py+ckpts/Orion.pth

- Run the closed-loop evaluation: execute the evaluation commands (refer to the Bench2Drive documentation for the specific commands).

- Outputs include Driving Score (DS), Success Rate (SR), and visualization videos.
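As a rough illustration of how the two closed-loop metrics are typically aggregated, the sketch below follows the common CARLA-style convention: each route's score is its completion percentage multiplied by an infraction penalty, and the Driving Score is the mean over routes, while the Success Rate is the share of routes marked successful. The `RouteResult` fields and function names are assumptions made for this example. The Bench2Drive tools produce these numbers themselves, and their exact definitions should be taken from the Bench2Drive documentation.

```python
from dataclasses import dataclass


@dataclass
class RouteResult:
    """Hypothetical per-route record; field names are illustrative."""
    route_completion: float    # percent of the route completed, 0 to 100
    infraction_penalty: float  # multiplicative factor, 1.0 means no infractions
    success: bool              # whether the route counts as successfully completed


def driving_score(results):
    """Mean over routes of completion percentage times infraction penalty."""
    if not results:
        raise ValueError("no route results given")
    return sum(r.route_completion * r.infraction_penalty for r in results) / len(results)


def success_rate(results):
    """Percentage of routes marked successful."""
    if not results:
        raise ValueError("no route results given")
    return 100.0 * sum(1 for r in results if r.success) / len(results)


routes = [
    RouteResult(route_completion=100.0, infraction_penalty=1.0, success=True),
    RouteResult(route_completion=50.0, infraction_penalty=0.5, success=False),
]
print(driving_score(routes))  # 62.5
print(success_rate(routes))   # 50.0
```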

3. Visualization and analysis

Orion can generate comparison videos of driving scenarios that show the model's decisions and trajectory predictions. Steps:

- Run the visualization script:

    python visualize.py --data_path ./data/test --model_path ckpts/Orion.pth

- The output video is saved in the output/videos directory, annotated with traffic lights, obstacles, and predicted trajectories (green lines). Users can compare the performance of Orion with other methods (e.g. UniAD, VAD).

Featured Functions

Orion combines a vision-language model with generative planning to provide scene reasoning and trajectory generation. The details are as follows:

1. Scenario reasoning and language instructions

Orion's language model accepts text instructions that adjust driving behavior, for example "Slow down at intersections". Steps:

- Create a command file commands.txt and write the command into it:

    Slow down at intersections

- Run the inference script and specify the command file:

    python inference.py --model_path ckpts/Orion.pth --command_file commands.txt

- The output reflects how the command adjusts the trajectory and is saved in the output/trajectories directory.

2. Multimodal trajectory generation

Orion's generative planner supports multiple trajectory modes (e.g. acceleration, deceleration, steering). Users can adjust the generation parameters through a configuration file:

- Edit configs/planner.yaml:

    trajectory_mode: multimodal
    max_acceleration: 2.0
    max_steering_angle: 30

- Run the planning script:

    python planner.py --config configs/planner.yaml --model_path ckpts/Orion.pth

- The output trajectories are saved in the output/trajectories directory, with multiple candidate paths supported.
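One way to read the max_acceleration and max_steering_angle settings is as symmetric limits applied to every step of a candidate trajectory. The sketch below illustrates that interpretation only: how Orion's planner actually consumes these values is not described here, the units are not stated, and the names `clamp` and `clamp_candidate` are hypothetical. The configuration is written as a plain dictionary mirroring the keys shown above.

```python
# Mirrors the keys shown in configs/planner.yaml above.
PLANNER_CONFIG = {
    "trajectory_mode": "multimodal",
    "max_acceleration": 2.0,
    "max_steering_angle": 30,
}


def clamp(value, limit):
    """Restrict value to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, value))


def clamp_candidate(candidate, config=PLANNER_CONFIG):
    """Clamp each (acceleration, steering_angle) step of one candidate trajectory."""
    return [
        (
            clamp(accel, config["max_acceleration"]),
            clamp(steer, config["max_steering_angle"]),
        )
        for accel, steer in candidate
    ]


# Example: the first two steps exceed a limit and are pulled back to it.
candidate = [(3.0, 45), (-2.5, -10), (1.0, 0)]
print(clamp_candidate(candidate))  # [(2.0, 30), (-2.0, -10), (1.0, 0)]
```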

3. End-to-end optimization

Orion supports unified optimization of visual question answering (VQA) and planning tasks. Users can test it with the following steps:

- Run the inference script with VQA mode enabled:

    python inference.py --model_path ckpts/Orion.pth --vqa_mode true

- Enter a test question (e.g., "Is there a pedestrian ahead?"). The model returns the semantic answer and the corresponding trajectory.

Notes

- Hardware requirements: A GPU with at least 32 GB of memory, such as an NVIDIA A100, is recommended for inference. Training requires more GPU memory (80 GB recommended).

- Data format: The input data must conform to the Bench2Drive format (e.g. .jpg, .pcd); otherwise preprocessing is required.

- Model weights: The official Orion model has been released (on Hugging Face), but the training framework is not yet open.

- Update frequency: Check the GitHub repository regularly for the latest code and documentation.

With these steps, users can quickly deploy Orion to run inference tasks or perform algorithm development.

Application Scenarios

- Autonomous driving algorithm development

Developers can use Orion to test end-to-end autonomous driving algorithms and optimize decision-making in complex scenarios. The open source code and models support rapid iteration.

- Academic research

Researchers can build on Orion to explore the application of vision-language models in autonomous driving, analyze how reasoning and planning work together, and publish their results.

- Simulation testing

Automakers can integrate Orion into simulation environments such as CARLA to test vehicle performance in virtual scenarios and reduce road test costs.

- Education and training

Colleges and universities can use Orion as a teaching tool to help students understand the principles of autonomous driving and build practical skills with the visualization videos.

FAQ

- What datasets does Orion support?

The Bench2Drive dataset is currently supported, and other datasets (e.g. nuScenes) may be supported in the future. Users need to prepare the data in the official format.

- How can slow inference be addressed?

CoT reasoning is slow; switch to the standard inference mode (orion_stage3.py) if speed matters. Optimizing GPU performance or reducing model precision (e.g. fp16) can also improve speed.

- What tools are needed for closed-loop evaluation?

The CARLA simulator and the Bench2Drive evaluation tools need to be installed. Refer to the official documentation for detailed configuration.

- Does Orion support real-time driving?

The current version is based on offline inference. Real-time applications require further optimization of the code and hardware, so it is worth following the official updates.
