OpenVoice (MyShell): Instant Voice Cloning in Multiple Languages from Short Samples

General Introduction

OpenVoice is a versatile, on-the-fly voice cloning method that replicates the voice of a reference speaker and generates multilingual speech using only short audio clips of the speaker. In addition to replicating timbre, OpenVoice allows fine control over voice style, including emotion, accent, rhythm, pauses, and intonation.

Related text-to-speech project: MeloTTS (https://github.com/myshell-ai/MeloTTS)

MeloTTS can be trained on your own dataset to produce a custom voice, but it has no training interface. Unlike OpenVoice, it does not perform instant voice cloning; it focuses on text-to-speech with stable, pre-trained models.

Feature List

Accurate tone color cloning: OpenVoice can accurately replicate the reference speaker's tone color and generate speech in multiple languages and accents.

Flexible voice style control: OpenVoice allows fine-grained control over voice style, including emotion, accent, rhythm, pauses, and intonation.

Zero-shot cross-lingual voice cloning: the generated speech does not need to be in the same language as the reference speech, and that language does not need to appear in a large-scale multilingual training dataset.

Highlights:

1. Accurate tone color cloning. OpenVoice can accurately clone the reference tone color and generate speech in multiple languages and accents.

2. Flexible voice style control. OpenVoice provides fine-grained control over voice style (e.g., emotion and accent) as well as other style parameters, including rhythm, pauses, and intonation.

3. Zero-shot cross-lingual voice cloning. Neither the language of the generated speech nor the language of the reference speech needs to be present in the massive-speaker multilingual training dataset.

Usage Guide

Refer to the usage instructions below for detailed guidance.

Check the QA document for frequently asked questions; it is updated regularly.

Use in MyShell: use the instant voice cloning and text-to-speech (TTS) services directly.

Minimal example: try OpenVoice quickly when you do not need high quality.

Linux installation: for researchers and developers only.

Quick trial in Google Colab

Run the following commands in a Colab notebook cell:

%cd /content

!git clone -b dev https://github.com/camenduru/OpenVoice /content/OpenVoice

!apt -y install -qq aria2

!aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/camenduru/OpenVoice/resolve/main/checkpoints_1226.zip -d /content -o checkpoints_1226.zip

!unzip /content/checkpoints_1226.zip -d /content/OpenVoice

!pip install -q gradio==3.50.2 langid faster-whisper whisper-timestamped unidecode eng-to-ipa pypinyin cn2an

%cd /content/OpenVoice

!python openvoice_app.py --share
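If `unzip` is unavailable, the extraction step of the Colab commands can be reproduced with Python's standard `zipfile` module. This is a minimal sketch: `extract_checkpoints` is a hypothetical helper name, not part of OpenVoice, and it assumes the archive `checkpoints_1226.zip` unpacks into a single top-level `checkpoints` folder.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_checkpoints(zip_path: str, dest_dir: str) -> List[str]:
    """Unpack a checkpoint archive into dest_dir; return its top-level entries.

    Hypothetical helper that mirrors the `unzip ... -d ...` step above.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        names = archive.namelist()
        archive.extractall(dest)
    # Distinct top-level folders/files; expected to be ["checkpoints"]
    # if the archive has a single top-level checkpoints folder.
    return sorted({name.split("/", 1)[0] for name in names})


# Example (in Colab):
# extract_checkpoints("/content/checkpoints_1226.zip", "/content/OpenVoice")
```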

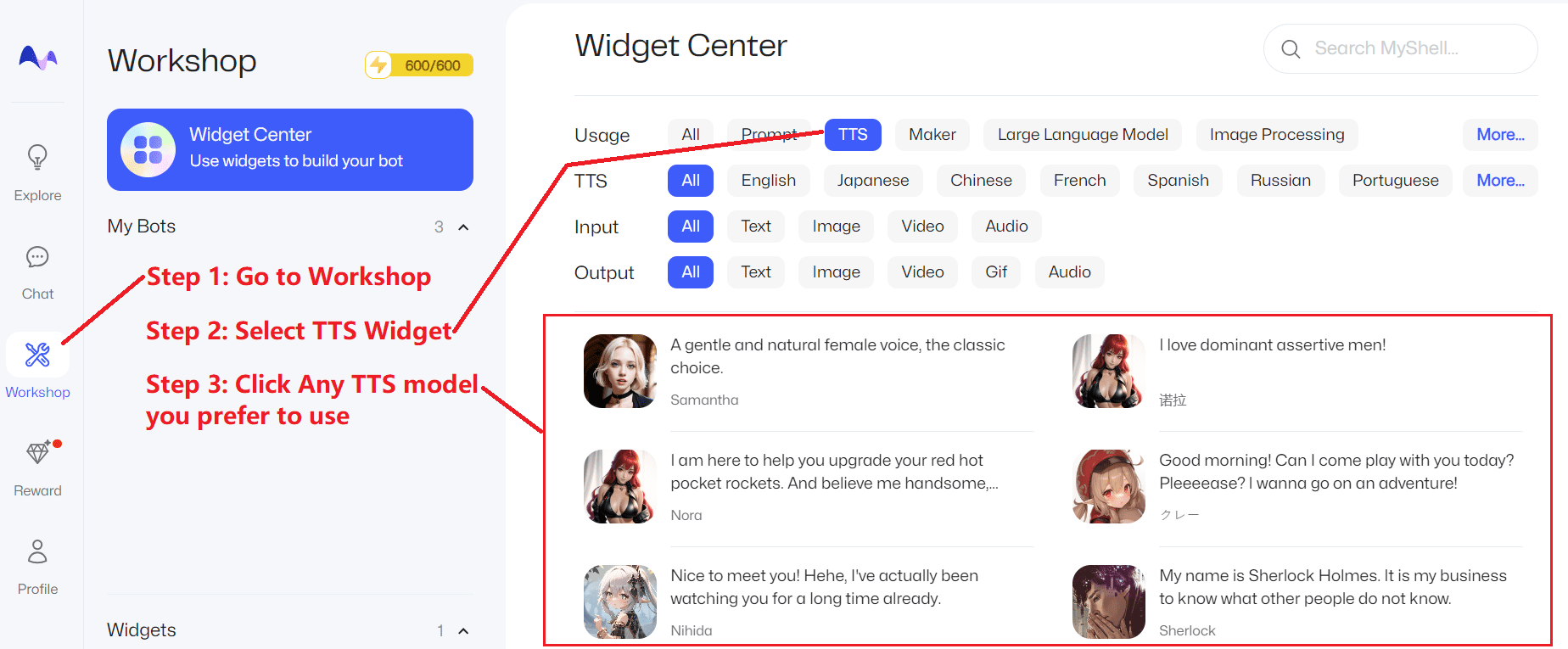

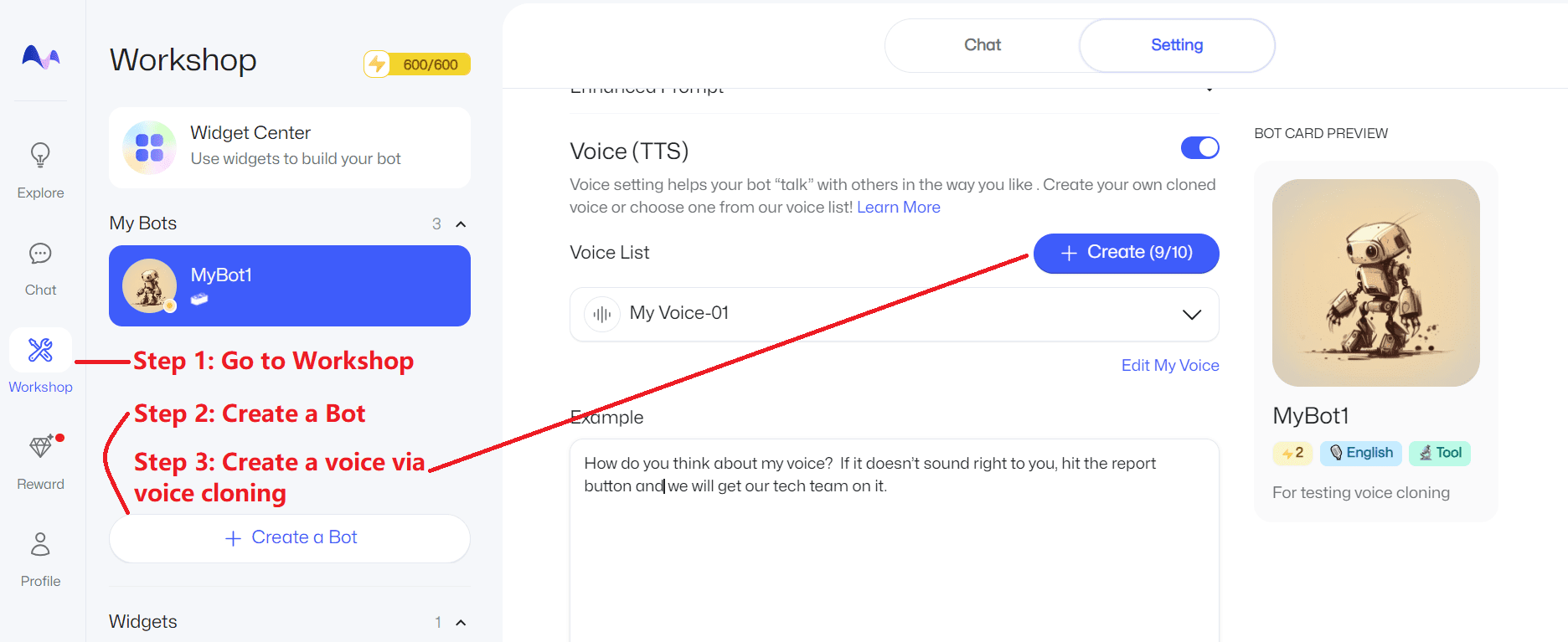

Use in MyShell

For most users, the most convenient way to try the free TTS and instant voice cloning services is directly in MyShell.

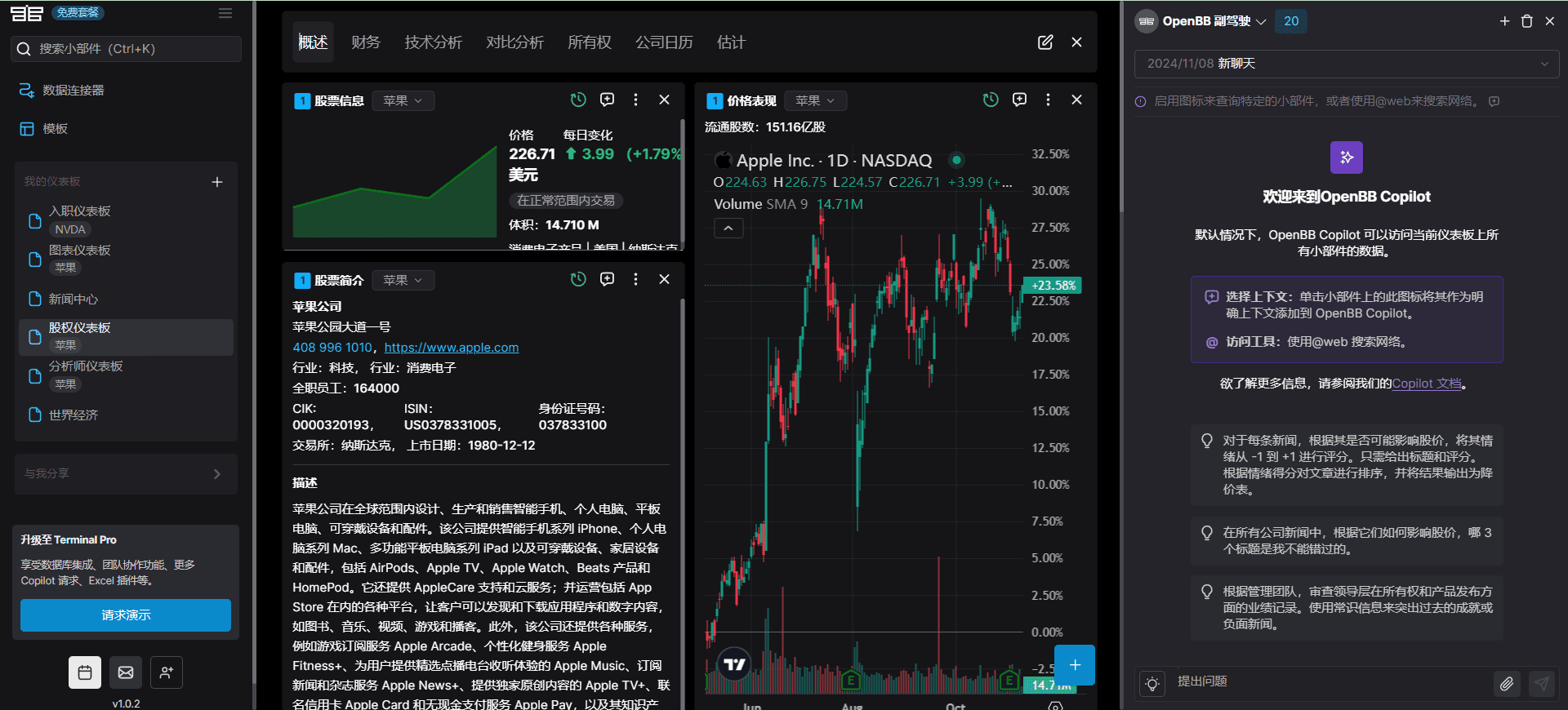

TTS service

Click here and follow the steps below:

Voice cloning

Click here and follow the steps below:

Minimal Example

If you want to try OpenVoice quickly and do not have high demands on quality and stability, open any of the links below:

Lepton AI: https://www.lepton.ai/playground/openvoice

MyShell: https://app.myshell.ai/bot/z6Bvua/1702636181

Hugging Face: https://huggingface.co/spaces/myshell-ai/OpenVoice

Linux Installation

This section is primarily for developers and researchers familiar with Linux, Python, and PyTorch. Create an environment, clone the repository, and install it as follows:

conda create -n openvoice python=3.9

conda activate openvoice

git clone git@github.com:myshell-ai/OpenVoice.git

cd OpenVoice

pip install -e .

Download the checkpoint from [here] and unzip it into the checkpoints folder.
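A quick way to confirm the unzip step worked is to check that the expected files exist. This is a sketch under an assumption: the file list below is taken from the paths used in the V1 demo notebooks (`checkpoints/base_speakers/EN` and `checkpoints/converter`) and may not match every checkpoint release; `missing_checkpoint_files` is a hypothetical helper.

```python
from pathlib import Path

# Assumed layout, taken from the paths used in the V1 demo notebooks;
# adjust this list if your checkpoint release is organized differently.
EXPECTED_FILES = (
    "base_speakers/EN/config.json",
    "base_speakers/EN/checkpoint.pth",
    "base_speakers/EN/en_default_se.pth",
    "converter/config.json",
    "converter/checkpoint.pth",
)


def missing_checkpoint_files(root="checkpoints", expected=EXPECTED_FILES):
    """Return the expected relative paths that are not files under root."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).is_file()]


# Example, run from the repository root after unzipping:
# missing = missing_checkpoint_files("checkpoints")
# print("checkpoints look complete" if not missing else f"missing: {missing}")
```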

1. Flexible voice style control: see [demo_part1.ipynb] to learn how OpenVoice controls the style of cloned speech.

2. Cross-lingual voice cloning: see [demo_part2.ipynb] for demonstrations in languages that are seen or unseen in the MSML training set.

3. Gradio demo: a minimal local Gradio demo is provided. Start it with python -m openvoice_app --share. If you run into problems with the Gradio demo, we strongly recommend checking demo_part1.ipynb, demo_part2.ipynb, and the [QnA] first.

4. Advanced usage: the base speaker model can be replaced with any model (any language, any style) you prefer. As shown in the demo, use the se_extractor.get_se method to extract the tone color embedding of a new base speaker.

5. Tips for generating natural speech: many single- and multi-speaker TTS methods that produce natural speech are readily available. By replacing the base speaker model with your preferred one, you can raise the naturalness of the output to the level you want.
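In the OpenVoice code, `se_extractor.get_se` splits the reference audio into short segments, runs each segment through the tone color converter's reference encoder, and averages the per-segment vectors into one tone color embedding; the converter then takes a source (base speaker) embedding and a target (reference speaker) embedding. The toy sketch below illustrates only the averaging step in plain Python. It is not OpenVoice's implementation (which works on PyTorch tensors), the encoder is omitted, and the input vectors are made-up numbers.

```python
from typing import List, Sequence


def average_embedding(segment_embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of per-segment embeddings (toy stand-in for tensors)."""
    if not segment_embeddings:
        raise ValueError("need at least one segment embedding")
    dim = len(segment_embeddings[0])
    if any(len(e) != dim for e in segment_embeddings):
        raise ValueError("all embeddings must have the same dimension")
    n = len(segment_embeddings)
    # Average each coordinate across all segments.
    return [sum(e[i] for e in segment_embeddings) / n for i in range(dim)]


# Three made-up 2-dimensional "segment embeddings".
print(average_embedding([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))  # [3.0, 4.0]
```

Averaging over several segments smooths out variation in any single clip, which is one reason a somewhat longer, clean reference recording tends to give a steadier embedding.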

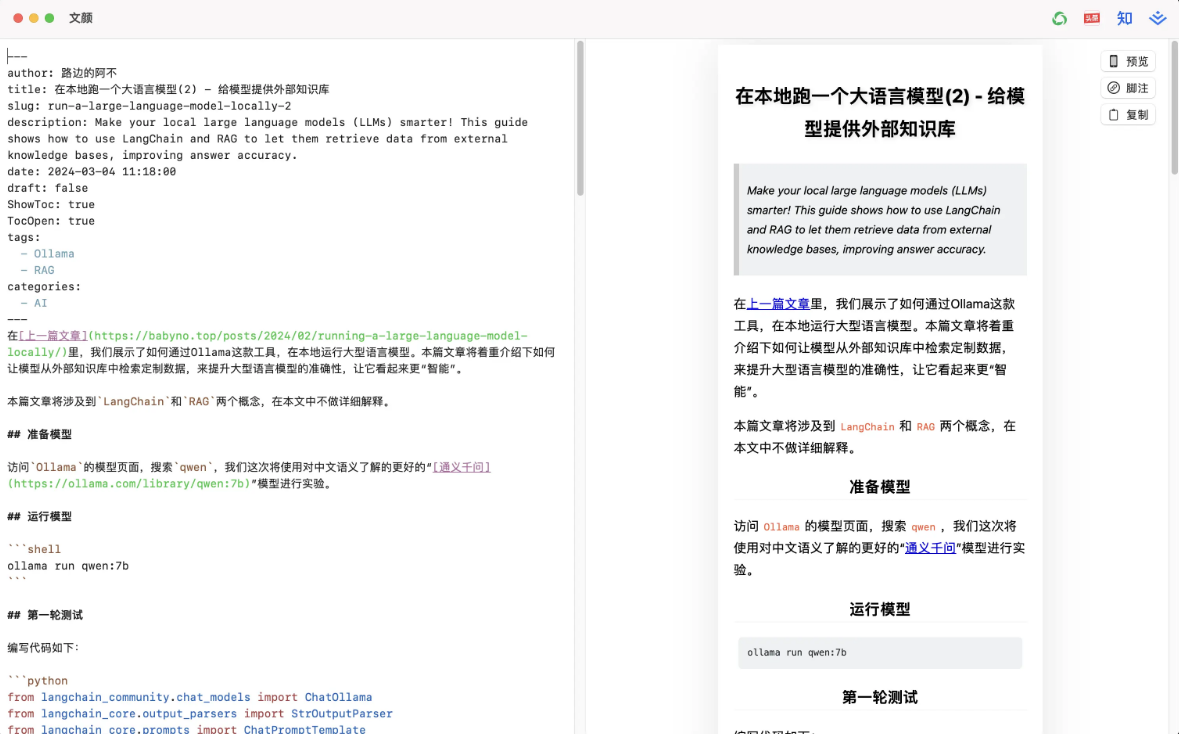

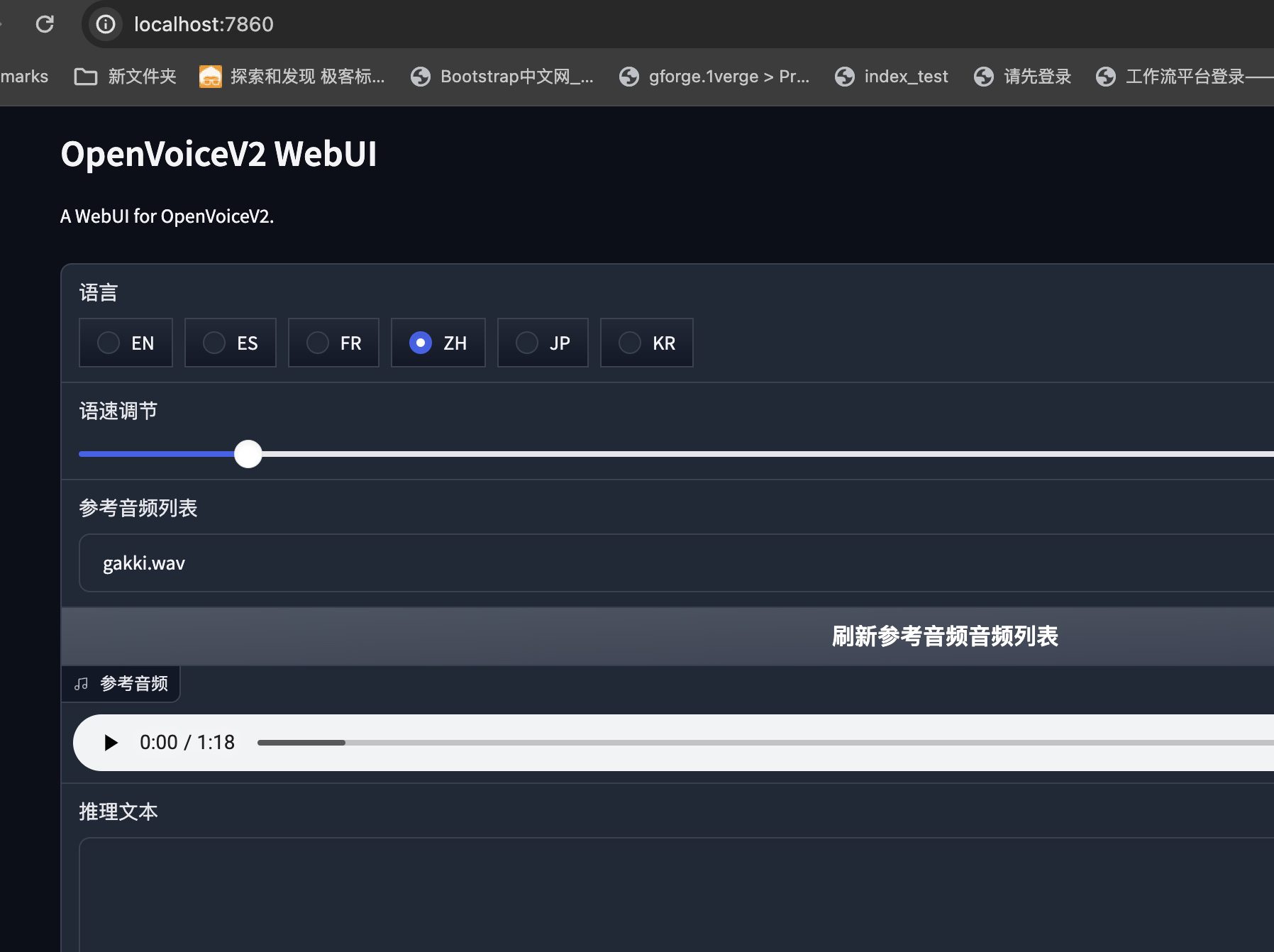

OpenVoice V2 local deployment tutorial: deploying on Apple macOS

The OpenVoice project recently released V2. The new model handles Chinese inference better and improves timbre to some extent. This section shows how to deploy OpenVoice V2 locally on macOS.

First download the OpenVoiceV2 zip file:

OpenVoiceV2-for-mac code and models: https://pan.quark.cn/s/33dc06b46699

This package has been optimized for macOS, and the Chinese voice has been adjusted for consistent loudness.

After unzipping, copy the hub folder from the HF_HOME folder in the project directory to the following location on your system:

/Users/<your-username>/.cache/huggingface

This is the default Hugging Face model cache location on macOS. If you skip this step, more than 10 GB of pre-trained models will have to be downloaded from scratch.
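The copy step can also be scripted. This is a minimal sketch: `copy_hf_hub` is a hypothetical helper, and it assumes the unzipped package contains `HF_HOME/hub` as described above. Alternatively, because the Hugging Face libraries read the `HF_HOME` environment variable and look for cached models under `$HF_HOME/hub`, pointing `HF_HOME` at the project's HF_HOME folder before launching should avoid the copy altogether; this has not been checked against this particular package.

```python
import shutil
from pathlib import Path
from typing import Optional


def copy_hf_hub(project_dir: str, cache_root: Optional[str] = None) -> Path:
    """Copy <project_dir>/HF_HOME/hub into <cache_root>/hub.

    cache_root defaults to ~/.cache/huggingface, the default cache location.
    """
    src = Path(project_dir) / "HF_HOME" / "hub"
    if not src.is_dir():
        raise FileNotFoundError(f"hub folder not found: {src}")
    root = Path(cache_root) if cache_root else Path.home() / ".cache" / "huggingface"
    dest = root / "hub"
    # dirs_exist_ok=True (Python 3.8+) merges into an existing hub folder
    # instead of raising if it is already there.
    shutil.copytree(src, dest, dirs_exist_ok=True)
    return dest


# Example, from the unzipped project directory:
# copy_hf_hub(".")
```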

Then return to the project root directory and run:

conda create -n openvoice python=3.10

This creates a virtual environment with Python 3.10. The version must be exactly 3.10.
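Since only Python 3.10 is supported here, a launch script can fail fast with a clear message instead of breaking later during imports. A minimal sketch; `check_python_version` is a hypothetical helper, and the `actual` parameter exists only to make it testable.

```python
import sys


def check_python_version(required=(3, 10), actual=None):
    """Raise RuntimeError unless the interpreter's (major, minor) equals required.

    `actual` defaults to sys.version_info.
    """
    found = tuple((actual if actual is not None else sys.version_info)[:2])
    if found != tuple(required):
        raise RuntimeError(
            "this OpenVoice V2 setup expects Python %d.%d, found %d.%d"
            % (required[0], required[1], found[0], found[1])
        )
    return found


# Example: call at the very top of a launch script.
# check_python_version()
```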

Next activate the virtual environment:

conda activate openvoice

The system returns:

(base) ➜ OpenVoiceV2 git:(main) ✗ conda activate openvoice

(openvoice) ➜ OpenVoiceV2 git:(main) ✗

The (openvoice) prefix indicates that the environment is active.

The underlying libraries require MeCab, so install it with Homebrew:

brew install mecab
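To confirm that the Homebrew install put the `mecab` executable on your PATH before continuing, a standard-library check is enough. A minimal sketch; `missing_commands` is a hypothetical helper built on `shutil.which`.

```python
import shutil
from typing import Iterable, List, Optional


def missing_commands(names: Iterable[str] = ("mecab",), path: Optional[str] = None) -> List[str]:
    """Return the command names that shutil.which cannot find.

    `path` overrides the PATH search string (None means use $PATH).
    """
    return [name for name in names if shutil.which(name, path=path) is None]


# Example, after `brew install mecab`:
# print(missing_commands())  # an empty list means mecab was found
```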

Install the project dependencies:

pip install -r requirements.txt

OpenVoice itself only handles tone color extraction and conversion; generating speech from text also requires a TTS engine, which in this setup is the MeloTTS module.

Change into the MeloTTS directory:

(openvoice) ➜ OpenVoiceV2 git:(main) ✗ cd MeloTTS

(openvoice) ➜ MeloTTS git:(main) ✗

Install MeloTTS and its dependencies:

pip install -e .

Once that succeeds, download the UniDic dictionary files separately (used by MeloTTS's Japanese text processing):

python -m unidic download
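Before starting the app, you can check that the packages installed above are importable in the active environment. This sketch assumes MeloTTS is imported as `melo` and the dictionary package as `unidic`; `missing_modules` is a hypothetical helper. It only confirms the packages are installed, not that the dictionary download finished.

```python
import importlib.util
from typing import Iterable, List

# Top-level module names assumed for this setup.
REQUIRED_MODULES = ("melo", "unidic")


def missing_modules(names: Iterable[str] = REQUIRED_MODULES) -> List[str]:
    """Return the module names that cannot be found, without importing them."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Example, inside the activated `openvoice` environment:
# print(missing_modules())  # an empty list means both packages were found
```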

Finally, return to the project root directory and start the app:

python app.py

The system returns:

(openvoice) ➜ OpenVoiceV2 git:(main) ✗ python app.py

Running on local URL: http://0.0.0.0:7860

IMPORTANT: You are using gradio version 3.48.0, however version 4.29.0 is available, please upgrade.

--------

To create a public link, set `share=True` in `launch()`.
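The log shows the server listening on 0.0.0.0:7860, so the web interface should be reachable in a local browser at http://localhost:7860; the Gradio notice about `share=True` refers to the `launch()` call inside app.py, where a public link would be enabled. To confirm from a script that the server is up, a plain TCP probe is enough. A minimal sketch; `is_listening` is a hypothetical helper, and it only checks that something accepts connections on the port, not that it is the OpenVoice app.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 7860, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused and timeouts (socket.timeout is an OSError).
        return False


# Example, from a second terminal while app.py is running:
# print(is_listening())
```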

This completes the deployment of OpenVoice on macOS.

Concluding remarks

One of OpenVoice's breakthrough features is zero-shot cross-lingual voice cloning: it can clone voices into languages that are not in the training dataset, without needing massive multi-speaker training data for those languages. In practice, however, zero-shot learning typically achieves lower accuracy on unseen categories, especially complex ones, than traditional supervised learning with abundant labeled data. Relying on auxiliary information can introduce noise and inaccuracies, so OpenVoice does not work well for some very distinctive timbres, and the underlying model has to be fine-tuned to handle such cases.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
