OpenManus: the open source version of Manus by MetaGPT

General Introduction

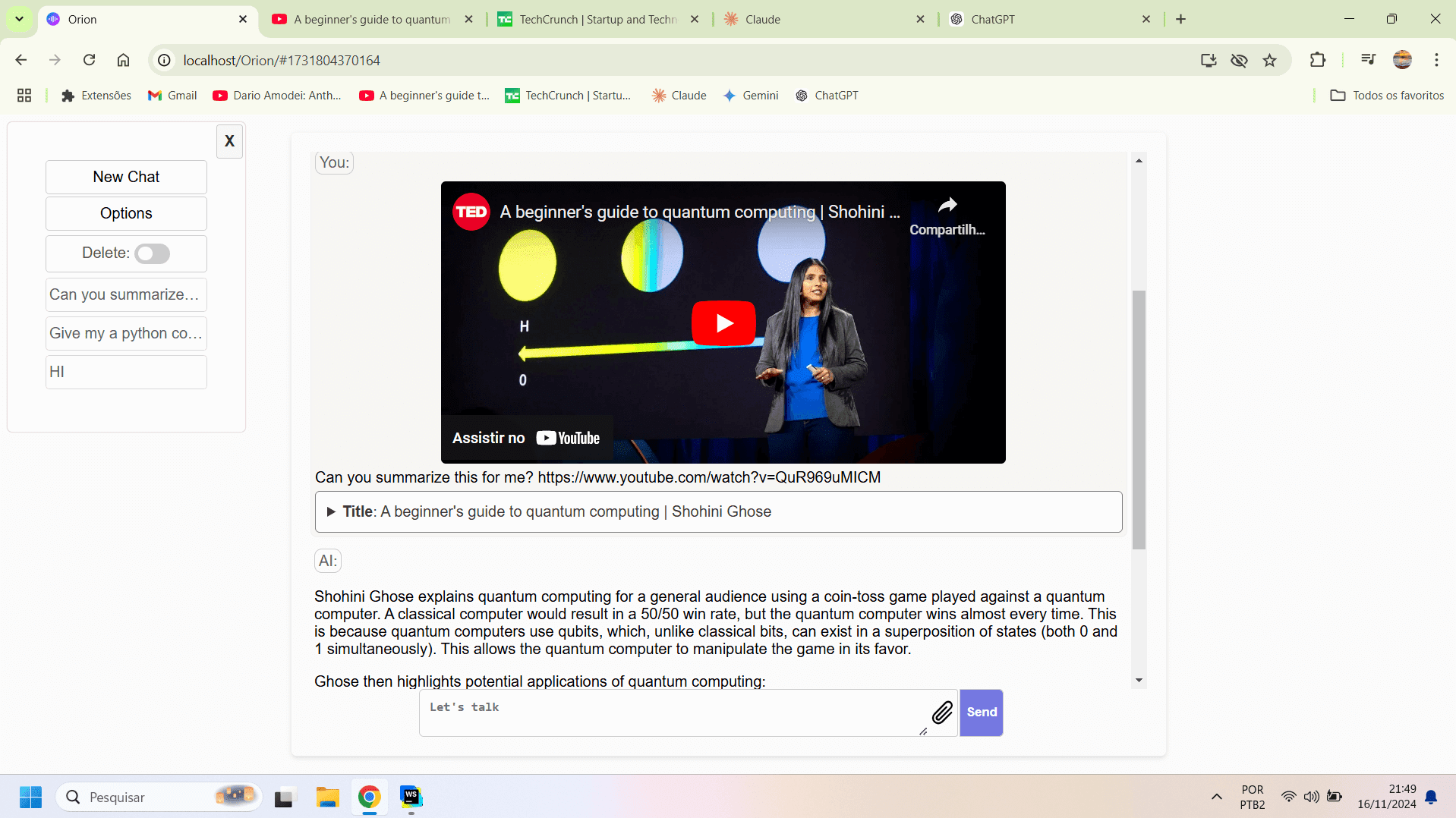

OpenManus is an open source project that lets users run agents locally with simple configuration to realize various creative ideas. It was developed in just 3 hours by MetaGPT community members @mannaandpoem, @XiangJinyu, @MoshiQAQ, and @didiforgithub; you can also follow their automated programming project, MGX. Compared to Manus, which requires an invitation code, OpenManus has no entry barrier: users only need to clone the code and configure an LLM API to get started. The project is written in Python, has a simple and clear structure, and drives agents from tasks entered in the terminal. It is currently a rudimentary implementation, and the team welcomes suggestions and code contributions from users. Future plans include optimizing task planning and adding real-time demonstration capabilities.

OpenManus web interface version (OpenManusWeb, unofficial): https://github.com/YunQiAI/OpenManusWeb

Function List

- Local agent operation: Enter tasks through the terminal and execute automated operations locally using the configured LLM API.

- Supports mainstream LLM models: GPT-4o is integrated by default, and the user can adjust the model configuration as needed.

- One-command start: Run `python main.py` to quickly enter task input mode.

- Experimental version: `python run_flow.py` is provided for testing new features in development.

- Community collaboration: Participate in project development by submitting issues or code via GitHub.

Usage Guide

Installation process

The installation of OpenManus is simple and suitable for users familiar with Python. Here are the detailed steps:

1. Creating the Conda environment

To avoid dependency conflicts, it is recommended to create a standalone environment using Conda:

conda create -n open_manus python=3.12

conda activate open_manus

- Explanation: The first command creates an environment named `open_manus` using Python 3.12; the second command activates the environment, and the terminal prompt changes to `(open_manus)`.

- Prerequisites: Conda needs to be installed; it can be downloaded from the Anaconda website.
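As a quick sanity check after activation, the following sketch reports whether the expected environment and Python version are in use. It relies on the `CONDA_DEFAULT_ENV` variable that conda sets when an environment is activated; the function name `check_environment` is hypothetical and not part of OpenManus.

```python
import os
import sys


def check_environment(expected_env="open_manus", min_version=(3, 12)):
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    # conda exports the name of the active environment in CONDA_DEFAULT_ENV.
    active_env = os.environ.get("CONDA_DEFAULT_ENV")
    if active_env != expected_env:
        problems.append(
            f"active conda environment is {active_env!r}, expected {expected_env!r}"
        )
    # Compare only (major, minor) against the required version.
    if sys.version_info[:2] < min_version:
        found = ".".join(map(str, sys.version_info[:2]))
        wanted = ".".join(map(str, min_version))
        problems.append(f"Python {found} is older than the required {wanted}")
    return problems


for problem in check_environment():
    print("Warning:", problem)
```

If nothing is printed, the interpreter running the script belongs to the activated `open_manus` environment.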

2. Cloning the code repository

Download the OpenManus project from GitHub:

git clone https://github.com/mannaandpoem/OpenManus.git

cd OpenManus

- Explanation: The first command clones the code locally and the second command enters the project directory.

- Prerequisites: Git needs to be installed. Run `git --version` to check whether it is available; if not, download it from git-scm.com.
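The same prerequisite check can be scripted. This is a minimal sketch using only the standard library; `tool_version` is a hypothetical helper name, and it simply runs `<tool> --version` when the tool is found on `PATH`.

```python
import shutil
import subprocess


def tool_version(command):
    """Return the first line printed by `<command> --version`, or None if not on PATH."""
    executable = shutil.which(command)
    if executable is None:
        return None
    result = subprocess.run(
        [executable, "--version"], capture_output=True, text=True, check=False
    )
    # Some tools print their version to stderr, so fall back to it.
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else ""
```

Calling `tool_version("git")` returns the version line when Git is installed and `None` otherwise; the same call works for `conda`.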

3. Installing dependencies

Install the Python packages needed for your project:

pip install -r requirements.txt

- Explanation: The `requirements.txt` file lists all the dependent packages, which are installed automatically by running this command.

- Network optimization: If the download is slow, you can use a mirror in China, for example `pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple`.
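To confirm that the installation completed, one option is to compare the names listed in `requirements.txt` with the distributions that are actually installed. The sketch below is a simplified illustration: the parser only handles plain `name`, `name==x`, and `name>=x` style lines (not URLs or extras), and the helper names are hypothetical.

```python
import re
from importlib import metadata
from pathlib import Path


def requirement_names(text):
    """Extract distribution names from the text of a requirements file."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as "-i <url>"
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names that have no installed distribution."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


def check_requirements(path="requirements.txt"):
    """Read a requirements file and list the packages that are not installed."""
    return missing_packages(requirement_names(Path(path).read_text()))
```

Running `check_requirements()` from the project directory returns an empty list when every listed package is installed.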

Configuration steps

OpenManus requires an LLM API to be configured to drive the agent functionality, as described below:

1. Creating configuration files

Create a configuration file in the config folder under the project root directory:

cp config/config.example.toml config/config.toml

- Explanation: This command copies the example file to the actual configuration file; `config.toml` is the file read at runtime.

2. Editing configuration files

Open config/config.toml and fill in your API key and parameters:

# Global LLM configuration

[llm]

model = "gpt-4o"

base_url = "https://api.openai.com/v1"

api_key = "sk-..." # Replace with your OpenAI API key

max_tokens = 4096

temperature = 0.0

# Optional vision model configuration

[llm.vision]

model = "gpt-4o"

base_url = "https://api.openai.com/v1"

api_key = "sk-..." # Replace with your OpenAI API key

- Parameter description:

  - `model`: Specifies the LLM model; the default is GPT-4o.

  - `base_url`: The access address of the API; the official OpenAI endpoint is used by default.

  - `api_key`: The key obtained from OpenAI, used for authentication.

  - `max_tokens`: The maximum number of tokens per generation, which controls the output length.

  - `temperature`: Controls the randomness of the generated content; 0.0 gives the most stable output.

- Getting the API key: Visit the OpenAI website, log in and generate a key on the "API Keys" page and copy it to your configuration file.

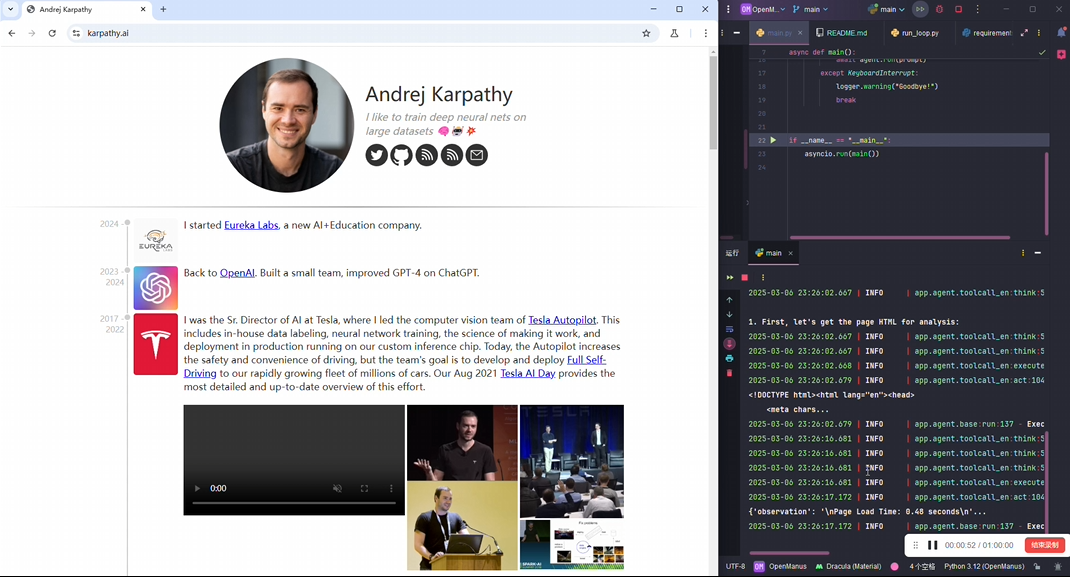

Operation and use

After completing the installation and configuration, OpenManus can be started in the following ways:

1. Basic operations

Run the main program:

python main.py

- Workflow:

  - After the terminal displays the prompt, enter your task (e.g., "Help me generate a weekly plan").

  - Press Enter to submit; OpenManus calls the LLM to process the task.

  - The results are displayed directly in the terminal.

- Usage scenarios: Ideal for quick testing or for simple tasks such as generating text or code snippets.
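The workflow above is essentially a read, process, print loop. The following sketch illustrates that shape only; it is not the OpenManus implementation. `ask_llm` is a hypothetical stand-in for whatever function sends the task to the configured LLM, and stopping on `exit` is an assumption made for the example.

```python
from typing import Callable, Iterable


def run_tasks(tasks: Iterable[str], ask_llm: Callable[[str], str]) -> list[str]:
    """Send each non-empty task to ask_llm, print the answer, and collect the results."""
    results = []
    for task in tasks:
        task = task.strip()
        if not task:
            continue  # ignore empty input
        if task.lower() == "exit":
            break  # assumed convention for leaving the loop
        answer = ask_llm(task)
        print(answer)
        results.append(answer)
    return results


# A fake LLM keeps the example self-contained and runnable offline.
run_tasks(["Help me generate a weekly plan"], lambda task: f"(LLM answer to: {task})")
```

In an interactive version, `tasks` could be replaced by a generator that repeatedly calls `input()`.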

2. Experimental runs

Run the unstable version to experience the new features:

python run_flow.py

- Characteristics: Includes features under development and may have bugs; suitable for users who want to try new features early.

- Note: Make sure the configuration file is correct, or the run may fail.

Featured Functions

Local agent operation

- Functional description: Enter a task via the terminal; OpenManus, running locally, calls the configured LLM to process it and returns the result.

- Example of operation:

  - Run `python main.py`.

  - Input: "Write a Python function that calculates the sum of 1 to 100".

  - Example output: `def sum_to_100(): return sum(range(1, 101))`

- Advantages: The agent runs on your own machine and is responsive; data leaves it only through requests to the configured LLM API.
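Generated code is worth verifying before use. As a small worked check of the example output, the sketch below compares it against the closed-form sum n(n+1)/2; the helper `sum_to` is added here for illustration only.

```python
def sum_to_100():
    # The function from the example output, written on separate lines.
    return sum(range(1, 101))


def sum_to(n):
    # Closed form for 1 + 2 + ... + n, used as an independent cross-check.
    return n * (n + 1) // 2


# Both approaches agree: 100 * 101 / 2 = 5050.
assert sum_to_100() == sum_to(100) == 5050
```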

Supports mainstream LLM models

- Functional description: Users can switch between different LLM models according to their needs.

- Procedure:

  - Edit `config.toml` and change `model` to another model (e.g. `"gpt-3.5-turbo"`).

  - Save the file and run `python main.py`.

  - Enter tasks to compare the output of different models.

- Suggestion: Use GPT-4o for complex tasks; GPT-3.5-turbo is more cost-effective for simple tasks.
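If you switch models often, the edit can be scripted. The sketch below rewrites only the `model` entry of the top-level `[llm]` table and leaves `[llm.vision]` and all comments untouched. It works on plain text because the standard library can read but not write TOML; `set_llm_model` is a hypothetical helper, and it assumes `model` sits on its own line as in the example configuration.

```python
import re


def set_llm_model(toml_text: str, new_model: str) -> str:
    """Return toml_text with the `model` value of the top-level [llm] table replaced."""
    lines = toml_text.splitlines()
    in_llm = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("["):
            # Track whether the current table header is exactly [llm].
            in_llm = stripped == "[llm]"
        elif in_llm and re.match(r"model\s*=", stripped):
            lines[i] = f'model = "{new_model}"'
            return "\n".join(lines) + "\n"
    raise ValueError("no model entry found under [llm]")
```

A typical use is to read `config/config.toml`, pass its text through `set_llm_model(text, "gpt-3.5-turbo")`, and write the result back.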

Frequently Asked Questions

- Problem: The run fails with the error "ModuleNotFoundError".

  - Solution: Verify that the dependencies are fully installed and re-run `pip install -r requirements.txt`.

- Problem: "Invalid API key".

  - Solution: Check that `api_key` in `config.toml` is correct, or regenerate the key.

- Problem: The run is slow or unresponsive.

  - Solution: Check that the network connection is stable, or reduce the `max_tokens` value to reduce the amount of computation.

- View demo: The project provides a demo video demonstrating actual operating results.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
